R. Raghavendra

Morton Filters for Superior Template Protection for Iris Recognition

Jan 15, 2020

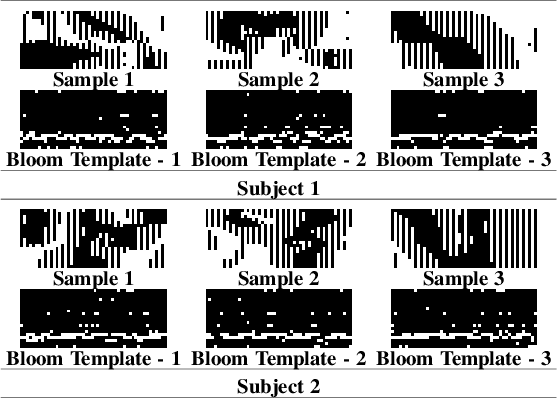

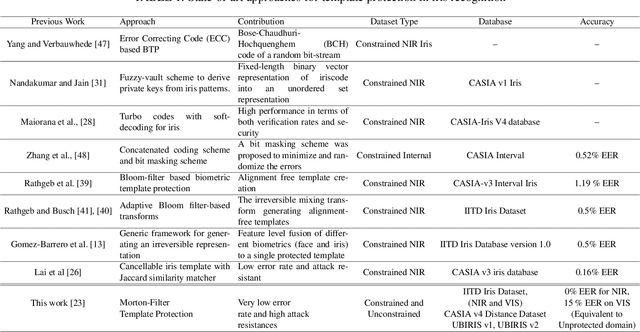

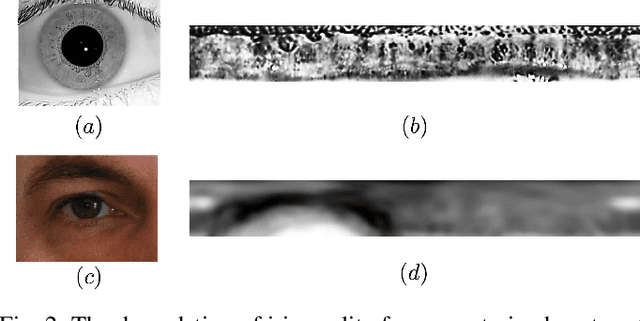

Abstract: We address fundamental performance issues of template protection (TP) for iris verification. We build on the popular Bloom-filter template protection scheme and address its key challenges, namely sub-optimal performance and low unlinkability. Specifically, we focus on cases where Bloom-filter templates yield non-ideal performance because of large degradations in the iris images. Iris recognition is challenged by a number of degrading factors, such as eyelashes in the captured image, occlusion by eyelids, and low image quality caused by motion blur. All of these factors produce unreliable iris codes and thereby non-ideal biometric performance, and they directly affect the protected templates derived from iris images when classical Bloom filters are employed. To this end, we extend our earlier ideas on Morton filters to obtain better and more reliable iris templates. Morton-filter-based TP for iris codes leverages the intra- and inter-class distributions: it exploits low-rank iris codes to derive the bits that are stable across iris images of a particular subject, and it analyzes the bits that are discriminative across subjects. Such low-rank, non-noisy iris codes enable superior template protection that can be used not only in constrained settings but also in relaxed iris imaging. We further analyze the applicability of the approach to visible-spectrum (VIS) iris images using a large-scale public iris image database, UBIRIS (v1 and v2), captured in an unconstrained setting. Through a set of experiments, we demonstrate the applicability of the proposed approach and assess its strengths and weaknesses. A further contribution of this work is a security assessment of the proposed approach, in which unlinkability is studied to indicate its antagonistic relationship with relaxed iris imaging scenarios.
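For readers unfamiliar with the baseline that this abstract builds on, the following is a minimal sketch of a classical Bloom-filter transform of a binary iris code. It is not the Morton-filter method, which the abstract does not specify in detail. The function names, the `block_width` parameter, and the dissimilarity measure are illustrative assumptions: the code is split into column blocks, each column is read as an integer index, and the corresponding bit of that block's filter is set.

```python
def bloom_template(iris_code, block_width):
    """Map a binary iris code to one Bloom filter per column block.

    iris_code: list of rows, each a list of 0/1 bits of equal length.
    block_width: number of columns per block (illustrative parameter).
    """
    rows = len(iris_code)
    cols = len(iris_code[0])
    template = []
    for start in range(0, cols - block_width + 1, block_width):
        bloom = [0] * (1 << rows)  # one slot per possible column pattern
        for c in range(start, start + block_width):
            # Read the column as an integer; row 0 is the least significant bit.
            index = sum(iris_code[r][c] << r for r in range(rows))
            bloom[index] = 1
        template.append(bloom)
    return template


def dissimilarity(a, b):
    """Mean per-block Hamming distance, normalized by the number of set bits."""
    scores = []
    for fa, fb in zip(a, b):
        differing = sum(x != y for x, y in zip(fa, fb))
        scores.append(differing / (sum(fa) + sum(fb)))
    return sum(scores) / len(scores)


code = [[1, 0, 1, 0],
        [0, 1, 1, 0]]
template = bloom_template(code, block_width=2)
```

Because only the set of column patterns in each block is stored, the order of columns within a block is lost. That many-to-one mapping is the source of the protection, and also why noisy or occluded columns can set spurious bits and degrade performance.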

Cross-Sensor Periocular Biometrics: A Comparative Benchmark including Smartphone Authentication

Feb 21, 2019

Abstract: The massive availability of cameras and personal devices results in wide variability between imaging conditions, producing large intra-class variations and a performance drop if such images are compared for person recognition. However, as biometric solutions are extensively deployed, it will be common to replace acquisition hardware as it is damaged or newer designs appear, or to exchange information between agencies or applications in heterogeneous environments. Furthermore, variations in imaging bands can also occur. For example, faces are typically acquired in the visible (VW) spectrum, while iris images are captured in the near-infrared (NIR) spectrum. However, cross-spectrum comparison may be needed if, for example, a face from a surveillance camera needs to be compared against a legacy iris database. Here, we propose a multialgorithmic approach to cope with cross-sensor periocular recognition. We integrate different systems using a fusion scheme based on linear logistic regression, in which fused scores tend to be log-likelihood ratios. This allows easy combination by just summing the scores of the available systems. We evaluate our approach in the context of the 1st Cross-Spectral Iris/Periocular Competition, whose aim was to compare person recognition approaches when periocular data from VW and NIR images is matched. The proposed fusion approach achieves reductions in error rates of up to 20-30% in cross-spectral NIR-VW comparison, leading to an EER of 0.22% and an FRR of just 0.62% at FAR=0.01%, representing the best overall approach of the mentioned competition. Experiments are also reported with a database of VW images from two different smartphones, achieving even higher relative improvements in performance. We also discuss our approach from the point of view of template size and computation times, with the most computationally heavy system playing an important role in the results.
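The fusion idea in this abstract can be illustrated with a minimal sketch, assuming each system's raw score is calibrated independently by a one-dimensional logistic regression. The function names, the training scores, and the plain gradient-descent fit are illustrative assumptions, not the authors' implementation. Once each score is mapped to an approximate log-likelihood ratio (LLR), fusing the available systems reduces to a sum.

```python
import math


def fit_calibration(scores, labels, steps=2000, lr=0.1):
    """Fit llr = a * score + b by logistic regression.

    labels: 1 for genuine comparisons, 0 for impostor comparisons.
    """
    a, b = 1.0, 0.0
    n = len(scores)
    n_genuine = sum(labels)
    # Training-set prior log-odds, kept out of (a, b) so the calibrated
    # output approximates an LLR rather than a posterior log-odds.
    prior_logit = math.log(n_genuine / (n - n_genuine))
    for _ in range(steps):
        grad_a = grad_b = 0.0
        for s, y in zip(scores, labels):
            p = 1.0 / (1.0 + math.exp(-(a * s + b + prior_logit)))
            grad_a += (p - y) * s
            grad_b += p - y
        a -= lr * grad_a / n
        b -= lr * grad_b / n
    return a, b


def fuse(raw_scores, calibrations):
    """Sum the calibrated LLRs of whichever systems produced a score."""
    return sum(a * s + b for s, (a, b) in zip(raw_scores, calibrations))


labels = [1, 1, 1, 1, 0, 0, 0, 0]
system_1 = [2.0, 1.5, 0.5, 1.8, -1.0, 0.2, -0.5, 0.7]  # made-up scores
system_2 = [0.9, 0.4, 0.8, 0.7, 0.1, 0.5, 0.3, 0.2]    # made-up scores
calibrations = [fit_calibration(system_1, labels),
                fit_calibration(system_2, labels)]

genuine_llr = fuse([1.8, 0.9], calibrations)
impostor_llr = fuse([-0.5, 0.1], calibrations)
```

Summing LLRs treats the systems as independent. A jointly trained linear logistic regression over all scores, as the abstract describes, would learn the per-system weights together instead.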

DeepIrisNet2: Learning Deep-IrisCodes from Scratch for Segmentation-Robust Visible Wavelength and Near Infrared Iris Recognition

Feb 06, 2019

Abstract: We first introduce a deep-learning-based framework named DeepIrisNet2 for visible-spectrum and NIR iris representation. The framework can work without the classical iris normalization step or very accurate iris segmentation, allowing it to operate under non-ideal conditions. The framework contains spatial transformer layers to handle deformation and supervision branches after certain intermediate layers to mitigate overfitting. In addition, we present a dual-CNN iris segmentation pipeline comprising an iris/pupil bounding-box detection network and a semantic pixel-wise segmentation network. Furthermore, to obtain compact templates, we present a strategy to generate binary iris codes using DeepIrisNet2. Since no ground-truth datasets are available for CNN training for iris segmentation, we build large-scale hand-labeled datasets and make them public: i) iris and pupil bounding boxes, and ii) labeled iris texture. The networks are evaluated on the challenging ND-IRIS-0405, UBIRIS.v2, MICHE-I, and CASIA v4 Interval datasets. The proposed approach significantly improves the state of the art and achieves outstanding performance, surpassing all previous methods.
