Qiyuan He

Interp3D: Correspondence-aware Interpolation for Generative Textured 3D Morphing

Jan 20, 2026

Abstract:Textured 3D morphing seeks to generate smooth and plausible transitions between two 3D assets, preserving both structural coherence and fine-grained appearance. This ability is crucial not only for advancing 3D generation research but also for practical applications in animation, editing, and digital content creation. Existing approaches either operate directly on geometry, limiting them to shape-only morphing while neglecting textures, or extend 2D interpolation strategies into 3D, which often causes semantic ambiguity, structural misalignment, and texture blurring. These challenges underscore the need to jointly preserve geometric consistency, texture alignment, and robustness throughout the transition process. To address this, we propose Interp3D, a novel training-free framework for textured 3D morphing. It harnesses generative priors and adopts a progressive alignment principle to ensure both geometric fidelity and texture coherence. Starting from semantically aligned interpolation in condition space, Interp3D enforces structural consistency via SLAT (Structured Latent)-guided structure interpolation, and finally transfers appearance details through fine-grained texture fusion. For comprehensive evaluation, we construct a dedicated dataset, Interp3DData, with graded difficulty levels and assess generation results in terms of fidelity, transition smoothness, and plausibility. Both quantitative metrics and human studies demonstrate the significant advantages of our proposed approach over previous methods. Source code is available at https://github.com/xiaolul2/Interp3D.

VA-$π$: Variational Policy Alignment for Pixel-Aware Autoregressive Generation

Dec 22, 2025

Abstract:Autoregressive (AR) visual generation relies on tokenizers to map images to and from discrete sequences. However, tokenizers are trained to reconstruct clean images from ground-truth tokens, while AR generators are optimized only for token likelihood. This misalignment leads to generated token sequences that may decode into low-quality images, without direct supervision from the pixel space. We propose VA-$π$, a lightweight post-training framework that directly optimizes AR models with a principled pixel-space objective. VA-$π$ formulates the generator-tokenizer alignment as a variational optimization, deriving an evidence lower bound (ELBO) that unifies pixel reconstruction and autoregressive modeling. To optimize under the discrete token space, VA-$π$ introduces a reinforcement-based alignment strategy that treats the AR generator as a policy and uses pixel-space reconstruction quality as its intrinsic reward. The reward is measured by how well the predicted token sequences can reconstruct the original image under teacher forcing, giving the model direct pixel-level guidance without expensive free-running sampling. The regularization term of the ELBO serves as a natural regularizer, maintaining distributional consistency of tokens. VA-$π$ enables rapid adaptation of existing AR generators, requiring neither tokenizer retraining nor external reward models. With only 1% of ImageNet-1K data and 25 minutes of tuning, it reduces FID from 14.36 to 7.65 and improves IS from 86.55 to 116.70 on LlamaGen-XXL, while also yielding notable gains in the text-to-image task on GenEval for both a visual generation model (LlamaGen: from 0.306 to 0.339) and a unified multi-modal model (Janus-Pro: from 0.725 to 0.744). Code is available at https://github.com/Lil-Shake/VA-Pi.
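
For orientation, the textbook form of an evidence lower bound is sketched below. The symbols are assumptions made for illustration and do not come from the abstract: $x$ is an image, $z$ a discrete token sequence, $q(z \mid x)$ the tokenizer's encoder, $p(x \mid z)$ its decoder, and $p_\theta(z)$ the AR generator. The first term plays the role of pixel reconstruction and the second the role of the regularizer; the exact objective derived in the paper may differ.

```latex
\log p(x) \;\ge\;
\mathbb{E}_{q(z \mid x)}\big[\log p(x \mid z)\big]
\;-\;
\mathrm{KL}\big(q(z \mid x)\,\|\,p_\theta(z)\big)
```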

InfoTok: Adaptive Discrete Video Tokenizer via Information-Theoretic Compression

Dec 18, 2025

Abstract:Accurate and efficient discrete video tokenization is essential for processing long video sequences. Yet, the inherent complexity and variable information density of videos present a significant bottleneck for current tokenizers, which rigidly compress all content at a fixed rate, leading to redundancy or information loss. Drawing inspiration from Shannon's information theory, this paper introduces InfoTok, a principled framework for adaptive video tokenization. We rigorously prove that existing data-agnostic training methods are suboptimal in representation length, and present a novel evidence lower bound (ELBO)-based algorithm that approaches theoretical optimality. Leveraging this framework, we develop a transformer-based adaptive compressor that enables adaptive tokenization. Empirical results demonstrate state-of-the-art compression performance, saving 20% of tokens without affecting performance, and achieving 2.3x compression rates while still outperforming prior heuristic adaptive approaches. By allocating tokens according to informational richness, InfoTok enables a more compressed yet accurate tokenization for video representation, offering valuable insights for future research.

reAR: Rethinking Visual Autoregressive Models via Generator-Tokenizer Consistency Regularization

Oct 06, 2025

Abstract:Visual autoregressive (AR) generation offers a promising path toward unifying vision and language models, yet its performance remains suboptimal against diffusion models. Prior work often attributes this gap to tokenizer limitations and rasterization ordering. In this work, we identify a core bottleneck from the perspective of generator-tokenizer inconsistency, i.e., the AR-generated tokens may not be well-decoded by the tokenizer. To address this, we propose reAR, a simple training strategy introducing a token-wise regularization objective: when predicting the next token, the causal transformer is also trained to recover the visual embedding of the current token and predict the embedding of the target token under a noisy context. It requires no changes to the tokenizer, generation order, inference pipeline, or external models. Despite its simplicity, reAR substantially improves performance. On ImageNet, it reduces gFID from 3.02 to 1.86 and improves IS to 316.9 using a standard rasterization-based tokenizer. When applied to advanced tokenizers, it achieves a gFID of 1.42 with only 177M parameters, matching the performance of larger state-of-the-art diffusion models (675M).

Conceptrol: Concept Control of Zero-shot Personalized Image Generation

Mar 09, 2025

Abstract:Personalized image generation with text-to-image diffusion models generates unseen images based on reference image content. Zero-shot adapter methods such as IP-Adapter and OminiControl are especially interesting because they do not require test-time fine-tuning. However, they struggle to balance preserving personalized content and adherence to the text prompt. We identify a critical design flaw resulting in this performance gap: current adapters inadequately integrate personalization images with the textual descriptions. The generated images, therefore, replicate the personalized content rather than adhere to the text prompt instructions. Yet the base text-to-image model has strong conceptual understanding capabilities that can be leveraged. We propose Conceptrol, a simple yet effective framework that enhances zero-shot adapters without adding computational overhead. Conceptrol constrains the attention of visual specification with a textual concept mask that improves subject-driven generation capabilities. It achieves as much as 89% improvement on personalization benchmarks over the vanilla IP-Adapter and can even outperform fine-tuning approaches such as Dreambooth LoRA. The source code is available at https://github.com/QY-H00/Conceptrol.

Large Language Model-Enhanced Symbolic Reasoning for Knowledge Base Completion

Jan 02, 2025

Abstract:Integrating large language models (LLMs) with rule-based reasoning offers a powerful solution for improving the flexibility and reliability of Knowledge Base Completion (KBC). Traditional rule-based KBC methods offer verifiable reasoning yet lack flexibility, while LLMs provide strong semantic understanding yet suffer from hallucinations. With the aim of combining LLMs' understanding capability with the logical rigor of rule-based approaches, we propose a novel framework consisting of a Subgraph Extractor, an LLM Proposer, and a Rule Reasoner. The Subgraph Extractor first samples subgraphs from the KB. Then, the LLM uses these subgraphs to propose diverse and meaningful rules that are helpful for inferring missing facts. To effectively avoid hallucination in LLMs' generations, these proposed rules are further refined by a Rule Reasoner to pinpoint the most significant rules in the KB for Knowledge Base Completion. Our approach offers several key benefits: the utilization of LLMs to enhance the richness and diversity of the proposed rules and the integration with rule-based reasoning to improve reliability. Our method also demonstrates strong performance across diverse KB datasets, highlighting the robustness and generalizability of the proposed framework.
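
To make the idea of rule-based completion concrete, here is a minimal sketch of how one proposed rule might be checked against a KB and then used to infer a missing fact. Everything in it is an illustrative assumption: the toy triples, the relation names, the helper functions `apply_rule` and `rule_confidence`, and the confidence score (the share of a rule's predictions already present in the KB, a standard rule-mining measure). It is not the paper's Rule Reasoner.

```python
from itertools import product

# Toy knowledge base of (head, relation, tail) triples; all facts are invented.
KB = {
    ("alice", "born_in", "paris"),
    ("bob", "born_in", "lyon"),
    ("carol", "born_in", "paris"),
    ("paris", "city_of", "france"),
    ("lyon", "city_of", "france"),
    ("alice", "citizen_of", "france"),
    ("bob", "citizen_of", "france"),
}


def apply_rule(kb, body1, body2, head):
    """Return facts implied by the rule head(x, z) <- body1(x, y) AND body2(y, z)."""
    first = [(h, t) for h, r, t in kb if r == body1]
    second = [(h, t) for h, r, t in kb if r == body2]
    return {
        (x, head, z)
        for (x, y), (y2, z) in product(first, second)
        if y == y2  # the two body atoms must share the middle entity
    }


def rule_confidence(kb, body1, body2, head):
    """Fraction of the rule's predictions that are already present in the KB."""
    predicted = apply_rule(kb, body1, body2, head)
    if not predicted:
        return 0.0
    return len(predicted & kb) / len(predicted)


# Hypothetical rule: citizen_of(x, z) <- born_in(x, y) AND city_of(y, z)
predicted = apply_rule(KB, "born_in", "city_of", "citizen_of")
confidence = rule_confidence(KB, "born_in", "city_of", "citizen_of")
new_facts = predicted - KB  # candidate completions not yet in the KB
print(confidence, new_facts)
```

A rule with low confidence on known facts would be filtered out before its predictions are added, which is one simple way to keep hallucinated rules from polluting the KB.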

RetinexMamba: Retinex-based Mamba for Low-light Image Enhancement

May 06, 2024

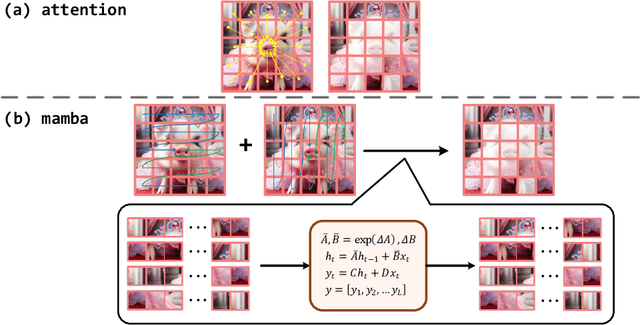

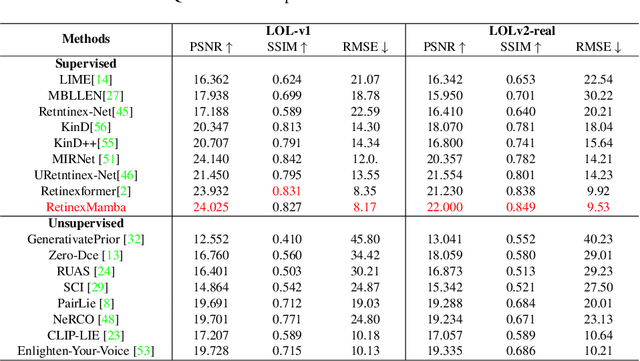

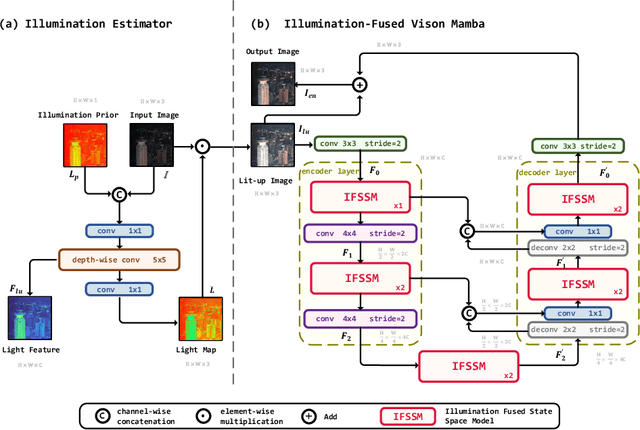

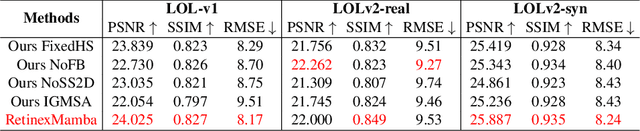

Abstract:In the field of low-light image enhancement, both traditional Retinex methods and advanced deep learning techniques such as Retinexformer have shown distinct advantages and limitations. Traditional Retinex methods, designed to mimic the human eye's perception of brightness and color, decompose images into illumination and reflection components but struggle with noise management and detail preservation under low light conditions. Retinexformer enhances illumination estimation through traditional self-attention mechanisms, but faces challenges with insufficient interpretability and suboptimal enhancement effects. To overcome these limitations, this paper introduces the RetinexMamba architecture. RetinexMamba not only captures the physical intuitiveness of traditional Retinex methods but also integrates the deep learning framework of Retinexformer, leveraging the computational efficiency of State Space Models (SSMs) to enhance processing speed. This architecture features innovative illumination estimators and damage restorer mechanisms that maintain image quality during enhancement. Moreover, RetinexMamba replaces the IG-MSA (Illumination-Guided Multi-Head Attention) in Retinexformer with a Fused-Attention mechanism, improving the model's interpretability. Experimental evaluations on the LOL dataset show that RetinexMamba outperforms existing deep learning approaches based on Retinex theory in both quantitative and qualitative metrics, confirming its effectiveness and superiority in enhancing low-light images.
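
The Retinex decomposition mentioned above treats an image as the element-wise product of a reflectance map and an illumination map. The sketch below illustrates that idea only. It assumes a simple heuristic (per-pixel channel maximum) as the illumination estimate and gamma correction as the enhancement step; `retinex_decompose` and `brighten` are hypothetical helper names, and none of this reproduces RetinexMamba's learned estimator or restorer.

```python
def retinex_decompose(image, eps=1e-6):
    """Split an RGB image into illumination and reflectance so that I ~= R * L.

    image: list of rows, each row a list of (r, g, b) floats in [0, 1].
    Illumination is taken as the per-pixel channel maximum (a heuristic assumption).
    """
    illumination = [[max(pixel) for pixel in row] for row in image]
    reflectance = [
        [tuple(c / (lum + eps) for c in pixel) for pixel, lum in zip(row, lum_row)]
        for row, lum_row in zip(image, illumination)
    ]
    return illumination, reflectance


def brighten(image, gamma=0.5):
    """Gamma-correct the illumination map, then recompose R * L**gamma."""
    illumination, reflectance = retinex_decompose(image)
    return [
        [
            tuple(min(1.0, c * (lum ** gamma)) for c in pixel)
            for pixel, lum in zip(row, lum_row)
        ]
        for row, lum_row in zip(reflectance, illumination)
    ]


# A tiny 1x2 "dark" image with invented values.
dark = [[(0.04, 0.02, 0.01), (0.09, 0.09, 0.03)]]
enhanced = brighten(dark)
print(enhanced)
```

Because only the illumination is adjusted, the ratios between colour channels at each pixel are preserved, which is the practical appeal of working in the decomposed space.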

AID: Attention Interpolation of Text-to-Image Diffusion

Mar 26, 2024

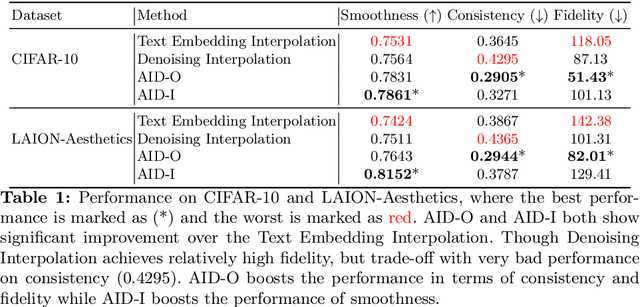

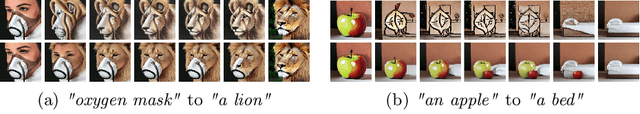

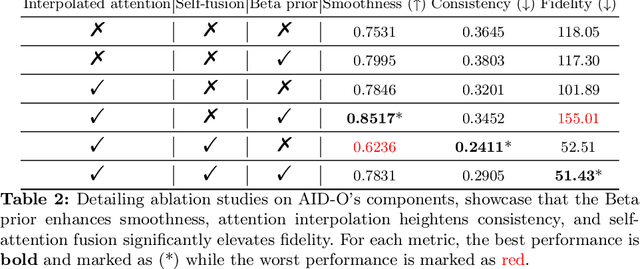

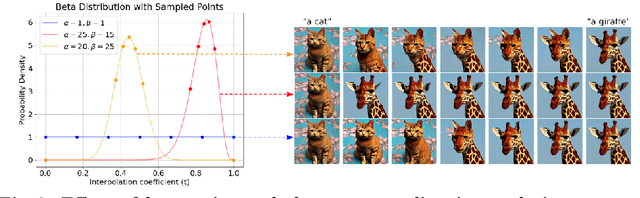

Abstract:Conditional diffusion models can create unseen images in various settings, aiding image interpolation. Interpolation in latent spaces is well-studied, but interpolation with specific conditions like text or poses is less understood. Simple approaches, such as linear interpolation in the space of conditions, often result in images that lack consistency, smoothness, and fidelity. To that end, we introduce a novel training-free technique named Attention Interpolation via Diffusion (AID). Our key contributions include 1) proposing an inner/outer interpolated attention layer; 2) fusing the interpolated attention with self-attention to boost fidelity; and 3) applying beta distribution to selection to increase smoothness. We also present a variant, Prompt-guided Attention Interpolation via Diffusion (PAID), that considers interpolation as a condition-dependent generative process. This method enables the creation of new images with greater consistency, smoothness, and efficiency, and offers control over the exact path of interpolation. Our approach demonstrates effectiveness for conceptual and spatial interpolation. Code and demo are available at https://github.com/QY-H00/attention-interpolation-diffusion.
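
As a point of reference, the naive baseline that the abstract describes as falling short, linear interpolation in the space of conditions, can be sketched as follows. The vectors stand in for condition embeddings (for example, text embeddings) and are invented for illustration; `lerp` and `interpolation_path` are hypothetical helpers. This shows the baseline only, not AID's attention-level interpolation or its Beta-distribution-based selection.

```python
def lerp(start, end, t):
    """Linearly blend two equal-length vectors; t=0 gives start, t=1 gives end."""
    return [(1.0 - t) * a + t * b for a, b in zip(start, end)]


def interpolation_path(start, end, steps):
    """Return `steps` evenly spaced conditions from start to end, inclusive."""
    if steps < 2:
        raise ValueError("steps must be at least 2 to include both endpoints")
    return [lerp(start, end, i / (steps - 1)) for i in range(steps)]


# Invented 3-dimensional stand-ins for two condition embeddings.
cond_a = [1.0, 0.0, 2.0]
cond_b = [0.0, 1.0, 4.0]
path = interpolation_path(cond_a, cond_b, steps=5)
for point in path:
    print(point)
```

Each intermediate vector would then be fed to the diffusion model as its condition. Evenly spaced coefficients do not guarantee perceptually even steps between the generated images, which is the smoothness problem the abstract raises.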

Can Language Models Act as Knowledge Bases at Scale?

Feb 22, 2024

Abstract:Large language models (LLMs) have demonstrated remarkable proficiency in understanding and generating responses to complex queries through large-scale pre-training. However, the efficacy of these models in memorizing and reasoning over large-scale structured knowledge, especially world knowledge that explicitly covers abundant factual information, remains questionable. Addressing this gap, our research investigates whether LLMs can effectively store, recall, and reason with knowledge on a large scale comparable to the latest knowledge bases (KBs) such as Wikidata. Specifically, we focus on three crucial aspects to study the viability: (1) the efficiency of LLMs with different sizes in memorizing the exact knowledge in the large-scale KB; (2) the flexibility of recalling the memorized knowledge in response to natural language queries; (3) the capability to infer new knowledge through reasoning. Our findings indicate that while LLMs hold promise as large-scale KBs capable of retrieving and responding with flexibility, enhancements in their reasoning capabilities are necessary to fully realize their potential.
