Qinwei Xu

Self-Supervised Masking for Unsupervised Anomaly Detection and Localization

May 13, 2022

Abstract: Anomaly detection and localization in multimedia data have recently received significant attention in the machine learning community. In real-world applications such as medical diagnosis and industrial defect detection, anomalies appear in only a fraction of the images. To extend the reconstruction-based anomaly detection architecture to localized anomalies, we propose a self-supervised learning approach based on random masking followed by restoration, named Self-Supervised Masking (SSM), for unsupervised anomaly detection and localization. SSM not only enhances the training of the inpainting network but also greatly improves the efficiency of mask prediction at inference. Through random masking, each image is augmented into a diverse set of training triplets, enabling the autoencoder to learn to reconstruct under masks of various sizes and shapes during training. To improve the efficiency and effectiveness of anomaly detection and localization at inference, we propose a novel progressive mask refinement approach that progressively uncovers the normal regions and finally locates the anomalous regions. The proposed SSM method outperforms several state-of-the-art methods for both anomaly detection and anomaly localization, achieving 98.3% AUC on Retinal-OCT and 93.9% AUC on MVTec AD, respectively.
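
The random-masking augmentation described above could be sketched roughly as follows. This is only an illustration, not the paper's implementation: the abstract does not define the triplet, so the sketch assumes it is (masked image, mask, original image), and it assumes a single rectangular mask. The function name `random_mask_triplet` and the parameters `min_frac` and `max_frac` are hypothetical.

```python
import numpy as np

def random_mask_triplet(image, rng, min_frac=0.1, max_frac=0.5):
    """Build one (masked image, mask, target) triplet from a single image.

    Assumption: a rectangular mask whose side lengths are drawn at random;
    the paper's masks may have other shapes.
    """
    h, w = image.shape[:2]
    # Draw a random mask height and width (upper bound is exclusive).
    mh = int(rng.integers(max(1, int(min_frac * h)), max(2, int(max_frac * h) + 1)))
    mw = int(rng.integers(max(1, int(min_frac * w)), max(2, int(max_frac * w) + 1)))
    # Draw a random top-left corner so the rectangle stays inside the image.
    top = int(rng.integers(0, h - mh + 1))
    left = int(rng.integers(0, w - mw + 1))

    mask = np.zeros((h, w), dtype=bool)
    mask[top:top + mh, left:left + mw] = True

    masked = image.copy()
    masked[mask] = 0  # hide the masked region from the network input
    return masked, mask, image

rng = np.random.default_rng(0)
image = np.ones((16, 16, 3), dtype=np.float32)
# Calling the function repeatedly yields different triplets for the same image.
triplets = [random_mask_triplet(image, rng) for _ in range(4)]
```

An inpainting autoencoder would then be trained to restore the target from the masked input; that training loop is omitted here because the abstract does not specify it.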

A Fourier-based Framework for Domain Generalization

May 24, 2021

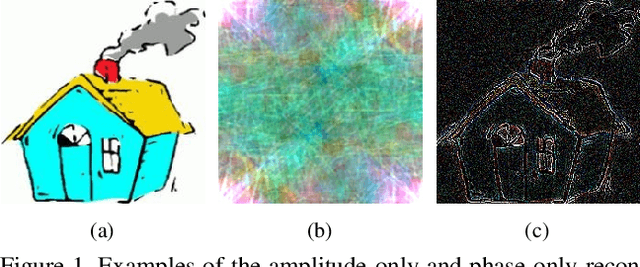

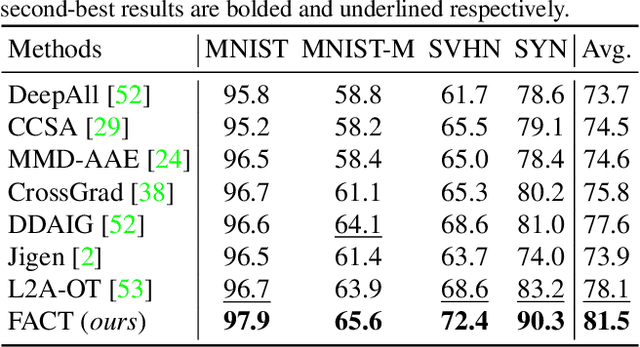

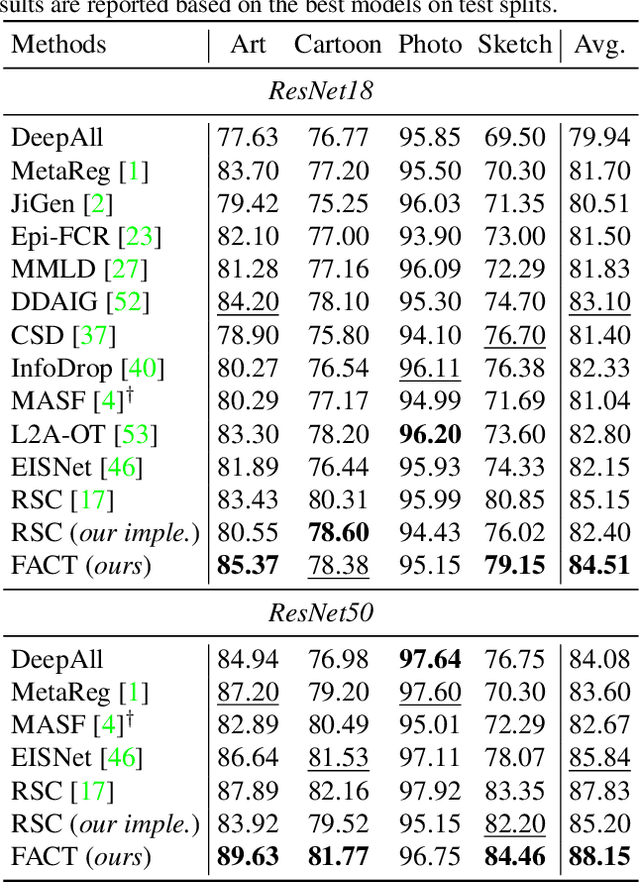

Abstract: Modern deep neural networks suffer from performance degradation when evaluated on test data drawn from a different distribution than the training data. Domain generalization aims to tackle this problem by learning transferable knowledge from multiple source domains in order to generalize to unseen target domains. This paper introduces a novel Fourier-based perspective for domain generalization. The main assumption is that the Fourier phase information contains high-level semantics and is not easily affected by domain shifts. To force the model to capture phase information, we develop a novel Fourier-based data augmentation strategy called amplitude mix, which linearly interpolates between the amplitude spectra of two images. A dual-formed consistency loss called co-teacher regularization is further introduced between the predictions induced from original and augmented images. Extensive experiments on three benchmarks demonstrate that the proposed method achieves state-of-the-art performance for domain generalization.
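
As a rough illustration of the amplitude mix idea, the NumPy sketch below interpolates the amplitude spectra of two images while keeping the phase of the first. It is a minimal sketch under assumptions, not the authors' code: the function name `amplitude_mix`, the interpolation weight `lam`, and the choice to keep the first image's phase are illustrative, and the abstract does not say how the weight is sampled.

```python
import numpy as np

def amplitude_mix(img_a, img_b, lam):
    """Mix the amplitude spectra of two same-shaped images (H, W) or (H, W, C).

    Assumption: the phase of img_a is kept unchanged, and lam in [0, 1]
    is the weight given to img_b's amplitude.
    """
    # 2-D FFT over the spatial axes; channels (if any) are transformed independently.
    fft_a = np.fft.fft2(img_a, axes=(0, 1))
    fft_b = np.fft.fft2(img_b, axes=(0, 1))

    amp_a, phase_a = np.abs(fft_a), np.angle(fft_a)
    amp_b = np.abs(fft_b)

    # Linear interpolation between the two amplitude spectra.
    amp_mix = (1.0 - lam) * amp_a + lam * amp_b

    # Recombine the mixed amplitude with the original phase and invert.
    mixed = amp_mix * np.exp(1j * phase_a)
    return np.real(np.fft.ifft2(mixed, axes=(0, 1)))

rng = np.random.default_rng(0)
img_a = rng.random((8, 8, 3))
img_b = rng.random((8, 8, 3))
augmented = amplitude_mix(img_a, img_b, lam=0.5)
```

With `lam=0` the function returns the first image up to floating-point error, so the weight controls how much low-level amplitude content is borrowed from the second image.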
