Qingsong Zhu

VEDA: Uneven light image enhancement via a vision-based exploratory data analysis model

May 25, 2023

Abstract: Uneven light image enhancement is a highly demanded task in many industrial image processing applications. Existing enhancement methods based on physical lighting models or deep-learning techniques often produce unnatural results. This is mainly because: 1) the assumptions and priors made by physical lighting model (PLM) based approaches are violated in most natural scenes, and 2) the training datasets or loss functions used by deep-learning based methods cannot handle the variety of real-world lighting scenarios well. In this paper, we propose a novel vision-based exploratory data analysis model (VEDA) for uneven light image enhancement. Our method is conceptually simple yet effective. A given image is first decomposed into a contrast image that preserves most of the perceptually important scene details and a residual image that preserves the lighting variations. This decomposition is performed at multiple scales using a retinal model that simulates the neuron response to light, and the enhanced result at each scale is obtained by manipulating the two images and recombining them. A weighted averaging strategy based on the residual image then combines the enhanced results at multiple scales into the output image. A similar weighting strategy can also be leveraged to reconcile noise suppression and detail preservation. Extensive experiments on different image datasets demonstrate that the proposed method achieves results competitive with state-of-the-art methods despite its simplicity. It requires no explicit assumptions or priors about the scene imaging process, and it involves neither iteratively solving optimization functions nor any learning procedure.
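The pipeline described in the abstract (decompose, manipulate, recombine, then average across scales) can be illustrated with a minimal sketch. This is not the authors' implementation: a Gaussian blur stands in for the retinal model, and the function names, the `gamma` and `gain` parameters, the scale values, and the mid-gray weighting rule are all assumptions made for illustration only.

```python
import numpy as np

def gaussian_blur(img, sigma):
    """Separable Gaussian blur with edge padding.

    Assumption: used here as a simple stand-in for the retinal model
    mentioned in the abstract, which is not specified there.
    """
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2 * sigma**2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="edge")
    # Filter along rows, then columns; "valid" trims the padding back off.
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")

def enhance_uneven_light(img, sigmas=(2.0, 8.0), gamma=0.6, gain=1.5):
    """Hypothetical multi-scale enhancement of a grayscale image in [0, 1]."""
    results, weights = [], []
    for sigma in sigmas:
        # Residual image: smooth lighting variation at this scale.
        residual = np.clip(gaussian_blur(img, sigma), 0.0, 1.0)
        # Contrast image: scene detail left after removing the lighting.
        contrast = img - residual
        # Manipulate both parts (brighten lighting, boost detail), recombine.
        enhanced = np.clip(residual**gamma + gain * contrast, 0.0, 1.0)
        results.append(enhanced)
        # Assumed weighting rule: favor scales whose residual is near mid-gray.
        weights.append(np.exp(-((residual - 0.5) ** 2) / (2 * 0.25**2)))
    weights = np.stack(weights)
    weights /= weights.sum(axis=0, keepdims=True)
    # Per-pixel weighted average of the per-scale results.
    return (weights * np.stack(results)).sum(axis=0)
```

Calling `enhance_uneven_light(img)` on a 2-D float array with values in [0, 1] returns an array of the same shape and range. The decomposition is exactly invertible by construction, since the contrast image is defined as the input minus the residual.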

Performance Evaluation of Edge-Directed Interpolation Methods for Images

Mar 26, 2013

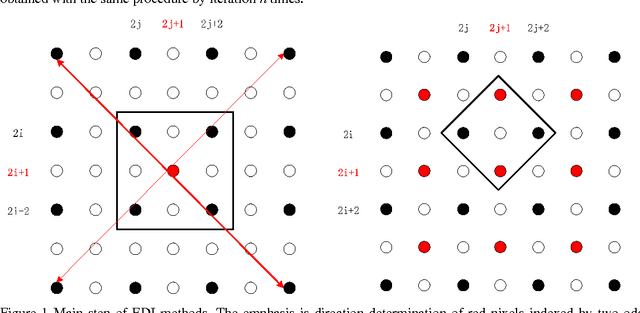

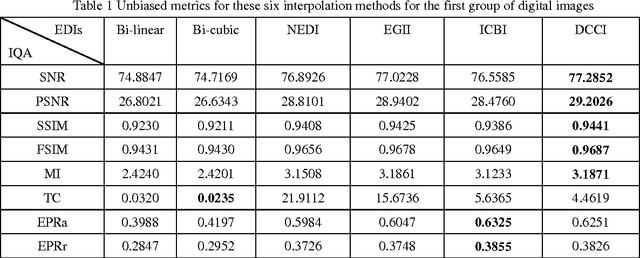

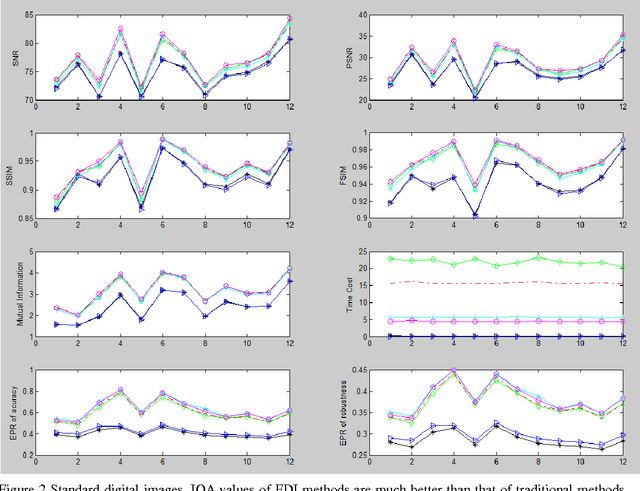

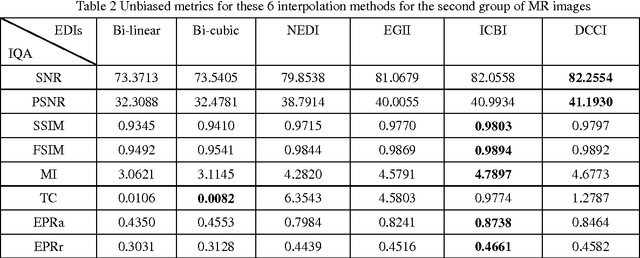

Abstract: Many interpolation methods have been developed to achieve high visual quality, but they often fail to preserve image structures. Edges carry much of the structural information used in detection, determination, and classification, so edge-adaptive interpolation approaches have become a focus of attention. In this paper, the performance of four edge-directed interpolation methods is compared with that of two traditional methods on two groups of images. The edge-directed methods are new edge-directed interpolation (NEDI), edge-guided image interpolation (EGII), iterative curvature-based interpolation (ICBI), and directional cubic convolution interpolation (DCCI); the traditional methods are bilinear and bicubic interpolation. Because no parameters have been reported for measuring the edge-preserving ability of edge-adaptive interpolation approaches, we propose two: one evaluates the accuracy of edge preservation and the other measures its robustness. Performance evaluation is based on six parameters. Objective assessment and visual analysis are presented, and conclusions are drawn from both theoretical background and practical results.
