Qiming Zhu

DOMAINEVAL: An Auto-Constructed Benchmark for Multi-Domain Code Generation

Aug 23, 2024

Abstract: Code benchmarks such as HumanEval are widely adopted to evaluate the capabilities of Large Language Models (LLMs), providing insights into their strengths and weaknesses. However, current benchmarks primarily exercise LLMs' capability on common coding tasks (e.g., bubble sort, greatest common divisor), leaving domain-specific coding tasks (e.g., computation, system, cryptography) unexplored. To fill this gap, we propose a multi-domain code benchmark, DOMAINEVAL, designed to evaluate LLMs' coding capabilities thoroughly. Our pipeline works in a fully automated manner, enabling a push-bottom construction from code repositories into formatted subjects under study. Interesting findings are observed by evaluating 12 representative LLMs against DOMAINEVAL. We notice that LLMs are generally good at computation tasks while falling short on cryptography and system coding tasks. The performance gap can be as much as 68.94% (80.94% - 12.0%) in some LLMs. We also observe that generating more samples can increase the overall performance of LLMs, while the domain bias may even increase. The contributions of this study include a code generation benchmark dataset DOMAINEVAL, encompassing six popular domains, a fully automated pipeline for constructing code benchmarks, and an identification of the limitations of LLMs in code generation tasks based on their performance on DOMAINEVAL, providing directions for future research improvements. The leaderboard is available at https://domaineval.github.io/.

Executing Natural Language-Described Algorithms with Large Language Models: An Investigation

Feb 23, 2024

Abstract: Executing computer programs described in natural language has long been a pursuit of computer science. With the advent of enhanced natural language understanding capabilities exhibited by large language models (LLMs), the path toward this goal has been illuminated. In this paper, we seek to examine the capacity of present-day LLMs to comprehend and execute algorithms outlined in natural language. We established an algorithm test set sourced from Introduction to Algorithms, a well-known textbook that contains many representative, widely used algorithms. To systematically assess LLMs' code execution abilities, we selected 30 algorithms, generated 300 randomly sampled instances in total, and evaluated whether popular LLMs can understand and execute these algorithms. Our findings reveal that LLMs, notably GPT-4, can effectively execute programs described in natural language, as long as no heavy numeric computation is involved. We believe our findings contribute to evaluating LLMs' code execution abilities and would encourage further investigation and application of the computation power of LLMs.

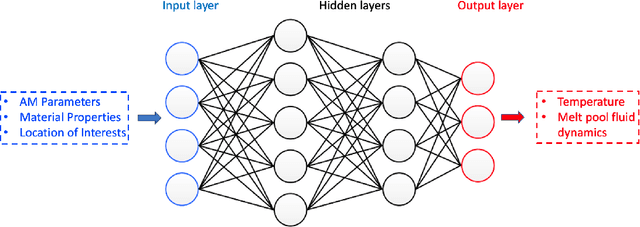

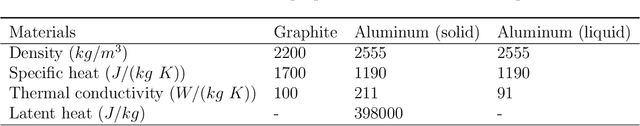

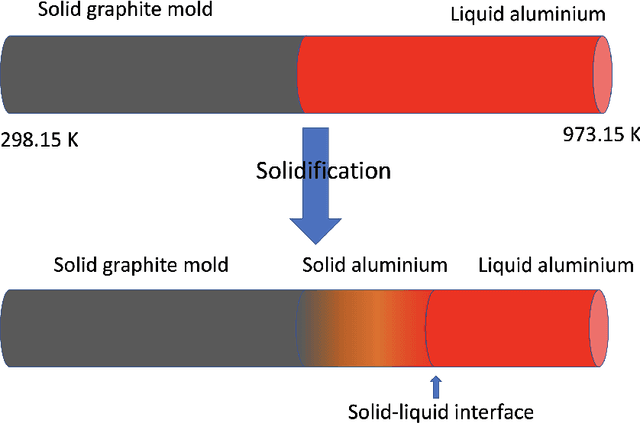

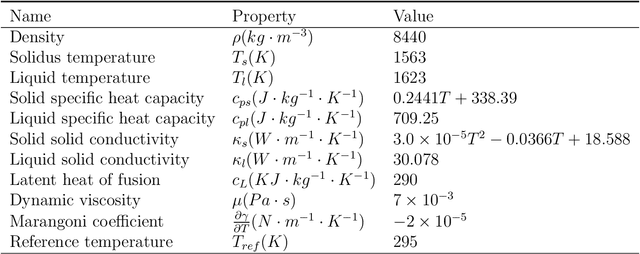

Machine learning for metal additive manufacturing: Predicting temperature and melt pool fluid dynamics using physics-informed neural networks

Jul 28, 2020

Abstract: The recent explosion of machine learning (ML) and artificial intelligence (AI) shows great potential in the breakthrough of metal additive manufacturing (AM) process modeling. However, the success of conventional machine learning tools in data science is primarily attributed to the unprecedented large amount of labeled data-sets (big data), which can be either obtained by experiments or first-principle simulations. Unfortunately, these labeled data-sets are expensive to obtain in AM due to the high expense of the AM experiments and prohibitive computational cost of high-fidelity simulations. We propose a physics-informed neural network (PINN) framework that fuses both data and first physical principles, including conservation laws of momentum, mass, and energy, into the neural network to inform the learning processes. To the best knowledge of the authors, this is the first application of PINN to three-dimensional AM process modeling. Besides, we propose a hard-type approach for Dirichlet boundary conditions (BCs) based on a Heaviside function, which can not only enforce the BCs but also accelerate the learning process. The PINN framework is applied to two representative metal manufacturing problems, including the 2018 NIST AM-Benchmark test series. We carefully assess the performance of the PINN model by comparing the predictions with available experimental data and high-fidelity simulation results. The investigations show that the PINN, owing to the additional physical knowledge, can accurately predict the temperature and melt pool dynamics during metal AM processes with only a moderate amount of labeled data-sets. The foray of PINN into metal AM shows the great potential of physics-informed deep learning for broader applications to advanced manufacturing.
