Qiming Guo

Spatio-Temporal Graph Unlearning

Nov 12, 2025

Abstract:Spatio-temporal graphs are widely used in modeling complex dynamic processes such as traffic forecasting, molecular dynamics, and healthcare monitoring. Recently, stringent privacy regulations such as GDPR and CCPA have introduced significant new challenges for existing spatio-temporal graph models, requiring complete unlearning of unauthorized data. Since each node in a spatio-temporal graph diffuses information globally across both spatial and temporal dimensions, existing unlearning methods primarily designed for static graphs and localized data removal cannot efficiently erase a single node without incurring costs nearly equivalent to full model retraining. Therefore, an effective approach for complete spatio-temporal graph unlearning is a pressing need. To address this, we propose CallosumNet, a divide-and-conquer spatio-temporal graph unlearning framework inspired by the corpus callosum structure that facilitates communication between the brain's two hemispheres. CallosumNet incorporates two novel techniques: (1) Enhanced Subgraph Construction (ESC), which adaptively constructs multiple localized subgraphs based on several factors, including biologically-inspired virtual ganglions; and (2) Global Ganglion Bridging (GGB), which reconstructs global spatio-temporal dependencies from these localized subgraphs, effectively restoring the full graph representation. Empirical results on four diverse real-world datasets show that CallosumNet achieves complete unlearning with only 1%-2% relative MAE loss compared to the gold model, significantly outperforming state-of-the-art baselines. Ablation studies verify the effectiveness of both proposed techniques.

Attacking LLMs and AI Agents: Advertisement Embedding Attacks Against Large Language Models

Aug 25, 2025

Abstract:We introduce Advertisement Embedding Attacks (AEA), a new class of LLM security threats that stealthily inject promotional or malicious content into model outputs and AI agents. AEA operate through two low-cost vectors: (1) hijacking third-party service-distribution platforms to prepend adversarial prompts, and (2) publishing back-doored open-source checkpoints fine-tuned with attacker data. Unlike conventional attacks that degrade accuracy, AEA subvert information integrity, causing models to return covert ads, propaganda, or hate speech while appearing normal. We detail the attack pipeline, map five stakeholder victim groups, and present an initial prompt-based self-inspection defense that mitigates these injections without additional model retraining. Our findings reveal an urgent, under-addressed gap in LLM security and call for coordinated detection, auditing, and policy responses from the AI-safety community.

Accessible and Portable LLM Inference by Compiling Computational Graphs into SQL

Feb 05, 2025

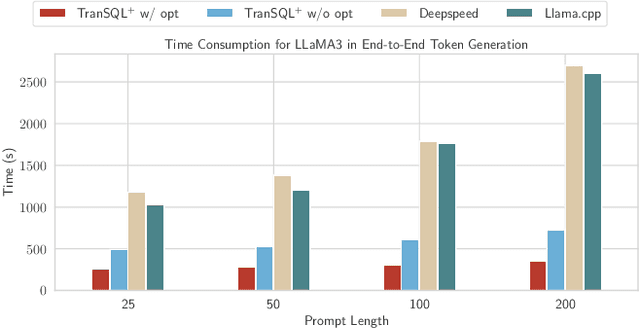

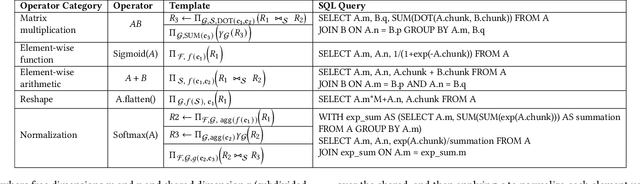

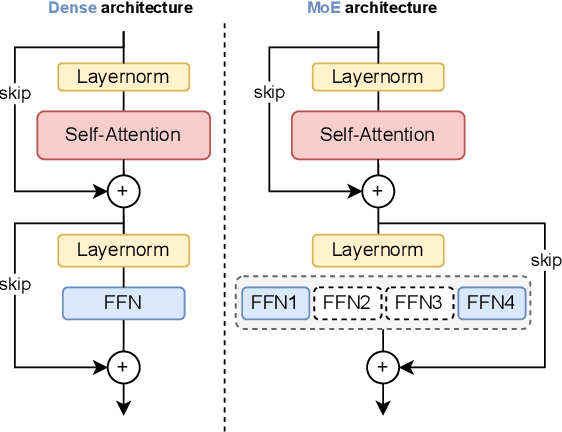

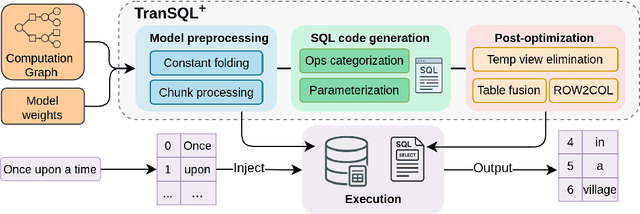

Abstract:Serving large language models (LLMs) often demands specialized hardware, dedicated frameworks, and substantial development efforts, which restrict their accessibility, especially for edge devices and organizations with limited technical resources. We propose a novel compiler that translates LLM inference graphs into SQL queries, enabling relational databases, one of the most widely used and mature software systems globally, to serve as the runtime. By mapping neural operators such as matrix multiplication and attention into relational primitives like joins and aggregations, our approach leverages database capabilities, including disk-based data management and native caching. Supporting key transformer components, such as attention mechanisms and key-value caching, our system generates SQL pipelines for end-to-end LLM inference. Using the Llama3 family as a case study, we demonstrate up to 30x speedup in token generation for memory-constrained scenarios comparable to competitive CPU-based frameworks. Our work offers an accessible, portable, and efficient solution, facilitating the serving of LLMs across diverse deployment environments.
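
To make the operator-to-relational mapping concrete, here is a minimal sketch of matrix multiplication expressed as a join plus an aggregation. It is not the paper's compiler or its generated SQL: the table names `A` and `B`, the `(row, col, val)` coordinate layout, the helper `matmul_sql`, and the use of Python's built-in `sqlite3` as the database are all assumptions made for illustration.

```python
import sqlite3


def matmul_sql(a, b):
    """Multiply two dense matrices (lists of rows) with a SQL join + GROUP BY.

    Hypothetical helper for illustration only; the table layout is assumed.
    """
    conn = sqlite3.connect(":memory:")
    # Store each matrix in coordinate form: one row per (index, index, value).
    conn.execute("CREATE TABLE A (i INTEGER, k INTEGER, val REAL)")
    conn.execute("CREATE TABLE B (k INTEGER, j INTEGER, val REAL)")
    conn.executemany(
        "INSERT INTO A VALUES (?, ?, ?)",
        [(i, k, v) for i, row in enumerate(a) for k, v in enumerate(row)],
    )
    conn.executemany(
        "INSERT INTO B VALUES (?, ?, ?)",
        [(k, j, v) for k, row in enumerate(b) for j, v in enumerate(row)],
    )
    # The join pairs entries that share the inner index k;
    # the aggregation sums the products for each output cell (i, j).
    rows = conn.execute(
        "SELECT A.i, B.j, SUM(A.val * B.val) "
        "FROM A JOIN B ON A.k = B.k "
        "GROUP BY A.i, B.j"
    ).fetchall()
    conn.close()

    # Scatter the (i, j, value) result rows back into a dense matrix.
    out = [[0.0] * len(b[0]) for _ in range(len(a))]
    for i, j, v in rows:
        out[i][j] = v
    return out


result = matmul_sql([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(result)
```

The same pattern (join on the shared index, then aggregate) is one plausible way to read the abstract's claim about joins and aggregations; how the actual system handles attention, key-value caching, or chunked weight storage is not specified here.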

Decoding Linguistic Nuances in Mental Health Text Classification Using Expressive Narrative Stories

Dec 20, 2024

Abstract:Recent advancements in NLP have spurred significant interest in analyzing social media text data for identifying linguistic features indicative of mental health issues. However, the domain of Expressive Narrative Stories (ENS), deeply personal and emotionally charged narratives that offer rich psychological insights, remains underexplored. This study bridges this gap by utilizing a dataset sourced from Reddit, focusing on ENS from individuals with and without self-declared depression. Our research evaluates the utility of advanced language models, BERT and MentalBERT, against traditional models. We find that traditional models are sensitive to the absence of explicit topic-related words, which could limit their applicability to ENS that lack clear mental health terminology. Although MentalBERT is designed to better handle psychiatric contexts, it demonstrated a dependency on specific topic words for classification accuracy, raising concerns about its application when explicit mental health terms are sparse (P-value<0.05). In contrast, BERT exhibited minimal sensitivity to the absence of topic words in ENS, suggesting its superior capability to understand deeper linguistic features, making it more effective for real-world applications. Both BERT and MentalBERT excel at recognizing linguistic nuances and maintaining classification accuracy even when narrative order is disrupted. This resilience is statistically significant, with sentence shuffling showing substantial impacts on model performance (P-value<0.05), especially evident in ENS comparisons between individuals with and without mental health declarations. These findings underscore the importance of exploring ENS for deeper insights into mental health-related narratives, advocating for a nuanced approach to mental health text analysis that moves beyond mere keyword detection.

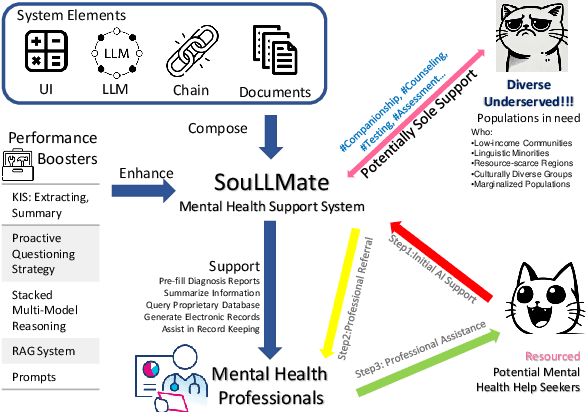

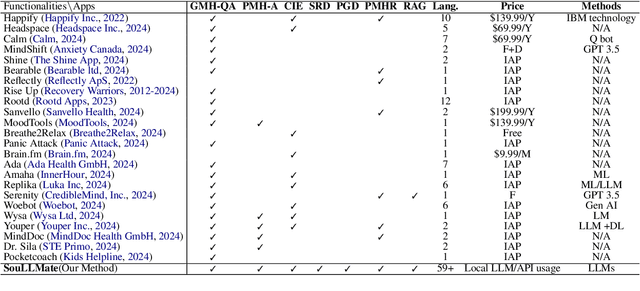

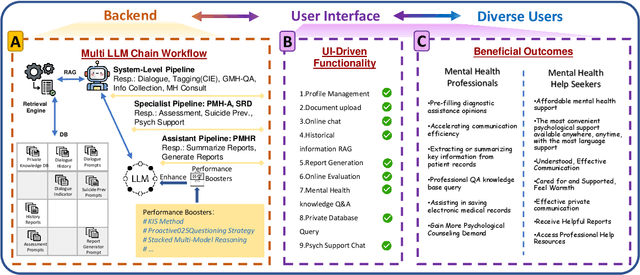

SouLLMate: An Application Enhancing Diverse Mental Health Support with Adaptive LLMs, Prompt Engineering, and RAG Techniques

Oct 17, 2024

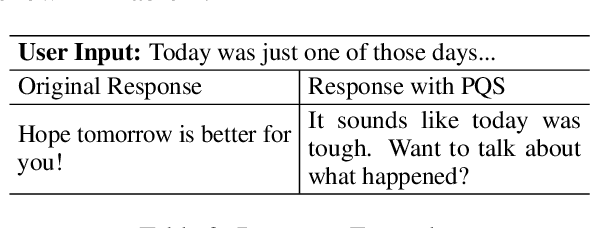

Abstract:Mental health issues significantly impact individuals' daily lives, yet many do not receive the help they need even with available online resources. This study aims to provide diverse, accessible, stigma-free, personalized, and real-time mental health support through cutting-edge AI technologies. It makes the following contributions: (1) Conducting an extensive survey of recent mental health support methods to identify prevalent functionalities and unmet needs. (2) Introducing SouLLMate, an adaptive LLM-driven system that integrates LLM technologies, Chain, Retrieval-Augmented Generation (RAG), prompt engineering, and domain knowledge. This system offers advanced features such as Risk Detection and Proactive Guidance Dialogue, and utilizes RAG for personalized profile uploads and Conversational Information Extraction. (3) Developing novel evaluation approaches for preliminary assessments and risk detection via professionally annotated interview data and real-life suicide tendency data. (4) Proposing the Key Indicator Summarization (KIS), Proactive Questioning Strategy (PQS), and Stacked Multi-Model Reasoning (SMMR) methods to enhance model performance and usability through context-sensitive response adjustments, semantic coherence evaluations, and enhanced accuracy of long-context reasoning in language models. This study contributes to advancing mental health support technologies, potentially improving the accessibility and effectiveness of mental health care globally.
