Qile Liu

NeuroCLIP: Brain-Inspired Prompt Tuning for EEG-to-Image Multimodal Contrastive Learning

Nov 12, 2025

Abstract:Recent advances in brain-inspired artificial intelligence have sought to align neural signals with visual semantics using multimodal models such as CLIP. However, existing methods often treat CLIP as a static feature extractor, overlooking its adaptability to neural representations and the inherent physiological-symbolic gap in EEG-image alignment. To address these challenges, we present NeuroCLIP, a prompt tuning framework tailored for EEG-to-image contrastive learning. Our approach introduces three core innovations: (1) We design a dual-stream visual embedding pipeline that combines dynamic filtering and token-level fusion to generate instance-level adaptive prompts, which guide the adjustment of patch embedding tokens based on image content, thereby enabling fine-grained modulation of visual representations under neural constraints; (2) We are the first to introduce visual prompt tokens into EEG-image alignment, acting as global, modality-level prompts that work in conjunction with instance-level adjustments. These visual prompt tokens are inserted into the Transformer architecture to facilitate neural-aware adaptation and parameter optimization at a global level; (3) Inspired by neuroscientific principles of human visual encoding, we propose a refined contrastive loss that better models the semantic ambiguity and cross-modal noise present in EEG signals. On the THINGS-EEG2 dataset, NeuroCLIP achieves a Top-1 accuracy of 63.2% in zero-shot image retrieval, surpassing the previous best method by +12.3%, and demonstrates strong generalization under inter-subject conditions (+4.6% Top-1), highlighting the potential of physiology-aware prompt tuning for bridging brain signals and visual semantics.
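To make the EEG-to-image retrieval setting above concrete, here is a minimal sketch of how Top-1 retrieval accuracy and a standard CLIP-style symmetric contrastive (InfoNCE) loss can be computed from paired embeddings. It is not the refined loss proposed in the abstract, whose form is not given here; the function names, the toy embeddings, and the temperature of 0.07 are illustrative assumptions.

```python
import math

def l2_normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def similarity_matrix(eeg_embs, img_embs):
    """Cosine similarity between every EEG embedding (rows) and image embedding (columns)."""
    eeg = [l2_normalize(v) for v in eeg_embs]
    img = [l2_normalize(v) for v in img_embs]
    return [[sum(a * b for a, b in zip(e, i)) for i in img] for e in eeg]

def top1_accuracy(sim):
    """Fraction of EEG queries whose most similar image is the paired one (same index)."""
    hits = 0
    for row_idx, row in enumerate(sim):
        best = max(range(len(row)), key=row.__getitem__)
        hits += int(best == row_idx)
    return hits / len(sim)

def symmetric_info_nce(sim, temperature=0.07):
    """Standard CLIP-style loss: cross-entropy over rows and over columns, averaged.
    The temperature value is an assumption, not taken from the abstract."""
    def mean_cross_entropy(matrix):
        total = 0.0
        for i, row in enumerate(matrix):
            logits = [s / temperature for s in row]
            mx = max(logits)  # subtract the max for numerical stability
            log_z = mx + math.log(sum(math.exp(l - mx) for l in logits))
            total += log_z - logits[i]
        return total / len(matrix)

    transposed = [list(col) for col in zip(*sim)]
    return 0.5 * (mean_cross_entropy(sim) + mean_cross_entropy(transposed))

# Toy paired embeddings (hypothetical values): EEG trial i corresponds to image i.
eeg_embs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
img_embs = [[2.0, 0.1], [0.1, 3.0], [1.0, 1.2]]

aligned = similarity_matrix(eeg_embs, img_embs)
misaligned = similarity_matrix(eeg_embs, img_embs[::-1])
```

In this toy setup, the correctly paired embeddings yield a lower loss and a higher Top-1 accuracy than the deliberately shuffled pairing, which is the behaviour a contrastive objective is meant to reward.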

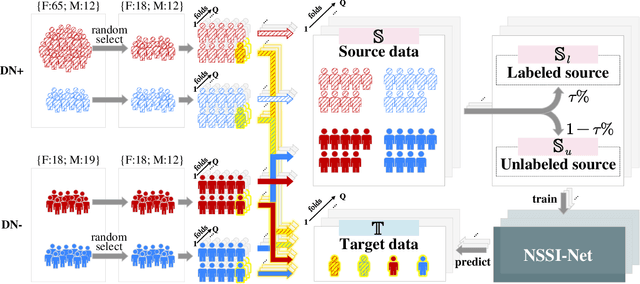

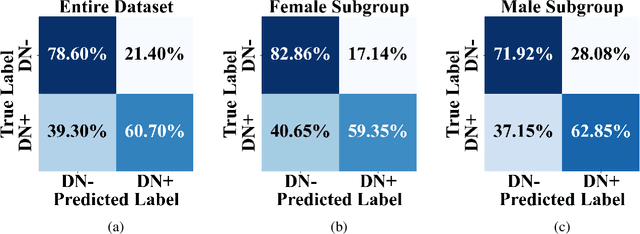

NSSI-Net: Multi-Concept Generative Adversarial Network for Non-Suicidal Self-Injury Detection Using High-Dimensional EEG Signals in a Semi-Supervised Learning Framework

Oct 16, 2024

Abstract:Non-suicidal self-injury (NSSI) is a serious threat to the physical and mental health of adolescents, significantly increasing the risk of suicide and attracting widespread public concern. Electroencephalography (EEG), as an objective tool for identifying brain disorders, holds great promise. However, extracting meaningful and reliable features from high-dimensional EEG data, especially by integrating spatiotemporal brain dynamics into informative representations, remains a major challenge. In this study, we introduce an advanced semi-supervised adversarial network, NSSI-Net, to effectively model EEG features related to NSSI. NSSI-Net consists of two key modules: a spatial-temporal feature extraction module and a multi-concept discriminator. In the spatial-temporal feature extraction module, an integrated 2D convolutional neural network (2D-CNN) and a bi-directional Gated Recurrent Unit (BiGRU) are used to capture both spatial and temporal dynamics in EEG data. In the multi-concept discriminator, signal, gender, domain, and disease levels are fully explored to extract meaningful EEG features, accounting for individual, demographic, and disease variations across a diverse population. Based on self-collected NSSI data (n=114), the model's effectiveness and reliability are demonstrated, with a 7.44% improvement in performance compared to existing machine learning and deep learning methods. This study advances the understanding and early diagnosis of NSSI in adolescents with depression, enabling timely intervention. The source code is available at https://github.com/Vesan-yws/NSSINet.

Emotion-Agent: Unsupervised Deep Reinforcement Learning with Distribution-Prototype Reward for Continuous Emotional EEG Analysis

Aug 22, 2024

Abstract:Continuous electroencephalography (EEG) signals are widely used in affective brain-computer interface (aBCI) applications. However, not all continuously collected EEG signals are relevant or meaningful to the task at hand (e.g., wandering thoughts). On the other hand, manually labeling the relevant parts is nearly impossible due to varying engagement patterns across different tasks and individuals. Therefore, effectively and efficiently identifying the important parts from continuous EEG recordings is crucial for downstream BCI tasks, as it directly impacts the accuracy and reliability of the results. In this paper, we propose a novel unsupervised deep reinforcement learning framework, called Emotion-Agent, to automatically identify relevant and informative emotional moments from continuous EEG signals. Specifically, Emotion-Agent involves unsupervised deep reinforcement learning combined with a heuristic algorithm. We first use the heuristic algorithm to perform an initial global search and form prototype representations of the EEG signals, which facilitates the efficient exploration of the signal space and identifies potential regions of interest. Then, we design distribution-prototype reward functions to estimate the interactions between samples and prototypes, ensuring that the identified parts are both relevant and representative of the underlying emotional states. Emotion-Agent is trained using Proximal Policy Optimization (PPO) to achieve stable and efficient convergence. Our experiments compare the performance with and without Emotion-Agent. The results demonstrate that selecting relevant and informative emotional parts before inputting them into downstream tasks enhances the accuracy and reliability of aBCI applications.

EEG-SCMM: Soft Contrastive Masked Modeling for Cross-Corpus EEG-Based Emotion Recognition

Aug 17, 2024

Abstract:Emotion recognition using electroencephalography (EEG) signals has garnered widespread attention in recent years. However, existing studies have struggled to develop a sufficiently generalized model suitable for different datasets without re-training (cross-corpus). This difficulty arises because distribution differences across datasets far exceed the intra-dataset variability. To solve this problem, we propose a novel Soft Contrastive Masked Modeling (SCMM) framework. Inspired by emotional continuity, SCMM integrates soft contrastive learning with a new hybrid masking strategy to effectively mine the "short-term continuity" characteristics inherent in human emotions. During the self-supervised learning process, soft weights are assigned to sample pairs, enabling adaptive learning of similarity relationships across samples. Furthermore, we introduce an aggregator that performs a weighted aggregation of complementary information from multiple close samples, based on pairwise similarities among samples, to enhance fine-grained feature representation; the aggregated representation is then used to reconstruct the original sample. Extensive experiments on the SEED, SEED-IV and DEAP datasets show that SCMM achieves state-of-the-art (SOTA) performance, outperforming the second-best method by an average accuracy of 4.26% under two types of cross-corpus conditions (same-class and different-class) for EEG-based emotion recognition.
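The idea of aggregating information from close samples according to pairwise similarity can be illustrated with a generic sketch: pick the k most similar neighbours of an anchor by cosine similarity, turn their similarities into softmax weights, and take the weighted average of their feature vectors. This is only one plausible reading of the description above, not SCMM's actual aggregator; the function names, `k`, the temperature, and the toy features are all assumptions.

```python
import math

def cosine(a, b):
    """Cosine similarity between two feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def aggregate_neighbors(samples, index, k=2, temperature=0.5):
    """Weighted average of the k samples most similar to samples[index].

    Returns the aggregated vector and a list of (neighbour_index, weight) pairs.
    Both k and temperature are hypothetical hyperparameters.
    """
    anchor = samples[index]
    sims = [(cosine(anchor, s), j) for j, s in enumerate(samples) if j != index]
    top = sorted(sims, reverse=True)[:k]  # most similar neighbours first

    # Softmax over the selected similarities (max-subtracted for stability).
    mx = max(s for s, _ in top)
    exps = [math.exp((s - mx) / temperature) for s, _ in top]
    z = sum(exps)
    weights = [e / z for e in exps]

    dim = len(anchor)
    aggregated = [
        sum(w * samples[j][d] for w, (_, j) in zip(weights, top))
        for d in range(dim)
    ]
    return aggregated, [(j, w) for w, (_, j) in zip(weights, top)]

# Toy feature vectors (hypothetical): samples 1 and 3 lie close to sample 0.
samples = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.8, 0.3]]
aggregated, neighbors = aggregate_neighbors(samples, index=0, k=2)
```

Here the dissimilar sample at index 2 is excluded, and the closer of the two remaining neighbours receives the larger weight.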

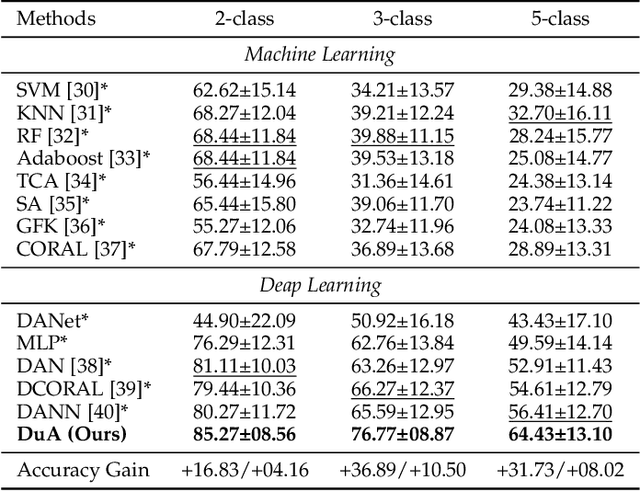

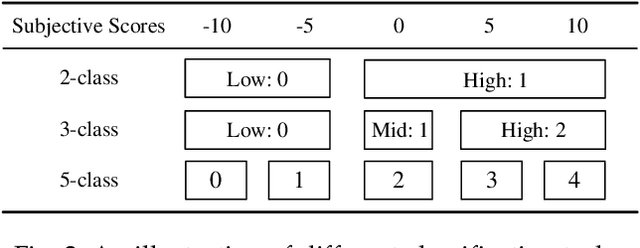

DuA: Dual Attentive Transformer in Long-Term Continuous EEG Emotion Analysis

Jul 30, 2024

Abstract:Affective brain-computer interfaces (aBCIs) are increasingly recognized for their potential in monitoring and interpreting emotional states through electroencephalography (EEG) signals. Current EEG-based emotion recognition methods perform well with short segments of EEG data. However, these methods encounter significant challenges in real-life scenarios where emotional states evolve over extended periods. To address this issue, we propose a Dual Attentive (DuA) transformer framework for long-term continuous EEG emotion analysis. Unlike segment-based approaches, the DuA transformer processes an entire EEG trial as a whole, identifying emotions at the trial level, referred to as trial-based emotion analysis. This framework is designed to adapt to varying signal lengths, providing a substantial advantage over traditional methods. The DuA transformer incorporates three key modules: the spatial-spectral network module, the temporal network module, and the transfer learning module. The spatial-spectral network module simultaneously captures spatial and spectral information from EEG signals, while the temporal network module detects temporal dependencies within long-term EEG data. The transfer learning module enhances the model's adaptability across different subjects and conditions. We extensively evaluate the DuA transformer using a self-constructed long-term EEG emotion database, along with two benchmark EEG emotion databases. On the basis of the trial-based leave-one-subject-out cross-subject cross-validation protocol, our experimental results demonstrate that the proposed DuA transformer significantly outperforms existing methods in long-term continuous EEG emotion analysis, with an average enhancement of 5.28%.
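The trial-based leave-one-subject-out protocol mentioned above can be sketched as follows: each subject in turn is held out for testing, and all trials from the remaining subjects form the training set. The function name and the subject labels are illustrative assumptions; the abstract does not specify how the folds are implemented.

```python
def leave_one_subject_out(trial_subjects):
    """Yield (held_out_subject, train_indices, test_indices) for each subject.

    trial_subjects[i] is the subject identifier of trial i, so every trial of
    the held-out subject lands in the test set and never in the training set.
    """
    for held_out in sorted(set(trial_subjects)):
        train = [i for i, s in enumerate(trial_subjects) if s != held_out]
        test = [i for i, s in enumerate(trial_subjects) if s == held_out]
        yield held_out, train, test

# Hypothetical example: six trials recorded from three subjects.
trial_subjects = ["s1", "s1", "s2", "s3", "s3", "s3"]
folds = list(leave_one_subject_out(trial_subjects))
```

Because whole trials are assigned to folds by subject, no data from the test subject leaks into training, which is what makes the evaluation cross-subject.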

Joint Contrastive Learning with Feature Alignment for Cross-Corpus EEG-based Emotion Recognition

Apr 15, 2024

Abstract:The integration of human emotions into multimedia applications shows great potential for enriching user experiences and enhancing engagement across various digital platforms. Unlike traditional methods such as questionnaires, facial expressions, and voice analysis, brain signals offer a more direct and objective understanding of emotional states. However, in the field of electroencephalography (EEG)-based emotion recognition, previous studies have primarily concentrated on training and testing EEG models within a single dataset, overlooking the variability across different datasets. This oversight leads to significant performance degradation when applying EEG models to cross-corpus scenarios. In this study, we propose a novel Joint Contrastive learning framework with Feature Alignment (JCFA) to address cross-corpus EEG-based emotion recognition. The JCFA model operates in two main stages. In the pre-training stage, a joint domain contrastive learning strategy is introduced to characterize generalizable time-frequency representations of EEG signals, without the use of labeled data. It extracts robust time-based and frequency-based embeddings for each EEG sample, and then aligns them within a shared latent time-frequency space. In the fine-tuning stage, JCFA is refined in conjunction with downstream tasks, where the structural connections among brain electrodes are considered. The model capability could be further enhanced for the application in emotion detection and interpretation. Extensive experimental results on two well-recognized emotional datasets show that the proposed JCFA model achieves state-of-the-art (SOTA) performance, outperforming the second-best method by an average accuracy increase of 4.09% in cross-corpus EEG-based emotion recognition tasks.
