Purushotam Radadia

Ashwin: Plug-and-Play System for Machine-Human Image Annotation

Sep 09, 2016

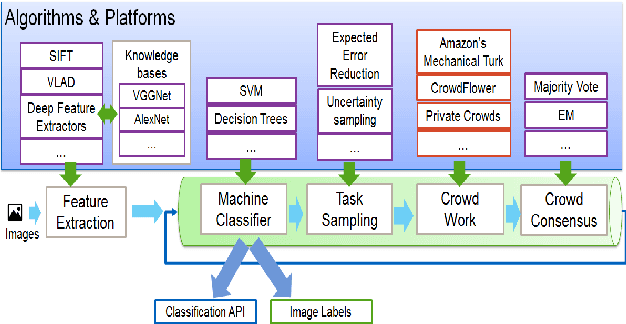

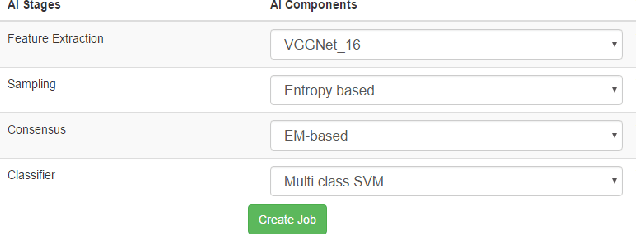

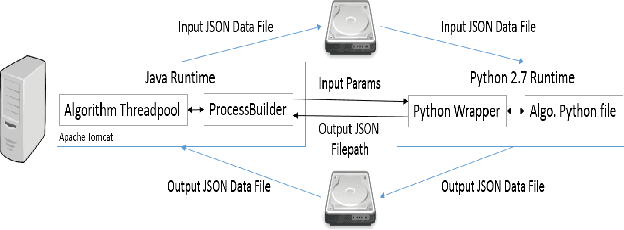

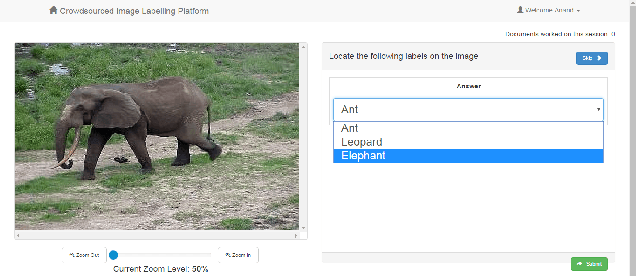

Abstract: We present an end-to-end machine-human image annotation system in which each component can be attached in a plug-and-play fashion. These components include Feature Extraction, Machine Classifier, Task Sampling and Crowd Consensus.

Feasibility of Post-Editing Speech Transcriptions with a Mismatched Crowd

Sep 07, 2016

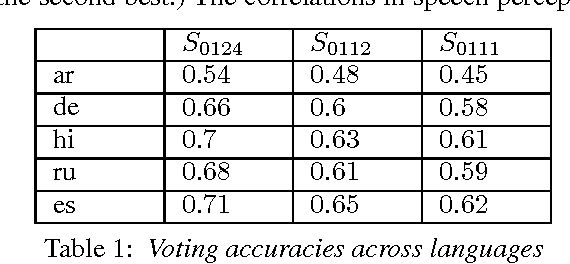

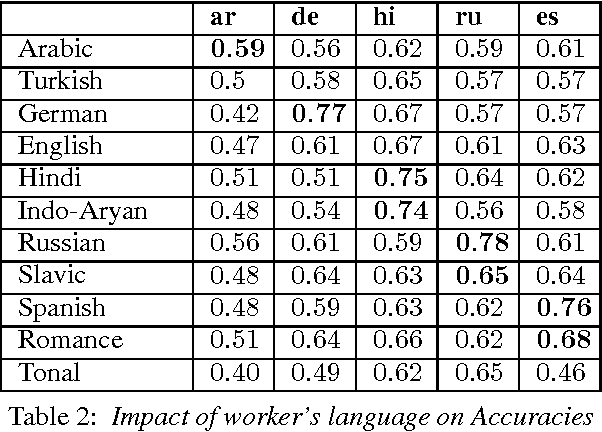

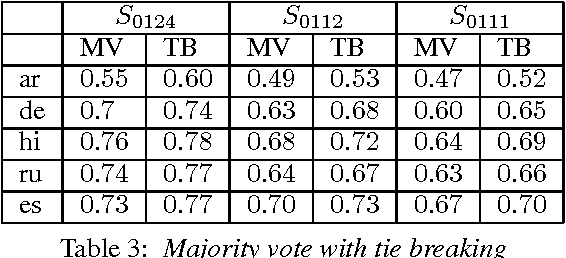

Abstract: Manual correction of speech transcription can involve a selection from plausible transcriptions. Recent work has shown the feasibility of employing a mismatched crowd for speech transcription. However, it is yet to be established whether a mismatched worker has sufficiently fine-granular speech perception to choose among the phonetically proximate options that are likely to be generated from the trellis of an ASRU. Hence, we consider five languages: Arabic, German, Hindi, Russian and Spanish. For each, we generate synthetic, phonetically proximate options that emulate post-editing scenarios of varying difficulty. We consistently observe non-trivial crowd ability to choose among fine-granular options.
