Pravin Nair

Softmax is $1/2$-Lipschitz: A tight bound across all $\ell_p$ norms

Oct 27, 2025

Abstract:The softmax function is a basic operator in machine learning and optimization, used in classification, attention mechanisms, reinforcement learning, game theory, and problems involving log-sum-exp terms. Existing robustness guarantees for learning models and convergence analyses of optimization algorithms typically take the softmax operator to have a Lipschitz constant of $1$ with respect to the $\ell_2$ norm. In this work, we prove that the softmax function is contractive with Lipschitz constant $1/2$, uniformly across all $\ell_p$ norms with $p \ge 1$. We also show that the local Lipschitz constant of softmax attains $1/2$ for $p = 1$ and $p = \infty$, whereas for $p \in (1,\infty)$ it remains strictly below $1/2$, with the supremum $1/2$ achieved only in the limit. To our knowledge, this is the first comprehensive norm-uniform analysis of the Lipschitz continuity of softmax. We demonstrate how the sharper constant directly improves a range of existing theoretical results on robustness and convergence. We further validate the sharpness of the $1/2$ Lipschitz constant of the softmax operator through empirical studies on attention-based architectures (ViT, GPT-2, Qwen3-8B) and on stochastic policies in reinforcement learning.
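A quick numerical probe of the bound: the sketch below (pure Python; the helper names are hypothetical) samples nearby input pairs and records the largest ratio $\|\mathrm{softmax}(x) - \mathrm{softmax}(y)\|_p / \|x - y\|_p$. Sampling only yields a lower bound on the Lipschitz constant, so it illustrates rather than proves the $1/2$ bound. For $p = 1$ and $p = \infty$ a short argument is available: the Jacobian $J = \mathrm{diag}(s) - s s^\top$, with $s = \mathrm{softmax}(x)$, is symmetric and has absolute row sums $2 s_i (1 - s_i) \le 1/2$.

```python
import math
import random


def softmax(x):
    # Shift by the max for numerical stability; the output is unchanged.
    m = max(x)
    e = [math.exp(v - m) for v in x]
    z = sum(e)
    return [v / z for v in e]


def lp_norm(v, p):
    # l_p norm for p >= 1; p = math.inf gives the max norm.
    if p == math.inf:
        return max(abs(t) for t in v)
    return sum(abs(t) ** p for t in v) ** (1.0 / p)


def max_observed_ratio(p, n=5, trials=5000, scale=3.0, step=0.1, seed=0):
    """Largest ||softmax(x) - softmax(y)||_p / ||x - y||_p over sampled pairs.

    Pairs are nearby (y = x + small Gaussian noise), so the ratio tracks the
    local behaviour of softmax. The result is only an empirical lower bound
    on the true Lipschitz constant.
    """
    rng = random.Random(seed)
    best = 0.0
    for _ in range(trials):
        x = [rng.uniform(-scale, scale) for _ in range(n)]
        y = [a + rng.gauss(0.0, step) for a in x]
        den = lp_norm([a - b for a, b in zip(x, y)], p)
        if den == 0.0:
            continue
        num = lp_norm([a - b for a, b in zip(softmax(x), softmax(y))], p)
        best = max(best, num / den)
    return best


for p in (1, 1.5, 2, 3, math.inf):
    print(p, round(max_observed_ratio(p), 4))
```

Every printed ratio should stay at or below $0.5$; how close it gets depends on how often the sampler lands near inputs where some $s_i \approx 1/2$.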

Plug-and-Play Linear Attention for Pre-trained Image and Video Restoration Models

Jun 10, 2025

Abstract:Multi-head self-attention (MHSA) has become a core component of modern computer vision models. However, its quadratic complexity in the input length poses a significant computational bottleneck in real-time and resource-constrained environments. We propose PnP-Nystra, a Nyström-based linear approximation of self-attention, developed as a plug-and-play (PnP) module that can be integrated into pre-trained image and video restoration models without retraining. As a drop-in replacement for MHSA, PnP-Nystra enables efficient acceleration in various window-based transformer architectures, including SwinIR, Uformer, and RVRT. Our experiments across diverse image and video restoration tasks, including denoising, deblurring, and super-resolution, demonstrate that PnP-Nystra achieves a 2-4x speed-up on an NVIDIA RTX 4090 GPU and a 2-5x speed-up in CPU inference. Despite these significant gains, the method incurs a maximum PSNR drop of only 1.5 dB across all evaluated tasks. To the best of our knowledge, we are the first to demonstrate linear attention functioning as a training-free substitute for MHSA in restoration models.
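To make the Nyström idea concrete, here is a minimal NumPy sketch of a generic Nyström approximation of softmax attention in the style of Nyströmformer, using segment means of the queries and keys as landmarks. This is an assumption for illustration: the abstract does not specify how PnP-Nystra chooses landmarks or handles windows, and the function names are hypothetical. The product is evaluated right to left so that no $n \times n$ matrix is formed; with $m$ landmarks the cost grows linearly in $n$.

```python
import numpy as np


def softmax_rows(a):
    # Row-wise softmax with a max-shift for numerical stability.
    a = a - a.max(axis=-1, keepdims=True)
    e = np.exp(a)
    return e / e.sum(axis=-1, keepdims=True)


def exact_attention(q, k, v):
    # Standard scaled dot-product attention: O(n^2) in the number of tokens n.
    scale = 1.0 / np.sqrt(q.shape[-1])
    return softmax_rows(q @ k.T * scale) @ v


def nystrom_attention(q, k, v, num_landmarks):
    # Nystrom-style approximation with segment-mean landmarks (an assumption).
    n, d = q.shape
    if n % num_landmarks != 0:
        raise ValueError("this sketch assumes n is divisible by num_landmarks")
    seg = n // num_landmarks
    q_land = q.reshape(num_landmarks, seg, d).mean(axis=1)  # (m, d)
    k_land = k.reshape(num_landmarks, seg, d).mean(axis=1)  # (m, d)
    scale = 1.0 / np.sqrt(d)
    f = softmax_rows(q @ k_land.T * scale)       # (n, m)
    a = softmax_rows(q_land @ k_land.T * scale)  # (m, m)
    b = softmax_rows(q_land @ k.T * scale)       # (m, n)
    # Multiply right to left so that no n x n matrix is ever formed.
    return f @ (np.linalg.pinv(a) @ (b @ v))


rng = np.random.default_rng(0)
q, k, v = rng.standard_normal((3, 64, 16))
exact = exact_attention(q, k, v)
approx = nystrom_attention(q, k, v, num_landmarks=8)
print(np.abs(exact - approx).max())
```

When every token is its own landmark ($m = n$), the approximation reduces to exact attention, since $A A^{+} A = A$ for the Moore-Penrose pseudoinverse; smaller $m$ trades accuracy for speed.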

Detecting Localized Deepfake Manipulations Using Action Unit-Guided Video Representations

Mar 28, 2025

Abstract:With rapid advancements in generative modeling, deepfake techniques are increasingly narrowing the gap between real and synthetic videos, raising serious privacy and security concerns. Beyond traditional face swapping and reenactment, an emerging trend in recent state-of-the-art deepfake generation methods involves localized edits, such as subtle manipulations of specific facial features like raising eyebrows, altering eye shapes, or modifying mouth expressions. These fine-grained manipulations pose a significant challenge for existing detection models, which struggle to capture such localized variations. To the best of our knowledge, this work presents the first detection approach explicitly designed to generalize to localized edits in deepfake videos by leveraging spatiotemporal representations guided by facial action units. Our method uses a cross-attention-based fusion of representations learned from pretext tasks such as random masking and action unit detection to create an embedding that effectively encodes subtle, localized changes. Comprehensive evaluations across multiple deepfake generation methods demonstrate that our approach, despite being trained solely on the traditional FF+ dataset, sets a new benchmark in detecting recent deepfake-generated videos with fine-grained local edits, achieving a $20\%$ improvement in accuracy over current state-of-the-art detection methods. Additionally, our method delivers competitive performance on standard datasets, highlighting its robustness and generalization across diverse types of local and global forgeries.
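The abstract describes fusing two learned representations with cross-attention. A minimal NumPy sketch of such a fusion step follows, in which tokens from one branch attend to tokens from the other and the attended features are added back residually. The projection matrices, the residual connection, and the branch names are assumptions for illustration, not the authors' architecture.

```python
import numpy as np


def softmax_rows(a):
    # Row-wise softmax with a max-shift for numerical stability.
    a = a - a.max(axis=-1, keepdims=True)
    e = np.exp(a)
    return e / e.sum(axis=-1, keepdims=True)


def cross_attention_fuse(x_query, x_context, w_q, w_k, w_v):
    """Fuse two token streams: tokens of x_query attend to tokens of x_context.

    x_query:   (n, d) tokens from one branch (e.g. a masking-pretext encoder).
    x_context: (m, d) tokens from another (e.g. an action-unit encoder).
    Returns (n, d): x_query plus a residual of attended context features.
    """
    q = x_query @ w_q
    k = x_context @ w_k
    v = x_context @ w_v
    weights = softmax_rows(q @ k.T / np.sqrt(q.shape[-1]))  # (n, m), rows sum to 1
    return x_query + weights @ v


rng = np.random.default_rng(0)
d = 8
w_q, w_k, w_v = rng.standard_normal((3, d, d)) / np.sqrt(d)
masking_tokens = rng.standard_normal((10, d))  # hypothetical branch outputs
au_tokens = rng.standard_normal((6, d))
fused = cross_attention_fuse(masking_tokens, au_tokens, w_q, w_k, w_v)
print(fused.shape)  # (10, 8)
```

Because each row of the attention weights sums to one, every query token receives a convex combination of the projected context tokens.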

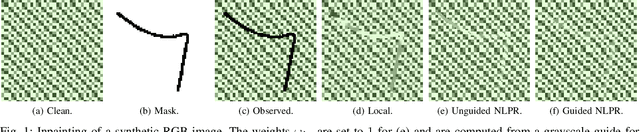

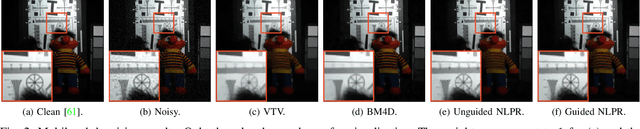

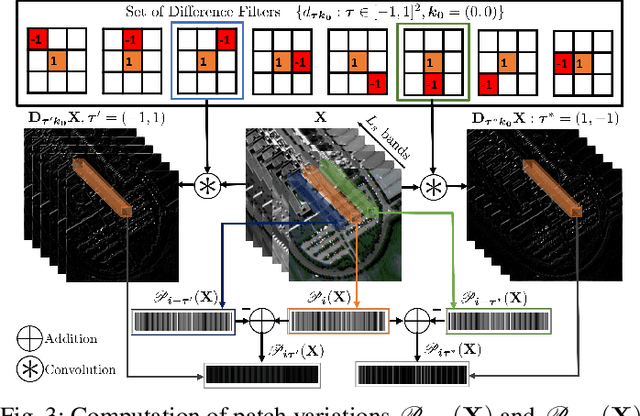

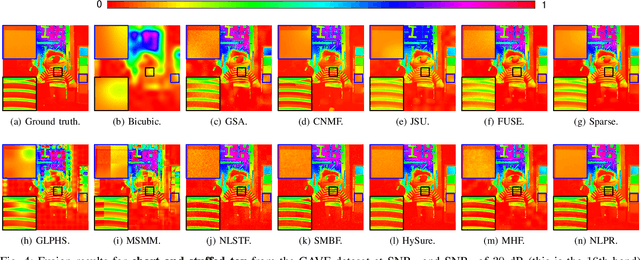

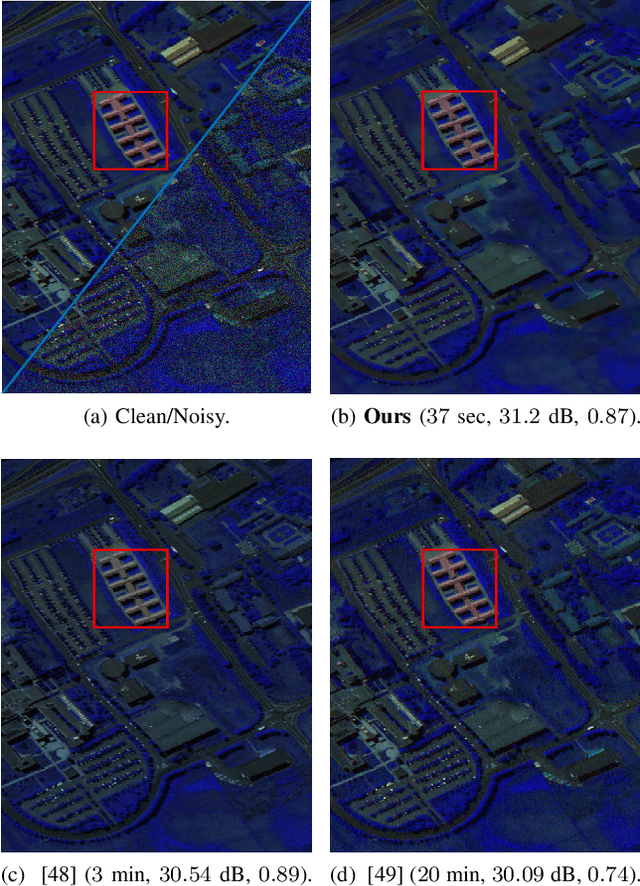

Guided Nonlocal Patch Regularization and Efficient Filtering-Based Inversion for Multiband Fusion

Oct 09, 2022

Abstract:In multiband fusion, an image with high spatial but low spectral resolution is combined with an image with low spatial but high spectral resolution to produce a single multiband image with high spatial and spectral resolution. This problem arises in remote sensing applications such as pansharpening (MS+PAN), hyperspectral sharpening (HS+PAN), and HS-MS fusion (HS+MS). Remote sensing images are textured and have repetitive structures. Motivated by nonlocal patch-based methods for image restoration, we propose a convex regularizer that (i) takes into account long-distance correlations, (ii) penalizes patch variation, which is more effective than pixel variation for capturing texture information, and (iii) uses the higher spatial resolution image as a guide image for weight computation. We develop an efficient ADMM algorithm for optimizing the regularizer along with a standard least-squares loss function derived from the imaging model. The novelty of our algorithm is that by expressing patch variation as filtering operations and by judiciously splitting the original variables and introducing latent variables, we are able to solve the ADMM subproblems efficiently using FFT-based convolution and soft-thresholding. In terms of reconstruction quality, our method is shown to outperform state-of-the-art variational and deep learning techniques.
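The two ADMM building blocks named in the abstract, an FFT-based solve for the quadratic subproblem and soft-thresholding for the $\ell_1$ subproblem, can be illustrated on a simpler problem. The sketch below assumes 1-D total-variation denoising with periodic boundaries as a stand-in for the paper's guided patch regularizer; because the difference operator $D$ is a circular convolution, $I + \rho D^\top D$ is diagonalized by the FFT. The function names are hypothetical.

```python
import numpy as np


def soft_threshold(z, tau):
    # Proximal operator of tau * ||.||_1: elementwise shrinkage toward zero.
    return np.sign(z) * np.maximum(np.abs(z) - tau, 0.0)


def tv_denoise_admm(b, lam, rho=1.0, iters=200):
    """Minimize 0.5 * ||x - b||^2 + lam * ||D x||_1 with ADMM (split z = D x).

    D is the circular forward difference, (D x)[i] = x[i+1] - x[i], which is a
    circular convolution with the filter h below.
    """
    n = b.size
    h = np.zeros(n)
    h[0], h[-1] = -1.0, 1.0
    # Eigenvalues of I + rho * D^T D in the Fourier basis.
    denom = 1.0 + rho * np.abs(np.fft.fft(h)) ** 2
    z = np.zeros(n)
    u = np.zeros(n)
    x = b.astype(float)
    for _ in range(iters):
        # x-update: solve (I + rho D^T D) x = b + rho D^T (z - u) with one FFT pair.
        w = z - u
        rhs = b + rho * (np.roll(w, 1) - w)  # D^T w = w[i-1] - w[i]
        x = np.real(np.fft.ifft(np.fft.fft(rhs) / denom))
        dx = np.roll(x, -1) - x
        # z-update: soft-thresholding.
        z = soft_threshold(dx + u, lam / rho)
        # Scaled dual update.
        u = u + dx - z
    return x


rng = np.random.default_rng(0)
clean = np.repeat([0.0, 1.0, 0.0], 50)
noisy = clean + 0.1 * rng.standard_normal(clean.size)
denoised = tv_denoise_admm(noisy, lam=0.2)
print(np.abs(noisy - clean).mean(), np.abs(denoised - clean).mean())
```

Each iteration costs one forward and one inverse FFT plus elementwise operations, which is what makes the splitting attractive.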

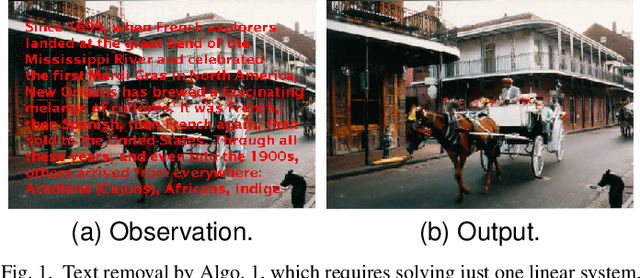

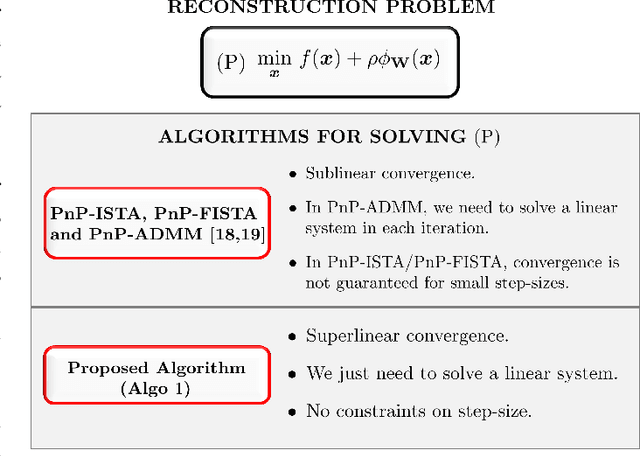

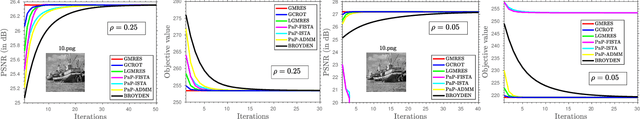

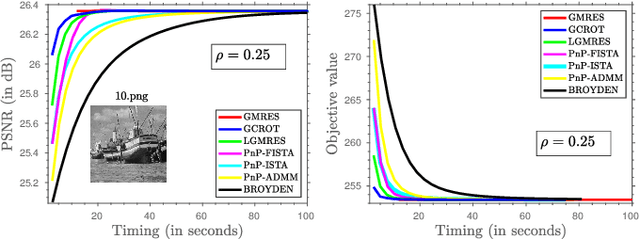

Plug-and-Play Regularization using Linear Solvers

Sep 16, 2022

Abstract:There has been extensive research on the design of image regularizers over the years, from simple Tikhonov and Laplacian regularizers to sophisticated sparsity-based and CNN-based regularizers. Coupled with a model-based loss function, these are typically used for image reconstruction within an optimization framework. The technical challenge is to develop a regularizer that can accurately model realistic images and be optimized efficiently along with the loss function. Motivated by the recent plug-and-play paradigm for image regularization, we construct a quadratic regularizer whose reconstruction capability is competitive with state-of-the-art regularizers. The novelty of the regularizer is that, unlike classical regularizers, the quadratic objective function is derived from the observed data. Since the regularizer is quadratic, we can reduce the optimization to solving a linear system for applications such as superresolution, deblurring, and inpainting. In particular, we show that using iterative Krylov solvers, we can converge to the solution in a few iterations, where each iteration requires an application of the forward operator and a linear denoiser. The surprising finding is that we can get close to deep learning methods in terms of reconstruction quality. To the best of our knowledge, the possibility of achieving near state-of-the-art performance using a linear solver is novel.
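The abstract does not give the exact form of the data-derived quadratic regularizer. Assuming, purely for illustration, a regularizer $\tfrac{\lambda}{2} x^\top (I - W) x$ built from a symmetric linear denoiser $W$, reconstruction reduces to the linear system $(A^\top A + \lambda (I - W))\, x = A^\top b$, which conjugate gradient (a Krylov solver) handles using only applications of $A$, $A^\top$, and $W$. The denoiser and the masking operator below are hypothetical stand-ins.

```python
import numpy as np


def smoothing_denoiser(x):
    # Hypothetical symmetric linear denoiser W: circular [1/4, 1/2, 1/4] smoothing.
    # Its eigenvalues lie in [0, 1], so I - W is positive semidefinite.
    return 0.25 * np.roll(x, 1) + 0.5 * x + 0.25 * np.roll(x, -1)


def conjugate_gradient(apply_m, rhs, iters=1000, tol=1e-10):
    # Conjugate gradient for a symmetric positive-definite operator apply_m.
    x = np.zeros_like(rhs)
    r = rhs - apply_m(x)
    p = r.copy()
    rs = r @ r
    for _ in range(iters):
        if np.sqrt(rs) < tol:
            break
        mp = apply_m(p)
        alpha = rs / (p @ mp)
        x = x + alpha * p
        r = r - alpha * mp
        rs_new = r @ r
        p = r + (rs_new / rs) * p
        rs = rs_new
    return x


def reconstruct(b, mask, lam):
    # Inpainting: A = diag(mask), so A^T A = diag(mask) and A^T b = mask * b.
    # Minimizing 0.5 * ||A x - b||^2 + 0.5 * lam * x^T (I - W) x gives
    # (A^T A + lam * (I - W)) x = A^T b; each CG step applies A and W once.
    def apply_m(x):
        return mask * x + lam * (x - smoothing_denoiser(x))

    return conjugate_gradient(apply_m, mask * b)


rng = np.random.default_rng(0)
n = 100
truth = np.sin(np.linspace(0.0, 2.0 * np.pi, n, endpoint=False))
mask = (rng.random(n) < 0.5).astype(float)
b = mask * truth
x = reconstruct(b, mask, lam=0.5)
print(np.abs(x - truth).max())
```

The system matrix is positive definite as long as at least one pixel is observed, since the only null direction of $I - W$ here is the constant vector.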

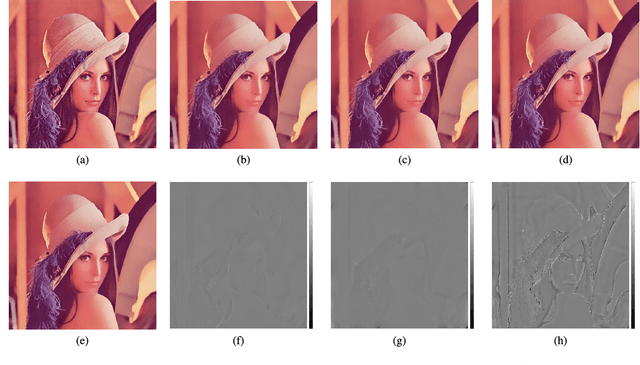

On the Construction of Averaged Deep Denoisers for Image Regularization

Jul 15, 2022

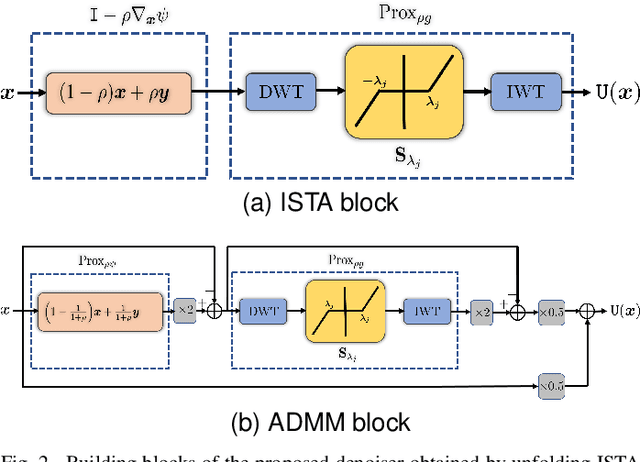

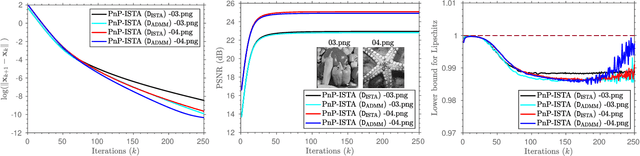

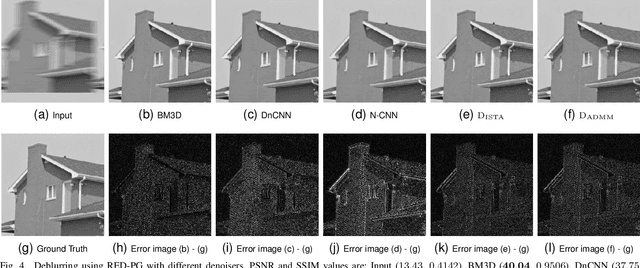

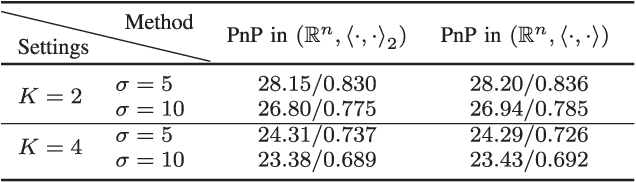

Abstract:Plug-and-Play (PnP) and Regularization by Denoising (RED) are recent paradigms for image reconstruction that can leverage the power of modern denoisers for image regularization. In particular, these algorithms have been shown to deliver state-of-the-art reconstructions using CNN denoisers. Since the regularization is performed in an ad-hoc manner in PnP and RED, understanding their convergence has been an active research area. Several recent works have observed that iterate convergence of PnP and RED can be guaranteed if the denoiser is averaged or nonexpansive. However, integrating nonexpansivity with gradient-based learning is challenging, since checking nonexpansivity is known to be computationally intractable. Using numerical examples, we show that existing CNN denoisers violate the nonexpansive property and can cause the PnP iterations to diverge. Moreover, existing algorithms for training nonexpansive denoisers either cannot guarantee nonexpansivity of the final denoiser or are computationally intensive. In this work, we propose to construct averaged (contractive) image denoisers by unfolding ISTA and ADMM iterations applied to wavelet denoising, and demonstrate that their regularization capacity for PnP and RED can match that of CNN denoisers. To the best of our knowledge, this is the first work to propose a simple framework for training provably averaged (contractive) denoisers using unfolding networks.
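The unfolded ISTA/ADMM networks are not specified in the abstract, so the sketch below shows only the simplest member of the family they build on: soft-thresholding the detail coefficients of a one-level orthonormal Haar transform. That map is a proximal operator, hence firmly nonexpansive (that is, $1/2$-averaged). The check samples random pairs for violations of $\|D(x) - D(y)\|^2 \le \langle D(x) - D(y), x - y \rangle$; in line with the remark that checking nonexpansivity is intractable, random sampling can only expose violations, never certify the property. All names are hypothetical.

```python
import numpy as np


def haar_forward(x):
    # One level of the orthonormal Haar transform (len(x) must be even).
    even, odd = x[0::2], x[1::2]
    return (even + odd) / np.sqrt(2.0), (even - odd) / np.sqrt(2.0)


def haar_inverse(approx, detail):
    x = np.empty(2 * approx.size)
    x[0::2] = (approx + detail) / np.sqrt(2.0)
    x[1::2] = (approx - detail) / np.sqrt(2.0)
    return x


def wavelet_denoiser(y, tau):
    # Soft-threshold the detail coefficients. In orthonormal coordinates this is
    # the proximal operator of tau * ||detail||_1, hence firmly nonexpansive.
    approx, detail = haar_forward(y)
    shrunk = np.sign(detail) * np.maximum(np.abs(detail) - tau, 0.0)
    return haar_inverse(approx, shrunk)


def worst_firm_violation(denoiser, n=64, trials=2000, seed=0):
    # Max over sampled pairs of ||D(x) - D(y)||^2 - <D(x) - D(y), x - y>.
    # A positive value would be a counterexample; <= 0 proves nothing in general.
    rng = np.random.default_rng(seed)
    worst = -np.inf
    for _ in range(trials):
        x, y = rng.standard_normal((2, n))
        dd = denoiser(x) - denoiser(y)
        worst = max(worst, dd @ dd - dd @ (x - y))
    return worst


print(worst_firm_violation(lambda s: wavelet_denoiser(s, 0.5)))
```

The same sampling check can be pointed at any trained denoiser; a positive result is a concrete certificate that the denoiser is not firmly nonexpansive.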

Fixed-Point and Objective Convergence of Plug-and-Play Algorithms

Apr 21, 2021

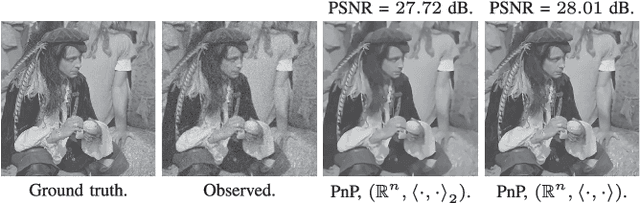

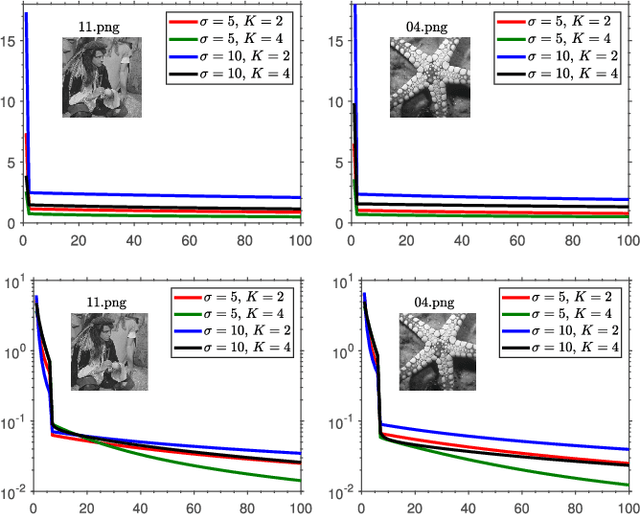

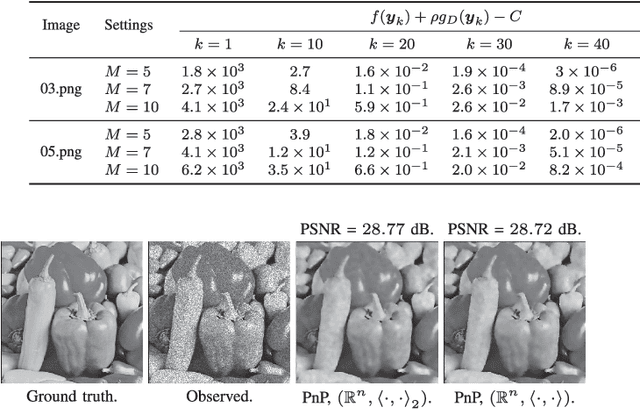

Abstract:A standard model for image reconstruction involves the minimization of a data-fidelity term along with a regularizer, where the optimization is performed using proximal algorithms such as ISTA and ADMM. In plug-and-play (PnP) regularization, the proximal operator (associated with the regularizer) in ISTA and ADMM is replaced by a powerful image denoiser. Although PnP regularization works surprisingly well in practice, its theoretical convergence (whether the PnP iterates are guaranteed to converge, and whether they minimize some objective function) is not completely understood, even for simple linear denoisers such as nonlocal means. In particular, while there are works where either iterate or objective convergence is established separately, to our knowledge a simultaneous guarantee on iterate and objective convergence is not available for any denoiser. In this paper, we establish both forms of convergence for a special class of linear denoisers. Notably, unlike existing works where the focus is on symmetric denoisers, our analysis covers non-symmetric denoisers such as nonlocal means and almost any convex data-fidelity term. The novelty in this regard is that we make use of the convergence theory of averaged operators and we work with a special inner product (and norm) derived from the linear denoiser; the latter requires us to appropriately define the gradient and proximal operators associated with the data-fidelity term. We validate our convergence results using image reconstruction experiments.

* Published in IEEE Transactions on Computational Imaging
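The abstract does not spell out the special inner product. One standard choice for kernel denoisers of the form $W = D^{-1} K$ (which covers nonlocal means), with $K$ symmetric and $D = \mathrm{diag}(K\mathbf{1})$, is $\langle x, y \rangle_D = x^\top D y$, in which $W$ becomes self-adjoint because $D W = K$. The sketch below, built on a hypothetical 1-D kernel, checks this numerically along with the resulting nonexpansiveness of $W$ in the $D$-norm.

```python
import numpy as np


def nlm_like_matrix(signal, h_space=2.0, h_range=0.2):
    """Hypothetical NLM-style linear denoiser W = D^{-1} K for a 1-D signal.

    K is a Gaussian kernel on (position, value) features, hence symmetric and
    positive semidefinite; D = diag(K 1) makes W row-stochastic.
    """
    pos = np.arange(signal.size, dtype=float)
    dist2 = (((pos[:, None] - pos[None, :]) / h_space) ** 2
             + ((signal[:, None] - signal[None, :]) / h_range) ** 2)
    k = np.exp(-0.5 * dist2)
    d = k.sum(axis=1)
    return k / d[:, None], d


def d_inner(x, y, d):
    # Inner product <x, y>_D = x^T D y induced by the denoiser's normalization.
    return x @ (d * y)


rng = np.random.default_rng(0)
guide = rng.random(30)
w, d = nlm_like_matrix(guide)
x, y = rng.standard_normal((2, 30))

# W is not symmetric, but it is self-adjoint in <., .>_D because D W = K.
print(np.allclose(w, w.T))
print(np.isclose(d_inner(w @ x, y, d), d_inner(x, w @ y, d)))

# W is similar to the symmetric D^{-1/2} K D^{-1/2}, so its eigenvalues are
# real; they lie in [0, 1], which makes W nonexpansive in the D-norm.
sym = (d ** -0.5)[:, None] * (d[:, None] * w) * (d ** -0.5)[None, :]
print(np.linalg.eigvalsh(sym).min(), np.linalg.eigvalsh(sym).max())
```

In the standard Euclidean inner product the same $W$ need not be self-adjoint, which is why switching to $\langle \cdot, \cdot \rangle_D$ is convenient for averaged-operator arguments.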

Fast High-Dimensional Kernel Filtering

Jan 18, 2019

Abstract:The bilateral and nonlocal means filters are instances of kernel-based filters that are popularly used in image processing. It was recently shown that fast and accurate bilateral filtering of grayscale images can be performed using a low-rank approximation of the kernel matrix. More specifically, based on the eigendecomposition of the kernel matrix, the overall filtering was approximated using spatial convolutions, for which efficient algorithms are available. Unfortunately, this technique cannot be scaled to high-dimensional data such as color and hyperspectral images. This is because, for such data, one would need to compute and store a large matrix and perform its eigendecomposition. We show how this problem can be solved using the Nyström method, which is generally used for approximating the eigendecomposition of large matrices. The resulting algorithm can also be used for nonlocal means filtering. We demonstrate the effectiveness of our proposal for bilateral and nonlocal means filtering of color and hyperspectral images. In particular, our method is shown to be competitive with state-of-the-art fast algorithms, and moreover it comes with a theoretical guarantee on the approximation error.
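The abstract gives the idea but not the details. The sketch below assumes a 1-D multichannel signal (standing in for a color image), Gaussian spatial and range kernels, and landmarks chosen by the caller; it shows how a Nyström factorization $\kappa(f_i, f_j) \approx \phi_i^\top \phi_j$ of the range kernel turns the filter into a few spatial convolutions. The error guarantee mentioned above is not reproduced, and all names are hypothetical.

```python
import numpy as np


def range_kernel(a, b, sigma_r):
    # kappa(a_i, b_j) = exp(-||a_i - b_j||^2 / (2 sigma_r^2)) for all row pairs.
    d2 = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-d2 / (2.0 * sigma_r ** 2))


def spatial_kernel(radius, sigma_s):
    t = np.arange(-radius, radius + 1, dtype=float)
    return np.exp(-t ** 2 / (2.0 * sigma_s ** 2))


def bilateral_nystrom(f, landmarks, radius=3, sigma_s=2.0, sigma_r=0.1, eps=1e-10):
    """Bilateral filter of a 1-D signal f (n, channels) using a Nystrom
    factorization kappa(f_i, f_j) ~ phi_i . phi_j of the range kernel.
    Each rank-one term turns the sum over neighbours into one spatial convolution.
    """
    g = spatial_kernel(radius, sigma_s)
    if f.shape[0] < g.size:
        raise ValueError("signal must be at least as long as the spatial kernel")

    def conv(x):
        return np.convolve(x, g, mode="same")

    c = range_kernel(f, landmarks, sigma_r)          # (n, m)
    w = range_kernel(landmarks, landmarks, sigma_r)  # (m, m)
    lam, u = np.linalg.eigh(w)
    keep = lam > eps * lam.max()                     # drop numerically null directions
    phi = (c @ u[:, keep]) / np.sqrt(lam[keep])      # (n, r): phi phi^T = C W^+ C^T
    num = np.zeros(f.shape, dtype=float)
    den = np.zeros(f.shape[0])
    for k in range(phi.shape[1]):
        pk = phi[:, k]
        den += pk * conv(pk)
        for ch in range(f.shape[1]):
            num[:, ch] += pk * conv(pk * f[:, ch])
    return num / den[:, None]


def bilateral_brute_force(f, radius=3, sigma_s=2.0, sigma_r=0.1):
    # Direct evaluation with the exact range kernel, for comparison.
    g = spatial_kernel(radius, sigma_s)
    n = f.shape[0]
    out = np.zeros(f.shape, dtype=float)
    for i in range(n):
        j = np.arange(max(0, i - radius), min(n, i + radius + 1))
        wts = g[j - i + radius] * range_kernel(f[i:i + 1], f[j], sigma_r)[0]
        out[i] = wts @ f[j] / wts.sum()
    return out


rng = np.random.default_rng(0)
f = rng.random((200, 3))  # stand-in for a 200-pixel color signal
landmarks = f[rng.choice(200, size=32, replace=False)]
approx = bilateral_nystrom(f, landmarks, sigma_r=0.5)
exact = bilateral_brute_force(f, sigma_r=0.5)
print(np.abs(approx - exact).max())
```

With every pixel used as a landmark the factorization is exact; with fewer landmarks the approximate denominator is not guaranteed to stay positive, so landmark count and placement matter in practice.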

Fast High-Dimensional Bilateral and Nonlocal Means Filtering

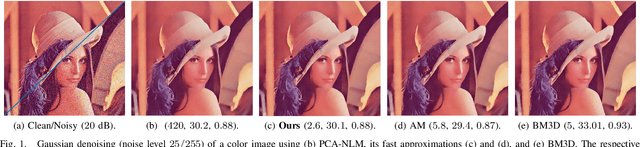

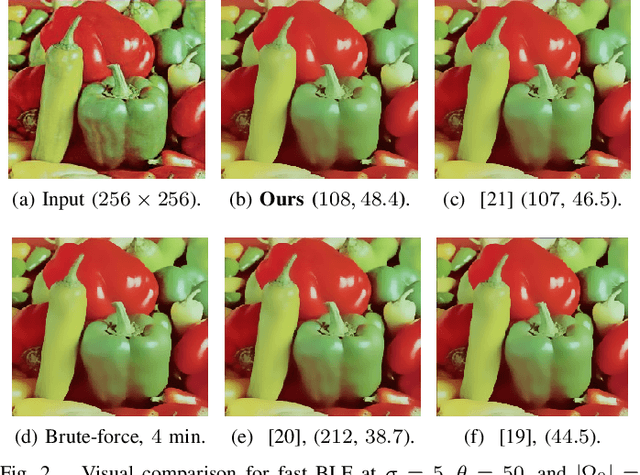

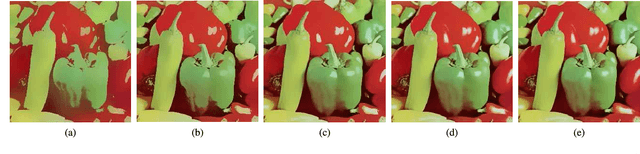

Nov 06, 2018

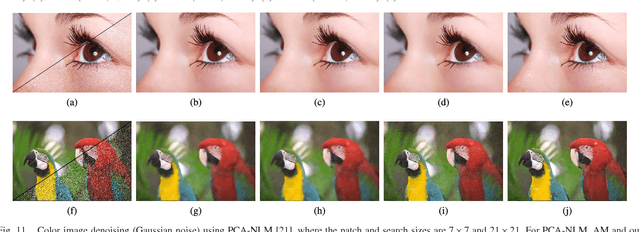

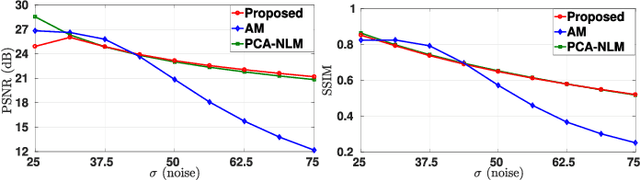

Abstract:Existing fast algorithms for bilateral and nonlocal means filtering mostly work with grayscale images. They cannot easily be extended to high-dimensional data such as color and hyperspectral images, patch-based data, flow-fields, etc. In this paper, we propose a fast algorithm for high-dimensional bilateral and nonlocal means filtering. Unlike existing approaches, where the focus is on approximating the data (using quantization) or the filter kernel (via analytic expansions), we locally approximate the kernel using weighted and shifted copies of a Gaussian, where the weights and shifts are inferred from the data. The algorithm emerging from the proposed approximation essentially involves clustering and fast convolutions, and is easy to implement. Moreover, a variant of our algorithm comes with a guarantee (bound) on the approximation error, which is not enjoyed by existing algorithms. We present some results for high-dimensional bilateral and nonlocal means filtering to demonstrate the speed and accuracy of our proposal. We also show that our algorithm can outperform state-of-the-art fast approximations in terms of accuracy and timing.
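The kernel approximation described above (weighted, shifted Gaussians inferred from the data) is not reproduced here. As a simpler stand-in that shares the "clustering plus fast convolutions" structure, the sketch below replaces the center pixel's value by its k-means cluster center inside the range kernel; for each cluster the filter then reduces to spatial convolutions. It assumes a 1-D multichannel signal, carries no error guarantee, and all names are hypothetical.

```python
import numpy as np


def kmeans(x, k, iters=20):
    # Plain Lloyd iterations; initial centers are k evenly spaced samples.
    centers = x[np.linspace(0, len(x) - 1, k).astype(int)].astype(float)

    def assign(c):
        return ((x[:, None, :] - c[None, :, :]) ** 2).sum(axis=-1).argmin(axis=1)

    for _ in range(iters):
        labels = assign(centers)
        for c in range(k):
            if np.any(labels == c):  # keep the old center if a cluster empties
                centers[c] = x[labels == c].mean(axis=0)
    return centers, assign(centers)


def bilateral_clustered(f, k, radius=3, sigma_s=2.0, sigma_r=0.1):
    """Approximate bilateral filter of a 1-D signal f with shape (n, channels).

    In the range kernel, the center value f_i is replaced by its cluster
    center mu_c. For each cluster the sums over neighbours j then become
    spatial convolutions: O(k * channels) convolutions in total.
    """
    t = np.arange(-radius, radius + 1, dtype=float)
    g = np.exp(-t ** 2 / (2.0 * sigma_s ** 2))
    if f.shape[0] < g.size:
        raise ValueError("signal must be at least as long as the spatial kernel")
    centers, labels = kmeans(f, k)
    out = np.zeros(f.shape, dtype=float)
    for c in range(k):
        idx = labels == c
        if not np.any(idx):
            continue
        # kappa(mu_c, f_j) for every pixel j.
        r = np.exp(-((f - centers[c]) ** 2).sum(axis=-1) / (2.0 * sigma_r ** 2))
        den = np.convolve(r, g, mode="same")
        for ch in range(f.shape[1]):
            num = np.convolve(r * f[:, ch], g, mode="same")
            out[idx, ch] = num[idx] / den[idx]
    return out


rng = np.random.default_rng(0)
f = np.repeat(rng.random((5, 3)), 20, axis=0) + 0.02 * rng.standard_normal((100, 3))
out = bilateral_clustered(f, k=5)
print(out.shape)  # (100, 3)
```

If the signal takes only $k$ distinct values and k-means recovers them, this approximation coincides with the exact bilateral filter; otherwise its accuracy depends on how tight the clusters are relative to $\sigma_r$.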
