Pranav Poduval

Functional Space Variational Inference for Uncertainty Estimation in Computer Aided Diagnosis

May 28, 2020

Abstract: Deep neural networks have revolutionized medical image analysis and disease diagnosis. Despite their impressive performance, it is difficult to generate well-calibrated probabilistic outputs for such networks, which makes them uninterpretable black boxes. Bayesian neural networks provide a principled approach for modelling uncertainty and increasing patient safety, but they have a large computational overhead and provide limited improvement in calibration. In this work, by taking skin lesion classification as an example task, we show that by shifting Bayesian inference to the functional space we can craft meaningful priors that give better calibrated uncertainty estimates at a much lower computational cost.

* Meaningful priors on the functional space, rather than the weight space, result in well-calibrated uncertainty estimates.

Uncertainty Estimation in Cancer Survival Prediction

Mar 25, 2020

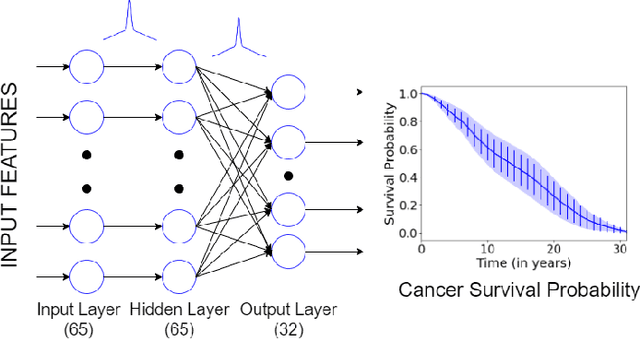

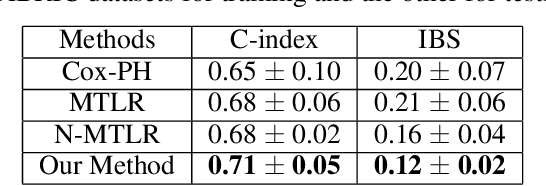

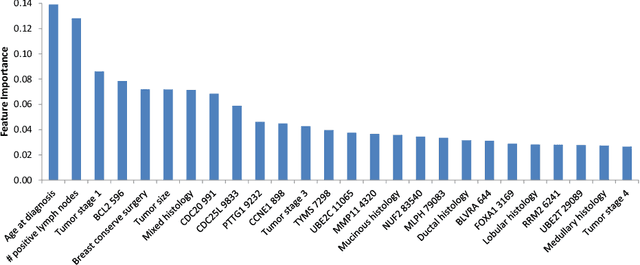

Abstract: Survival models are used in various fields, such as the development of cancer treatment protocols. Although many statistical and machine learning models have been proposed to achieve accurate survival predictions, little attention has been paid to obtaining well-calibrated uncertainty estimates associated with each prediction. The currently popular models are opaque and untrustworthy in that they often express high confidence even on those test cases that are not similar to the training samples, and even when their predictions are wrong. We propose a Bayesian framework for survival models that not only gives more accurate survival predictions but also quantifies the survival uncertainty better. Our approach is a novel combination of variational inference for uncertainty estimation, neural multi-task logistic regression for estimating nonlinear and time-varying risk models, and an additional sparsity-inducing prior to work with high-dimensional data.

Unreliable Multi-Armed Bandits: A Novel Approach to Recommendation Systems

Nov 14, 2019

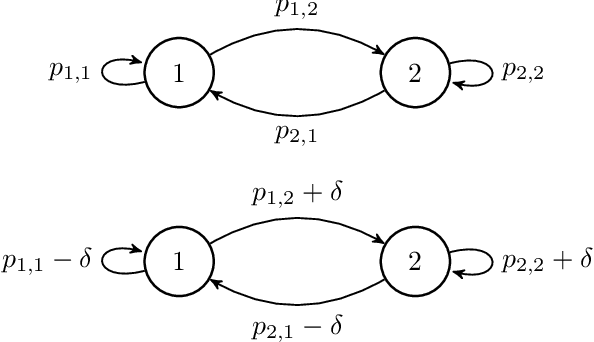

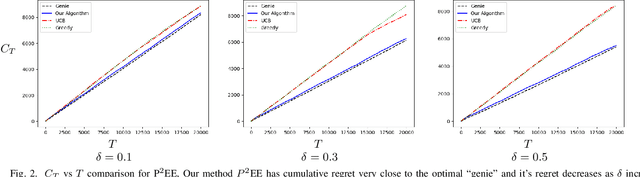

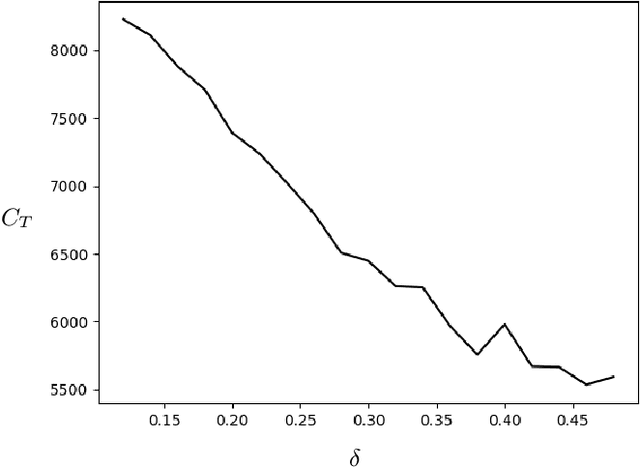

Abstract: We use a novel modification of Multi-Armed Bandits to create a new model for recommendation systems. We model the recommendation system as a bandit seeking to maximize reward by pulling on arms with unknown rewards. The catch, however, is that this bandit can only access these arms through an unreliable intermediate that has some level of autonomy while choosing its arms. For example, on a streaming website the user has a lot of autonomy while choosing the content they want to watch. The streaming site can use targeted advertising as a means to bias the opinions of these users. Here the streaming site is the bandit aiming to maximize reward and the user is the unreliable intermediate. We model the intermediate as accessing states via a Markov chain. The bandit is allowed to perturb this Markov chain. We prove fundamental theorems for this setting, after which we show a close-to-optimal Explore-Commit algorithm.
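For readers unfamiliar with the Explore-Commit idea mentioned in the abstract, the following is a minimal sketch of the textbook explore-then-commit strategy for an ordinary multi-armed bandit. It is not the paper's algorithm: it assumes the bandit pulls arms directly, with no unreliable intermediate and no Markov chain. The names `explore_then_commit`, `pull`, `m`, and the Bernoulli reward probabilities are all hypothetical choices made for illustration.

```python
import random


def explore_then_commit(pull, n_arms, horizon, m):
    """Textbook explore-then-commit (illustrative, not the paper's method).

    pull    -- callable taking an arm index and returning a numeric reward
    n_arms  -- number of arms
    horizon -- total number of pulls allowed
    m       -- number of exploratory pulls per arm
    Returns the committed arm and the full list of arms pulled.
    """
    totals = [0.0] * n_arms
    counts = [0] * n_arms
    history = []

    # Exploration phase: pull the arms round-robin, m times each
    # (or until the horizon runs out).
    for t in range(min(horizon, m * n_arms)):
        arm = t % n_arms
        totals[arm] += pull(arm)
        counts[arm] += 1
        history.append(arm)

    # Commit phase: pick the arm with the highest empirical mean reward
    # and pull it for every remaining round.
    means = [totals[a] / counts[a] if counts[a] else 0.0 for a in range(n_arms)]
    best = max(range(n_arms), key=lambda a: means[a])
    for _ in range(horizon - len(history)):
        pull(best)
        history.append(best)

    return best, history


# Usage with hypothetical Bernoulli arms (probabilities chosen arbitrarily).
rng = random.Random(0)
probs = [0.2, 0.7, 0.5]
best_arm, pulls = explore_then_commit(
    lambda a: 1.0 if rng.random() < probs[a] else 0.0,
    n_arms=len(probs),
    horizon=200,
    m=20,
)
print("committed to arm", best_arm)
```

The single parameter `m` controls the trade-off: a larger value makes a wrong commitment less likely but spends more pulls on arms that turn out to be poor.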
