Pierrick Philippe

IETR

Efficient Sub-pixel Motion Compensation in Learned Video Codecs

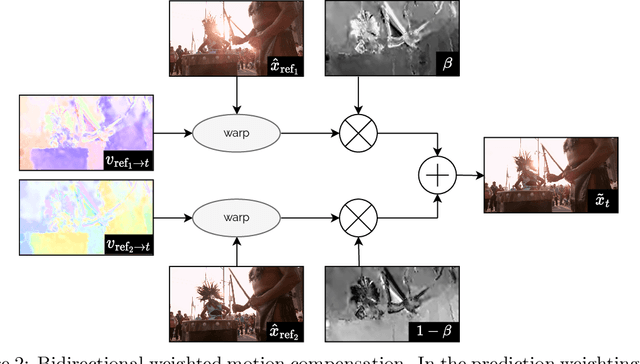

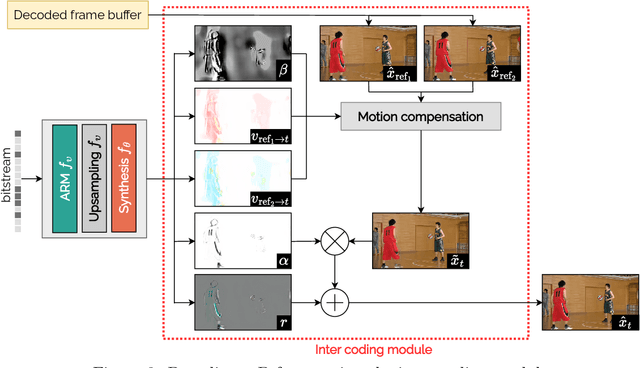

Jul 29, 2025

Abstract: Motion compensation is a key component of video codecs. Conventional codecs (HEVC and VVC) have carefully refined this coding step, with an important focus on sub-pixel motion compensation. On the other hand, learned codecs achieve sub-pixel motion compensation through simple bilinear filtering. This paper proposes to improve learned codec motion compensation by drawing inspiration from conventional codecs. It is shown that using more advanced interpolation filters, block-based motion information and finite motion accuracy leads to better compression performance and lower decoding complexity. Experimental results are provided on the Cool-chic video codec, where we demonstrate a rate decrease of more than 10% and a lowering of motion-related decoding complexity from 391 MAC per pixel to 214 MAC per pixel. All contributions are made open-source at https://github.com/Orange-OpenSource/Cool-Chic

Improved Encoding for Overfitted Video Codecs

Jan 28, 2025

Abstract: Overfitted neural video codecs offer a decoding complexity orders of magnitude smaller than their autoencoder counterparts. Yet, this low complexity comes at the cost of limited compression efficiency, in part due to their difficulty in capturing accurate motion information. This paper proposes to guide motion information learning with an optical flow estimator. A joint rate-distortion optimization is also introduced to improve rate distribution across the different frames. These contributions maintain a low decoding complexity of 1300 multiplications per pixel while offering compression performance close to the conventional codec HEVC and outperforming other overfitted codecs. This work is made open-source at https://orange-opensource.github.io/Cool-Chic/

Upsampling Improvement for Overfitted Neural Coding

Nov 28, 2024

Abstract: Neural image compression, based on auto-encoders and overfitted representations, relies on a latent representation of the coded signal. This representation needs to be compact and uses low-resolution feature maps. In the decoding process, those latents are upsampled and filtered using stacks of convolution filters and nonlinear elements to recover the decoded image. Therefore, the upsampling process is crucial in the design of a neural coding scheme and is of particular importance for overfitted codecs where the network parameters, including the upsampling filters, are part of the representation. This paper addresses the improvement of the upsampling process in order to reduce its complexity and limit the number of parameters. A new upsampling structure is presented whose improvements are illustrated within the Cool-Chic overfitted image coding framework. The proposed approach offers a rate reduction of 4.7%. The code is provided.

Overfitted image coding at reduced complexity

Mar 18, 2024

Abstract: Overfitted image codecs offer compelling compression performance and low decoder complexity, through the overfitting of a lightweight decoder for each image. Such codecs include Cool-chic, which presents image coding performance on par with VVC while requiring around 2000 multiplications per decoded pixel. This paper proposes to decrease Cool-chic encoding and decoding complexity. The encoding complexity is reduced by shortening Cool-chic training, up to the point where no overfitting is performed at all. It is also shown that a tiny neural decoder with 300 multiplications per pixel still outperforms HEVC. A near real-time CPU implementation of this decoder is made available at https://orange-opensource.github.io/Cool-Chic/.

Cool-chic video: Learned video coding with 800 parameters

Feb 06, 2024

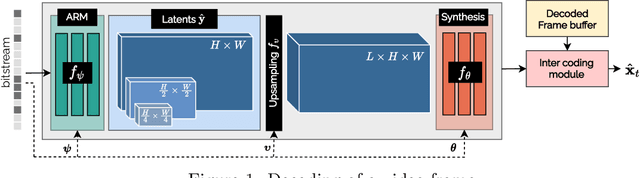

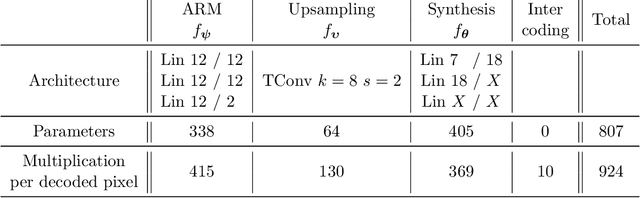

Abstract: We propose a lightweight learned video codec with 900 multiplications per decoded pixel and 800 parameters overall. To the best of our knowledge, this is one of the neural video codecs with the lowest decoding complexity. It is built upon the overfitted image codec Cool-chic and supplements it with an inter coding module to leverage the video's temporal redundancies. The proposed model is able to compress videos using both low-delay and random access configurations and achieves rate-distortion performance close to AVC while outperforming other overfitted codecs such as FFNeRV. The system is made open-source: orange-opensource.github.io/Cool-Chic.

Cool-Chic: Perceptually Tuned Low Complexity Overfitted Image Coder

Jan 04, 2024

Abstract: This paper summarises the design of the Cool-Chic candidate for the Challenge on Learned Image Compression. This candidate attempts to demonstrate that neural coding methods can lead to low-complexity, lightweight image decoders while still offering competitive performance. The approach is based on the previously published overfitted lightweight neural network Cool-Chic, further adapted to the human subjective viewing targeted in this challenge.

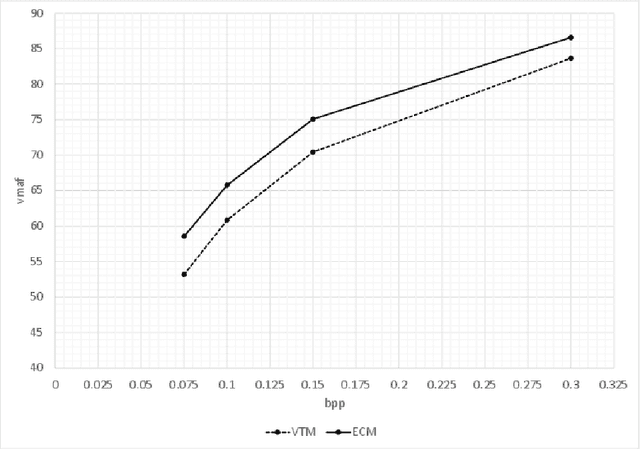

ED: Perceptually tuned Enhanced Compression Model

Jan 04, 2024

Abstract: This paper summarises the design of the candidate ED for the Challenge on Learned Image Compression 2024. This candidate aims at providing an anchor based on conventional coding technologies to the learning-based approaches mostly targeted in the challenge. The proposed candidate is based on the Enhanced Compression Model (ECM) developed at JVET, the Joint Video Experts Team of ITU-T VCEG and ISO/IEC MPEG. Here, ECM is adapted to the challenge objective: to maximise the perceived quality, the encoding is performed according to a perceptual metric, and the sequence selection is also performed in a perceptual manner to fit the target bits-per-pixel objectives. The primary objective of this candidate is to assess the recent developments in video coding standardisation and, in parallel, to evaluate the progress made by learning-based techniques. To this end, this paper explains how to generate coded images fulfilling the challenge requirements, in a reproducible way, targeting the maximum performance.

Video Quality Assessment and Coding Complexity of the Versatile Video Coding Standard

Oct 19, 2023

Abstract: In recent years, the proliferation of multimedia applications and formats, such as IPTV, Virtual Reality (VR, 360-degree), and point cloud videos, has presented new challenges to the video compression research community. Simultaneously, there has been a growing demand from users for higher resolutions and improved visual quality. To further enhance coding efficiency, a new video coding standard, Versatile Video Coding (VVC), was introduced in July 2020. This paper conducts a comprehensive analysis of coding performance and complexity for the latest VVC standard in comparison to its predecessor, High Efficiency Video Coding (HEVC). The study employs a diverse set of test sequences, covering both High Definition (HD) and Ultra High Definition (UHD) resolutions, and spans a wide range of bit-rates. These sequences are encoded using the reference software encoders of HEVC (HM) and VVC (VTM). The results consistently demonstrate that VVC outperforms HEVC, achieving bit-rate savings of up to 40% on the subjective quality scale, particularly at realistic bit-rates and quality levels. Objective quality metrics, including PSNR, SSIM, and VMAF, support these findings, revealing bit-rate savings ranging from 31% to 40%, depending on the video content, spatial resolution, and the selected quality metric. However, these improvements in coding efficiency come at the cost of significantly increased computational complexity. On average, our results indicate that the VVC decoding process is 1.5 times more complex, while the encoding process becomes at least eight times more complex than that of the HEVC reference encoder. Our simultaneous profiling of the two standards sheds light on the primary evolutionary differences between them and highlights the specific stages responsible for the observed increase in complexity.

Efficient Predictive Coding of Intra Prediction Modes

Oct 09, 2023

Abstract: The high efficiency video coding (HEVC) standard and the joint exploration model (JEM) codec incorporate 35 and 67 intra prediction modes (IPMs) respectively, which are essential for efficient compression of intra-coded blocks. These IPMs are transmitted to the decoder through a coding scheme. In our paper, we present an innovative approach to construct a dedicated coding scheme for IPM based on contextual information. This approach comprises three key steps: prediction, clustering, and coding, each of which has been enhanced by introducing new elements, namely, labels for prediction, tests for clustering, and codes for coding. In this context, we propose a method that uses a genetic algorithm to minimize the rate cost, aiming to derive the most efficient coding scheme while leveraging the available labels, tests, and codes. The resulting coding scheme, expressed as a binary tree, achieves the highest coding efficiency for a given level of complexity. In our experimental evaluation under the HEVC standard, we observed significant bitrate gains while maintaining coding efficiency under the JEM codec. These results demonstrate the potential of our approach to improve compression efficiency, particularly under the HEVC standard, while preserving the coding efficiency of the JEM codec.

Low-complexity Overfitted Neural Image Codec

Jul 24, 2023

Abstract: We propose a neural image codec at reduced complexity which overfits the decoder parameters to each input image. While autoencoders perform up to a million multiplications per decoded pixel, the proposed approach only requires 2300 multiplications per pixel. Despite its low complexity, the method rivals autoencoder performance and surpasses HEVC performance under various coding conditions. Additional lightweight modules and an improved training process provide a 14% rate reduction with respect to previous overfitted codecs, while offering a similar complexity. This work is made open-source at https://orange-opensource.github.io/Cool-Chic/
