Pierre Schaus

Anytime Optimal Decision Tree Learning with Continuous Features

Jan 21, 2026

Abstract:In recent years, significant progress has been made on algorithms for learning optimal decision trees, primarily in the context of binary features. Extending these methods to continuous features remains substantially more challenging due to the large number of potential splits for each feature. Recently, an elegant exact algorithm was proposed for learning optimal decision trees with continuous features; however, the rapidly increasing computational time limits its practical applicability to shallow depths (typically 3 or 4). It relies on a depth-first search optimization strategy that fully optimizes the left subtree of each split before exploring the corresponding right subtree. While effective in finding optimal solutions given sufficient time, this strategy can lead to poor anytime behavior: when interrupted early, the best-found tree is often highly unbalanced and suboptimal. In such cases, purely greedy methods such as C4.5 may, paradoxically, yield better solutions. To address this limitation, we propose an anytime, yet complete approach leveraging limited discrepancy search, distributing the computational effort more evenly across the entire tree structure, and thus ensuring that a high-quality decision tree is available at any interruption point. Experimental results show that our approach outperforms the existing one in terms of anytime performance.
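
The limited discrepancy search mentioned in this abstract can be illustrated on a generic search tree. The following is a minimal sketch, not the paper's algorithm: it assumes a fixed-depth tree whose children are ranked best-first by some heuristic, and it counts a choice of rank `r` as `r` discrepancies. The names `limited_discrepancy_search`, `ranked_choices`, and `cost` are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple


def limited_discrepancy_search(
    depth: int,
    ranked_choices: Callable[[Sequence[int]], Sequence[int]],
    cost: Callable[[Sequence[int]], float],
    max_discrepancies: int,
) -> List[Tuple[int, float, List[int]]]:
    """Run probes with an increasing discrepancy budget.

    ranked_choices(prefix) returns the choices at the next level,
    ordered best-first by a heuristic (an assumption of this sketch).
    Returns, for each budget, the best cost and solution found so far.
    """
    best_cost = math.inf
    best_solution: List[int] = []
    history: List[Tuple[int, float, List[int]]] = []

    def probe(prefix: List[int], budget: int) -> None:
        nonlocal best_cost, best_solution
        if len(prefix) == depth:
            c = cost(prefix)
            if c < best_cost:
                best_cost, best_solution = c, list(prefix)
            return
        for rank, choice in enumerate(ranked_choices(prefix)):
            if rank > budget:
                break  # deviating this far would exceed the budget
            prefix.append(choice)
            probe(prefix, budget - rank)
            prefix.pop()

    # Budget 0 follows the heuristic only; larger budgets allow more
    # deviations, spread over every level of the tree.
    for budget in range(max_discrepancies + 1):
        probe([], budget)
        history.append((budget, best_cost, list(best_solution)))
    return history
```

Each iteration revisits the leaves of earlier budgets, which keeps the sketch short at the price of redundant work. Once the budget reaches the largest possible number of discrepancies, every leaf has been visited, so the search is complete.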

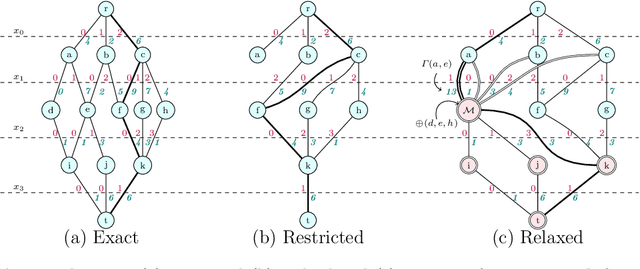

Towards Bound Consistency for the No-Overlap Constraint Using MDDs

Jan 21, 2026

Abstract:Achieving bound consistency for the no-overlap constraint is known to be NP-complete. Therefore, several polynomial-time tightening techniques, such as edge finding, not-first-not-last reasoning, and energetic reasoning, have been introduced for this constraint. In this work, we derive the first bound-consistent algorithm for the no-overlap constraint. By building on the no-overlap MDD defined by Ciré and van Hoeve, we extract bounds of the time window of the jobs, allowing us to tighten start and end times in time polynomial in the number of nodes of the MDD. Similarly, to bound the size and time-complexity, we limit the width of the MDD to a threshold, creating a relaxed MDD that can also be used to relax the bound-consistent filtering. Through experiments on a sequencing problem with time windows and a just-in-time objective ($1 \mid r_j, d_j, \bar{d}_j \mid \sum E_j + \sum T_j$), we observe that the proposed filtering, even with a threshold on the width, achieves a stronger reduction in the number of nodes visited in the search tree compared to the previously proposed precedence-detection algorithm of Ciré and van Hoeve. The new filtering also appears to be complementary to classical propagation methods for the no-overlap constraint, allowing a substantial reduction in both the number of nodes and the solving time on several instances.

Sequence Variables: A Constraint Programming Computational Domain for Routing and Sequencing

Oct 10, 2025

Abstract:Constraint Programming (CP) offers an intuitive, declarative framework for modeling Vehicle Routing Problems (VRP), yet classical CP models based on successor variables cannot always deal with optional visits or insertion-based heuristics. To address these limitations, this paper formalizes sequence variables within CP. Unlike the classical successor models, this computational domain handles optional visits and supports insertion heuristics, including insertion-based Large Neighborhood Search. We provide a clear definition of their domain and update operations, and introduce consistency levels for constraints on this domain. An implementation is described with the underlying data structures required for integrating sequence variables into existing trail-based CP solvers. Furthermore, global constraints specifically designed for sequence variables and vehicle routing are introduced. Finally, the effectiveness of sequence variables is demonstrated by simplifying problem modeling and achieving competitive computational performance on the Dial-a-Ride Problem.

CP-Model-Zoo: A Natural Language Query System for Constraint Programming Models

Sep 09, 2025

Abstract:Constraint Programming and its high-level modeling languages have long been recognized for their potential to achieve the holy grail of problem-solving. However, the complexity of modeling languages, the large number of global constraints, and the art of creating good models have often hindered non-experts from choosing CP to solve their combinatorial problems. While generating an expert-level model from a natural-language description of a problem would be the dream, we are not yet there. We propose a tutoring system called CP-Model-Zoo, exploiting expert-written models accumulated through the years. CP-Model-Zoo retrieves the closest source code model from a database based on a user's natural language description of a combinatorial problem. It ensures that expert-validated models are presented to the user while eliminating the need for human data labeling. Our experiments show excellent accuracy in retrieving the correct model based on a user-input description of a problem simulated with different levels of expertise.

A Generic Complete Anytime Beam Search for Optimal Decision Tree

Aug 08, 2025

Abstract:Finding an optimal decision tree that minimizes classification error is known to be NP-hard. While exact algorithms based on MILP, CP, SAT, or dynamic programming guarantee optimality, they often suffer from poor anytime behavior -- meaning they struggle to find high-quality decision trees quickly when the search is stopped before completion -- due to unbalanced search space exploration. To address this, several anytime extensions of exact methods have been proposed, such as LDS-DL8.5, Top-k-DL8.5, and Blossom, but they have not been systematically compared, making it difficult to assess their relative effectiveness. In this paper, we propose CA-DL8.5, a generic, complete, and anytime beam search algorithm that extends the DL8.5 framework and unifies some existing anytime strategies. In particular, CA-DL8.5 generalizes previous approaches LDS-DL8.5 and Top-k-DL8.5, by allowing the integration of various heuristics and relaxation mechanisms through a modular design. The algorithm reuses DL8.5's efficient branch-and-bound pruning and trie-based caching, combined with a restart-based beam search that gradually relaxes pruning criteria to improve solution quality over time. Our contributions are twofold: (1) We introduce this new generic framework for exact and anytime decision tree learning, enabling the incorporation of diverse heuristics and search strategies; (2) We conduct a rigorous empirical comparison of several instantiations of CA-DL8.5 -- based on Purity, Gain, Discrepancy, and Top-k heuristics -- using an anytime evaluation metric called the primal gap integral. Experimental results on standard classification benchmarks show that CA-DL8.5 using LDS (limited discrepancy) consistently provides the best anytime performance, outperforming both other CA-DL8.5 variants and the Blossom algorithm while maintaining completeness and optimality guarantees.
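
As an illustration of the anytime metric named in this abstract, the sketch below computes a primal integral in the form commonly used in the optimization literature: the primal gap of the incumbent, treated as a step function of time, integrated up to the time limit. The exact variant used in the paper may differ. The function names and the `(time, objective)` event format are assumptions of this sketch.

```python
from typing import Optional, Sequence, Tuple


def primal_gap(value: Optional[float], best_known: float) -> float:
    """Gap in [0, 1] between an incumbent value and the best known value."""
    if value is None:
        return 1.0  # no solution found yet
    if value == best_known:
        return 0.0  # also covers the case where both are zero
    if value * best_known < 0:
        return 1.0  # opposite signs: gap is defined as maximal
    return abs(value - best_known) / max(abs(value), abs(best_known))


def primal_integral(
    events: Sequence[Tuple[float, float]],
    best_known: float,
    time_limit: float,
) -> float:
    """Integrate the primal gap over [0, time_limit].

    events lists (time, objective) pairs, sorted by time, one per new
    incumbent. Before the first incumbent the gap is 1.
    """
    total = 0.0
    previous_time = 0.0
    gap = 1.0
    for time, value in events:
        if time > time_limit:
            break
        total += gap * (time - previous_time)
        gap = primal_gap(value, best_known)
        previous_time = time
    total += gap * (time_limit - previous_time)
    return total
```

A smaller integral means good solutions were found earlier, which is why a metric of this kind rewards anytime behavior and not only the final result.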

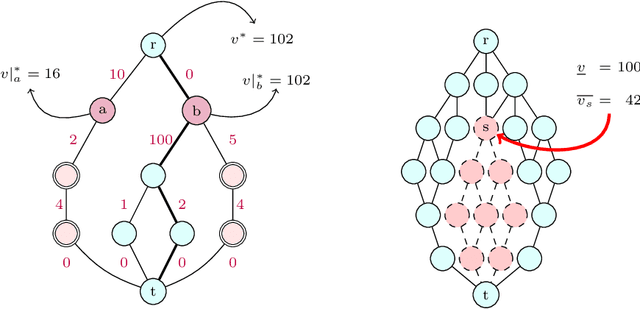

Branch-and-Bound with Barrier: Dominance and Suboptimality Detection for DD-Based Branch-and-Bound

Nov 22, 2022

Abstract:The branch-and-bound algorithm based on decision diagrams introduced by Bergman et al. in 2016 is a framework for solving discrete optimization problems with a dynamic programming formulation. It works by compiling a series of bounded-width decision diagrams that can provide lower and upper bounds for any given subproblem. Eventually, every part of the search space will be either explored or pruned by the algorithm, thus proving optimality. This paper presents new ingredients to speed up the search by exploiting the structure of dynamic programming models. The key idea is to prevent the repeated exploration of nodes corresponding to the same dynamic programming states by storing and querying thresholds in a data structure called the Barrier. These thresholds are based on dominance relations between partial solutions previously found. They can be further strengthened by integrating the filtering techniques introduced by Gillard et al. in 2021. Computational experiments show that the pruning brought by the Barrier significantly reduces the number of nodes expanded by the algorithm. This results in more benchmark instances of difficult optimization problems being solved in less time while using narrower decision diagrams.

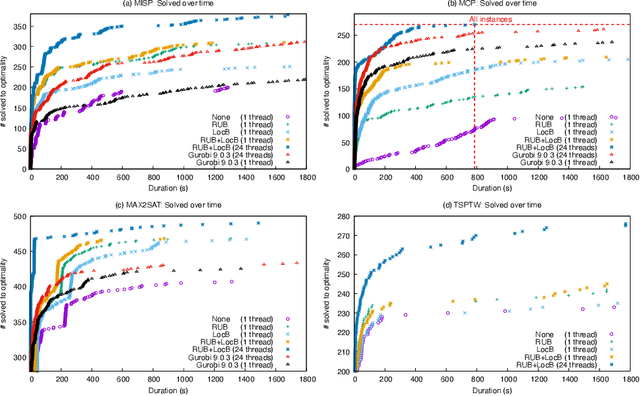

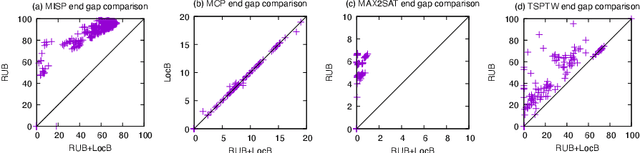

Improving the filtering of Branch-And-Bound MDD solver

Apr 24, 2021

Abstract:This paper presents and evaluates two pruning techniques to reinforce the efficiency of constraint optimization solvers based on multi-valued decision diagrams (MDD). It adopts the branch-and-bound framework proposed by Bergman et al. in 2016 to solve dynamic programs to optimality. In particular, our paper presents and evaluates the effectiveness of local-bound (LocB) and rough upper-bound (RUB) pruning. LocB is a new and effective rule that leverages the approximate MDD structure to avoid the exploration of non-interesting nodes. RUB is a rule to reduce the search space during the development of bounded-width MDDs. The experimental study we conducted on the Maximum Independent Set Problem (MISP), Maximum Cut Problem (MCP), Maximum 2 Satisfiability (MAX2SAT) and the Traveling Salesman Problem with Time Windows (TSPTW) provides evidence that rough upper-bound and local-bound pruning have a high impact on optimization solvers based on branch-and-bound with MDDs. In particular, it shows that RUB delivers excellent results but requires some effort when defining the model. It also shows that LocB provides a significant improvement automatically, without requiring any user-supplied information. Finally, it shows that rough upper-bound and local-bound pruning are not mutually exclusive, and that their combined benefit exceeds the individual benefit of each technique.

Impact of weather factors on migration intention using machine learning algorithms

Dec 04, 2020

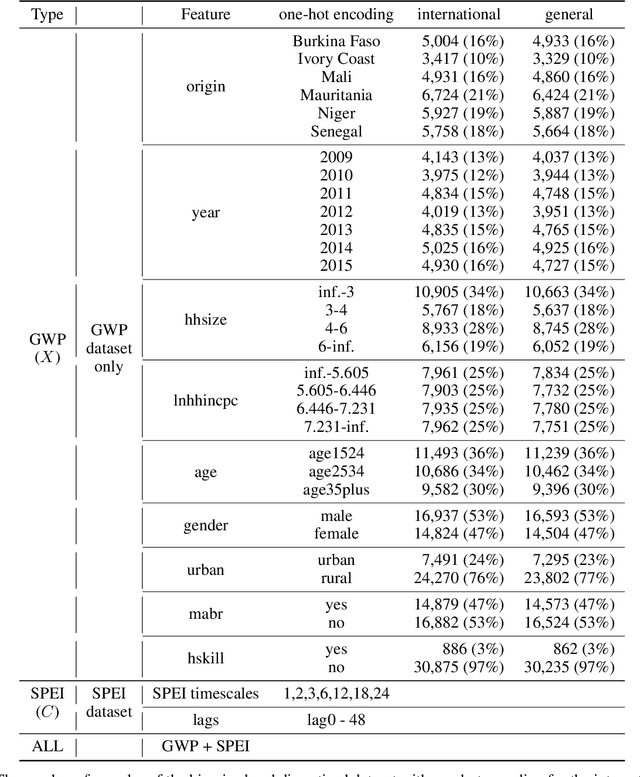

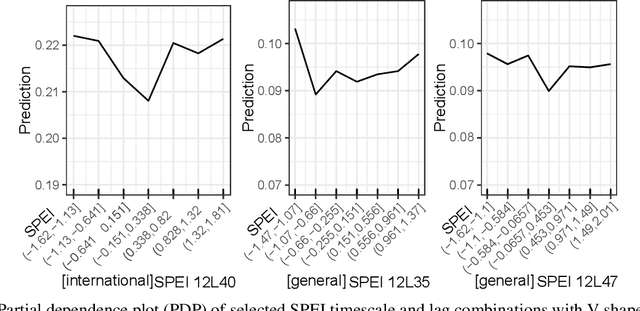

Abstract:The empirical literature has paid growing attention to the incidence of climate shocks and climate change on migration decisions. Previous studies reach different results and use a multitude of traditional empirical approaches. This paper proposes a tree-based Machine Learning (ML) approach to analyze the role of weather shocks in an individual's intention to migrate in six agriculture-dependent economies: Burkina Faso, Ivory Coast, Mali, Mauritania, Niger, and Senegal. We run several tree-based algorithms (e.g., XGB, Random Forest) using the train-validation-test workflow to build robust and noise-resistant approaches. Then we determine the important features and show in which direction they influence the migration intention. This ML-based estimation accounts for features such as weather shocks captured by the Standardized Precipitation-Evapotranspiration Index (SPEI) for different timescales and various socioeconomic features/covariates. We find that (i) weather features improve the prediction performance, although socioeconomic characteristics have more influence on migration intentions, (ii) country-specific models are necessary, and (iii) international moves are influenced more by the longer SPEI timescales, while general moves (which include internal moves) are influenced more by the shorter timescales.

An LSTM approach to Forecast Migration using Google Trends

Jun 19, 2020

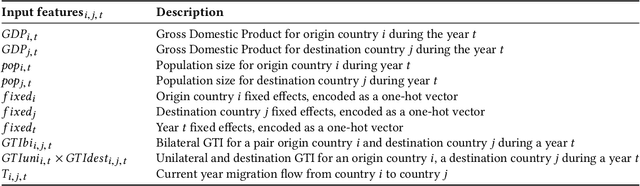

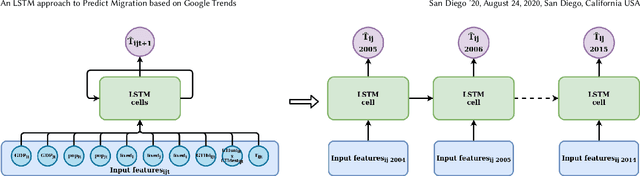

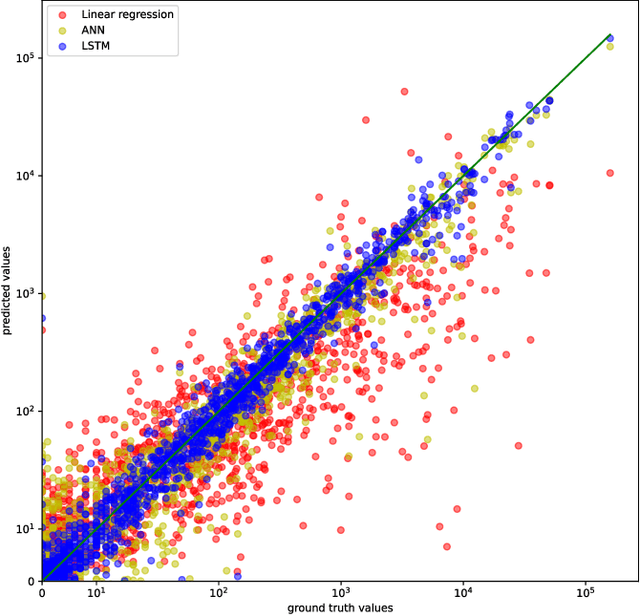

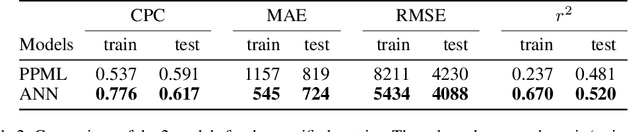

Abstract:Being able to model and forecast international migration as precisely as possible is crucial for policymaking. Recently, Google Trends data, in addition to other economic and demographic data, have been shown to improve the forecasting quality of a linear gravity model for one-year-ahead forecasting. In this work, we replace the linear model with a long short-term memory (LSTM) approach and compare it with two existing approaches: the linear gravity model and an artificial neural network (ANN) model. Our LSTM approach combined with Google Trends data outperforms both these models on various metrics in the task of forecasting the one-year-ahead incoming international migration to 35 Organization for Economic Co-operation and Development (OECD) countries: for example, the root mean square error (RMSE) and the mean absolute error (MAE) have been divided by 5 and 4, respectively, on the test set. This positive result demonstrates that machine learning techniques constitute a serious alternative to traditional approaches for studying migration mechanisms.
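
For reference, the two error metrics quoted in this abstract can be computed as in the short sketch below. It uses the standard definitions of RMSE and MAE; the function names and the sample values in the usage note are illustrative only and are not taken from the paper.

```python
import math
from typing import Sequence


def rmse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Root mean square error: sqrt of the mean squared residual."""
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(squared))


def mae(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute error: mean of the absolute residuals."""
    absolute = [abs(a - p) for a, p in zip(actual, predicted)]
    return sum(absolute) / len(absolute)
```

For example, `mae([1, 2, 3], [1, 2, 5])` averages the residuals 0, 0, and 2. RMSE penalizes large residuals more heavily than MAE, so the two metrics need not improve by the same factor.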

Using an interpretable Machine Learning approach to study the drivers of International Migration

Jun 05, 2020

Abstract:Globally increasing migration pressures call for new modelling approaches in order to design effective policies. It is important not only to have efficient models to predict migration flows but also to understand how specific parameters influence these flows. In this paper, we propose an artificial neural network (ANN) to model international migration. Moreover, we use a technique for interpreting machine learning models, namely Partial Dependence Plots (PDP), to show that one can study the effects of the drivers behind international migration. We train and evaluate the model on a dataset containing annual international bilateral migration from 1960 to 2010 from 175 origin countries to 33 mainly OECD destinations, along with the main determinants as identified in the migration literature. The experiments carried out confirm that: 1) the ANN model is more efficient than a traditional model, and 2) using PDP we are able to gain additional insights on the specific effects of the migration drivers. This approach provides much more information than the feature importance information alone, as used in previous works.
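
The partial dependence technique mentioned in this abstract can be sketched in a few lines. This is a generic illustration of how a partial dependence curve is computed for one feature, not the authors' code: `predict` stands for any fitted model's prediction function, and all names here are hypothetical.

```python
from typing import Callable, List, Sequence


def partial_dependence(
    predict: Callable[[Sequence[float]], float],
    rows: Sequence[Sequence[float]],
    feature: int,
    grid: Sequence[float],
) -> List[float]:
    """Average prediction when one feature is forced to each grid value.

    For every grid value, the chosen feature is overwritten in every
    row while the other features keep their observed values; the mean
    prediction over the rows gives one point of the curve.
    """
    curve = []
    for value in grid:
        total = 0.0
        for row in rows:
            modified = list(row)
            modified[feature] = value
            total += predict(modified)
        curve.append(total / len(rows))
    return curve
```

Plotting the returned values against the grid gives the partial dependence plot for that feature, which shows the direction and shape of its average effect on the prediction.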
