Peter R. Rijnbeek

Clinical semantics for lung cancer prediction

Aug 20, 2025

Abstract: Background: Existing clinical prediction models often represent patient data using features that ignore the semantic relationships between clinical concepts. This study integrates domain-specific semantic information by mapping the SNOMED medical term hierarchy into a low-dimensional hyperbolic space using Poincaré embeddings, with the aim of improving lung cancer onset prediction. Methods: Using a retrospective cohort from the Optum EHR dataset, we derived a clinical knowledge graph from the SNOMED taxonomy and generated Poincaré embeddings via Riemannian stochastic gradient descent. These embeddings were then incorporated into two deep learning architectures, a ResNet and a Transformer model. Models were evaluated for discrimination (area under the receiver operating characteristic curve) and calibration (average absolute difference between observed and predicted probabilities) performance. Results: Incorporating pre-trained Poincaré embeddings resulted in modest and consistent improvements in discrimination performance compared to baseline models using randomly initialized Euclidean embeddings. ResNet models, particularly those using a 10-dimensional Poincaré embedding, showed enhanced calibration, whereas Transformer models maintained stable calibration across configurations. Discussion: Embedding clinical knowledge graphs into hyperbolic space and integrating these representations into deep learning models can improve lung cancer onset prediction by preserving the hierarchical structure of clinical terminologies used for prediction. This approach demonstrates a feasible method for combining data-driven feature extraction with established clinical knowledge.
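To make the hyperbolic geometry behind the abstract above concrete, here is a minimal sketch of the standard Poincaré-ball distance, d(u, v) = arcosh(1 + 2‖u − v‖² / ((1 − ‖u‖²)(1 − ‖v‖²))). The function name and the 2-D example points are hypothetical illustrations, not the study's code or its SNOMED embeddings.

```python
import math


def poincare_distance(u, v):
    """Hyperbolic distance between two points inside the open unit ball."""
    sq_norm_u = sum(x * x for x in u)
    sq_norm_v = sum(x * x for x in v)
    if sq_norm_u >= 1.0 or sq_norm_v >= 1.0:
        raise ValueError("points must lie strictly inside the unit ball")
    sq_dist = sum((a - b) ** 2 for a, b in zip(u, v))
    denom = (1.0 - sq_norm_u) * (1.0 - sq_norm_v)
    return math.acosh(1.0 + 2.0 * sq_dist / denom)


# Hypothetical 2-D embeddings (assumption): a general concept at the origin
# and two more specific concepts placed closer to the boundary.
root = [0.0, 0.0]
child_a = [0.5, 0.0]
child_b = [0.0, 0.5]

d_root_a = poincare_distance(root, child_a)
d_a_b = poincare_distance(child_a, child_b)
```

In this toy layout the two specific concepts are farther from each other than either is from the general concept at the origin, which is the property that lets a tree-like hierarchy fit into few dimensions.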

Comparison of deep learning and conventional methods for disease onset prediction

Oct 14, 2024

Abstract: Background: Conventional prediction methods such as logistic regression and gradient boosting have been widely used for disease onset prediction because of their reliability and interpretability. Deep learning methods promise enhanced prediction performance by extracting complex patterns from clinical data, but face challenges such as data sparsity and high dimensionality. Methods: This study compares conventional and deep learning approaches to predict lung cancer, dementia, and bipolar disorder using observational data from eleven databases from North America, Europe, and Asia. Models were developed using logistic regression, gradient boosting, ResNet, and Transformer, and validated both internally and externally across the data sources. Discrimination performance was assessed using AUROC, and calibration was evaluated using Eavg. Findings: Across 11 datasets, conventional methods generally outperformed deep learning methods in terms of discrimination performance, particularly during external validation, highlighting their better transportability. Learning curves suggest that deep learning models require substantially larger datasets to reach the same performance levels as conventional methods. Calibration performance was also better for conventional methods, with ResNet showing the poorest calibration. Interpretation: Despite the potential of deep learning models to capture complex patterns in structured observational healthcare data, conventional models remain highly competitive for disease onset prediction, especially when datasets are small or lengthy training times must be avoided. The study underscores the need for future research focused on optimizing deep learning models to handle the sparsity, high dimensionality, and heterogeneity inherent in healthcare datasets, and on finding new strategies to exploit the full capabilities of deep learning methods.
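The two evaluation metrics named above can be sketched in a few lines. This is an illustrative approximation only: AUROC is computed by direct pair counting, and the calibration error uses equal-size risk bins as a simplifying assumption, whereas Eavg is usually computed against a smoothed calibration curve. Function names and the toy data are hypothetical.

```python
def auroc(y_true, y_score):
    """Probability that a random positive scores above a random negative."""
    pos = [s for y, s in zip(y_true, y_score) if y == 1]
    neg = [s for y, s in zip(y_true, y_score) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative")
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5  # ties count as half a concordant pair
    return wins / (len(pos) * len(neg))


def binned_eavg(y_true, y_prob, n_bins=10):
    """Average |observed rate - mean predicted risk| over equal-size bins.

    Assumption: bins stand in for the smoothed calibration curve.
    """
    pairs = sorted(zip(y_prob, y_true))
    n = len(pairs)
    total = 0.0
    for b in range(n_bins):
        chunk = pairs[b * n // n_bins:(b + 1) * n // n_bins]
        if not chunk:
            continue  # more bins than observations
        mean_pred = sum(p for p, _ in chunk) / len(chunk)
        observed = sum(y for _, y in chunk) / len(chunk)
        total += len(chunk) * abs(observed - mean_pred)
    return total / n


# Toy data (assumption): perfectly ranked but slightly miscalibrated.
labels = [0, 0, 1, 1]
risks = [0.1, 0.2, 0.8, 0.9]
toy_auc = auroc(labels, risks)
toy_eavg = binned_eavg(labels, risks, n_bins=2)
```

The toy data illustrate why both metrics are reported: the ranking is perfect, yet the predicted risks still differ from the observed rates within each bin.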

A standardized framework for risk-based assessment of treatment effect heterogeneity in observational healthcare databases

Oct 13, 2020

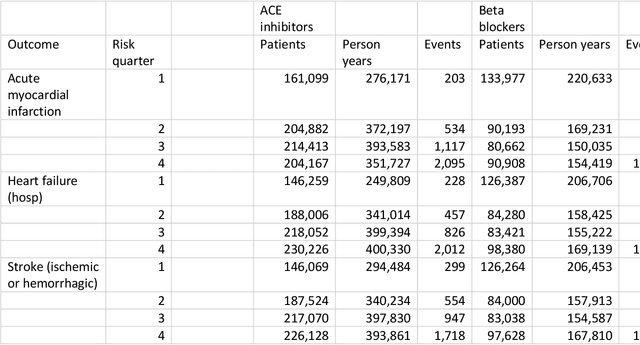

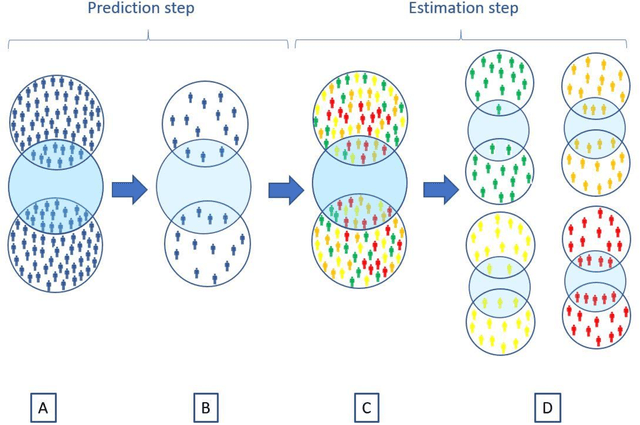

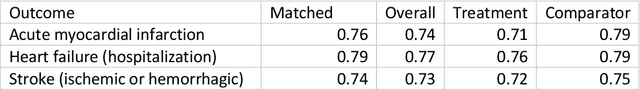

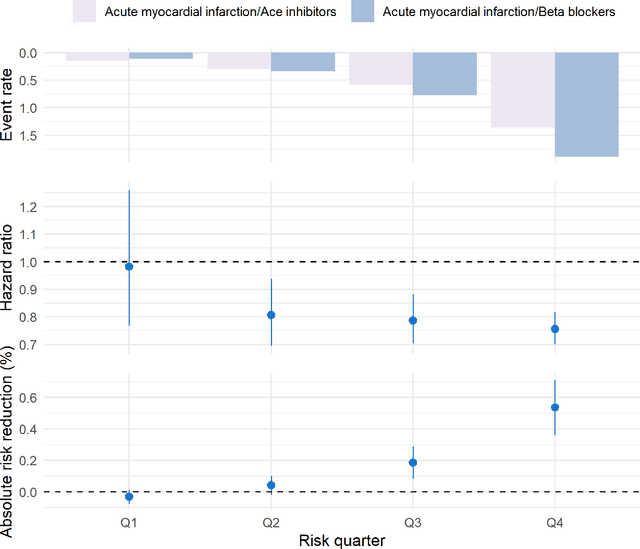

Abstract: Aim: One of the aims of the Observational Health Data Sciences and Informatics (OHDSI) initiative is population-level treatment effect estimation in large observational databases. Since treatment effects are well known to vary across groups of patients with different baseline risk, we aimed to extend the OHDSI methods library with a framework for risk-based assessment of treatment effect heterogeneity. Materials and Methods: The proposed framework consists of five steps: 1) definition of the problem, i.e. the population, the treatment, the comparator and the outcome(s) of interest; 2) identification of relevant databases; 3) development of a prediction model for the outcome(s) of interest; 4) estimation of propensity scores within strata of predicted risk and estimation of relative and absolute treatment effect within strata of predicted risk; 5) evaluation and presentation of results. Results: We demonstrate our framework by evaluating heterogeneity of the effect of angiotensin-converting enzyme (ACE) inhibitors versus beta blockers on a set of 9 outcomes of interest across three observational databases. With increasing risk of acute myocardial infarction we observed increasing absolute benefits, i.e. from -0.03% to 0.54% in the lowest to highest risk groups. Cough-related absolute harms decreased from 4.1% to 2.6%. Conclusions: The proposed framework may be useful for the evaluation of heterogeneity of treatment effect on observational data that are mapped to the OMOP Common Data Model. The proof-of-concept study demonstrates its feasibility in large observational data. Further insights may arise by application to safety and effectiveness questions across the global data network.
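The risk-stratified part of step 4 can be illustrated with a deliberately simplified sketch: patients are split into equal-size strata of predicted risk, and the absolute risk difference is computed within each stratum. It omits the propensity score adjustment that the framework performs within strata, so it is not the OHDSI implementation. The record layout, the function name, and the sign convention (comparator risk minus treatment risk) are assumptions made for illustration.

```python
def risk_difference_by_stratum(records, n_strata=4):
    """Absolute risk difference per predicted-risk stratum.

    records: list of (predicted_risk, treated, outcome) tuples, where
    treated is a bool and outcome is 0 or 1 (hypothetical layout).
    Returns one value per stratum: comparator risk minus treatment risk,
    or None when a stratum lacks one of the two arms.
    """
    ordered = sorted(records, key=lambda r: r[0])
    n = len(ordered)
    results = []
    for s in range(n_strata):
        stratum = ordered[s * n // n_strata:(s + 1) * n // n_strata]
        treated = [o for _, t, o in stratum if t]
        comparator = [o for _, t, o in stratum if not t]
        if not treated or not comparator:
            results.append(None)
            continue
        risk_treated = sum(treated) / len(treated)
        risk_comparator = sum(comparator) / len(comparator)
        results.append(risk_comparator - risk_treated)
    return results


# Made-up toy cohort (assumption): no effect at low risk, benefit at high risk.
cohort = [
    (0.1, True, 0), (0.2, False, 0), (0.3, True, 0), (0.4, False, 0),
    (0.6, True, 0), (0.7, False, 1), (0.8, True, 1), (0.9, False, 1),
]
differences = risk_difference_by_stratum(cohort, n_strata=2)
```

With real observational data, the per-stratum comparison would only be meaningful after adjusting for confounding, which is why the framework estimates propensity scores inside each risk stratum.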

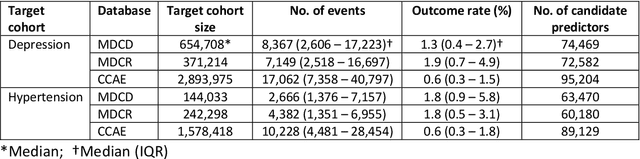

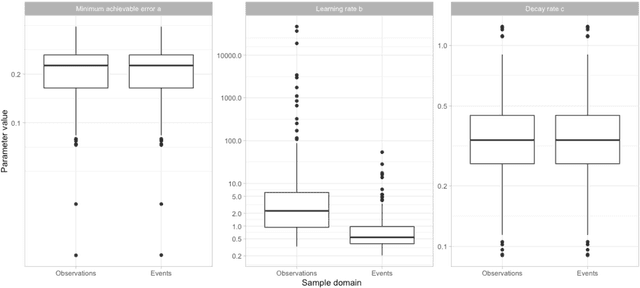

How little data do we need for patient-level prediction?

Aug 14, 2020

Abstract: Objective: Provide guidance on sample size considerations for developing predictive models by empirically establishing the adequate sample size, which balances the competing objectives of improving model performance and reducing model complexity as well as computational requirements. Materials and Methods: We empirically assess the effect of sample size on prediction performance and model complexity by generating learning curves for 81 prediction problems in three large observational health databases, requiring training of 17,248 prediction models. The adequate sample size was defined as the sample size for which the performance of a model equalled the maximum model performance minus a small threshold value. Results: The adequate sample size achieves a median reduction of the number of observations between 9.5% and 78.5% for threshold values between 0.001 and 0.02. The median reduction of the number of predictors in the models at the adequate sample size varied between 8.6% and 68.3%, respectively. Discussion: Based on our results, a conservative yet significant reduction in sample size and model complexity can be estimated for future prediction work. However, if a researcher is willing to generate a learning curve, a much larger reduction in model complexity may be possible, as suggested by the large outcome-dependent variability. Conclusion: Our results suggest that in most cases only a fraction of the available data was sufficient to produce a model close to the performance of one developed on the full data set, but with a substantially reduced model complexity.
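The definition of adequate sample size given above can be sketched as a lookup on a learning curve. This is one possible reading, assuming the curve is a list of (sample size, performance) points and that "equalled" means the smallest size whose performance first reaches the maximum minus the threshold, with no interpolation between points. The function name and curve values are made up for illustration.

```python
def adequate_sample_size(curve, threshold):
    """Smallest sample size whose performance reaches (max - threshold).

    curve: list of (sample_size, performance) points, e.g. AUROC values
    measured at increasing training set sizes (hypothetical layout).
    """
    if not curve:
        raise ValueError("learning curve is empty")
    target = max(perf for _, perf in curve) - threshold
    for size, perf in sorted(curve):
        if perf >= target:
            return size
    raise AssertionError("unreachable: the maximum always meets the target")


# Made-up learning curve (assumption): performance flattens with more data.
learning_curve = [(1000, 0.70), (2000, 0.74), (4000, 0.755), (8000, 0.76)]

size_strict = adequate_sample_size(learning_curve, threshold=0.001)
size_loose = adequate_sample_size(learning_curve, threshold=0.01)

# Fraction of observations saved relative to the largest size on the curve.
largest = max(size for size, _ in learning_curve)
reduction_loose = 1.0 - size_loose / largest
```

A larger threshold accepts a slightly weaker model in exchange for a smaller training set, which mirrors the trade-off between threshold value and reduction in observations reported in the abstract.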

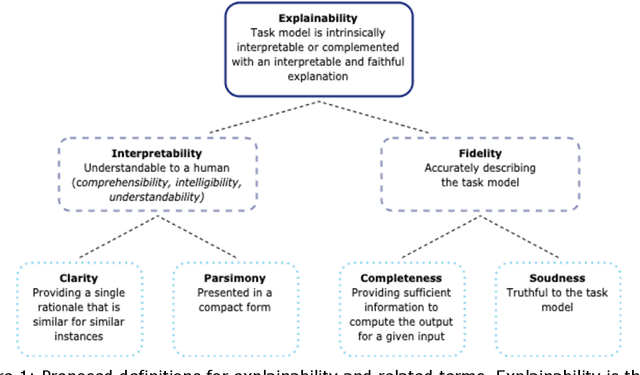

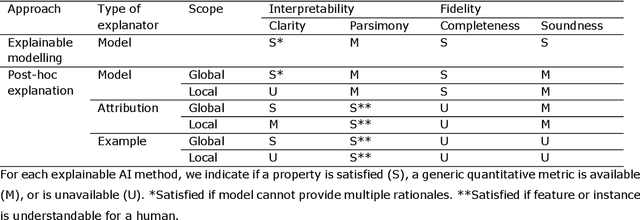

The role of explainability in creating trustworthy artificial intelligence for health care: a comprehensive survey of the terminology, design choices, and evaluation strategies

Jul 31, 2020

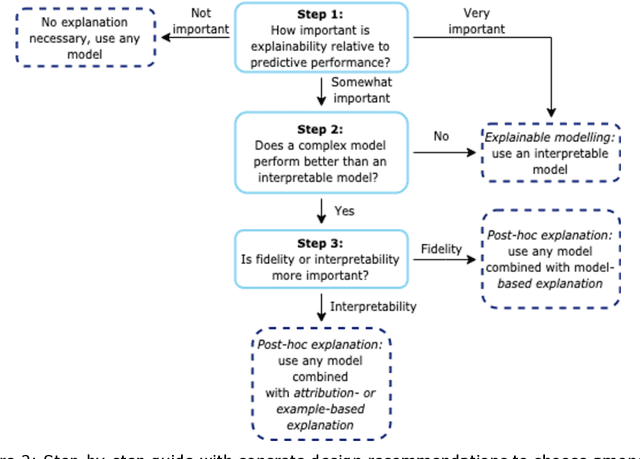

Abstract: Artificial intelligence (AI) has huge potential to improve the health and well-being of people, but adoption in clinical practice is still limited. Lack of transparency is identified as one of the main barriers to implementation, as clinicians should be confident the AI system can be trusted. Explainable AI has the potential to overcome this issue and can be a step towards trustworthy AI. In this paper we review the recent literature to provide guidance to researchers and practitioners on the design of explainable AI systems for the health-care domain and contribute to formalization of the field of explainable AI. We argue that the reason to demand explainability determines what should be explained, as this determines the relative importance of the properties of explainability (i.e. interpretability and fidelity). Based on this, we give concrete recommendations to choose between classes of explainable AI methods (explainable modelling versus post-hoc explanation; model-based, attribution-based, or example-based explanations; global and local explanations). Furthermore, we find that quantitative evaluation metrics, which are important for objective standardized evaluation, are still lacking for some properties (e.g. clarity) and types of explanators (e.g. example-based methods). We conclude that explainable modelling can contribute to trustworthy AI, but recognize that complementary measures might be needed to create trustworthy AI (e.g. reporting data quality, performing extensive (external) validation, and regulation).
