Pengfei Ma

Deep Forest with Hashing Screening and Window Screening

Jul 25, 2022

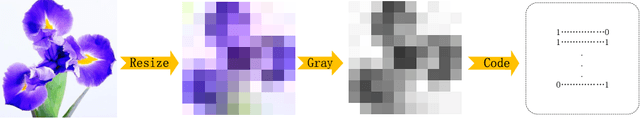

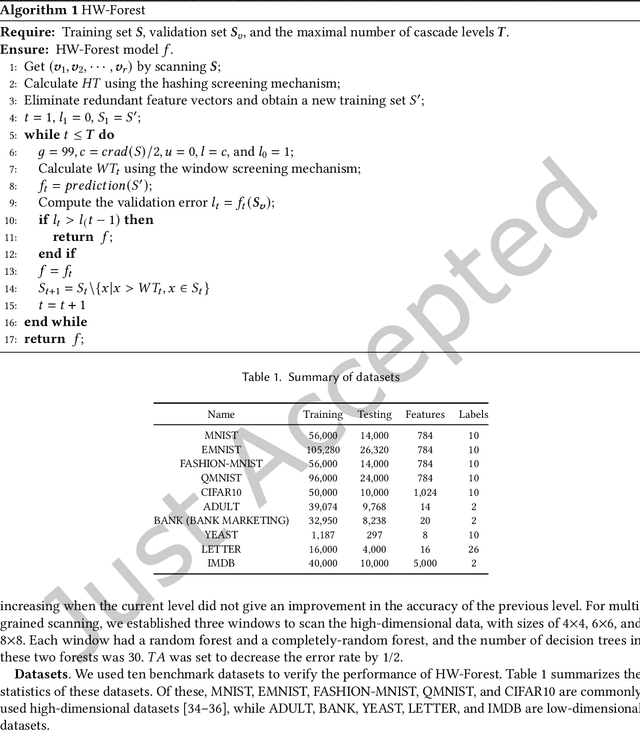

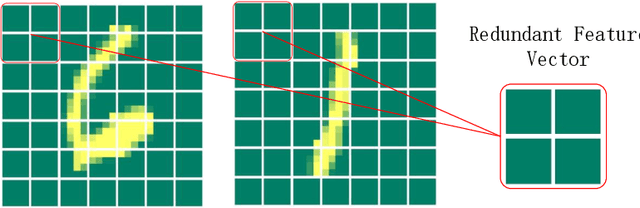

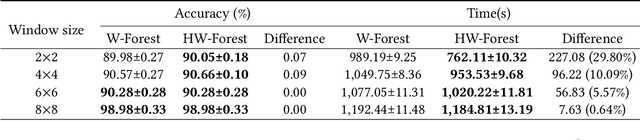

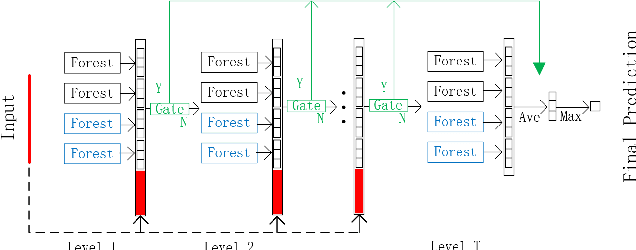

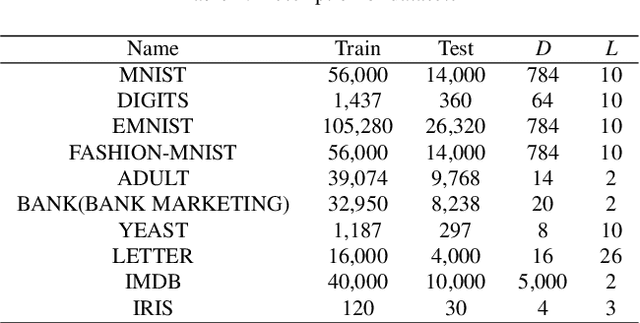

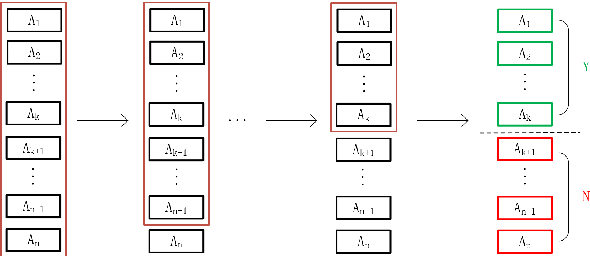

Abstract: As a novel deep learning model, gcForest has been widely used in various applications. However, its multi-grained scanning produces many redundant feature vectors, which increases the time cost of the model. To screen out redundant feature vectors, we introduce a hashing screening mechanism for multi-grained scanning and propose a model called HW-Forest, which adopts two strategies: hashing screening and window screening. In hashing screening, HW-Forest employs a perceptual hashing algorithm to calculate the similarity between feature vectors; this similarity is used to remove the redundant feature vectors produced by multi-grained scanning and can significantly decrease time cost and memory consumption. Furthermore, we adopt a self-adaptive instance screening strategy, called window screening, to improve the performance of our approach; it can achieve higher accuracy without hyperparameter tuning on different datasets. Our experimental results show that HW-Forest achieves higher accuracy than other models while also reducing time cost.
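
The hashing screening idea can be illustrated with a small sketch. The abstract does not give the exact hashing procedure, so the code below assumes a simple average-hash variant of perceptual hashing applied to one-dimensional sliding windows; the function names (`sliding_windows`, `average_hash`, `screen_windows`) and the `max_distance` parameter are hypothetical and not taken from the paper.

```python
from typing import List, Sequence, Tuple


def sliding_windows(x: Sequence[float], size: int) -> List[Sequence[float]]:
    """Return every contiguous window of length `size` (stride 1)."""
    return [x[i:i + size] for i in range(len(x) - size + 1)]


def average_hash(v: Sequence[float]) -> Tuple[int, ...]:
    """Average hash: one bit per element, set when the element exceeds the mean."""
    mean = sum(v) / len(v)
    return tuple(1 if a > mean else 0 for a in v)


def hamming(a: Sequence[int], b: Sequence[int]) -> int:
    """Number of positions at which two equal-length hashes differ."""
    return sum(p != q for p, q in zip(a, b))


def screen_windows(windows: List[Sequence[float]], max_distance: int = 0) -> List[int]:
    """Return indices of windows kept after screening.

    A window is dropped as redundant when its hash lies within
    `max_distance` (Hamming) of the hash of an already kept window.
    This threshold rule is an assumption made for illustration.
    """
    kept: List[int] = []
    kept_hashes: List[Tuple[int, ...]] = []
    for idx, w in enumerate(windows):
        h = average_hash(w)
        if all(hamming(h, k) > max_distance for k in kept_hashes):
            kept.append(idx)
            kept_hashes.append(h)
    return kept


# Usage: a periodic signal yields only two distinct window patterns.
signal = [1, 5, 1, 5, 1, 5, 1, 5]
kept = screen_windows(sliding_windows(signal, 4))
```

In this toy case five windows are scanned but only two are kept, so the downstream forests would be trained on fewer feature vectors. Raising `max_distance` screens more aggressively at the risk of discarding informative windows.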

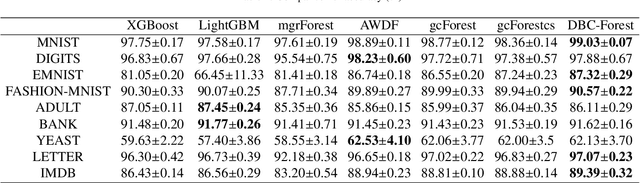

DBC-Forest: Deep forest with binning confidence screening

Dec 25, 2021

Abstract: As a deep learning model, deep confidence screening forest (gcForestcs) has achieved great success in various applications. Compared with the traditional deep forest approach, gcForestcs effectively reduces the high time cost by passing some instances in the high-confidence region directly to the final stage. However, the high-confidence region contains a group of instances with low accuracy, which are called mis-partitioned instances. To find these mis-partitioned instances, this paper proposes a deep binning confidence screening forest (DBC-Forest) model, which packs all instances into bins based on their confidences. In this way, more accurate instances can be passed to the final stage, and the performance is improved. Experimental results show that DBC-Forest achieves highly accurate predictions for the same hyperparameters and reaches the same accuracy faster than other similar models.
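
The binning step can be sketched as follows. The abstract does not state how the bins determine which instances are passed on, so this is one plausible reading under stated assumptions: instances are sorted by confidence, packed into equal-size bins, and the threshold is the lowest confidence in the last consecutive bin (starting from the most confident) whose accuracy meets a target. The function name `binning_threshold` and the parameters `n_bins` and `target_accuracy` are hypothetical.

```python
from typing import List, Optional, Sequence


def binning_threshold(
    confidences: Sequence[float],
    correct: Sequence[int],
    n_bins: int,
    target_accuracy: float,
) -> Optional[float]:
    """Return a confidence threshold derived from per-bin accuracy.

    `correct[i]` is 1 when instance i was predicted correctly, else 0.
    Returns None when even the most confident bin misses the target,
    meaning no instance would be screened out at this level.
    """
    # Sort instance indices from highest to lowest confidence.
    order: List[int] = sorted(
        range(len(confidences)), key=lambda i: confidences[i], reverse=True
    )
    bin_size = max(1, len(order) // n_bins)
    threshold: Optional[float] = None
    for start in range(0, len(order), bin_size):
        members = order[start:start + bin_size]
        accuracy = sum(correct[i] for i in members) / len(members)
        if accuracy < target_accuracy:
            break  # first bin that misses the target ends the high-confidence region
        # Lowest confidence in this bin becomes the new threshold.
        threshold = confidences[members[-1]]
    return threshold


# Usage: the third bin (0.8, 0.7) has accuracy 0.5, so screening stops before it.
conf = [0.99, 0.95, 0.9, 0.85, 0.8, 0.7, 0.6, 0.55]
hit = [1, 1, 1, 1, 1, 0, 0, 1]
thr = binning_threshold(conf, hit, n_bins=4, target_accuracy=0.9)
```

Instances with confidence at or above `thr` would then be passed directly to the final stage, while the rest continue through the cascade. Checking accuracy bin by bin, instead of over the whole high-confidence region at once, is what lets a low-accuracy pocket be detected.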
