Pawel Korus

Dictionary Attacks on Speaker Verification

Apr 24, 2022

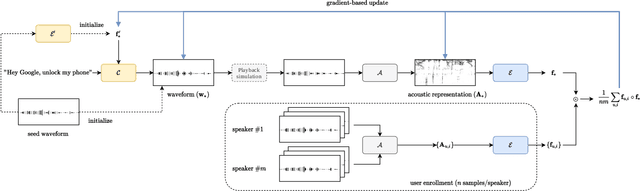

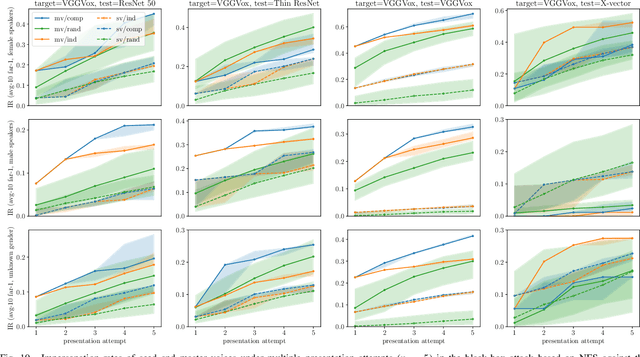

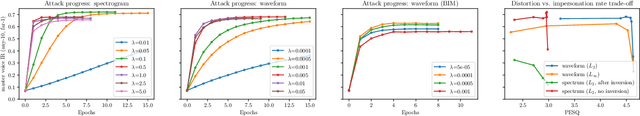

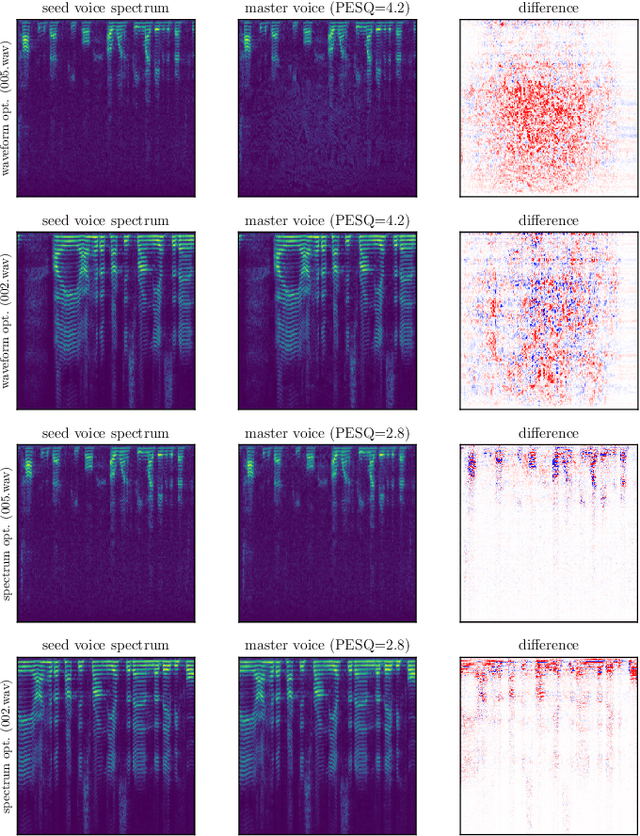

Abstract: In this paper, we propose dictionary attacks against speaker verification - a novel attack vector that aims to match a large fraction of the speaker population by chance. We introduce a generic formulation of the attack that can be used with various speech representations and threat models. The attacker uses adversarial optimization to maximize the raw similarity of speaker embeddings between a seed speech sample and a proxy population. The resulting master voice successfully matches a non-trivial fraction of people in an unknown population. Adversarial waveforms obtained with our approach can match on average 69% of females and 38% of males enrolled in the target system at a strict decision threshold calibrated to yield a false alarm rate of 1%. By using the attack with a black-box voice cloning system, we obtain master voices that are effective in the most challenging conditions and transferable between speaker encoders. We also show that, combined with multiple attempts, this attack raises even more serious concerns about the security of these systems.
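
The evaluation metric mentioned in this abstract (the fraction of enrolled speakers matched at a threshold calibrated to a 1% false alarm rate) can be illustrated with a minimal sketch. It is not the authors' code: the embeddings are synthetic Gaussian vectors, the cosine scoring is an assumption, and the function names `threshold_at_far` and `impersonation_rate` are hypothetical. It only measures the match rate of a given candidate and does not perform the adversarial optimization itself.

```python
import numpy as np

def cosine_similarity(a, b):
    """Cosine similarity between every row of `a` and every row of `b`."""
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return a @ b.T

def threshold_at_far(impostor_scores, far=0.01):
    """Score threshold exceeded by a fraction `far` of impostor trials."""
    return float(np.quantile(impostor_scores, 1.0 - far))

def impersonation_rate(candidate, enrolled, threshold):
    """Fraction of enrolled speakers whose score against `candidate` exceeds `threshold`."""
    scores = cosine_similarity(candidate[None, :], enrolled)[0]
    return float(np.mean(scores > threshold))

# Synthetic stand-in for speaker embeddings (assumption: 500 speakers, 64 dimensions).
rng = np.random.default_rng(0)
enrolled = rng.normal(size=(500, 64))

# Impostor trials: all pairs of different speakers.
pairwise = cosine_similarity(enrolled, enrolled)
impostor_scores = pairwise[~np.eye(len(enrolled), dtype=bool)]
thr = threshold_at_far(impostor_scores, far=0.01)

# Hypothetical candidate: the population mean, used here only as a placeholder input.
candidate = enrolled.mean(axis=0)
rate = impersonation_rate(candidate, enrolled, thr)
print(f"threshold={thr:.3f}, impersonation rate={rate:.1%}")
```

In a real evaluation the candidate would be the embedding of an optimized waveform, and the enrolled population would be disjoint from the proxy population used during optimization.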

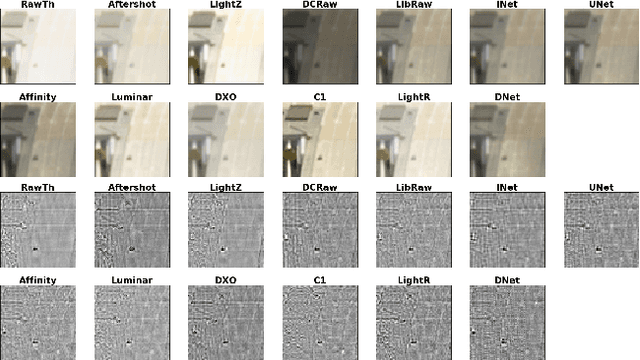

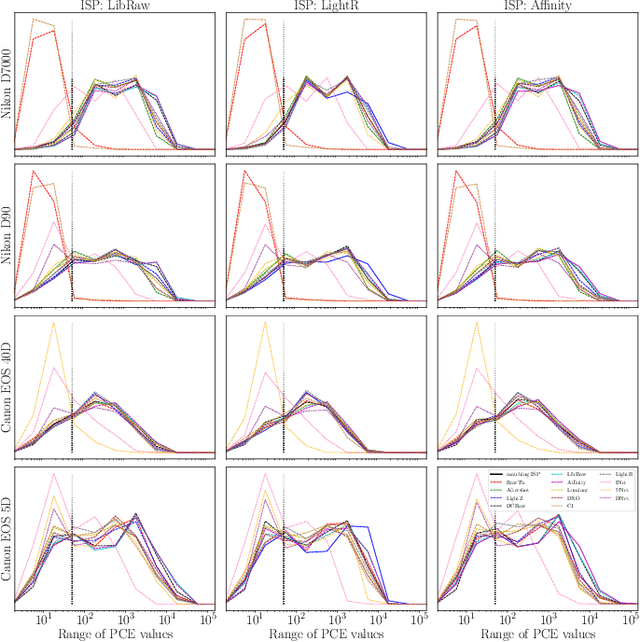

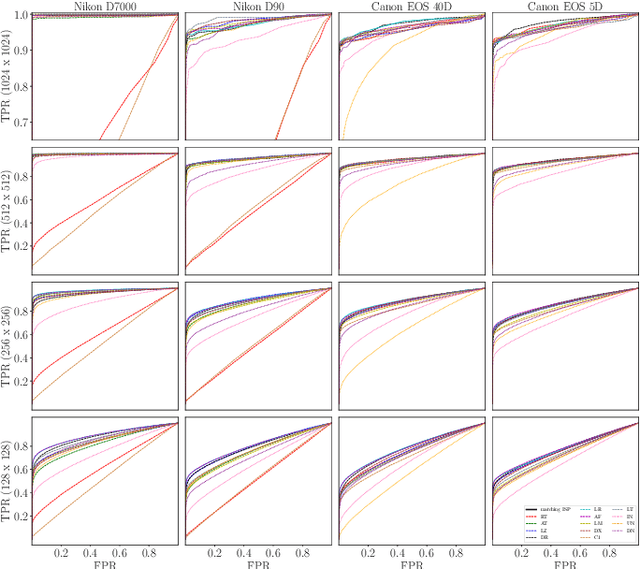

Empirical Evaluation of PRNU Fingerprint Variation for Mismatched Imaging Pipelines

Apr 04, 2020

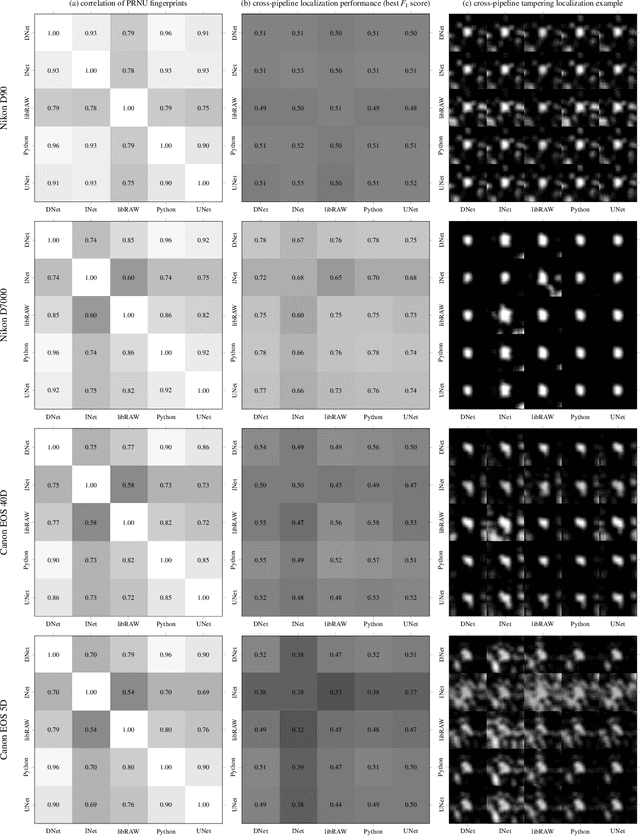

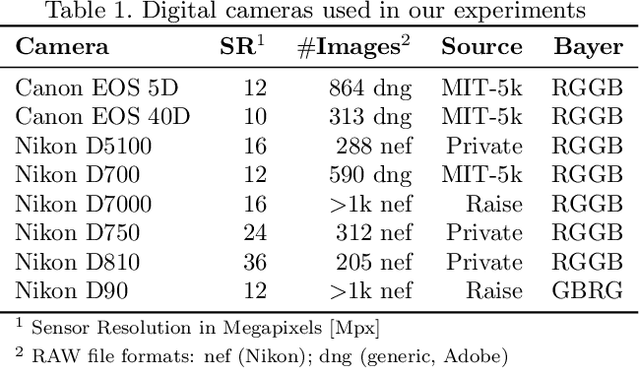

Abstract: We assess the variability of PRNU-based camera fingerprints with mismatched imaging pipelines (e.g., different camera ISP or digital darkroom software). We show that camera fingerprints exhibit non-negligible variations in this setup, which may lead to unexpected degradation of detection statistics in real-world use cases. We tested 13 different pipelines, including standard digital darkroom software and recent neural networks. We observed that the correlation between fingerprints from mismatched pipelines drops on average to 0.38 and the PCE detection statistic drops by over 40%. The degradation in error rates is strongest for small patches commonly used in photo manipulation detection, and when neural networks are used for photo development. At a fixed 0.5% FPR setting, the TPR drops by 17 ppt (percentage points) for 128 px and 256 px patches.
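
Two of the quantities named in this abstract, the correlation between fingerprints and the TPR at a fixed FPR, can be sketched as follows. This is an illustration under stated assumptions, not the paper's evaluation code: the fingerprints are synthetic noise arrays, the helper names `normalized_correlation` and `tpr_at_fpr` are hypothetical, and the PCE statistic is not computed here.

```python
import numpy as np

def normalized_correlation(x, y):
    """Zero-mean normalized correlation between two equally sized arrays."""
    x = x - x.mean()
    y = y - y.mean()
    return float(np.sum(x * y) / (np.linalg.norm(x) * np.linalg.norm(y)))

def tpr_at_fpr(positive_scores, negative_scores, fpr=0.005):
    """True positive rate at the threshold that yields the requested false positive rate."""
    threshold = np.quantile(negative_scores, 1.0 - fpr)
    return float(np.mean(positive_scores > threshold))

rng = np.random.default_rng(0)

# Synthetic "fingerprint" and a perturbed copy standing in for a mismatched pipeline.
fingerprint_a = rng.normal(size=(128, 128))
fingerprint_b = fingerprint_a + 2.0 * rng.normal(size=(128, 128))
corr = normalized_correlation(fingerprint_a, fingerprint_b)

# Synthetic detection scores for matching (positive) and non-matching (negative) patches.
negative_scores = rng.normal(loc=0.0, scale=1.0, size=20000)
positive_scores = rng.normal(loc=3.0, scale=1.0, size=20000)
tpr = tpr_at_fpr(positive_scores, negative_scores, fpr=0.005)
print(f"correlation={corr:.2f}, TPR at 0.5% FPR={tpr:.1%}")
```

A drop "by 17 ppt" means an absolute difference between two such TPR values, for example from 80% to 63%, rather than a relative reduction.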

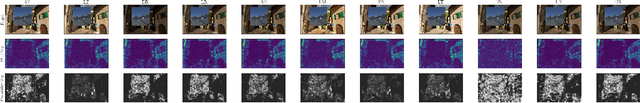

Neural Imaging Pipelines - the Scourge or Hope of Forensics?

Feb 27, 2019

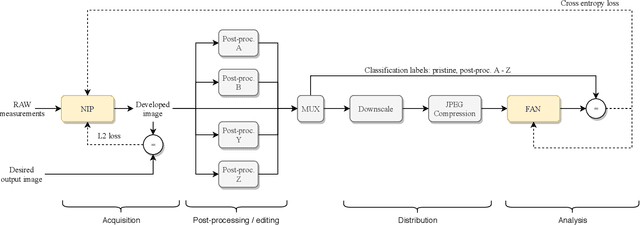

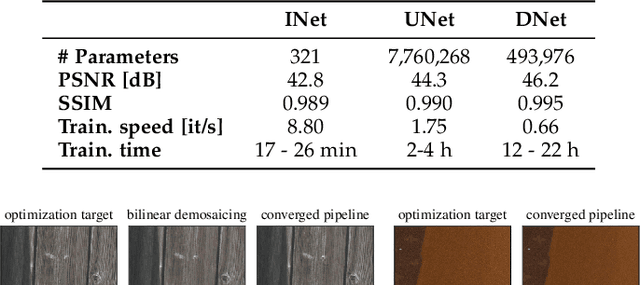

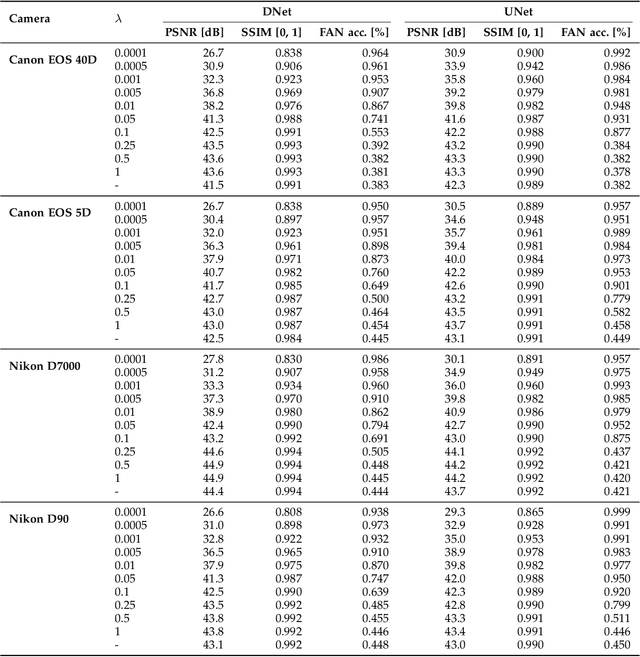

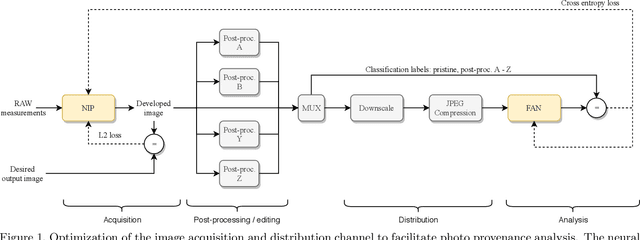

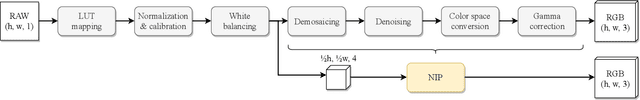

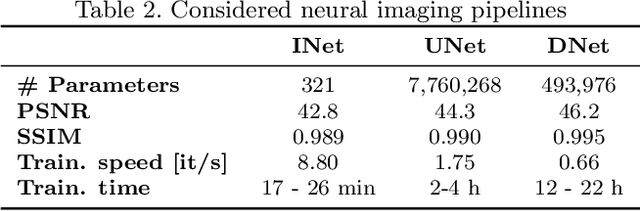

Abstract: Forensic analysis of digital photographs relies on intrinsic statistical traces introduced at the time of their acquisition or subsequent editing. Such traces are often removed by post-processing (e.g., down-sampling and re-compression applied upon distribution on the Web), which inhibits reliable provenance analysis. Increasing adoption of computational methods within digital cameras further complicates the process and renders explicit mathematical modeling infeasible. While this trend challenges forensic analysis even in near-acquisition conditions, it also creates new opportunities. This paper explores end-to-end optimization of the entire image acquisition and distribution workflow to facilitate reliable forensic analysis at the end of the distribution channel, where state-of-the-art forensic techniques fail. We demonstrate that a neural network can be trained to replace the entire photo development pipeline, and jointly optimized for high-fidelity photo rendering and reliable provenance analysis. Such an optimized neural imaging pipeline allowed us to increase image manipulation detection accuracy from approximately 45% to over 90%. The network learns to introduce carefully crafted artifacts, akin to digital watermarks, which facilitate subsequent manipulation detection. Analysis of performance trade-offs indicates that most of the gains can be obtained with only minor distortion. The findings encourage further research towards building more reliable imaging pipelines with explicit provenance-guaranteeing properties.

Content Authentication for Neural Imaging Pipelines: End-to-end Optimization of Photo Provenance in Complex Distribution Channels

Dec 04, 2018

Abstract: Forensic analysis of digital photo provenance relies on intrinsic traces left in the photograph at the time of its acquisition. Such analysis becomes unreliable after heavy post-processing, such as down-sampling and re-compression applied upon distribution on the Web. This paper explores end-to-end optimization of the entire image acquisition and distribution workflow to facilitate reliable forensic analysis at the end of the distribution channel. We demonstrate that neural imaging pipelines can be trained to replace the internals of digital cameras, and jointly optimized for high-fidelity photo development and reliable provenance analysis. In our experiments, the proposed approach increased image manipulation detection accuracy from 55% to nearly 98%. The findings encourage further research towards building more reliable imaging pipelines with explicit provenance-guaranteeing properties.
