Pavlos S. Bouzinis

StatAvg: Mitigating Data Heterogeneity in Federated Learning for Intrusion Detection Systems

May 20, 2024

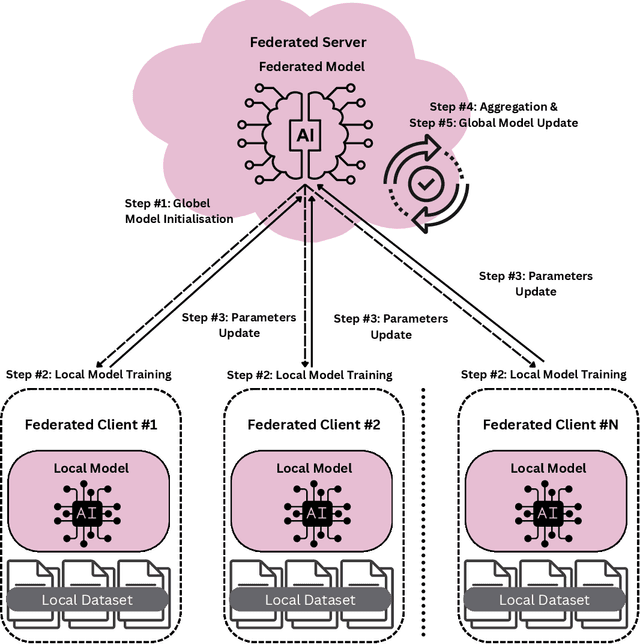

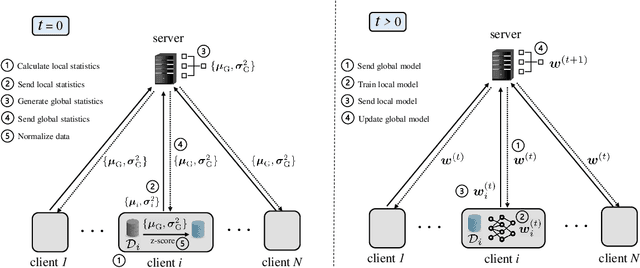

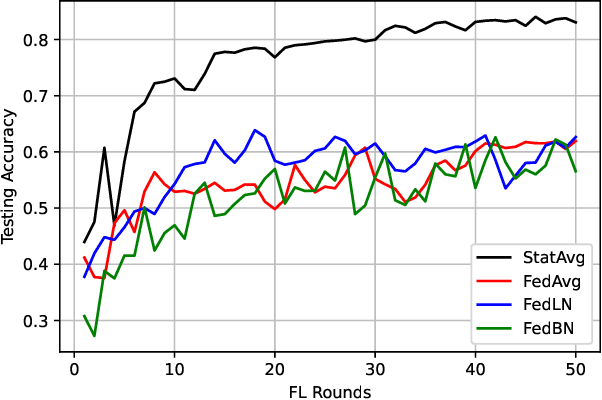

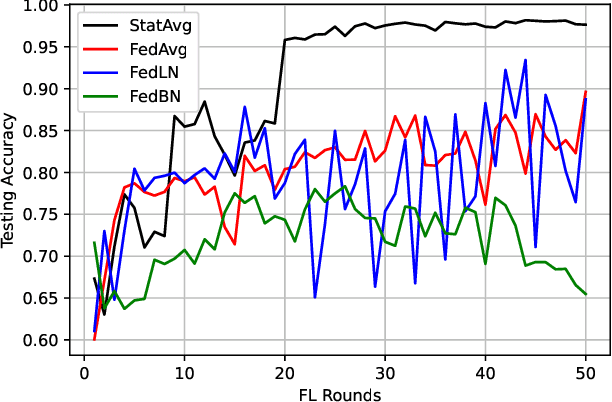

Abstract: Federated learning (FL) is a decentralized learning technique that enables participating devices to collaboratively build a shared Machine Learning (ML) or Deep Learning (DL) model without revealing their raw data to a third party. Due to its privacy-preserving nature, FL has attracted widespread attention for building Intrusion Detection Systems (IDS) in the field of cybersecurity. However, data heterogeneity across participating domains and entities poses significant challenges to the reliable implementation of an FL-based IDS. In this paper, we propose an effective method called Statistical Averaging (StatAvg) to alleviate non-independently and identically distributed (non-iid) features across the local data of FL clients. In particular, StatAvg allows the FL clients to share their individual data statistics with the server, which then aggregates this information to produce global statistics. These global statistics are shared back with the clients and used for universal data normalisation. Notably, StatAvg can be seamlessly integrated with any FL aggregation strategy, as it takes place before the actual FL training process. The proposed method is evaluated against baseline approaches using datasets for network-based and host-based Artificial Intelligence (AI)-powered IDS. The experimental results demonstrate the effectiveness of StatAvg in mitigating non-iid feature distributions across the FL clients compared to the baseline methods.
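
The abstract does not specify which statistics StatAvg exchanges. As a minimal sketch, assuming each client shares only its sample count and its per-feature mean and variance, the server can pool them into exact global moments, and every client can then apply the same z-score normalisation. The function names (`client_statistics`, `aggregate_statistics`, `normalise`) and the synthetic client data are hypothetical illustrations, not the paper's implementation.

```python
import numpy as np

def client_statistics(x):
    """What a client shares instead of raw data (assumed): sample count,
    per-feature mean and per-feature (population) variance."""
    return x.shape[0], x.mean(axis=0), x.var(axis=0)

def aggregate_statistics(stats):
    """Server: pool per-client statistics into a global mean and variance."""
    counts = np.array([n for n, _, _ in stats], dtype=float)
    means = np.stack([m for _, m, _ in stats])
    variances = np.stack([v for _, _, v in stats])
    weights = counts[:, None] / counts.sum()          # each client weighted by its sample count
    global_mean = (weights * means).sum(axis=0)
    # Pool second moments E[x^2] = var + mean^2, then subtract the global mean squared.
    second_moment = (weights * (variances + means ** 2)).sum(axis=0)
    global_var = second_moment - global_mean ** 2
    return global_mean, global_var

def normalise(x, global_mean, global_var, eps=1e-8):
    """Client: z-score normalisation with the shared global statistics."""
    return (x - global_mean) / np.sqrt(global_var + eps)

# Three synthetic clients with deliberately different feature distributions.
rng = np.random.default_rng(0)
clients = [rng.normal(loc=shift, scale=scale, size=(n, 3))
           for shift, scale, n in [(0.0, 1.0, 200), (5.0, 2.0, 50), (-3.0, 0.5, 120)]]

stats = [client_statistics(x) for x in clients]      # only these leave the clients
mu, var = aggregate_statistics(stats)
normalised = [normalise(x, mu, var) for x in clients]
```

In this sketch the pooled moments equal those of the concatenated data, so every client normalises with identical global parameters while no raw samples leave the device, and the step can run before whichever aggregation strategy is used for training.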

Accelerating Distributed Optimization via Over-the-Air Computing

Dec 28, 2022

Abstract: Distributed optimization is ubiquitous in emerging applications such as robust sensor network control, smart grid management, machine learning, resource slicing, and localization. However, the extensive data exchange between local and central nodes may cause a severe communication bottleneck. To overcome this challenge, over-the-air computing (AirComp) is a promising medium access technology that exploits the superposition property of the wireless multiple access channel (MAC) and offers significant bandwidth savings. In this work, we propose an AirComp framework for general distributed convex optimization problems. Specifically, a distributed primal-dual (DPD) subgradient method is utilized for the optimization procedure. Under general assumptions, we prove that DPD-AirComp can asymptotically achieve zero expected constraint violation. Therefore, DPD-AirComp ensures the feasibility of the original problem despite the presence of channel fading and additive noise. Moreover, with proper power control of the users' signals, the expected non-zero optimality gap can also be mitigated. Two practical applications of the proposed framework are presented, namely smart grid management and wireless resource allocation. Finally, numerical results confirm the excellent performance of DPD-AirComp and show that it converges an order of magnitude faster than a digital orthogonal multiple access scheme, specifically time division multiple access (TDMA).
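
To illustrate how a primal-dual iteration can consume an over-the-air sum, the sketch below uses a toy resource-sharing problem (hypothetical, not taken from the paper): each of ten users chooses x_i to minimise (x_i - a_i)^2 while the users jointly satisfy sum_i x_i <= C. Users take primal steps locally; the server needs only sum_i x_i to update the dual variable λ, and it obtains that sum from a single simultaneous transmission. The real-valued Rayleigh fading, perfect channel inversion, noise level and step sizes are all assumptions; the paper's power control and step-size rules may differ.

```python
import numpy as np

def aircomp_sum(values, rng, noise_std):
    """Over-the-air sum of all users' values in one channel use.
    Assumptions: real-valued Rayleigh fading magnitudes h_i known at each
    transmitter, and perfect channel inversion p_i = 1 / h_i, so the
    superposition sum_i h_i p_i x_i reduces to sum_i x_i plus receiver noise."""
    h = rng.rayleigh(scale=1.0, size=values.shape)
    p = 1.0 / h
    return float(np.sum(h * p * values) + rng.normal(scale=noise_std))

def dpd_aircomp(a, capacity, steps=2000, alpha=0.1, beta=0.05,
                x_max=2.0, noise_std=0.1, seed=0):
    """Toy primal-dual (sub)gradient loop for
        minimise   sum_i (x_i - a_i)**2
        subject to sum_i x_i <= capacity,  0 <= x_i <= x_max,
    where the server learns sum_i x_i only through aircomp_sum."""
    rng = np.random.default_rng(seed)
    x = np.zeros_like(a, dtype=float)
    lam = 0.0
    history = []
    for _ in range(steps):
        total = aircomp_sum(x, rng, noise_std)        # noisy over-the-air constraint value
        # Each user: projected gradient step on its own Lagrangian term.
        x_next = np.clip(x - alpha * (2.0 * (x - a) + lam), 0.0, x_max)
        # Server: projected dual ascent on the coupling constraint.
        lam = max(0.0, lam + beta * (total - capacity))
        x = x_next
        history.append(x)
    x_avg = np.mean(history[steps // 2:], axis=0)    # average of the later iterates
    return x_avg, lam

a = np.ones(10)
x_avg, lam = dpd_aircomp(a, capacity=5.0)
print(x_avg.round(3), round(lam, 3))
```

With a_i = 1 and C = 5, the KKT conditions give x_i = 0.5 and λ = 1; the averaged iterates should settle close to these values even though every constraint evaluation is corrupted by receiver noise.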

Wireless Quantized Federated Learning: A Joint Computation and Communication Design

Mar 11, 2022

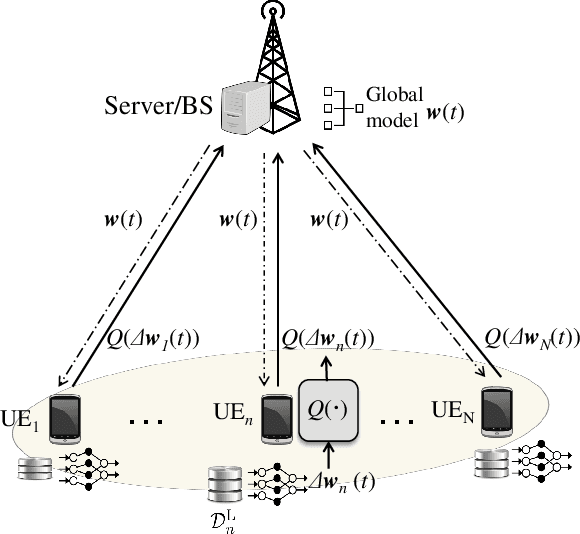

Abstract: Recently, federated learning (FL) has attracted widespread attention as a promising decentralized machine learning approach that provides privacy and low delay. However, the communication bottleneck remains an issue that must be resolved for the efficient deployment of FL over wireless networks. In this paper, we aim to minimize the total convergence time of FL by quantizing the local model parameters prior to uplink transmission. More specifically, we first present a convergence analysis of the FL algorithm with stochastic quantization, which reveals the impact of the quantization error on the convergence rate. We then jointly optimize the computing resources, the communication resources, and the number of quantization bits in order to minimize the convergence time across all global rounds, subject to energy and quantization error requirements that stem from the convergence analysis. The impact of the quantization error on the convergence time is evaluated, and the trade-off between model accuracy and timely execution is revealed. Moreover, the proposed method is shown to converge faster than baseline schemes. Finally, useful insights for the selection of the quantization error tolerance are provided.
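
The abstract does not give the quantizer's exact form. A common choice, assumed here, is an unbiased stochastic quantizer with 2^b evenly spaced levels between the minimum and maximum of the local model vector; each entry is rounded up or down at random with probabilities that make the quantized value correct in expectation. The helper `uplink_bits` is a hypothetical payload estimate that assumes the two range endpoints are sent as 32-bit floats.

```python
import numpy as np

def stochastic_quantize(w, bits, rng):
    """Assumed unbiased stochastic quantizer: 2**bits evenly spaced levels on
    [w.min(), w.max()], with random rounding so that E[Q(w)] = w."""
    intervals = 2 ** bits - 1
    lo, hi = float(w.min()), float(w.max())
    if hi == lo:                                  # constant vector: nothing to quantize
        return w.copy()
    step = (hi - lo) / intervals
    scaled = (w - lo) / step                      # position measured in quantization steps
    lower = np.floor(scaled)
    prob_up = scaled - lower                      # round up with probability = fractional part
    q = lower + (rng.random(w.shape) < prob_up)
    q = np.clip(q, 0, intervals)                  # guard against floating-point overshoot at hi
    return lo + q * step

def uplink_bits(num_params, bits, header_bits=64):
    """Hypothetical payload size: one b-bit index per parameter plus the
    range endpoints (assumed to be two 32-bit floats)."""
    return num_params * bits + header_bits

rng = np.random.default_rng(1)
w = rng.normal(size=1000)                         # stand-in for a local model update
q = stochastic_quantize(w, 3, rng)
mse_2 = np.mean((stochastic_quantize(w, 2, rng) - w) ** 2)
mse_8 = np.mean((stochastic_quantize(w, 8, rng) - w) ** 2)
print(uplink_bits(w.size, 3), uplink_bits(w.size, 8), mse_2, mse_8)
```

Fewer bits shrink the uplink payload, and hence the transmission time, but raise the quantization error; this is the accuracy-versus-latency trade-off the abstract refers to.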

Wireless Federated Learning (WFL) for 6G Networks -- Part II: The Compute-then-Transmit NOMA Paradigm

Apr 24, 2021

Abstract: As discussed in the first part of this work, the use of advanced multiple access protocols and the joint optimization of communication and computing resources can reduce the delay of wireless federated learning (WFL), which is of paramount importance for the efficient integration of WFL into the sixth generation of wireless networks (6G). To this end, in this second part we introduce and optimize a novel communication protocol for WFL networks based on non-orthogonal multiple access (NOMA). More specifically, we introduce the Compute-then-Transmit NOMA (CT-NOMA) protocol, in which users complete their local model training concurrently and then simultaneously transmit the trained parameters to the central server. Moreover, two different detection schemes for mitigating inter-user interference in NOMA are considered and evaluated, corresponding to fixed and variable decoding orders during the successive interference cancellation process. Furthermore, the computation and communication resources are jointly optimized for both schemes with the aim of minimizing the total delay of a WFL communication round. Finally, simulation results verify the effectiveness of CT-NOMA in terms of delay reduction compared to a benchmark based on time-division multiple access.
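
As one plausible reading of the two detection schemes, the sketch below computes uplink NOMA rates under successive interference cancellation (SIC) for a given decoding order, takes the round delay as a common local-training time plus the slowest simultaneous upload, and compares a fixed order (hypothetically, strongest received signal decoded first) against the best order found by exhaustive search. All numbers, power levels and function names are illustrative assumptions, not the paper's system model or resource optimizer.

```python
import itertools
import math

def sic_rates(powers, gains, order, bandwidth, noise):
    """Uplink NOMA rates (bit/s) under SIC. Users are decoded in `order`;
    each user is interfered with only by users decoded after it, because
    earlier users have already been cancelled."""
    rates = [0.0] * len(powers)
    for pos, k in enumerate(order):
        interference = sum(powers[j] * gains[j] for j in order[pos + 1:])
        sinr = powers[k] * gains[k] / (interference + noise)
        rates[k] = bandwidth * math.log2(1.0 + sinr)
    return rates

def round_delay(compute_time, model_bits, powers, gains, order, bandwidth, noise):
    """Compute-then-transmit round: all users finish training at the same
    time, then upload simultaneously; the slowest upload ends the round."""
    rates = sic_rates(powers, gains, order, bandwidth, noise)
    return compute_time + max(model_bits / r for r in rates)

def fixed_order(powers, gains):
    # Hypothetical fixed rule: decode the strongest received signal first.
    return sorted(range(len(powers)), key=lambda k: powers[k] * gains[k], reverse=True)

def best_variable_order(compute_time, model_bits, powers, gains, bandwidth, noise):
    # Exhaustive search over decoding orders; only practical for a few users.
    return min(itertools.permutations(range(len(powers))),
               key=lambda o: round_delay(compute_time, model_bits, powers, gains, o, bandwidth, noise))

powers = [1.0, 1.0, 1.0]            # transmit powers (illustrative units)
gains = [1.0, 0.5, 0.1]             # channel power gains
noise, bandwidth = 0.1, 1e6         # noise power and bandwidth in Hz
model_bits, compute_time = 1e6, 0.05

fixed = fixed_order(powers, gains)
fixed_rates = sic_rates(powers, gains, fixed, bandwidth, noise)
fixed_delay = round_delay(compute_time, model_bits, powers, gains, fixed, bandwidth, noise)
best = best_variable_order(compute_time, model_bits, powers, gains, bandwidth, noise)
best_delay = round_delay(compute_time, model_bits, powers, gains, best, bandwidth, noise)
print(fixed, fixed_delay, best, best_delay)
```

Whatever the order, the SIC rates add up to B log2(1 + sum_k p_k g_k / N0), so changing the order only redistributes rate among users; in a compute-then-transmit round that redistribution matters because the slowest upload sets the delay.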

Wireless Federated Learning for 6G Networks -- Part I: Research Challenges and Future Trends

Apr 24, 2021

Abstract: Conventional machine learning techniques are conducted in a centralized manner. Recently, the massive volume of generated wireless data, privacy concerns, and the increasing computing capabilities of wireless end-devices have led to the emergence of a promising decentralized solution, termed Wireless Federated Learning (WFL). In this first part of a two-part paper, we present the application of WFL in the sixth generation of wireless networks (6G), which is envisioned as an integrated communication and computing platform. After analyzing the key concepts of WFL, we discuss the core challenges imposed on WFL by the wireless (or mobile communication) environment. Finally, we shed light on future directions of WFL, aiming at a constructive integration of FL into future wireless networks.
