Pavel Kalinin

Batch-Softmax Contrastive Loss for Pairwise Sentence Scoring Tasks

Oct 10, 2021

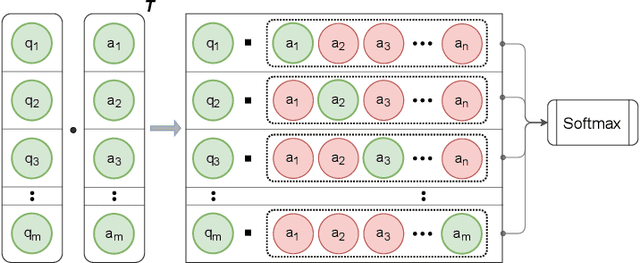

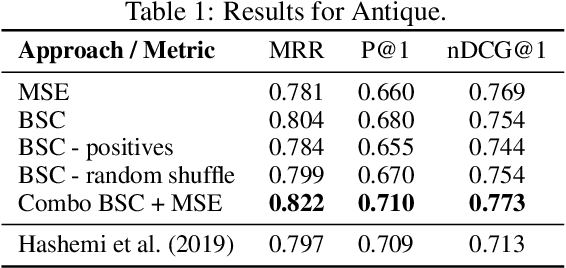

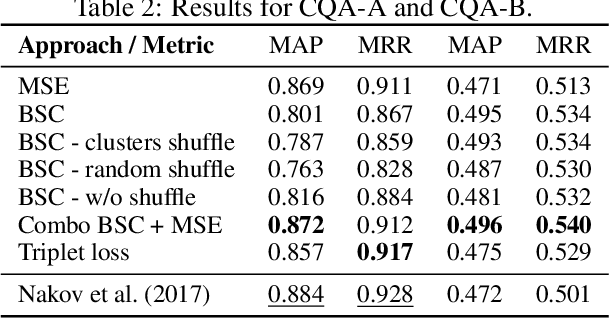

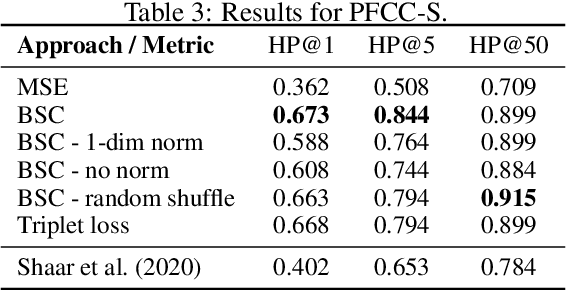

Abstract: The use of contrastive loss for representation learning has become prominent in computer vision, and it is now getting attention in Natural Language Processing (NLP). Here, we explore the idea of using a batch-softmax contrastive loss when fine-tuning large-scale pre-trained transformer models to learn better task-specific sentence embeddings for pairwise sentence scoring tasks. We introduce and study a number of variations in the calculation of the loss as well as in the overall training procedure; in particular, we find that data shuffling can be quite important. Our experimental results show sizable improvements on a number of datasets and pairwise sentence scoring tasks including classification, ranking, and regression. Finally, we offer detailed analysis and discussion, which should be useful for researchers aiming to explore the utility of contrastive loss in NLP.
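The abstract does not spell out the loss itself, so the following is only a minimal sketch of one common formulation of a batch-softmax contrastive loss: each left sentence is scored against every right sentence in the batch, and cross-entropy pushes the matching pair (the diagonal) above the in-batch negatives. The function name, the cosine similarity, and the `temperature` value are assumptions made for illustration and are not taken from the paper.

```python
import math


def _normalize(vector):
    """Scale a vector to unit length so dot products are cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def batch_softmax_contrastive_loss(left, right, temperature=0.05):
    """Mean cross-entropy over a batch of paired embeddings.

    left[i] and right[i] are assumed to be embeddings of a matching sentence
    pair; every right[j] with j != i acts as an in-batch negative for left[i].
    The temperature is a hypothetical default, not a value from the paper.
    """
    left = [_normalize(v) for v in left]
    right = [_normalize(v) for v in right]
    batch_size = len(left)
    total = 0.0
    for i in range(batch_size):
        # Similarity of left[i] to every right-hand embedding in the batch.
        logits = [
            sum(a * b for a, b in zip(left[i], right[j])) / temperature
            for j in range(batch_size)
        ]
        # Numerically stable log-sum-exp for the softmax denominator.
        max_logit = max(logits)
        log_z = max_logit + math.log(sum(math.exp(s - max_logit) for s in logits))
        # Negative log-probability assigned to the true partner (index i).
        total += log_z - logits[i]
    return total / batch_size


# Toy batch: each right-hand vector is a slightly perturbed copy of its partner.
left_batch = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
right_batch = [[0.9, 0.1], [0.1, 0.9], [1.0, 1.1]]

aligned_loss = batch_softmax_contrastive_loss(left_batch, right_batch)
# Rotating the right-hand side breaks the pairing, so the loss should rise.
mismatched_loss = batch_softmax_contrastive_loss(
    left_batch, right_batch[-1:] + right_batch[:-1]
)
```

Because the negatives come from whatever else is in the batch, the composition of each batch changes the loss, which may be one reason the abstract singles out data shuffling as important.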

Towards Practical Credit Assignment for Deep Reinforcement Learning

Jun 08, 2021

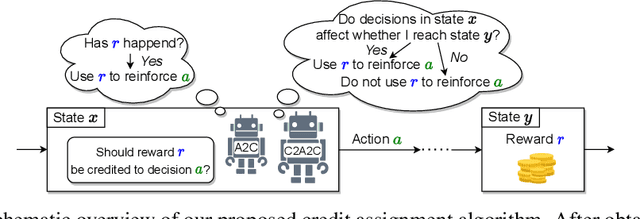

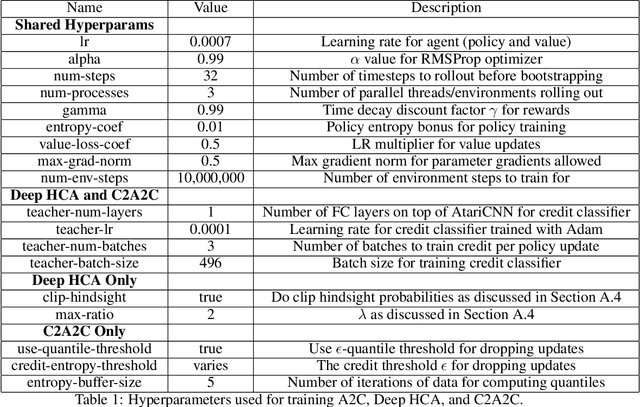

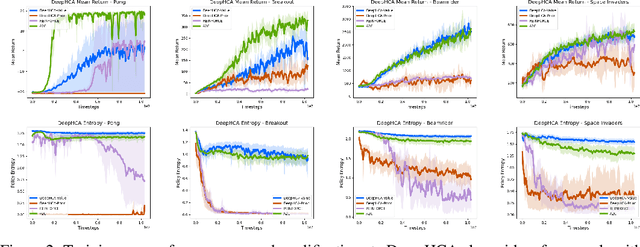

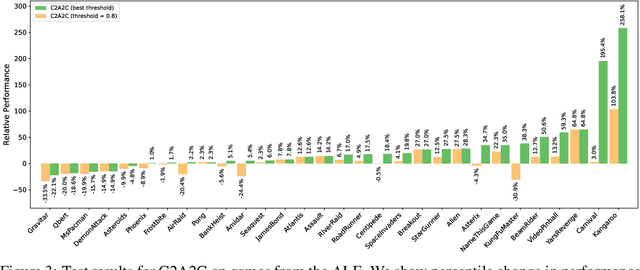

Abstract: Credit assignment, the problem of measuring an action's influence on future rewards, is a fundamental problem in reinforcement learning. Improvements in credit assignment methods have the potential to boost the performance of RL algorithms on many tasks, but thus far have not seen widespread adoption. Recently, a family of methods called Hindsight Credit Assignment (HCA) was proposed; these methods explicitly assign credit to actions in hindsight, based on the probability that the action led to an observed outcome. This approach is appealing as a means to more efficient data usage, but it remains a largely theoretical idea applicable to a limited set of tabular RL tasks, and it is unclear how to extend HCA to deep RL environments. In this work, we explore the use of HCA-style credit in a deep RL context. We first describe the limitations of existing HCA algorithms in deep RL, then propose several theoretically justified modifications to overcome them. Based on this exploration, we present a new algorithm, Credit-Constrained Advantage Actor-Critic (C2A2C), which ignores policy updates for actions that do not affect future outcomes, based on credit in hindsight, while updating the policy as normal for those that do. We find that C2A2C outperforms Advantage Actor-Critic (A2C) on the Arcade Learning Environment (ALE) benchmark, showing broad improvements over A2C and motivating further work on credit-constrained update rules for deep RL methods.
