Paulo Garcia

CENTRA, FEUP

Architecting Large Action Models for Human-in-the-Loop Intelligent Robots

Dec 12, 2025

Abstract: The realization of intelligent robots, operating autonomously and interacting with other intelligent agents, human or artificial, requires the integration of environment perception, reasoning, and action. Classic Artificial Intelligence techniques for this purpose, focusing on symbolic approaches, long ago hit the scalability wall on compute and memory costs. Advances in Large Language Models in the past decade (neural approaches) have resulted in unprecedented displays of capability, at the cost of control, explainability, and interpretability. Large Action Models aim at extending Large Language Models to encompass the full perception, reasoning, and action cycle; however, they typically require substantially more comprehensive training and suffer from the same deficiencies in reliability. Here, we show it is possible to build competent Large Action Models by composing off-the-shelf foundation models, and that their control, interpretability, and explainability can be effected by incorporating symbolic wrappers and associated verification on their outputs, achieving verifiable neuro-symbolic solutions for intelligent robots. Our experiments on a multi-modal robot demonstrate that Large Action Model intelligence does not require massive end-to-end training, but can be achieved by integrating efficient perception models with a logic-driven core. We find that driving action execution through the generation of Planning Domain Definition Language (PDDL) code enables a human-in-the-loop verification stage that effectively mitigates action hallucinations. These results can support practitioners in the design and development of robotic Large Action Models across novel industries, and shed light on the ongoing challenges that must be addressed to ensure safety in the field.
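As a rough illustration of what a symbolic wrapper with output verification could look like, the sketch below checks a model-proposed plan against a STRIPS-style action table before anything is executed. It is not the paper's implementation: the `DOMAIN` table, the action and fact names, and `verify_plan` are all hypothetical, and a real system would presumably parse the domain from PDDL and show the verdict to a human reviewer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A STRIPS-style action: facts it requires, adds, and deletes."""
    preconditions: frozenset
    add_effects: frozenset
    del_effects: frozenset


# Hypothetical toy domain (assumption); not taken from the paper.
DOMAIN = {
    "move_to_object": Action(frozenset(), frozenset({"at_object"}), frozenset()),
    "pick": Action(frozenset({"hand_empty", "at_object"}),
                   frozenset({"holding"}),
                   frozenset({"hand_empty"})),
    "place": Action(frozenset({"holding"}),
                    frozenset({"hand_empty"}),
                    frozenset({"holding"})),
}


def verify_plan(plan, initial_state, domain=DOMAIN):
    """Symbolically simulate the plan; return (ok, message)."""
    state = set(initial_state)
    for step, name in enumerate(plan):
        action = domain.get(name)
        if action is None:
            # The generator proposed an action the domain does not define.
            return False, f"step {step}: unknown action '{name}'"
        missing = action.preconditions - state
        if missing:
            return False, f"step {step}: '{name}' is missing {sorted(missing)}"
        # Apply the action's effects to the symbolic state.
        state = (state - action.del_effects) | action.add_effects
    return True, "plan is consistent with the domain"


if __name__ == "__main__":
    print(verify_plan(["move_to_object", "pick", "place"], {"hand_empty"}))
    print(verify_plan(["pick"], {"hand_empty"}))
    print(verify_plan(["teleport"], {"hand_empty"}))
```

A plan that names an undefined action, or applies an action before its preconditions hold, is rejected with a message pointing at the offending step, which is the kind of evidence a human-in-the-loop stage could review.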

Dynamic Reconfiguration of Robotic Swarms: Coordination and Control for Precise Shape Formation

Nov 14, 2025

Abstract: Coordination of movement and configuration in robotic swarms is a challenging endeavor. Deciding when and where each individual robot must move is a computationally complex problem. The challenge is further exacerbated by difficulties inherent to physical systems, such as measurement error and control dynamics. Thus, how to best determine the optimal path for each robot, when moving from one configuration to another, and how to best perform such determination and effect corresponding motion remains an open problem. In this paper, we show an algorithm for such coordination of robotic swarms. Our methods allow seamless transition from one configuration to another, leveraging geometric formulations that are mapped to the physical domain through appropriate control, localization, and mapping techniques. This paves the way for novel applications of robotic swarms by enabling more sophisticated distributed behaviors.

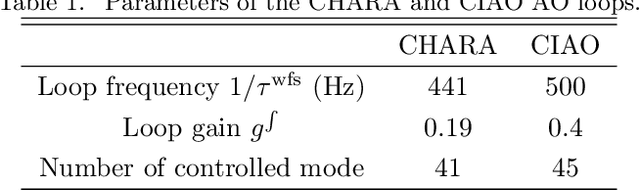

Open loop calibration and closed loop non-perturbative estimation of the lateral errors of an adaptive optics system: examples with GRAVITY+ and CHARA experimental data

Oct 09, 2024

Abstract: The performance of an adaptive optics (AO) system is directly linked to the quality of its alignment. During instrument calibration, fast open-loop tools with a large capture range are necessary to quickly assess the system misalignment and to drive it towards a state in which the AO loop can be closed. During operation, complex systems are prone to misalignments (mechanical flexions, rotation of optical elements, etc.) that potentially degrade AO performance, creating a need for a monitoring tool to track their drift. In this work, we first present an improved perturbative method to quickly assess large lateral errors in open loop. It uses the spatial correlation of the measured interaction matrix of a limited number of 2D spatial modes with a synthetic model. Then, we introduce a novel solution to finely measure and correct these lateral errors via the closed-loop telemetry. Being non-perturbative, this method does not impact the science output of the instrument. It is based on the temporal correlation of 2D spatial frequencies in the deformable mirror commands. It is model-free (no need for an interaction matrix model) and sparse in Fourier space, making it fast and easily scalable to complex systems such as future extremely large telescopes. Finally, we present some results obtained on the development bench of the GRAVITY+ extreme AO system (Cartesian grid, 1432 actuators). In addition, we show with on-sky results gathered with CHARA and GRAVITY/CIAO that the method is adaptable to non-conventional AO geometries (hexagonal grids, 60 actuators).

Cyber Physical Games

Jul 08, 2024

Abstract: We describe a formulation of multi-agents operating within a Cyber-Physical System, resulting in collaborative or adversarial games. We show that the non-determinism inherent in the communication medium between agents and the underlying physical environment gives rise to environment evolution that is a probabilistic function of agents' strategies. We name these emergent properties Cyber Physical Games and study their properties. We present an algorithmic model that determines the most likely system evolution, approximating Cyber Physical Games through Probabilistic Finite State Automata, and evaluate it on collaborative and adversarial versions of the Iterated Boolean Game, comparing theoretical results with simulated ones. Results support the validity of the proposed model, and suggest several required research directions to continue evolving our understanding of Cyber Physical Systems, as well as how to best design agents that must operate within such environments.
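To make the idea of a most likely evolution under a probabilistic automaton concrete, here is a minimal sketch that propagates a state distribution through a fixed transition table and reports the most probable state at each step. The two-state table, its probabilities, and the function names are illustrative assumptions, not the model from the paper. Note that it tracks the per-step marginal mode, which is simpler than (and can differ from) the single most likely path.

```python
def step(distribution, transitions):
    """Propagate a state distribution through one probabilistic transition."""
    nxt = {}
    for state, p in distribution.items():
        for target, q in transitions[state].items():
            nxt[target] = nxt.get(target, 0.0) + p * q
    return nxt


def most_likely_evolution(start, transitions, n_steps):
    """Return the most probable state per step and the final distribution."""
    dist = {start: 1.0}
    path = []
    for _ in range(n_steps):
        dist = step(dist, transitions)
        path.append(max(dist, key=dist.get))
    return path, dist


# Hypothetical environment (assumption): each row sums to 1 and stands for
# the combined effect of the agents' strategies and a lossy channel.
TRANSITIONS = {
    "A": {"A": 0.9, "B": 0.1},
    "B": {"A": 0.5, "B": 0.5},
}

if __name__ == "__main__":
    path, dist = most_likely_evolution("A", TRANSITIONS, 2)
    print(path, dist)
```

In a fuller model, the transition table would depend on the joint action chosen by the agents at each step; a fixed table is used here only to keep the sketch short.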

Agents Need Not Know Their Purpose

Feb 15, 2024

Abstract: Ensuring artificial intelligence behaves in such a way that is aligned with human values is commonly referred to as the alignment challenge. Prior work has shown that rational agents, behaving in such a way that maximizes a utility function, will inevitably behave in such a way that is not aligned with human values, especially as their level of intelligence goes up. Prior work has also shown that there is no "one true utility function"; solutions must include a more holistic approach to alignment. This paper describes oblivious agents: agents that are architected in such a way that their effective utility function is an aggregation of known and hidden sub-functions. The hidden component, to be maximized, is internally implemented as a black box, preventing the agent from examining it. The known component, to be minimized, is knowledge of the hidden sub-function. Architectural constraints further influence how agent actions can evolve its internal environment model. We show that an oblivious agent, behaving rationally, constructs an internal approximation of designers' intentions (i.e., infers alignment), and, as a consequence of its architecture and effective utility function, behaves in such a way that maximizes alignment; i.e., maximizing the approximated intention function. We show that, paradoxically, it does this for whatever utility function is used as the hidden component and, in contrast with extant techniques, chances of alignment actually improve as agent intelligence grows.

Preserving Power Optimizations Across the High Level Synthesis of Distinct Application-Specific Circuits

Jan 15, 2024

Abstract: We evaluate the use of software interpretation to push High Level Synthesis of application-specific accelerators toward a higher level of abstraction. Our methodology is supported by a formal power consumption model that computes the power consumption of accelerator components, accurately predicting the power consumption of new designs from prior optimization estimations. We demonstrate how our approach simplifies the re-use of power optimizations across distinct designs, by leveraging the higher level of design abstraction, using two accelerators representative of the robotics domain, implemented through the Bambu High Level Synthesis tool. Results support the research hypothesis, achieving predictions accurate within +/- 1%.

On a Functional Definition of Intelligence

Dec 15, 2023

Abstract: Without an agreed-upon definition of intelligence, asking "is this system intelligent?" is an untestable question. This lack of consensus hinders research, and public perception, on Artificial Intelligence (AI), particularly since the rise of generative and large language models. Most work on precisely capturing what we mean by "intelligence" has come from the fields of philosophy, psychology, and cognitive science. Because these perspectives are intrinsically linked to intelligence as it is demonstrated by natural creatures, we argue such fields cannot, and will not, provide a sufficiently rigorous definition that can be applied to artificial means. Thus, we present an argument for a purely functional, black-box definition of intelligence, distinct from how that intelligence is actually achieved; focusing on the "what", rather than the "how". To achieve this, we first distinguish other related concepts (sentience, sensation, agency, etc.) from the notion of intelligence, particularly identifying how these concepts pertain to artificial intelligent systems. As a result, we achieve a formal definition of intelligence that is conceptually testable from only external observation, and that suggests intelligence is a continuous variable. We conclude by identifying challenges that still remain towards quantifiable measurement. This work provides a useful perspective for both the development of AI, and for public perception of the capabilities and risks of AI.

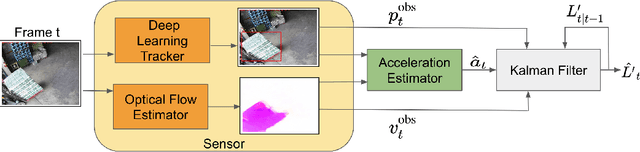

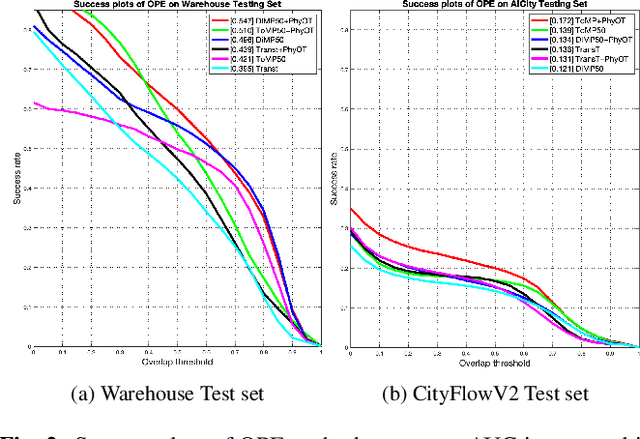

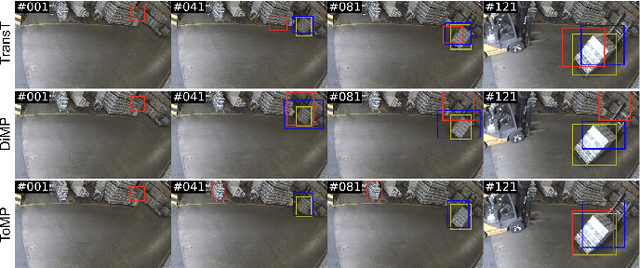

PhyOT: Physics-informed object tracking in surveillance cameras

Dec 14, 2023

Abstract: While deep learning has been very successful in computer vision, real-world operating conditions such as lighting variation, background clutter, or occlusion hinder its accuracy across several tasks. Prior work has shown that hybrid models (combining neural networks and heuristics/algorithms) can outperform vanilla deep learning for several computer vision tasks, such as classification or tracking. We consider the case of object tracking, and evaluate a hybrid model (PhyOT) that conceptualizes deep neural networks as "sensors" in a Kalman filter setup, where prior knowledge, in the form of Newtonian laws of motion, is used to fuse sensor observations and to perform improved estimations. Our experiments combine three neural networks, performing position, indirect velocity and acceleration estimation, respectively, and evaluate such a formulation on two benchmark datasets: a warehouse security camera dataset that we collected and annotated and a traffic camera open dataset. Results suggest that our PhyOT can track objects in extreme conditions in which state-of-the-art deep neural networks fail, while its performance in general cases does not degrade significantly from that of existing deep learning approaches. Results also suggest that our PhyOT components are generalizable and transferable.
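The Kalman filter setup described above can be sketched in one dimension as follows. This is a minimal illustration under stated assumptions, not the PhyOT implementation: the three networks are replaced by direct, independent measurements of position, velocity, and acceleration, the motion model is constant acceleration, and the noise values `q` and `r` and all function names are made up for the example.

```python
def mat_mul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a):
    return [list(row) for row in zip(*a)]


def predict(x, P, dt, q):
    """Newtonian (constant-acceleration) prediction of state and covariance."""
    F = [[1.0, dt, 0.5 * dt * dt],
         [0.0, 1.0, dt],
         [0.0, 0.0, 1.0]]
    x_new = [sum(F[i][k] * x[k] for k in range(3)) for i in range(3)]
    P_new = mat_mul(mat_mul(F, P), transpose(F))
    for i in range(3):
        P_new[i][i] += q  # process noise (assumed diagonal)
    return x_new, P_new


def update(x, P, z, r):
    """Fuse the three 'sensor' readings one component at a time.

    Sequential scalar updates are equivalent to a joint update when the
    measurement noises are independent, and avoid a matrix inverse.
    """
    for i in range(3):
        s = P[i][i] + r[i]                      # innovation variance
        gain = [P[j][i] / s for j in range(3)]  # Kalman gain column
        innovation = z[i] - x[i]
        x = [x[j] + gain[j] * innovation for j in range(3)]
        row_i = list(P[i])
        P = [[P[j][k] - gain[j] * row_i[k] for k in range(3)]
             for j in range(3)]
    return x, P


def track(measurements, dt=1.0, q=0.01, r=(1.0, 1.0, 1.0)):
    """Run the filter over (position, velocity, acceleration) readings."""
    x = [0.0, 0.0, 0.0]
    P = [[10.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
    for z in measurements:
        x, P = predict(x, P, dt, q)
        x, P = update(x, P, z, r)
    return x


if __name__ == "__main__":
    # Noise-free readings of an object accelerating at 2 units per step^2.
    readings = [(0.5 * 2.0 * t * t, 2.0 * t, 2.0) for t in range(1, 11)]
    print(track(readings))
```

The design point the sketch tries to convey is that the physics lives in `predict`, while each network only contributes a noisy reading in `update`, so a temporarily unreliable reading can be down-weighted through its entry in `r`.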
