PhyOT: Physics-informed object tracking in surveillance cameras


Dec 14, 2023

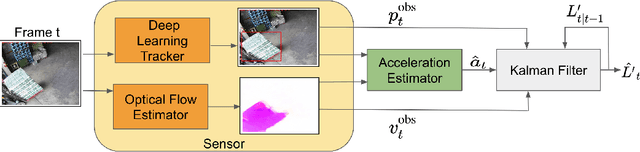

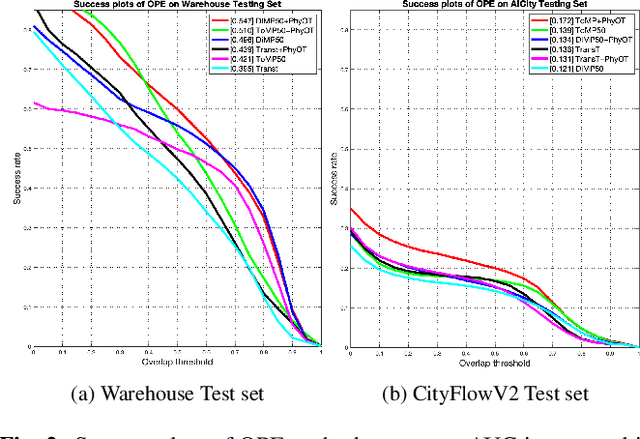

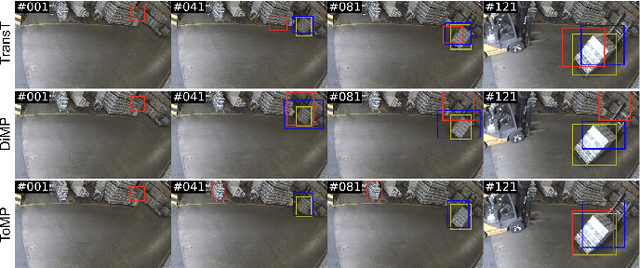

While deep learning has been very successful in computer vision, real-world operating conditions such as lighting variation, background clutter, or occlusion hinder its accuracy across several tasks. Prior work has shown that hybrid models, which combine neural networks with heuristics or algorithms, can outperform vanilla deep learning on several computer vision tasks, such as classification or tracking. We consider the case of object tracking and evaluate a hybrid model (PhyOT) that conceptualizes deep neural networks as "sensors" in a Kalman filter setup, where prior knowledge, in the form of Newtonian laws of motion, is used to fuse sensor observations and produce improved estimates. Our experiments combine three neural networks, performing position, indirect velocity, and acceleration estimation, respectively, and evaluate this formulation on two benchmark datasets: a warehouse security camera dataset that we collected and annotated, and an open traffic camera dataset. Results suggest that PhyOT can track objects in extreme conditions where state-of-the-art deep neural networks fail, while its performance in general cases does not degrade significantly relative to existing deep learning approaches. Results also suggest that the PhyOT components are generalizable and transferable.
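The sensor-fusion idea in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: it assumes a 1-D constant-acceleration state `[position, velocity, acceleration]`, replaces the three neural networks with plain numeric readings, assumes each reading observes one state component directly with uncorrelated noise, and uses a simple diagonal process noise. All function names and parameter values here are hypothetical.

```python
# Illustrative sketch only: a 1-D Kalman filter with a Newtonian
# (constant-acceleration) motion model that fuses three stand-in "sensors".
# Names, noise values, and the direct-observation model are assumptions.

def mat_mul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def transpose(A):
    return [list(row) for row in zip(*A)]

def predict(x, P, dt, q):
    """Propagate state [p, v, a] and covariance P with Newtonian kinematics."""
    F = [[1.0, dt, 0.5 * dt * dt],
         [0.0, 1.0, dt],
         [0.0, 0.0, 1.0]]
    x_new = [sum(F[i][k] * x[k] for k in range(3)) for i in range(3)]
    P_new = mat_mul(mat_mul(F, P), transpose(F))
    for i in range(3):
        P_new[i][i] += q  # assumed diagonal process noise
    return x_new, P_new

def update_scalar(x, P, idx, z, r):
    """Fuse one scalar reading z of state component idx (noise variance r)."""
    s = P[idx][idx] + r                      # innovation variance
    K = [P[i][idx] / s for i in range(3)]    # Kalman gain column
    innovation = z - x[idx]
    x_new = [x[i] + K[i] * innovation for i in range(3)]
    P_new = [[P[i][j] - K[i] * P[idx][j] for j in range(3)] for i in range(3)]
    return x_new, P_new

def step(x, P, dt, readings, variances, q=1e-3):
    """One predict/update cycle; a reading of None means that sensor dropped out."""
    x, P = predict(x, P, dt, q)
    # Sequential scalar updates are valid because the noises are assumed uncorrelated.
    for idx, (z, r) in enumerate(zip(readings, variances)):
        if z is not None:
            x, P = update_scalar(x, P, idx, z, r)
    return x, P

def simulate(steps=50, dt=0.1):
    """Track a synthetic object (p0=0, v0=1, a=2); position is missing for 10 steps."""
    x = [0.0, 0.0, 0.0]
    P = [[10.0 if i == j else 0.0 for j in range(3)] for i in range(3)]
    truth = list(x)
    for k in range(1, steps + 1):
        t = k * dt
        truth = [t + t * t, 1.0 + 2.0 * t, 2.0]      # p = v0*t + 0.5*a*t^2
        z_pos = None if 20 <= k < 30 else truth[0]   # simulated occlusion
        x, P = step(x, P, dt, [z_pos, truth[1], truth[2]], [0.01, 0.04, 0.04])
    return x, truth

if __name__ == "__main__":
    estimate, truth = simulate()
    print("estimate:", estimate)
    print("truth:   ", truth)
```

In this sketch, when the position reading is unavailable the filter keeps propagating the state with the motion model and the remaining velocity and acceleration readings, which is the kind of behavior the abstract attributes to fusing sensor observations with prior physical knowledge.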
