Paul Young Joun Ha

A DRL-based Multiagent Cooperative Control Framework for CAV Networks: a Graphic Convolution Q Network

Oct 12, 2020

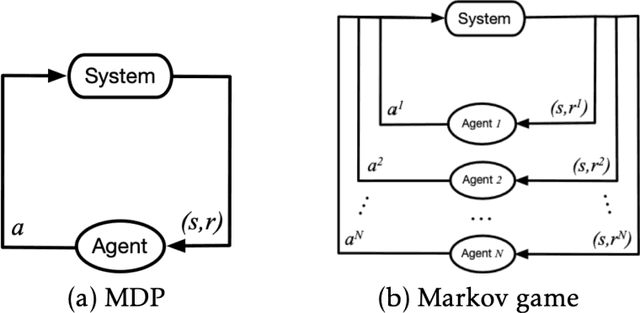

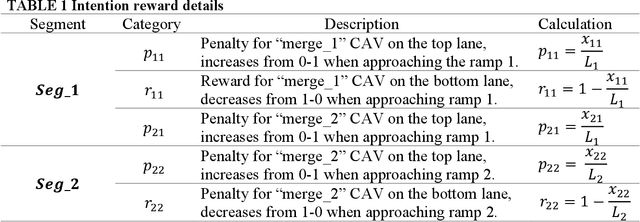

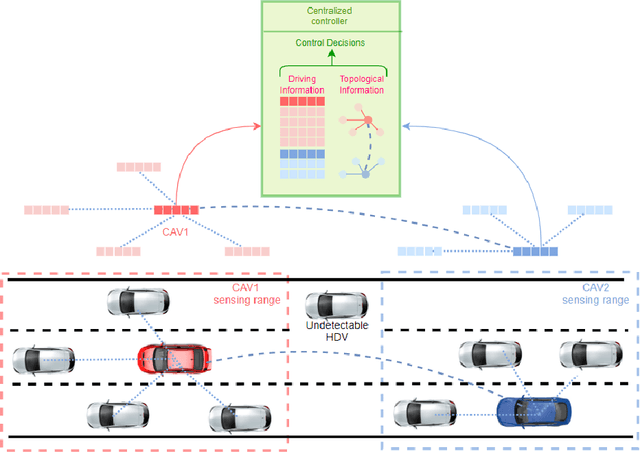

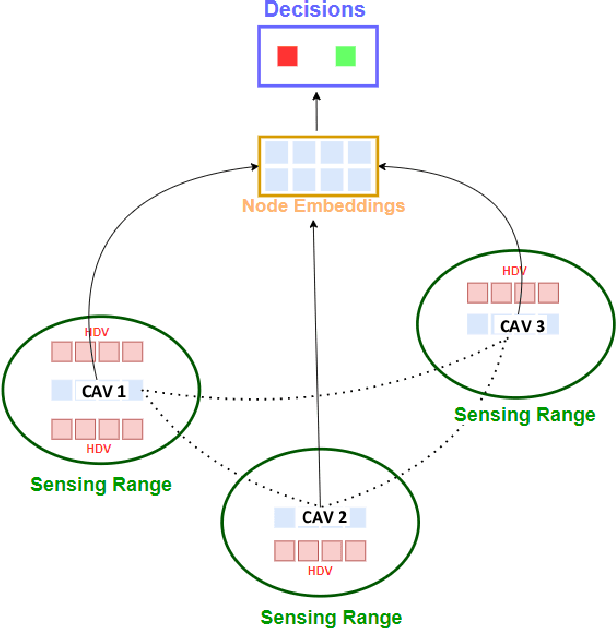

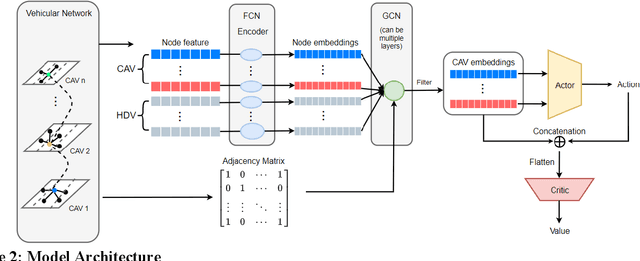

Abstract: A Connected Autonomous Vehicle (CAV) network can be defined as a collection of CAVs operating at different locations on a multilane corridor, which provides a platform to facilitate the dissemination of operational information as well as control instructions. Cooperation is crucial in CAV operating systems since it can greatly enhance operation in terms of safety and mobility, and high-level cooperation between CAVs can be expected through joint planning and control within the CAV network. However, due to the highly dynamic and combinatorial nature of a multiagent driving task, such as the dynamic number of agents (CAVs) and the exponentially growing joint action space, achieving cooperative control is NP-hard and cannot be governed by simple rule-based methods. In addition, existing literature contains abundant information on autonomous driving's sensing technology and control logic but relatively little guidance on how to fuse the information acquired from collaborative sensing and build a decision processor on top of the fused information. In this paper, a novel Deep Reinforcement Learning (DRL) based approach combining a Graphic Convolution Neural Network (GCN) and a Deep Q Network (DQN), namely the Graphic Convolution Q network (GCQ), is proposed as the information fusion module and decision processor. The proposed model can aggregate the information acquired from collaborative sensing and output safe and cooperative lane-changing decisions for multiple CAVs so that individual intentions can be satisfied even in highly dynamic and partially observed mixed traffic. The proposed algorithm can be deployed on centralized control infrastructures such as road-side units (RSUs) or cloud platforms to improve CAV operation.
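The idea of fusing per-vehicle information over a graph and then scoring lane-change actions can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the single graph-convolution layer with symmetric normalization, the random weights, the three-action set (change left, keep lane, change right), and the names `normalize_adjacency`, `gcq_forward`, and `select_actions` are all assumptions made for illustration.

```python
import numpy as np

def normalize_adjacency(adj):
    """Symmetric normalization D^-1/2 (A + I) D^-1/2, a common GCN choice (assumed here)."""
    a_hat = adj + np.eye(adj.shape[0])      # add self-loops so each node keeps its own features
    deg = a_hat.sum(axis=1)                 # degree of every node, always >= 1
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def gcq_forward(features, adj, w_gcn, w_q):
    """One graph-convolution layer (information fusion) followed by a linear Q-value head."""
    a_norm = normalize_adjacency(adj)
    hidden = np.maximum(a_norm @ features @ w_gcn, 0.0)  # ReLU(A_norm X W)
    return hidden @ w_q                                  # shape: (n_vehicles, n_actions)

def select_actions(q_values, is_cav):
    """Greedy action per CAV; human-driven vehicles are not controlled (marked -1)."""
    return np.where(is_cav, q_values.argmax(axis=1), -1)

# Hypothetical toy scene: 4 vehicles in a chain of communication/sensing links.
rng = np.random.default_rng(0)
n_vehicles, n_features, n_hidden, n_actions = 4, 3, 8, 3
features = rng.normal(size=(n_vehicles, n_features))     # e.g. speed, position, lane (assumed)
adj = np.array([[0, 1, 0, 0],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)
is_cav = np.array([True, False, True, True])             # vehicle 1 is human-driven
w_gcn = rng.normal(size=(n_features, n_hidden))          # untrained, random weights
w_q = rng.normal(size=(n_hidden, n_actions))

q_values = gcq_forward(features, adj, w_gcn, w_q)
actions = select_actions(q_values, is_cav)               # 0=left, 1=keep, 2=right (assumed)
```

Because the layer weights are shared across nodes, the same function accepts any number of vehicles, which is one way a graph-based model can cope with a changing number of agents. In an actual DQN setup the weights would be trained from temporal-difference targets rather than drawn at random.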

Leveraging the Capabilities of Connected and Autonomous Vehicles and Multi-Agent Reinforcement Learning to Mitigate Highway Bottleneck Congestion

Oct 12, 2020

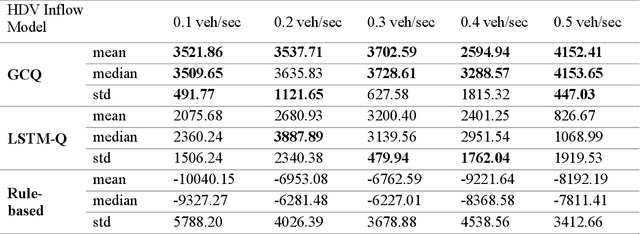

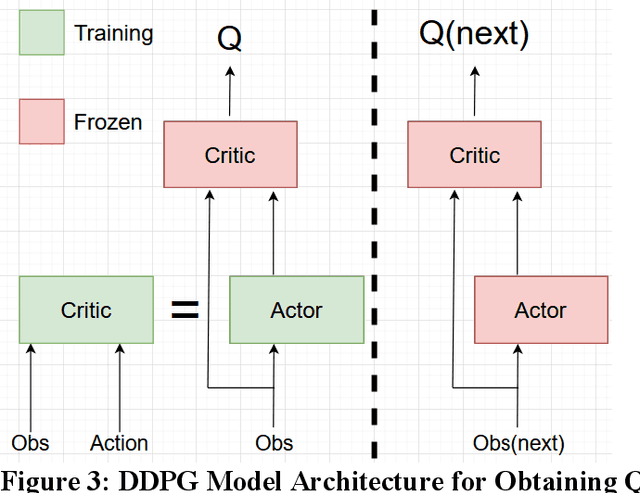

Abstract: Active Traffic Management strategies are often adopted in real time to address sudden flow breakdowns. When queuing is imminent, Speed Harmonization (SH), which adjusts speeds in upstream traffic to mitigate traffic shockwaves downstream, can be applied. However, because SH depends on driver awareness and compliance, it may not always be effective in mitigating congestion. The use of multiagent reinforcement learning for collaborative learning is a promising solution to this challenge. By incorporating this technique in the control algorithms of connected and autonomous vehicles (CAVs), it may be possible to train the CAVs to make joint decisions that can mitigate highway bottleneck congestion without human driver compliance with altered speed limits. In this regard, we present an RL-based multi-agent CAV control model to operate in mixed traffic (both CAVs and human-driven vehicles (HDVs)). The results suggest that even at a CAV share of corridor traffic as low as 10%, CAVs can significantly mitigate bottlenecks in highway traffic. Another objective was to assess the efficacy of the RL-based controller vis-à-vis that of the rule-based controller. In addressing this objective, we duly recognize that one of the main challenges of RL-based CAV controllers is the variety and complexity of inputs that exist in the real world, such as the information provided to the CAV by other connected entities and sensed information. These translate into dynamic-length inputs, which are difficult to process and learn from. For this reason, we propose the use of Graphical Convolution Networks (GCN), a specific RL technique, to preserve information network topology and corresponding dynamic-length inputs. We then use this, combined with Deep Deterministic Policy Gradient (DDPG), to carry out multi-agent training for congestion mitigation using the CAV controllers.
