Paolo Franceschi

From Vision to Assistance: Gaze and Vision-Enabled Adaptive Control for a Back-Support Exoskeleton

Feb 04, 2026

Abstract: Back-support exoskeletons have been proposed to mitigate spinal loading in industrial handling, yet their effectiveness critically depends on timely and context-aware assistance. Most existing approaches rely either on load-estimation techniques (e.g., EMG, IMU) or on vision systems that do not directly inform control. In this work, we present a vision-gated control framework for an active lumbar occupational exoskeleton that leverages egocentric vision with wearable gaze tracking. The proposed system integrates real-time grasp detection from a first-person YOLO-based perception system, a finite-state machine (FSM) for task progression, and a variable admittance controller to adapt torque delivery to both posture and object state. A user study with 15 participants performing stooping load-lifting trials under three conditions (no exoskeleton, exoskeleton without vision, exoskeleton with vision) shows that vision-gated assistance significantly reduces perceived physical demand and improves fluency, trust, and comfort. Quantitative analysis reveals earlier and stronger assistance when vision is enabled, while questionnaire results confirm user preference for the vision-gated mode. These findings highlight the potential of egocentric vision to enhance the responsiveness, ergonomics, safety, and acceptance of back-support exoskeletons.
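As a rough illustration of how a grasp flag, an FSM, and a variable admittance law could fit together, here is a minimal sketch. It is not the paper's implementation: the phase names, thresholds, damping values, and the functions `next_phase` and `simulate` are all hypothetical, and the admittance is a generic first-order model (inertia times acceleration plus damping times velocity equals interaction torque).

```python
from dataclasses import dataclass
from enum import Enum, auto


class Phase(Enum):
    IDLE = auto()      # upright, no assistance needed
    REACHING = auto()  # bending toward an object, nothing grasped yet
    LIFTING = auto()   # vision reports a grasp, load is in hand


# Hypothetical trunk-angle thresholds (rad) and per-phase damping; not from the paper.
BEND_ON, BEND_OFF = 0.3, 0.1
DAMPING = {Phase.IDLE: 8.0, Phase.REACHING: 4.0, Phase.LIFTING: 1.5}


def next_phase(phase: Phase, trunk_angle: float, grasp_detected: bool) -> Phase:
    """Advance the task FSM from posture and the vision-based grasp flag."""
    if phase is Phase.IDLE and trunk_angle > BEND_ON:
        return Phase.REACHING
    if phase is Phase.REACHING and grasp_detected:
        return Phase.LIFTING
    if phase is not Phase.IDLE and trunk_angle < BEND_OFF and not grasp_detected:
        return Phase.IDLE
    return phase


@dataclass
class Admittance:
    """First-order admittance: inertia * dv/dt + damping * v = torque."""
    inertia: float = 0.5
    velocity: float = 0.0

    def step(self, torque: float, damping: float, dt: float) -> float:
        accel = (torque - damping * self.velocity) / self.inertia
        self.velocity += accel * dt  # explicit Euler integration
        return self.velocity


def simulate(phase: Phase, torque: float, steps: int = 2000, dt: float = 0.01) -> float:
    """Reference velocity reached under a constant torque in a fixed phase."""
    model = Admittance()
    for _ in range(steps):
        model.step(torque, DAMPING[phase], dt)
    return model.velocity
```

In this sketch, lower damping in the `LIFTING` phase makes the same interaction torque produce a larger reference velocity, which is one simple way to express stronger assistance once a grasp is detected.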

ros2 fanuc interface: Design and Evaluation of a Fanuc CRX Hardware Interface in ROS2

Jun 17, 2025

Abstract: This paper introduces the ROS2 control and Hardware Interface (HW) integration for the Fanuc CRX robot family. It explains basic implementation details and communication protocols, as well as the integration with the MoveIt2 motion planning library. We conducted a series of experiments to evaluate performance in scenarios relevant to robotics. We tested the developed ros2_fanuc_interface in four cases: step response, trajectory tracking, collision avoidance integrated with MoveIt2, and dynamic velocity scaling. Results show that, despite a non-negligible delay between command and feedback, the robot can track the defined path with negligible errors (if it complies with joint velocity limits), ensuring collision avoidance. Full code is open source and available at https://github.com/paolofrance/ros2_fanuc_interface.

Human-robot collaborative transport personalization via Dynamic Movement Primitives and velocity scaling

Jun 11, 2025

Abstract: Nowadays, industries are showing a growing interest in human-robot collaboration, particularly for shared tasks. This requires intelligent strategies to plan a robot's motions, considering both task constraints and human-specific factors such as height and movement preferences. This work introduces a novel approach to generate personalized trajectories using Dynamic Movement Primitives (DMPs), enhanced with real-time velocity scaling based on human feedback. The method was rigorously tested in industrial-grade experiments, focusing on the collaborative transport of an engine cowl lip section. Comparative analysis between DMP-generated trajectories and a state-of-the-art motion planner (BiTRRT) highlights their adaptability combined with velocity scaling. Subjective user feedback further demonstrates a clear preference for DMP-based interactions. Objective evaluations, including physiological measurements from brain and skin activity, reinforce these findings, showcasing the advantages of DMPs in enhancing human-robot interaction and improving user experience.
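To make the idea of a DMP with velocity scaling concrete, here is a minimal one-dimensional sketch. It uses the standard discrete DMP formulation (a critically damped spring toward the goal plus a phase-driven forcing term) and assumes that velocity scaling is applied by multiplying every state derivative by a factor in [0, 1]. The function `rollout`, its parameters, and the gain values are hypothetical and not taken from the paper.

```python
import math


def rollout(y0, goal, weights, scale_fn, tau=1.0, dt=0.001, t_end=5.0,
            alpha_z=25.0, alpha_x=4.0):
    """Integrate a 1-D discrete DMP; scale_fn(t) in [0, 1] slows or pauses it."""
    beta_z = alpha_z / 4.0  # critical damping of the goal attractor
    n = len(weights)
    # Gaussian basis functions spread along the decaying phase variable x.
    centers = [math.exp(-alpha_x * i / max(n - 1, 1)) for i in range(n)]
    widths = [n ** 1.5 / c for c in centers]  # common heuristic, an assumption here

    x, y, z = 1.0, y0, 0.0
    path = [y]
    for k in range(round(t_end / dt)):
        s = scale_fn(k * dt)  # velocity-scaling factor, e.g. from human feedback
        psi = [math.exp(-h * (x - c) ** 2) for h, c in zip(widths, centers)]
        total = sum(psi)
        forcing = 0.0
        if total > 1e-12:
            forcing = sum(p * w for p, w in zip(psi, weights)) / total
            forcing *= x * (goal - y0)

        dz = (alpha_z * (beta_z * (goal - y) - z) + forcing) / tau
        dy = z / tau
        dx = -alpha_x * x / tau

        # Scaling all derivatives together changes the speed, not the path shape.
        z += s * dz * dt
        y += s * dy * dt
        x += s * dx * dt
        path.append(y)
    return path


full = rollout(0.0, 1.0, [0.0] * 5, lambda t: 1.0)
slow = rollout(0.0, 1.0, [0.0] * 5, lambda t: 0.5)
paused = rollout(0.0, 1.0, [0.0] * 5, lambda t: 0.0)
```

Because the scaling factor multiplies the phase variable's derivative as well, the forcing term stays synchronized with the motion: a scale of 0.5 traces the same path at half speed, and a scale of 0 freezes the trajectory in place.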

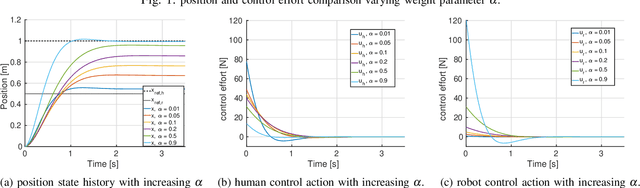

Modeling and analysis of pHRI with Differential Game Theory

Jul 20, 2023

Abstract: Applications involving humans and robots working together are spreading nowadays. Alongside this, modeling and control techniques that allow physical Human-Robot Interaction (pHRI) are widely investigated. To better understand its potential application in pHRI, this work investigates the Cooperative Differential Game Theory modeling of pHRI in a cooperative reaching task, specifically for reference tracking. The proposed controller based on Collaborative Game Theory is analyzed in depth and compared in simulations with two other techniques, a Linear Quadratic Regulator (LQR) and a Non-Cooperative Game-Theoretic Controller. The set of simulations shows how different tunings of the control parameters affect the system response and the control efforts of both players for the three controllers, suggesting the use of Cooperative GT when the robot should assist the human, while Non-Cooperative GT represents a better choice when the robot should lead the action. Finally, preliminary tests with a trained human are performed to extract useful information on the real applicability and limitations of the proposed method.

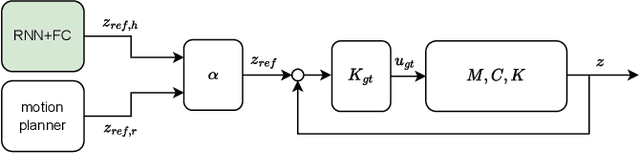

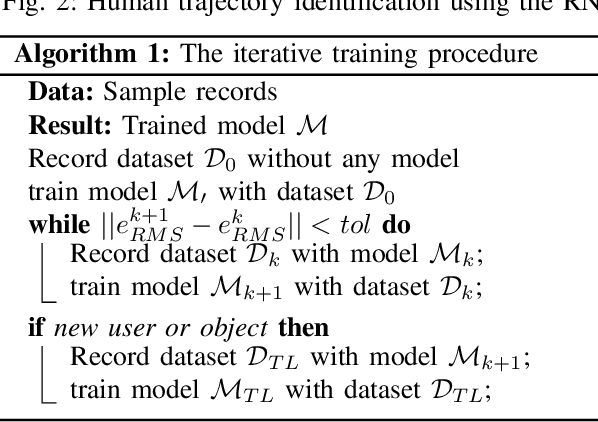

Predicting human motion intention for pHRI assistive control

Jul 20, 2023

Abstract: This work addresses human intention identification during physical Human-Robot Interaction (pHRI) tasks to include this information in an assistive controller. To this purpose, human intention is defined as the desired trajectory that the human wants to follow over a finite rolling prediction horizon so that the robot can assist in pursuing it. This work investigates a Recurrent Neural Network (RNN), specifically a Long Short-Term Memory (LSTM) network cascaded with a Fully Connected (FC) layer. In particular, we propose an iterative training procedure to adapt the model. Such an iterative procedure is powerful in reducing the prediction error. Still, it has the drawback that it is time-consuming and does not generalize to different users or different co-manipulated objects. To overcome this issue, Transfer Learning (TL) adapts the pre-trained model to new trajectories, users, and co-manipulated objects by freezing the LSTM layer and fine-tuning the last FC layer, which makes the procedure faster. Experiments show that the iterative procedure adapts the model and reduces prediction error. Experiments also show that TL adapts to different users and to the co-manipulation of a large object. Finally, to check the utility of adopting the proposed method, we compare the proposed controller, enhanced by the intention prediction, with two other standard pHRI controllers.
