Panagiotis Linardos

MediaEval 2019: Concealed FGSM Perturbations for Privacy Preservation

Oct 25, 2019

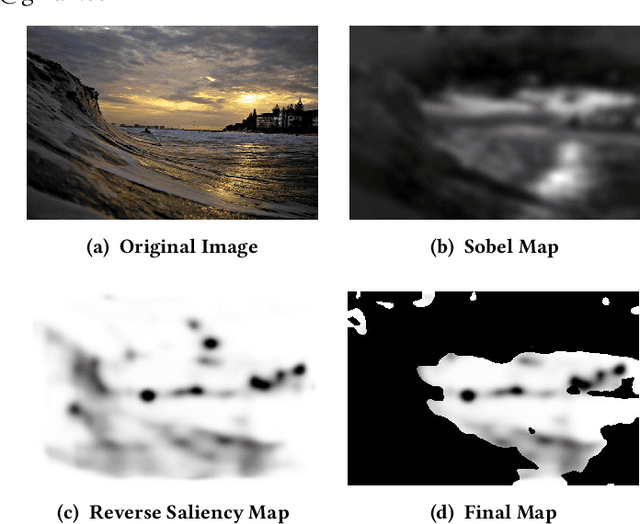

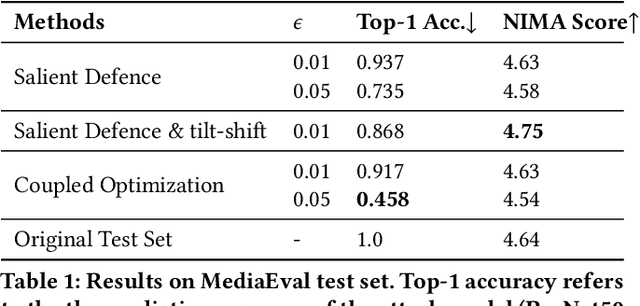

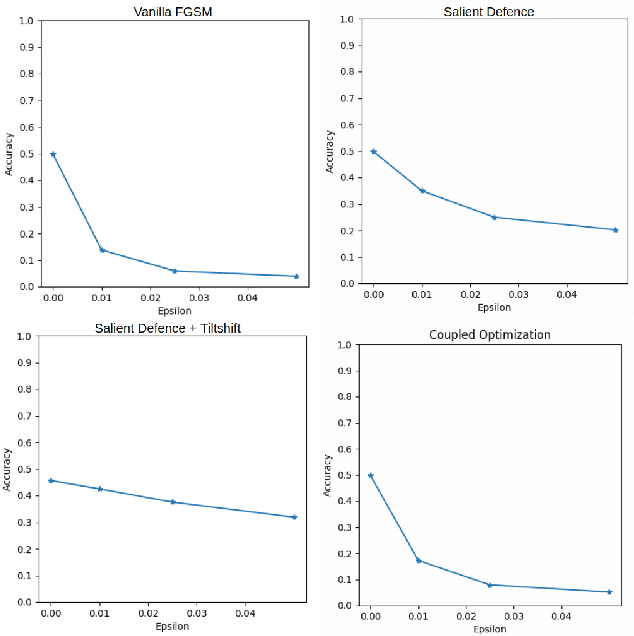

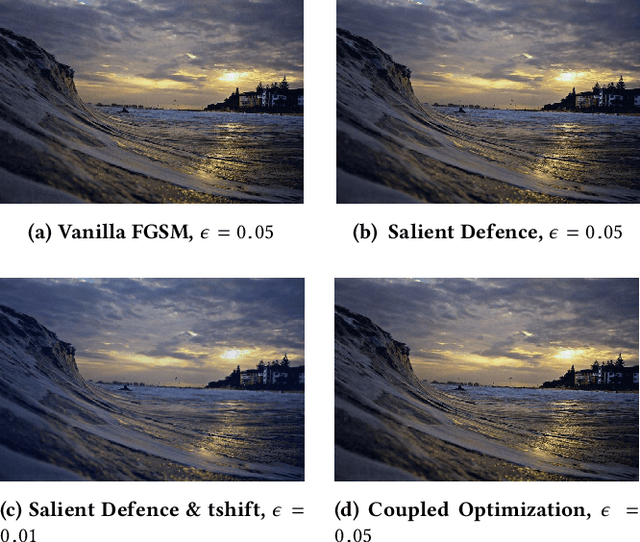

Abstract: This work tackles the Pixel Privacy task put forth by MediaEval 2019. Our goal is to manipulate images in a way that conceals them from automatic scene classifiers while preserving the original image quality. We use the fast gradient sign method, which normally has a corrupting influence on image appeal, and devise two methods to minimize the damage. The first approach uses a map of pixel locations that are either salient or flat, and directs perturbations away from them. The second approach subtracts the gradient of an aesthetics evaluation model from the gradient of the attack model to guide the perturbations towards a direction that preserves appeal. We make our code available at: https://git.io/JesXr.
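As a rough illustration of the two ideas in this abstract, the following NumPy sketch applies a single fast gradient sign step with an optional protection mask and an optional aesthetics gradient. It is not the authors' implementation: the function name `fgsm_step`, its parameters, the `[0, 1]` pixel range, and the convention that `mask` is 1 where perturbation is allowed are all assumptions made for this example, and the gradients are taken as precomputed arrays rather than obtained from real models.

```python
import numpy as np

def fgsm_step(image, attack_grad, epsilon, mask=None, aesthetic_grad=None):
    """Hypothetical single FGSM step on an image with values in [0, 1].

    attack_grad:    gradient of the classifier loss w.r.t. the image.
    mask:           optional array, 1 where perturbation is allowed and
                    0 at protected (e.g. salient or flat) pixels.
    aesthetic_grad: optional gradient of an aesthetics model w.r.t. the image,
                    subtracted from the attack gradient as in the abstract.
    """
    image = np.asarray(image, dtype=float)
    direction = np.asarray(attack_grad, dtype=float)
    if aesthetic_grad is not None:
        # Second approach: combine the two gradients before taking the sign.
        direction = direction - np.asarray(aesthetic_grad, dtype=float)
    step = epsilon * np.sign(direction)
    if mask is not None:
        # First approach: zero out the perturbation at protected pixels.
        step = step * np.asarray(mask, dtype=float)
    # Keep the result a valid image.
    return np.clip(image + step, 0.0, 1.0)
```

In practice the gradients would come from backpropagation through the scene classifier and the aesthetics model; here they are plain arrays so that the arithmetic of the update stays visible.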

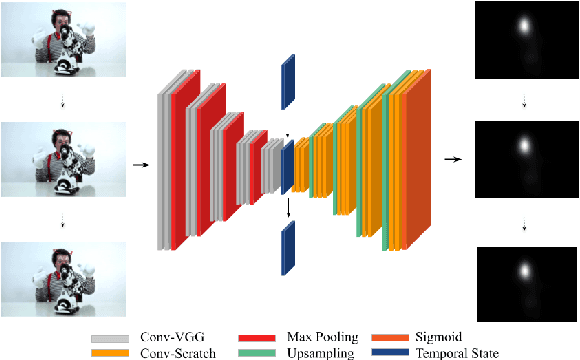

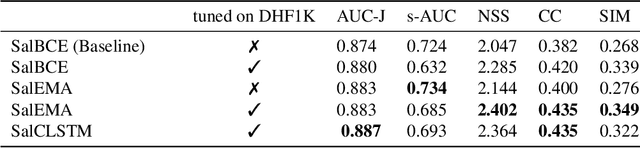

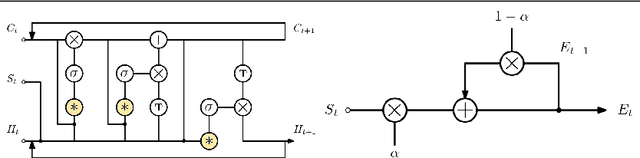

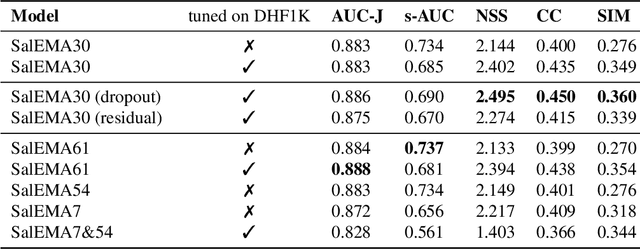

Simple vs complex temporal recurrences for video saliency prediction

Jul 16, 2019

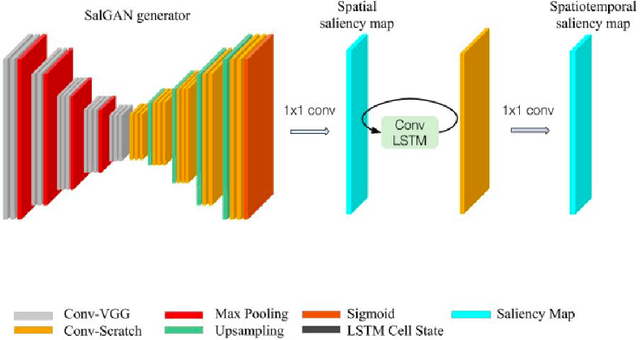

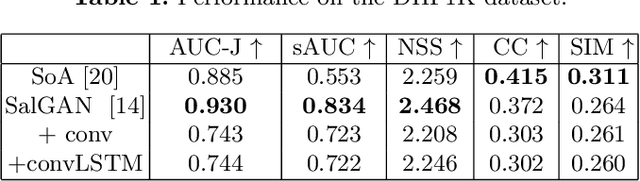

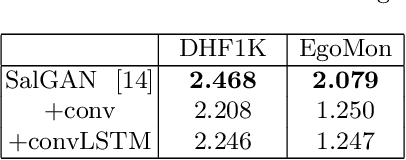

Abstract: This paper investigates modifying an existing neural network architecture for static saliency prediction using two types of recurrences that integrate information from the temporal domain. The first modification is the addition of a ConvLSTM within the architecture, while the second is a conceptually simple exponential moving average of an internal convolutional state. We use weights pre-trained on the SALICON dataset and fine-tune our model on DHF1K. Our results show that both modifications achieve state-of-the-art results and produce similar saliency maps. Source code is available at https://git.io/fjPiB.
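The exponential moving average recurrence mentioned in this abstract can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the generator name `ema_states`, the smoothing weight `alpha`, its default value, and the choice to weight the current frame by `alpha` are hypothetical and are not taken from the paper.

```python
import numpy as np

def ema_states(frame_features, alpha=0.1):
    """Yield a temporally smoothed feature map for each frame.

    frame_features: iterable of per-frame feature maps (same shape each),
                    standing in for an internal convolutional state.
    alpha:          hypothetical weight given to the current frame.
    """
    state = None
    for feat in frame_features:
        feat = np.asarray(feat, dtype=float)
        if state is None:
            # First frame: there is no history to blend with yet.
            state = feat
        else:
            # Blend the current features with the running average.
            state = alpha * feat + (1.0 - alpha) * state
        yield state
```

Unlike a ConvLSTM, this recurrence has no gates; in this sketch `alpha` is a fixed constant, which is what makes the approach conceptually simple.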

Temporal Saliency Adaptation in Egocentric Videos

Sep 04, 2018

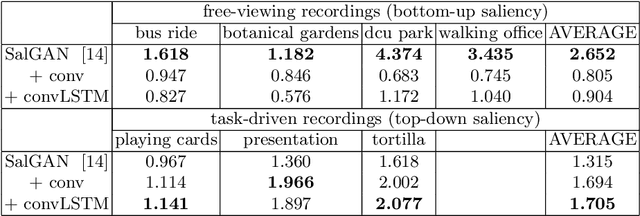

Abstract: This work adapts a deep neural model for image saliency prediction to the temporal domain of egocentric video. We compute the saliency map for each video frame, first with an off-the-shelf model trained on static images, and second by adding convolutional or conv-LSTM layers trained on a dataset for video saliency prediction. We study each configuration on EgoMon, a new dataset made of seven egocentric videos recorded by three subjects in both free-viewing and task-driven setups. Our results indicate that the temporal adaptation is beneficial when the viewer is not moving and observing the scene from a narrow field of view. Encouraged by this observation, we compute and publish the saliency maps for the EPIC Kitchens dataset, in which viewers are cooking. Source code and models are available at https://imatge-upc.github.io/saliency-2018-videosalgan/
