Ozkan Kilic

Neurosymbolic Systems of Perception & Cognition: The Role of Attention

Dec 02, 2021

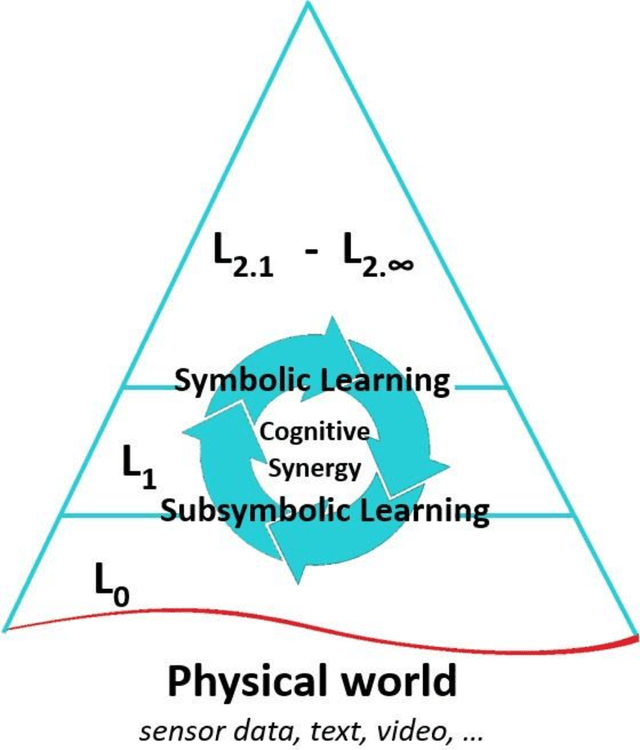

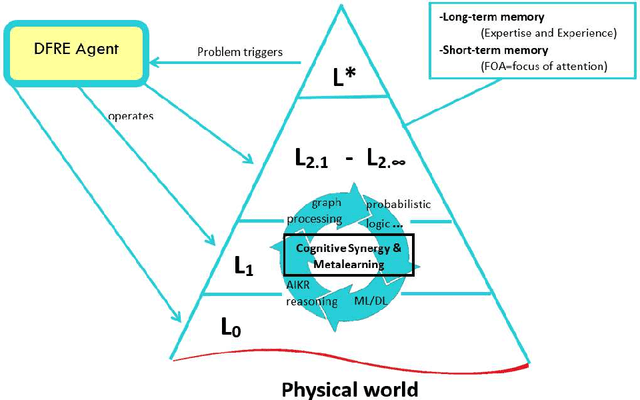

Abstract: A cognitive architecture aimed at cumulative learning must provide the necessary information and control structures to allow agents to learn incrementally and autonomously from their experience. This involves managing an agent's goals as well as continuously relating sensory information to these in its perception-cognition information stack. The more varied the environment of a learning agent is, the more general and flexible must be these mechanisms to handle a wider variety of relevant patterns, tasks, and goal structures. While many researchers agree that information at different levels of abstraction likely differs in its makeup and structure and processing mechanisms, agreement on the particulars of such differences is not generally shared in the research community. A binary processing architecture (often referred to as System-1 and System-2) has been proposed as a model of cognitive processing for low- and high-level information, respectively. We posit that cognition is not binary in this way and that knowledge at any level of abstraction involves what we refer to as neurosymbolic information, meaning that data at both high and low levels must contain both symbolic and subsymbolic information. Further, we argue that the main differentiating factor between the processing of high and low levels of data abstraction can be largely attributed to the nature of the involved attention mechanisms. We describe the key arguments behind this view and review relevant evidence from the literature.

A Metamodel and Framework for Artificial General Intelligence From Theory to Practice

Feb 11, 2021

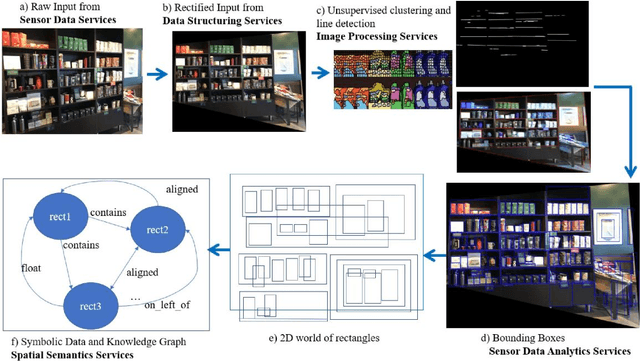

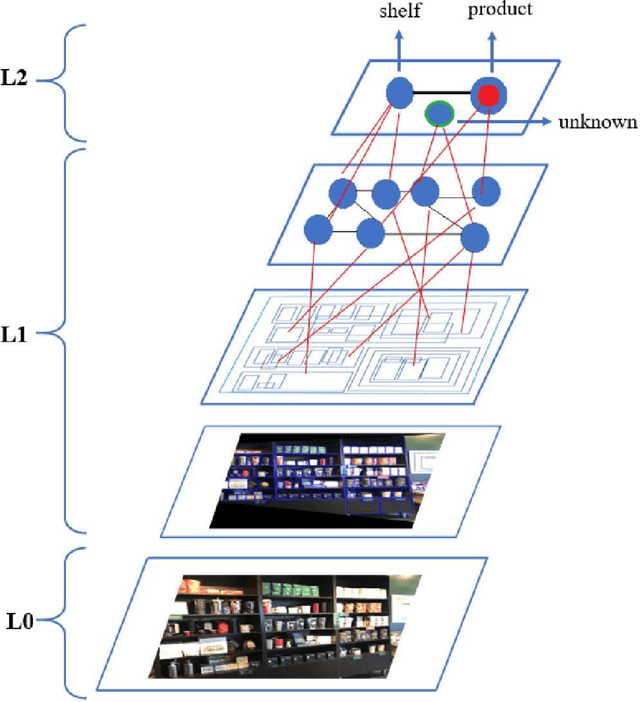

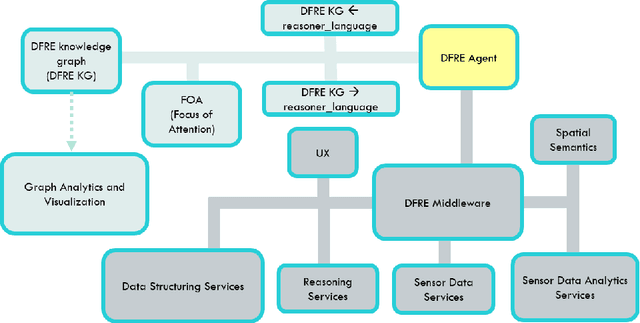

Abstract: This paper introduces a new metamodel-based knowledge representation that significantly improves autonomous learning and adaptation. While interest in hybrid machine learning / symbolic AI systems leveraging, for example, reasoning and knowledge graphs, is gaining popularity, we find there remains a need for both a clear definition of knowledge and a metamodel to guide the creation and manipulation of knowledge. Some of the benefits of the metamodel we introduce in this paper include a solution to the symbol grounding problem, cumulative learning, and federated learning. We have applied the metamodel to problems ranging from time series analysis, computer vision, and natural language understanding and have found that the metamodel enables a wide variety of learning mechanisms ranging from machine learning, to graph network analysis and learning by reasoning engines to interoperate in a highly synergistic way. Our metamodel-based projects have consistently exhibited unprecedented accuracy, performance, and ability to generalize. This paper is inspired by the state-of-the-art approaches to AGI, recent AGI-aspiring work, the granular computing community, as well as Alfred Korzybski's general semantics. One surprising consequence of the metamodel is that it not only enables a new level of autonomous learning and optimal functioning for machine intelligences, but may also shed light on a path to better understanding how to improve human cognition.

A Metamodel and Framework for AGI

Sep 06, 2020

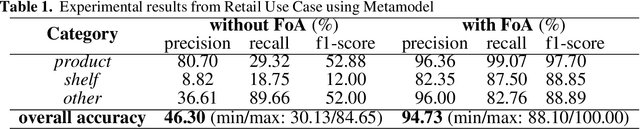

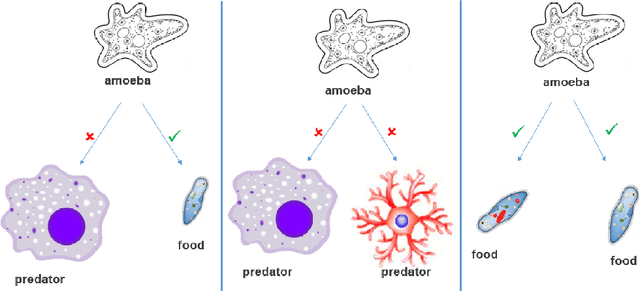

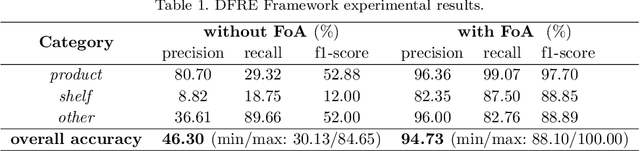

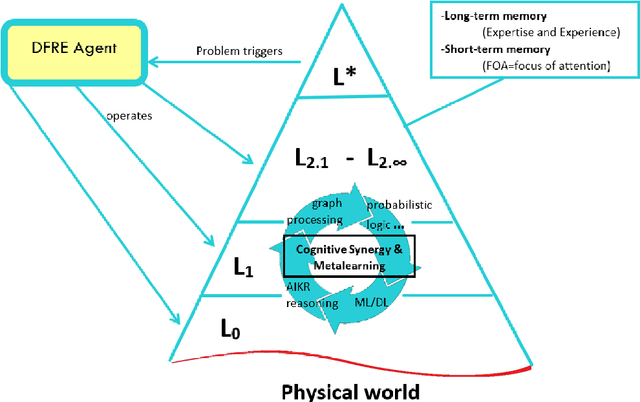

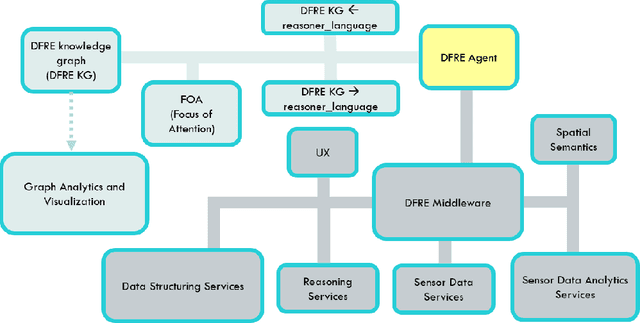

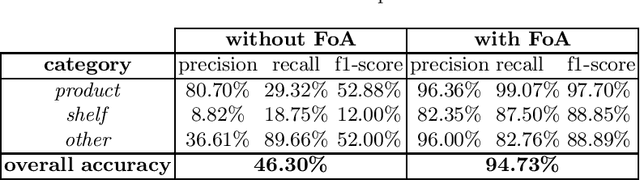

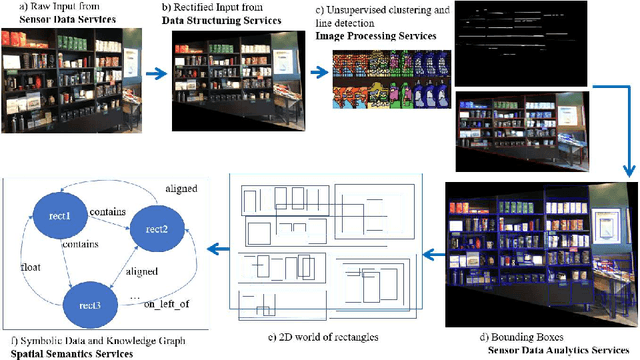

Abstract: Can artificial intelligence systems exhibit superhuman performance, but in critical ways, lack the intelligence of even a single-celled organism? The answer is clearly 'yes' for narrow AI systems. Animals, plants, and even single-celled organisms learn to reliably avoid danger and move towards food. This is accomplished via a physical knowledge preserving metamodel that autonomously generates useful models of the world. We posit that preserving the structure of knowledge is critical for higher intelligences that manage increasingly higher levels of abstraction, be they human or artificial. This is the key lesson learned from applying AGI subsystems to complex real-world problems that require continuous learning and adaptation. In this paper, we introduce the Deep Fusion Reasoning Engine (DFRE), which implements a knowledge-preserving metamodel and framework for constructing applied AGI systems. The DFRE metamodel exhibits some important fundamental knowledge preserving properties such as clear distinctions between symmetric and antisymmetric relations, and the ability to create a hierarchical knowledge representation that clearly delineates between levels of abstraction. The DFRE metamodel, which incorporates these capabilities, demonstrates how this approach benefits AGI in specific ways such as managing combinatorial explosion and enabling cumulative, distributed and federated learning. Our experiments show that the proposed framework achieves 94% accuracy on average on unsupervised object detection and recognition. This work is inspired by the state-of-the-art approaches to AGI, recent AGI-aspiring work, the granular computing community, as well as Alfred Korzybski's general semantics.
