A Metamodel and Framework for AGI

Sep 06, 2020

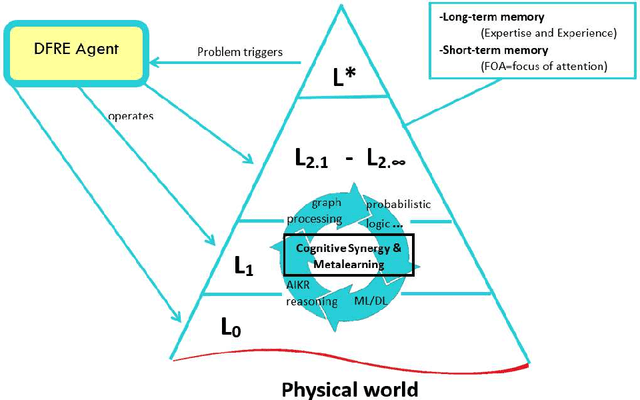

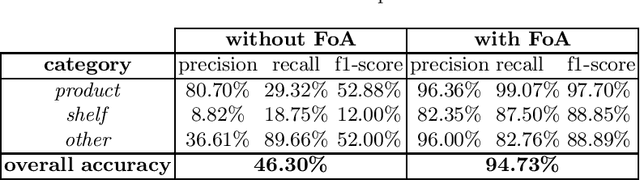

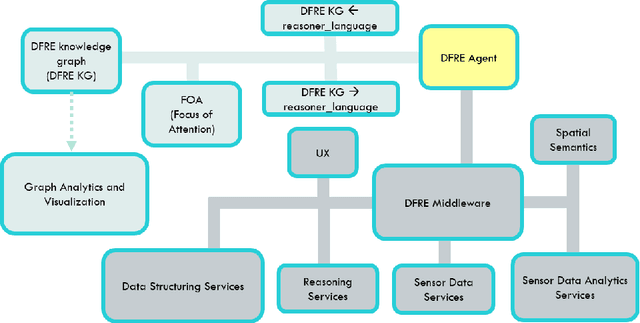

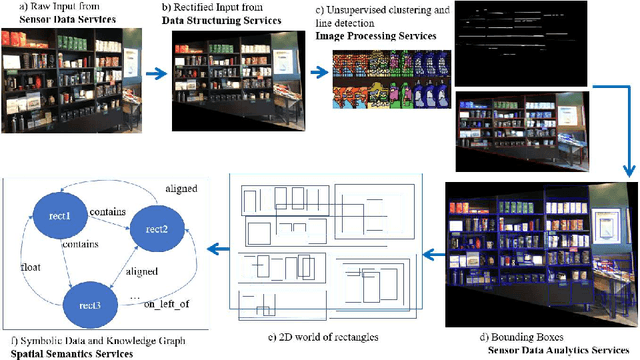

Can artificial intelligence systems exhibit superhuman performance yet, in critical ways, lack the intelligence of even a single-celled organism? For narrow AI systems, the answer is clearly 'yes'. Animals, plants, and even single-celled organisms learn to reliably avoid danger and move toward food. They do so through a physical, knowledge-preserving metamodel that autonomously generates useful models of the world. We posit that preserving the structure of knowledge is critical for higher intelligences, human or artificial, that manage increasingly higher levels of abstraction. This is the key lesson learned from applying AGI subsystems to complex real-world problems that require continuous learning and adaptation. In this paper, we introduce the Deep Fusion Reasoning Engine (DFRE), which implements a knowledge-preserving metamodel and framework for constructing applied AGI systems. The DFRE metamodel exhibits several fundamental knowledge-preserving properties, such as a clear distinction between symmetric and antisymmetric relations and the ability to create a hierarchical knowledge representation that clearly delineates levels of abstraction. The metamodel demonstrates how these capabilities benefit AGI in specific ways, such as managing combinatorial explosion and enabling cumulative, distributed, and federated learning. Our experiments show that the proposed framework achieves 94% accuracy on average on unsupervised object detection and recognition. This work is inspired by state-of-the-art approaches to AGI, recent AGI-aspiring work, the granular computing community, and Alfred Korzybski's general semantics.
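The abstract names two knowledge-preserving properties: keeping symmetric and antisymmetric relations distinct, and organizing concepts into a hierarchy with explicit levels of abstraction. The following is a minimal sketch of what those two properties can look like in a toy knowledge graph. It is not the DFRE implementation. The class `KnowledgeGraph`, the relation names `adjacent_to` and `is_a`, and the definition of an abstraction level as the longest `is_a` chain above a concept are all hypothetical choices made for illustration.

```python
from collections import defaultdict


class KnowledgeGraph:
    """Toy graph that keeps symmetric and antisymmetric relations distinct.

    Hypothetical illustration only; not the DFRE data model or API.
    """

    def __init__(self, symmetric, antisymmetric):
        self.symmetric = set(symmetric)
        self.antisymmetric = set(antisymmetric)
        # relation name -> set of stored edges
        self.edges = defaultdict(set)

    def add(self, relation, a, b):
        if relation in self.symmetric:
            # Symmetric: store one order-independent pair, so R(a, b) == R(b, a).
            self.edges[relation].add(frozenset((a, b)))
        elif relation in self.antisymmetric:
            # Antisymmetric: R(a, b) and R(b, a) together are only allowed if a == b.
            if a != b and (b, a) in self.edges[relation]:
                raise ValueError(
                    f"{relation}({b}, {a}) already holds; cannot add {relation}({a}, {b})"
                )
            self.edges[relation].add((a, b))
        else:
            raise KeyError(f"unknown relation: {relation}")

    def holds(self, relation, a, b):
        if relation in self.symmetric:
            return frozenset((a, b)) in self.edges[relation]
        return (a, b) in self.edges[relation]

    def abstraction_level(self, concept, relation="is_a"):
        """Longest chain of `relation` edges above `concept` (0 = most abstract).

        Assumes the hierarchy is acyclic; antisymmetry alone only rules out
        2-cycles, so longer cycles would recurse forever in this toy version.
        """
        parents = [b for (a, b) in self.edges[relation] if a == concept]
        if not parents:
            return 0
        return 1 + max(self.abstraction_level(p, relation) for p in parents)


kg = KnowledgeGraph(symmetric={"adjacent_to"}, antisymmetric={"is_a"})
kg.add("adjacent_to", "table", "chair")
kg.add("is_a", "mammal", "animal")
kg.add("is_a", "cat", "mammal")

print(kg.holds("adjacent_to", "chair", "table"))  # True: order does not matter
print(kg.holds("is_a", "animal", "mammal"))       # False: direction matters
print(kg.abstraction_level("cat"))                # 2 (cat -> mammal -> animal)

try:
    kg.add("is_a", "animal", "mammal")  # would contradict is_a(mammal, animal)
except ValueError as err:
    print("rejected:", err)
```

Storing symmetric edges as unordered pairs means the graph cannot hold two inconsistent copies of the same fact, while the antisymmetry check refuses edges that would blur which concept sits above which in the hierarchy.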
