Ophir Gozes

COVID-19 in CXR: from Detection and Severity Scoring to Patient Disease Monitoring

Aug 04, 2020

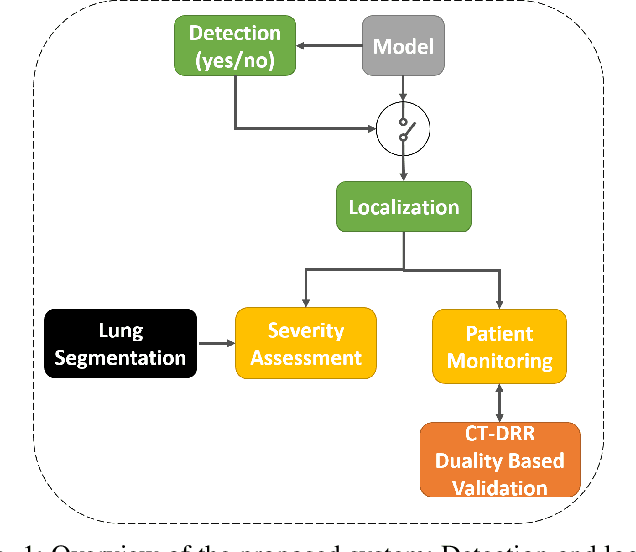

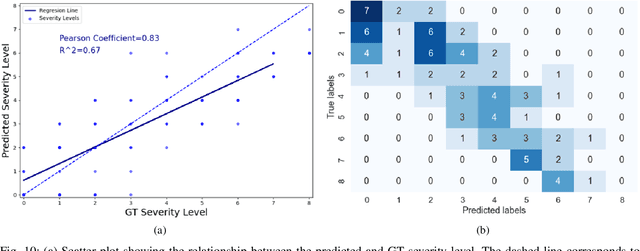

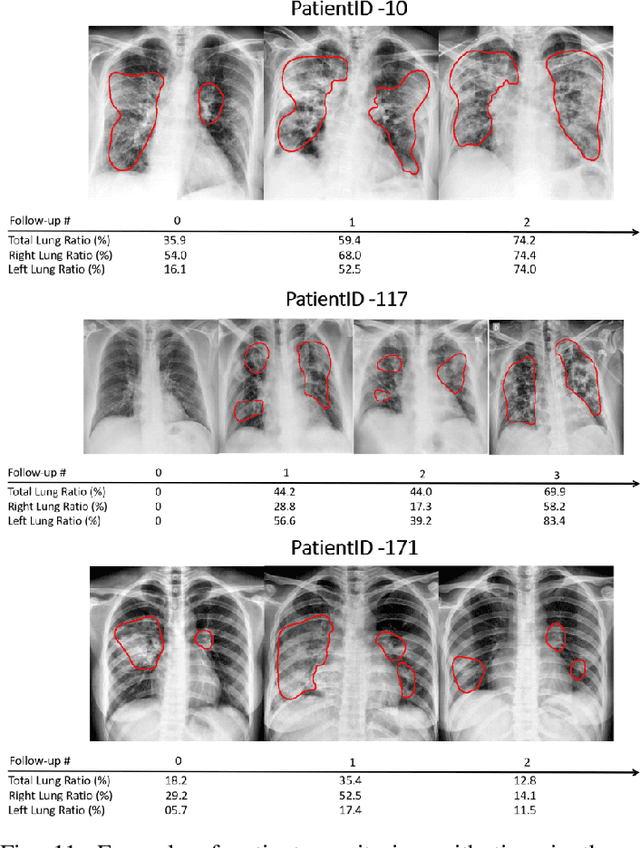

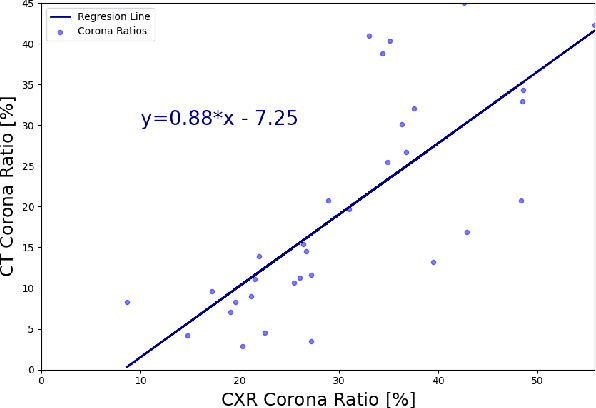

Abstract: In this work, we estimate the severity of pneumonia in COVID-19 patients and conduct a longitudinal study of disease progression. To achieve this goal, we developed a deep learning model for simultaneous detection and segmentation of pneumonia in chest X-ray (CXR) images and generalized it to COVID-19 pneumonia. The segmentations were used to calculate a "Pneumonia Ratio", which indicates the disease severity. Measuring disease severity makes it possible to build a disease extent profile over time for hospitalized patients. To validate the model's relevance to the patient monitoring task, we developed a validation strategy that involves synthesizing Digitally Reconstructed Radiographs (DRRs, synthetic X-rays) from serial CT scans; we then compared the disease progression profiles generated from the DRRs to those generated from the CT volumes.
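The abstract does not spell out how the "Pneumonia Ratio" is computed. As a minimal sketch, assuming it is the fraction of lung pixels that the model also marks as pneumonia, the ratio and a per-patient progression profile could look like this. The function names `pneumonia_ratio` and `progression_profile`, the nested-list mask format, and the (day, masks) study tuples are illustrative assumptions, not the authors' implementation.

```python
from typing import List, Sequence, Tuple

Mask = Sequence[Sequence[int]]  # 2D binary mask, 1 = pixel inside the region


def pneumonia_ratio(pneumonia_mask: Mask, lung_mask: Mask) -> float:
    """Fraction of lung pixels also marked as pneumonia (assumed definition)."""
    lung_pixels = 0
    affected_pixels = 0
    for p_row, l_row in zip(pneumonia_mask, lung_mask):
        for p, l in zip(p_row, l_row):
            if l:
                lung_pixels += 1
                if p:
                    affected_pixels += 1
    if lung_pixels == 0:
        raise ValueError("lung mask is empty")
    return affected_pixels / lung_pixels


def progression_profile(
    studies: Sequence[Tuple[int, Mask, Mask]]
) -> List[Tuple[int, float]]:
    """Map (day, pneumonia_mask, lung_mask) studies to (day, ratio), sorted by day."""
    return sorted((day, pneumonia_ratio(p, l)) for day, p, l in studies)
```

Plotting the resulting (day, ratio) pairs for one patient would give the kind of disease extent profile the abstract describes.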

Coronavirus Detection and Analysis on Chest CT with Deep Learning

Apr 06, 2020

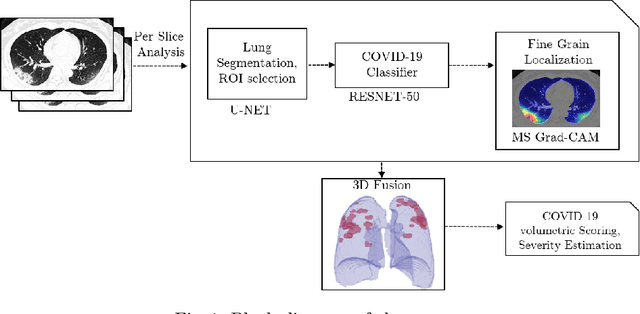

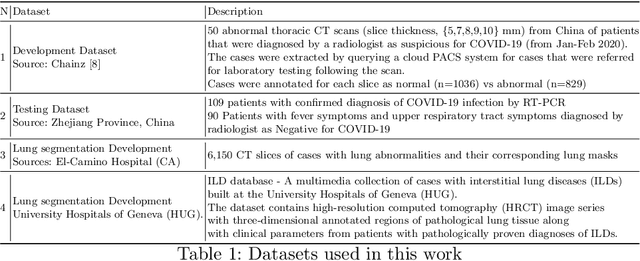

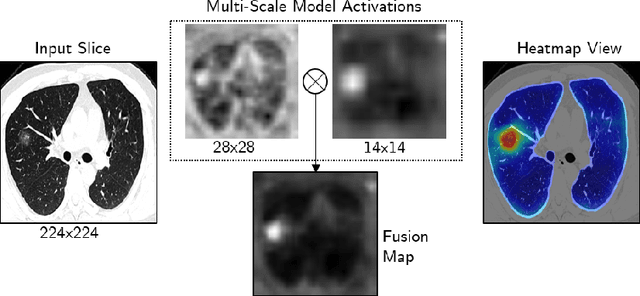

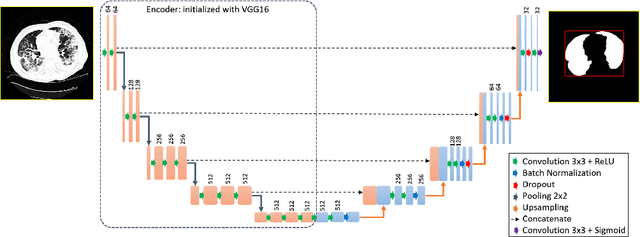

Abstract: The outbreak of the novel coronavirus, officially declared a global pandemic, has had a severe impact on our daily lives. As of this writing there are approximately 197,188 confirmed cases, of which 80,881 are in "Mainland China", with 7,949 deaths, a mortality rate of 3.4%. To support radiologists in this overwhelming challenge, we develop a deep learning based algorithm that can detect, localize and quantify the severity of COVID-19 manifestation from chest CT scans. The algorithm consists of a pipeline of image processing algorithms that includes lung segmentation, 2D slice classification and fine-grained localization. To further understand the manifestations of the disease, we perform unsupervised clustering of abnormal slices. We present our results on a dataset of 110 confirmed COVID-19 patients from Zhejiang province, China.

Rapid AI Development Cycle for the Coronavirus (COVID-19) Pandemic: Initial Results for Automated Detection & Patient Monitoring using Deep Learning CT Image Analysis

Mar 24, 2020

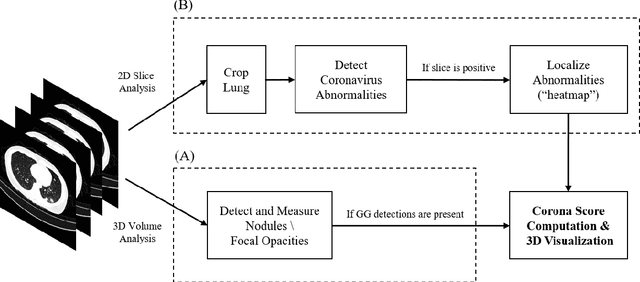

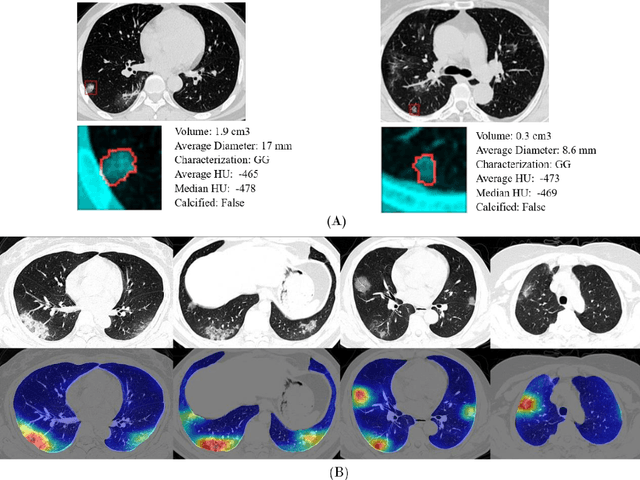

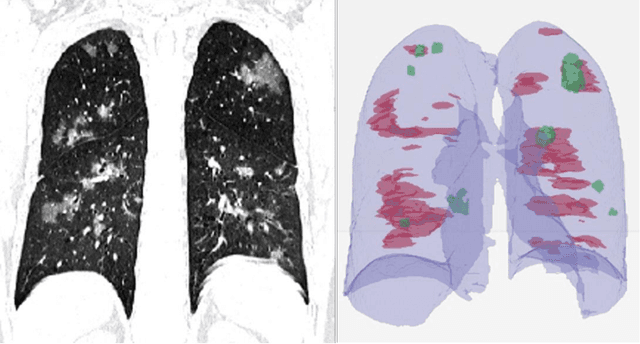

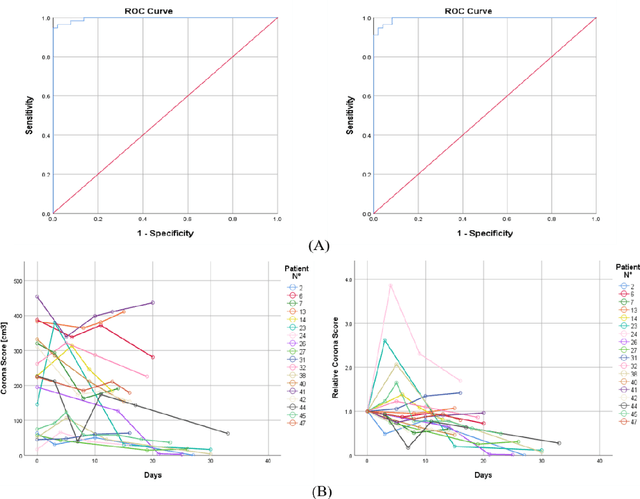

Abstract: Purpose: Develop AI-based automated CT image analysis tools for detection, quantification, and tracking of Coronavirus; demonstrate that they can differentiate coronavirus patients from non-patients. Materials and Methods: Multiple international datasets, including data from disease-infected areas in China, were included. We present a system that utilizes robust 2D and 3D deep learning models, modifying and adapting existing AI models and combining them with clinical understanding. We conducted multiple retrospective experiments to analyze the performance of the system in the detection of suspected COVID-19 thoracic CT features and to evaluate the evolution of the disease in each patient over time using a 3D volume review, generating a Corona score. The study includes a testing set of 157 international patients (China and U.S.). Results: Classification results for Coronavirus vs. Non-coronavirus cases per thoracic CT study were 0.996 AUC (95% CI: 0.989-1.00) on datasets of Chinese control and infected patients. Possible working point: 98.2% sensitivity, 92.2% specificity. For time analysis of Coronavirus patients, the system output enables quantitative measurements for smaller opacities (volume, diameter) and visualization of the larger opacities in a slice-based heat map or a 3D volume display. Our suggested Corona score measures the progression of disease over time. Conclusion: This initial study, which is currently being expanded to a larger population, demonstrated that rapidly developed AI-based image analysis can achieve high accuracy in detection of Coronavirus as well as quantification and tracking of disease burden.
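A "working point" such as the one reported above corresponds to one decision threshold on the classifier's per-study score. The sketch below shows how sensitivity and specificity follow from a chosen threshold; the function name, the score and label lists, and the `>=` decision rule are assumptions for illustration and are not taken from the paper.

```python
from typing import Sequence, Tuple


def sensitivity_specificity(
    scores: Sequence[float], labels: Sequence[int], threshold: float
) -> Tuple[float, float]:
    """Return (sensitivity, specificity) when score >= threshold means 'positive'.

    labels use 1 for a confirmed positive study and 0 for a control study.
    """
    tp = fn = tn = fp = 0
    for score, label in zip(scores, labels):
        predicted_positive = score >= threshold
        if label == 1:
            if predicted_positive:
                tp += 1
            else:
                fn += 1
        else:
            if predicted_positive:
                fp += 1
            else:
                tn += 1
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one positive and one negative study")
    return tp / (tp + fn), tn / (tn + fp)
```

Sweeping the threshold over all observed scores traces the ROC curve, and the area under that curve is the AUC quoted in the results.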

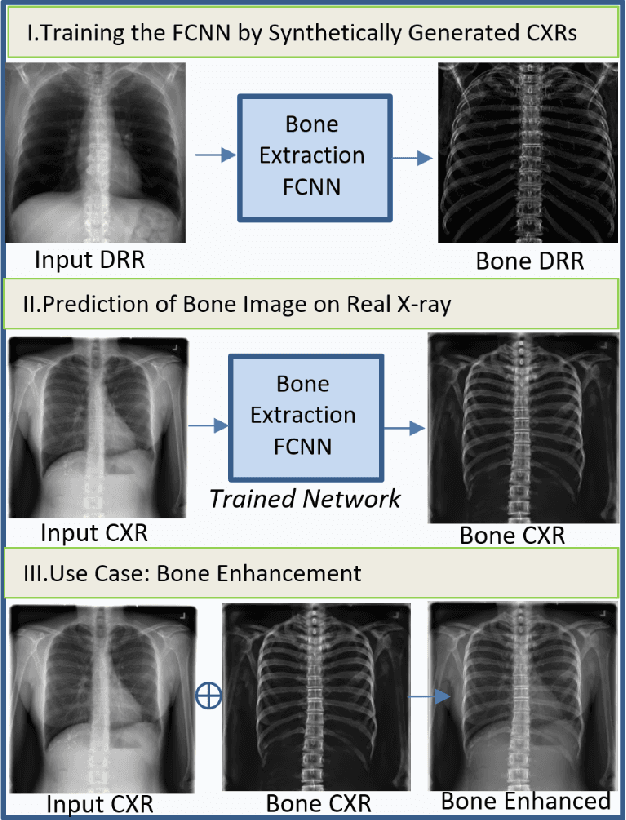

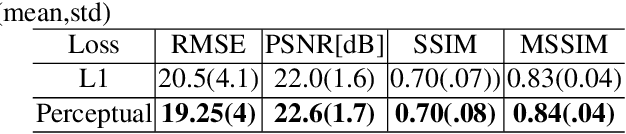

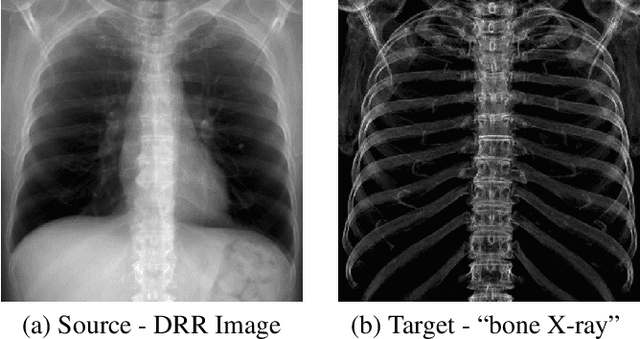

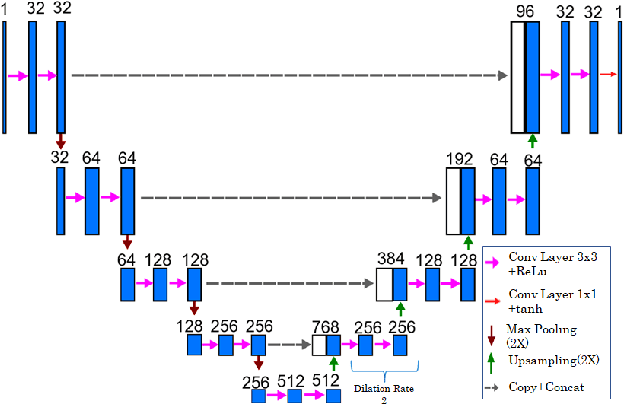

Bone Structures Extraction and Enhancement in Chest Radiographs via CNN Trained on Synthetic Data

Mar 20, 2020

Abstract: In this paper, we present a deep learning-based image processing technique for extraction of bone structures in chest radiographs using a U-Net FCNN. The U-Net was trained to accomplish the task in a fully supervised setting. To create the training image pairs, we employed simulated X-rays, or Digitally Reconstructed Radiographs (DRRs), derived from 664 CT scans belonging to the LIDC-IDRI dataset. Using HU-based segmentation of bone structures in the CT domain, a synthetic 2D "Bone X-ray" DRR is produced and used for training the network. For the reconstruction loss, we utilize two loss functions: L1 loss and perceptual loss. Once the bone structures are extracted, the original image can be enhanced by fusing the original input X-ray and the synthesized "Bone X-ray". We show that our enhancement technique is applicable to real X-ray data, and display our results on the NIH Chest X-Ray-14 dataset.
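To make the "Bone X-ray" idea concrete, here is a heavily simplified sketch: keep only voxels at or above a Hounsfield-unit threshold and sum them along one axis as a parallel projection. The 300 HU threshold, the `volume_hu[z][y][x]` layout, and the plain summation are all assumptions; the abstract does not give the threshold or the projection model, and a realistic DRR would convert HU to attenuation and cast rays through the volume.

```python
from typing import List, Sequence

Volume = Sequence[Sequence[Sequence[float]]]  # volume_hu[z][y][x] in Hounsfield units


def bone_drr(volume_hu: Volume, bone_threshold_hu: float = 300.0) -> List[List[float]]:
    """Project bone-only voxels along the y axis, returning image[z][x].

    Voxels below the (assumed) bone threshold contribute nothing.
    """
    image: List[List[float]] = []
    for axial_slice in volume_hu:           # one z position, rows indexed by y
        width = len(axial_slice[0])
        projected_row = [0.0] * width
        for line in axial_slice:            # walk along the projection axis (y)
            for x, hu in enumerate(line):
                if hu >= bone_threshold_hu:
                    projected_row[x] += hu
        image.append(projected_row)
    return image
```

Pairing each full DRR of a CT scan with its bone-only counterpart would give the input and target images for the supervised training described above.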

Deep Feature Learning from a Hospital-Scale Chest X-ray Dataset with Application to TB Detection on a Small-Scale Dataset

Jun 03, 2019

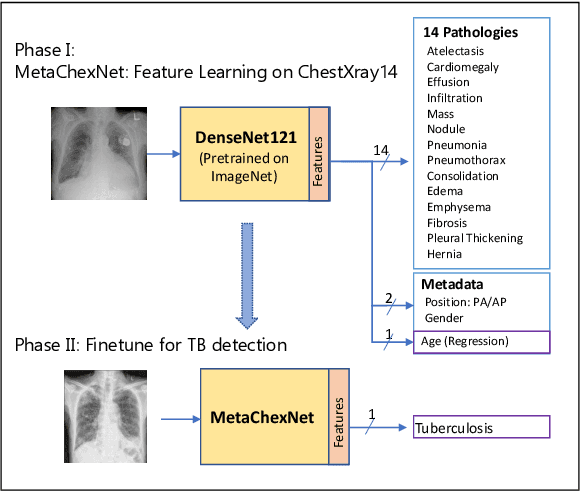

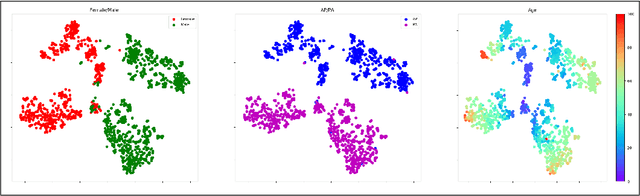

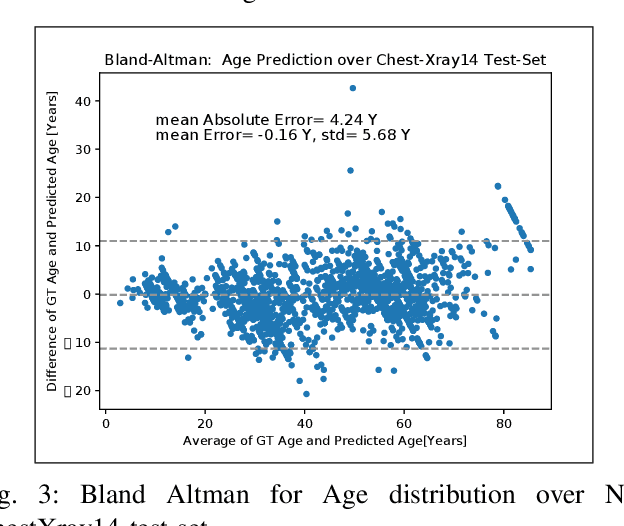

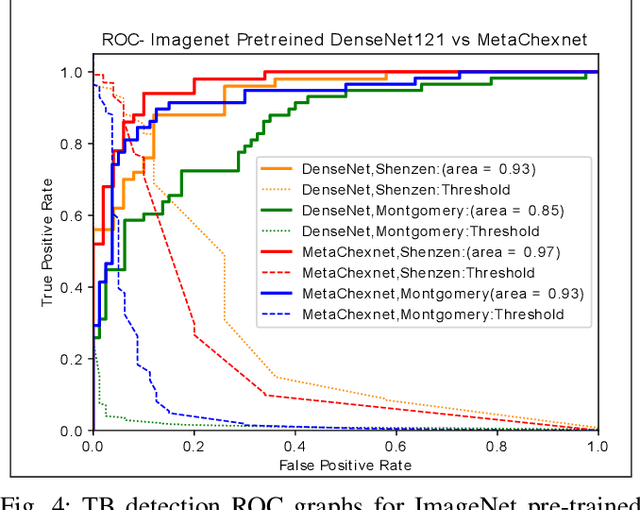

Abstract: The use of ImageNet pre-trained networks is becoming widespread in the medical imaging community. It enables training on small datasets, commonly available in medical imaging tasks. The recent emergence of a large Chest X-ray dataset opened the possibility for learning features that are specific to the X-ray analysis task. In this work, we demonstrate that the features learned allow for better classification results for the problem of Tuberculosis detection and enable generalization to an unseen dataset. To accomplish the task of feature learning, we train a DenseNet-121 CNN on 112K images from the ChestXray14 dataset, which includes labels of 14 common thoracic pathologies. In addition to the pathology labels, we incorporate metadata which is available in the dataset: Patient Positioning, Gender and Patient Age. We term this architecture MetaChexNet. As a by-product of the feature learning, we demonstrate state-of-the-art performance on the task of patient Age & Gender estimation using CNNs. Finally, we show the features learned using ChestXray14 allow for better transfer learning on small-scale datasets for Tuberculosis.

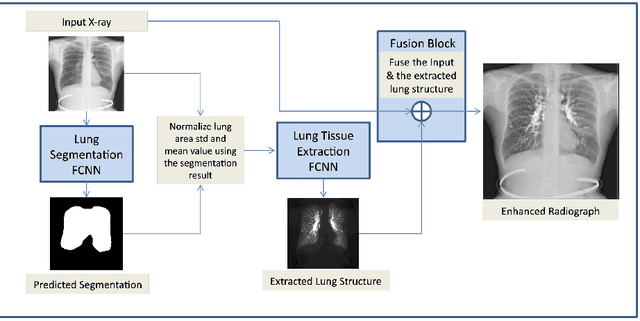

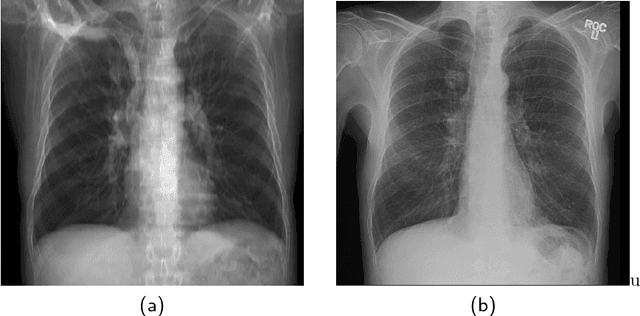

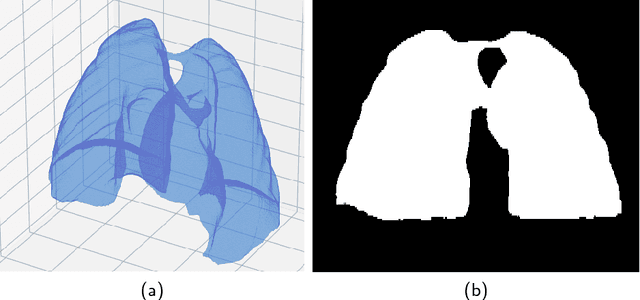

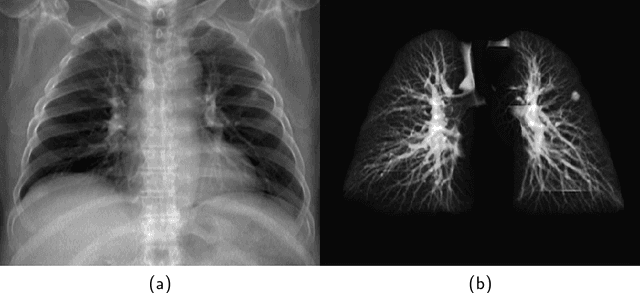

Lung Structures Enhancement in Chest Radiographs via CT based FCNN Training

Oct 14, 2018

Abstract: The abundance of overlapping anatomical structures appearing in chest radiographs can reduce the performance of lung pathology detection by automated algorithms (CAD) as well as the human reader. In this paper, we present a deep learning based image processing technique for enhancing the contrast of soft lung structures in chest radiographs using Fully Convolutional Neural Networks (FCNN). Two 2D FCNN architectures were trained to accomplish the task: the first performs 2D lung segmentation, which is used for normalization of the lung area. The second FCNN is trained to extract lung structures. To create the training images, we employed simulated X-rays, or Digitally Reconstructed Radiographs (DRRs), derived from 516 scans belonging to the LIDC-IDRI dataset. By first segmenting the lungs in the CT domain, we are able to create a dataset of 2D lung masks to be used for training the segmentation FCNN. For training the extraction FCNN, we create DRR images of only voxels belonging to the 3D lung segmentation, which we call "Lung X-ray", and use them as target images. Once the lung structures are extracted, the original image can be enhanced by fusing the original input X-ray and the synthesized "Lung X-ray". We show that our enhancement technique is applicable to real X-ray data, and display our results on the recently released NIH Chest X-Ray-14 dataset. We see promising results when training a DenseNet-121 based architecture to work directly on the lung-enhanced X-ray images.
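The abstract says the enhanced image comes from fusing the input X-ray with the synthesized "Lung X-ray" but does not state the fusion rule. One plausible reading, shown here purely as an assumption, is a weighted addition followed by clipping to the valid intensity range. The function name `fuse`, the `weight` parameter, and the [0, 1] intensity scale are hypothetical.

```python
from typing import List, Sequence

Image = Sequence[Sequence[float]]  # 2D image with intensities in [0, 1]


def fuse(original: Image, structure: Image, weight: float = 0.5) -> List[List[float]]:
    """Add a weighted structure image to the original and clip to [0, 1].

    This weighted-sum rule is an illustrative assumption, not the paper's method.
    """
    if len(original) != len(structure):
        raise ValueError("images must have the same number of rows")
    fused: List[List[float]] = []
    for o_row, s_row in zip(original, structure):
        if len(o_row) != len(s_row):
            raise ValueError("images must have the same number of columns")
        fused.append(
            [min(1.0, max(0.0, o + weight * s)) for o, s in zip(o_row, s_row)]
        )
    return fused
```

A larger `weight` emphasizes the extracted lung structures more strongly, at the cost of saturating bright regions sooner.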
