Omid Askarisichani

Expertise and confidence explain how social influence evolves along intellective tasks

Nov 13, 2020

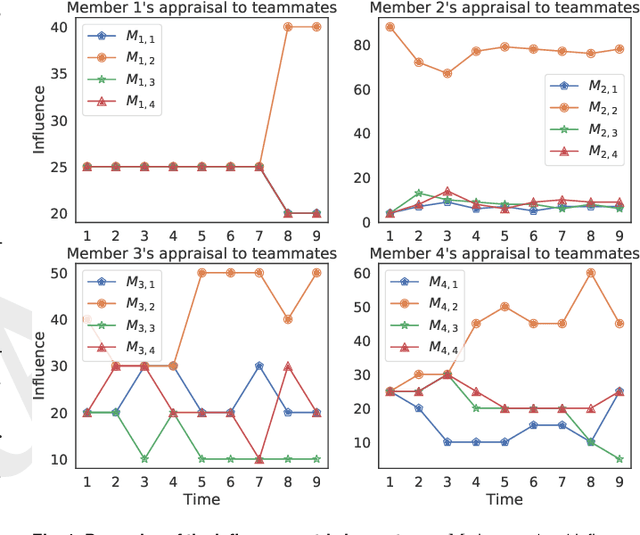

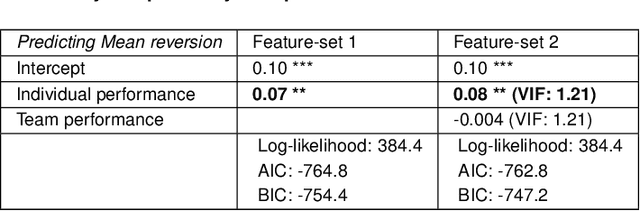

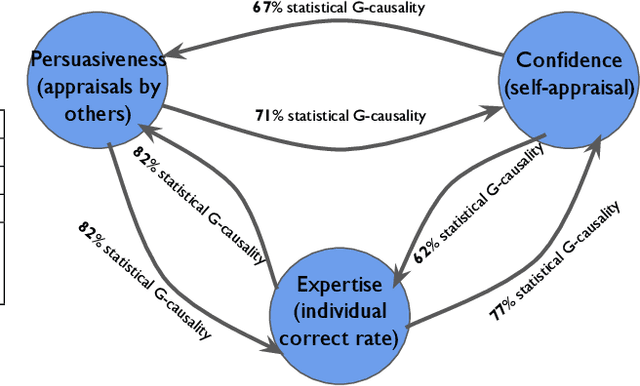

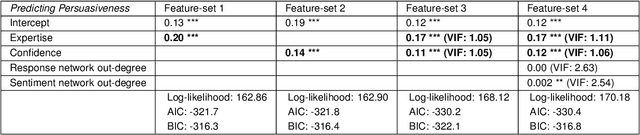

Abstract: Discovering the antecedents of individuals' influence in collaborative environments is an important, practical, and challenging problem. In this paper, we study interpersonal influence in small groups of individuals who collectively execute a sequence of intellective tasks. We observe that along an issue sequence with feedback, individuals with higher expertise and social confidence are accorded higher interpersonal influence. We also observe that low-performing individuals tend to underestimate their high-performing teammate's expertise. Based on these observations, we introduce three hypotheses and present empirical and theoretical support for their validity. We report empirical evidence on longstanding theories of transactive memory systems, social comparison, and confidence heuristics on the origins of social influence. We propose a cognitive dynamical model inspired by these theories to describe the process by which individuals adjust interpersonal influences over time. We demonstrate the model's accuracy in predicting individuals' influence and provide analytical results on its asymptotic behavior for the case with identically performing individuals. Lastly, we propose a novel approach using deep neural networks on a pre-trained text embedding model for predicting the influence of individuals. Using message contents, message times, and individual correctness collected during tasks, we are able to accurately predict individuals' self-reported influence over time. Extensive experiments verify the accuracy of the proposed models compared to baselines such as structural balance and the reflected appraisal model. While the neural network model is the most accurate, the dynamical model is the most interpretable for influence prediction.

DeepMap: Learning Deep Representations for Graph Classification

Apr 05, 2020

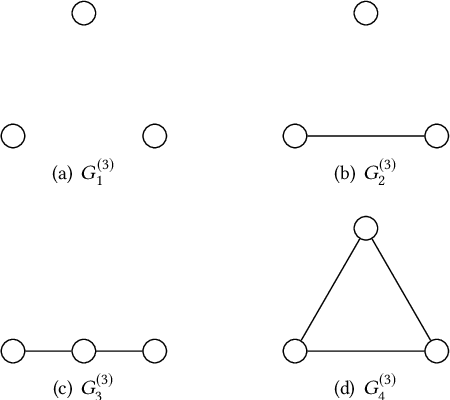

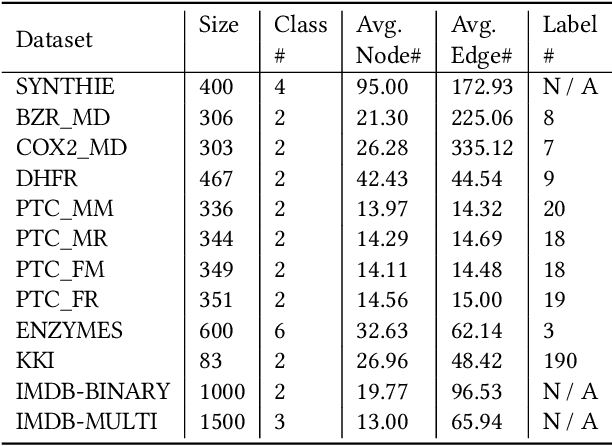

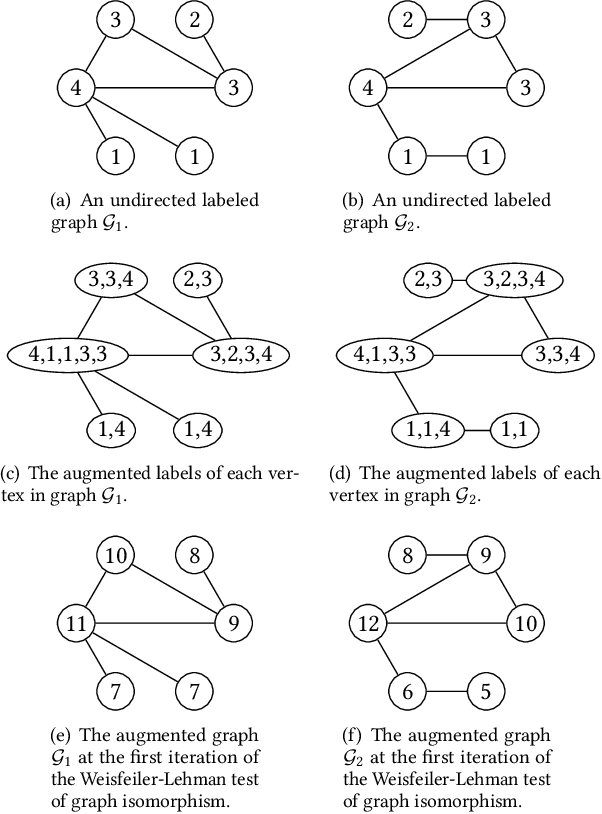

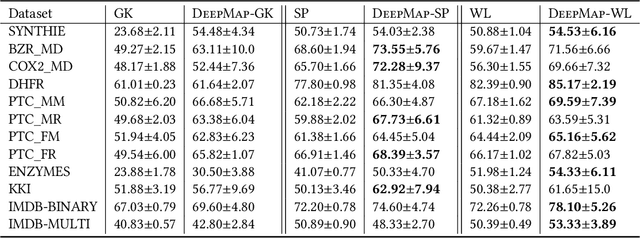

Abstract: Graph-structured data arise in many scenarios. A fundamental problem is to quantify the similarities of graphs for tasks such as classification. Graph kernels are positive-semidefinite functions that decompose graphs into substructures and compare them. One problem in the effective implementation of this idea is that the substructures are not independent, which leads to a high-dimensional feature space. In addition, graph kernels cannot capture the high-order complex interactions between vertices. To mitigate these two problems, we propose a framework called DeepMap to learn deep representations for graph feature maps. The learnt deep representation for a graph is a dense and low-dimensional vector that captures complex high-order interactions in a vertex neighborhood. DeepMap extends Convolutional Neural Networks (CNNs) to arbitrary graphs by aligning vertices across graphs and building the receptive field for each vertex. We empirically validate DeepMap on various graph classification benchmarks and demonstrate that it achieves state-of-the-art performance.
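
To make the graph-kernel idea in this abstract concrete, the following is a minimal sketch of a substructure-counting kernel, not the DeepMap method itself. It assumes a very simple substructure (an undirected edge identified by the labels of its two endpoints); the function names `edge_label_feature_map` and `kernel`, and the toy graphs, are hypothetical and chosen only for illustration.

```python
from collections import Counter

def edge_label_feature_map(labels, edges):
    """Explicit feature map: count undirected edges by the sorted
    pair of endpoint labels (an assumed, deliberately simple substructure)."""
    return Counter(tuple(sorted((labels[u], labels[v]))) for u, v in edges)

def kernel(phi_a, phi_b):
    """Kernel value as the inner product of two sparse count vectors.
    An inner product of explicit feature maps is positive semidefinite."""
    return sum(count * phi_b[key] for key, count in phi_a.items())

# Two toy labeled graphs (hypothetical data): path graphs on three vertices.
g1_labels = {0: "C", 1: "C", 2: "O"}
g1_edges = [(0, 1), (1, 2)]
g2_labels = {0: "C", 1: "O", 2: "O"}
g2_edges = [(0, 1), (1, 2)]

phi1 = edge_label_feature_map(g1_labels, g1_edges)
phi2 = edge_label_feature_map(g2_labels, g2_edges)

k12 = kernel(phi1, phi2)  # similarity between the two graphs
k11 = kernel(phi1, phi1)  # self-similarity of the first graph
```

With richer substructures (subtrees, shortest paths, graphlets), the number of distinct keys in the feature map grows quickly, which is the high-dimensionality problem the abstract refers to.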
