Omer Sahin Tas

RetroMotion: Retrocausal Motion Forecasting Models are Instructable

May 26, 2025

Abstract:Motion forecasts of road users (i.e., agents) vary in complexity as a function of scene constraints and interactive behavior. We address this with a multi-task learning method for motion forecasting that includes a retrocausal flow of information. The corresponding tasks are to forecast (1) marginal trajectory distributions for all modeled agents and (2) joint trajectory distributions for interacting agents. Using a transformer model, we generate the joint distributions by re-encoding marginal distributions followed by pairwise modeling. This incorporates a retrocausal flow of information from later points in marginal trajectories to earlier points in joint trajectories. Per trajectory point, we model positional uncertainty using compressed exponential power distributions. Notably, our method achieves state-of-the-art results in the Waymo Interaction Prediction dataset and generalizes well to the Argoverse 2 dataset. Additionally, our method provides an interface for issuing instructions through trajectory modifications. Our experiments show that regular training of motion forecasting leads to the ability to follow goal-based instructions and to adapt basic directional instructions to the scene context. Code: https://github.com/kit-mrt/future-motion

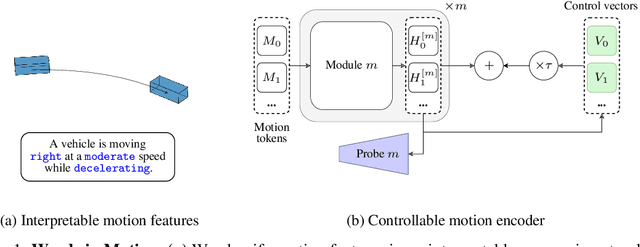

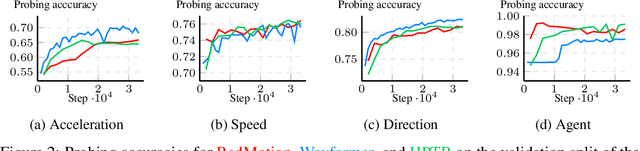

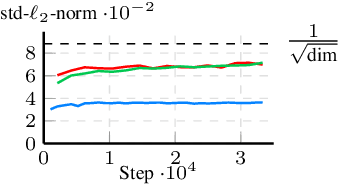

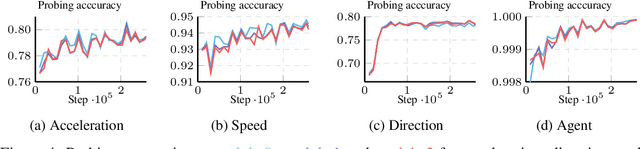

Words in Motion: Representation Engineering for Motion Forecasting

Jun 17, 2024

Abstract:Motion forecasting transforms sequences of past movements and environment context into future motion. Recent methods rely on learned representations, resulting in hidden states that are difficult to interpret. In this work, we use natural language to quantize motion features in a human-interpretable way, and measure the degree to which they are embedded in hidden states. Our experiments reveal that hidden states of motion sequences are arranged with respect to our discrete sets of motion features. Following these insights, we fit control vectors to motion features, which allow for controlling motion forecasts at inference. Consequently, our method enables controlling transformer-based motion forecasting models with textual inputs, providing a unique interface to interact with and understand these models. Our implementation is available at https://github.com/kit-mrt/future-motion
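The idea of fitting a control vector to a motion feature and applying it at inference can be illustrated with a minimal sketch. This is not the authors' implementation: it assumes a simple difference-of-means fit between hidden states of two opposing feature classes, and the names `fit_control_vector`, `apply_control`, and `strength` are hypothetical.

```python
import numpy as np


def fit_control_vector(hidden_a, hidden_b):
    """Assumed fitting rule: difference of the mean hidden states of two
    opposing motion-feature classes (e.g. "fast" vs. "slow")."""
    hidden_a = np.asarray(hidden_a, dtype=float)
    hidden_b = np.asarray(hidden_b, dtype=float)
    return hidden_a.mean(axis=0) - hidden_b.mean(axis=0)


def apply_control(hidden, control_vector, strength=1.0):
    """Shift a hidden state along the control vector at inference."""
    return np.asarray(hidden, dtype=float) + strength * control_vector


# Toy hidden states with two dimensions (illustrative values only).
hidden_fast = np.array([[1.0, 2.0], [3.0, 4.0]])
hidden_slow = np.array([[0.0, 0.0], [2.0, 2.0]])

control_vector = fit_control_vector(hidden_fast, hidden_slow)
steered = apply_control(np.zeros(2), control_vector, strength=2.0)
```

In a real model the hidden states would come from a chosen layer of the forecasting transformer, and the strength would be tuned per feature.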

Road Barlow Twins: Redundancy Reduction for Road Environment Descriptors and Motion Prediction

Jun 19, 2023

Abstract:Anticipating the future motion of traffic agents is vital for self-driving vehicles to ensure their safe operation. We introduce a novel self-supervised pre-training method as well as a transformer model for motion prediction. Our method is based on Barlow Twins and applies the redundancy reduction principle to embeddings generated from HD maps. Additionally, we introduce a novel approach for redundancy reduction, where a potentially large and variable set of road environment tokens is transformed into a fixed-size set of road environment descriptors (RED). Our experiments reveal that the proposed pre-training method can improve minADE and minFDE by 12% and 15% and outperform contrastive learning with PreTraM and SimCLR in a semi-supervised setting. Our REDMotion model achieves results that are competitive with those of recent related methods such as MultiPath++ or Scene Transformer. Code is available at: https://github.com/kit-mrt/road-barlow-twins
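For readers unfamiliar with the reported metrics, the following is a minimal sketch of how minADE and minFDE are commonly computed for a single agent with K predicted trajectory modes. The function name `min_ade_fde` and the array shapes are assumptions for illustration; benchmark implementations may differ in details such as masking of invalid time steps.

```python
import numpy as np


def min_ade_fde(predictions, ground_truth):
    """predictions: (K, T, 2) candidate trajectories.
    ground_truth: (T, 2) observed future trajectory.
    Returns (minADE, minFDE), each minimized over the K modes."""
    predictions = np.asarray(predictions, dtype=float)
    ground_truth = np.asarray(ground_truth, dtype=float)
    # Euclidean displacement per mode and time step, shape (K, T).
    dists = np.linalg.norm(predictions - ground_truth[None], axis=-1)
    min_ade = dists.mean(axis=1).min()  # best average displacement
    min_fde = dists[:, -1].min()        # best final displacement
    return float(min_ade), float(min_fde)


# Toy example: two modes offset laterally by 1.0 and 0.5 from the truth.
gt = np.array([[0.0, 0.0], [1.0, 0.0], [2.0, 0.0]])
preds = np.stack([gt + np.array([0.0, 1.0]), gt + np.array([0.0, 0.5])])
min_ade, min_fde = min_ade_fde(preds, gt)
```

Lower values are better for both metrics, so the reported improvements correspond to reductions in these displacement errors.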

Tackling Existence Probabilities of Objects with Motion Planning for Automated Urban Driving

Feb 04, 2020

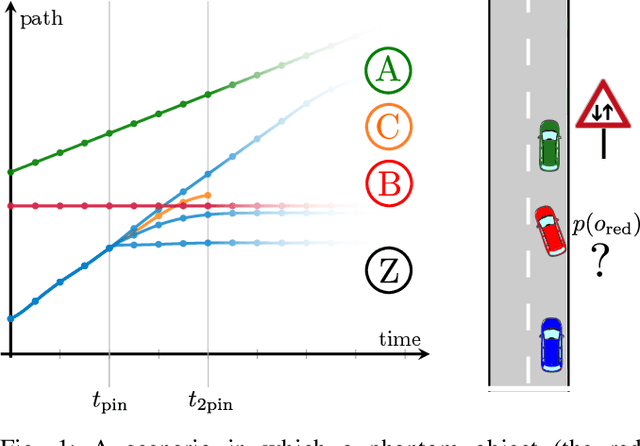

Abstract:Motion planners take uncertain information about the environment as an input. The environment information is usually noisy and tends to contain false positive object detections rather than false negatives. State-of-the-art motion planning approaches take the uncertain state and prediction of objects into account, but fail to distinguish between their existence probabilities. In this paper we present a planning approach that considers the existence probabilities of objects. The proposed approach reacts smoothly to falsely detected phantom objects, and in this way tolerates the faults arising from perception and prediction without performing harsh reactions, unless such reactions are unavoidable for maintaining safety.

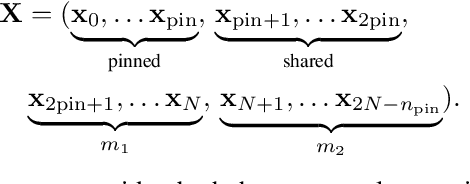

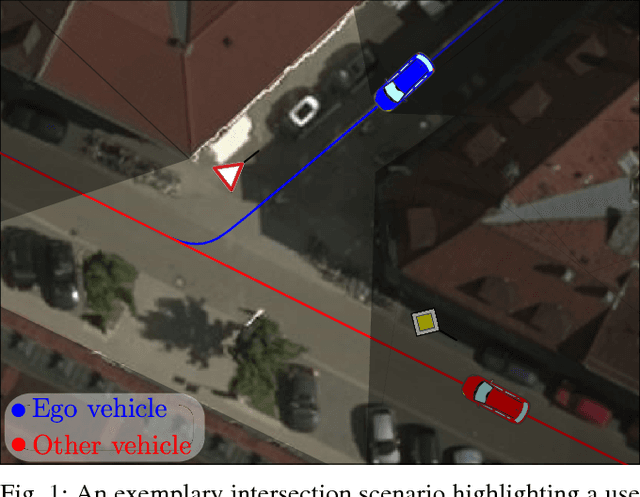

Limited Visibility and Uncertainty Aware Motion Planning for Automated Driving

Oct 30, 2018

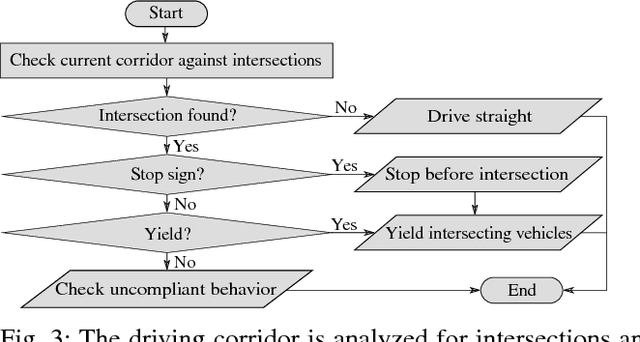

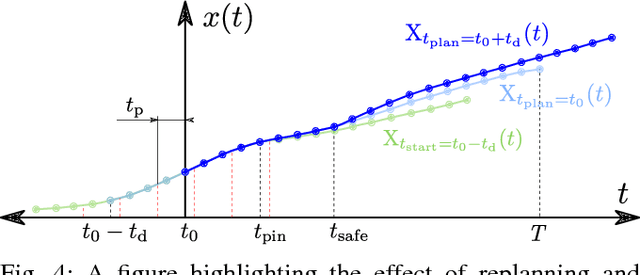

Abstract:Adverse weather conditions and occlusions in urban environments result in impaired perception. The uncertainties are handled in different modules of an automated vehicle, ranging from the sensor level through situation prediction to motion planning. This paper focuses on motion planning given an uncertain environment model with occlusions. We present a method to remain collision free for the worst-case evolution of the given scene. We define criteria that measure the available margins to a collision while considering visibility and interactions, and consequently integrate conditions that apply these criteria into an optimization-based motion planner. We show the generality of our method by validating it in several distinct urban scenarios.
