Olyvia Kundu

Optimized edge-based grasping method for a cluttered environment

Sep 04, 2018

Abstract: This paper looks into the problem of localizing a grasping region, along with a suitable pose, in a cluttered environment without any a priori knowledge of the object geometry. This end-to-end method detects the handles from a single frame of sensor input. The pipeline starts with the creation of multiple surface segments to detect the required gap in the first stage, which eventually helps in detecting boundary lines. Our novelty lies in merging color-based edges and depth edges in order to get more reliable boundary points, through which a pair of boundary lines is fitted. This information is also used to validate the handle by measuring the angle between the boundary lines and by checking for any potential occlusion. In addition, we propose an optimizing cost-function-based method to choose the best handle from a set of valid handles. The proposed method is tested on real-life datasets and is found to outperform state-of-the-art methods in terms of precision.
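The angle check between the two boundary lines mentioned in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the use of an SVD-based line fit, the 2D point representation, and the 10-degree threshold are all assumptions chosen for illustration.

```python
import numpy as np

def fit_direction(pts):
    """Fit a line to 2D points and return its unit direction (first principal axis)."""
    centered = pts - pts.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return vt[0]

def angle_between_lines(pts_a, pts_b):
    """Angle in degrees (0 to 90) between the lines fitted to two boundary point sets."""
    da, db = fit_direction(pts_a), fit_direction(pts_b)
    # Lines have no orientation, so use the absolute value of the cosine.
    cos = abs(float(np.dot(da, db)))
    return float(np.degrees(np.arccos(np.clip(cos, 0.0, 1.0))))

def is_valid_handle(pts_a, pts_b, max_angle_deg=10.0):
    """Hypothetical validity test: accept the handle if the boundaries are nearly parallel."""
    return angle_between_lines(pts_a, pts_b) <= max_angle_deg

# Two parallel boundaries and one perpendicular boundary (made-up points).
left = np.array([[0.0, 0.0], [1.0, 0.0], [2.0, 0.0]])
right = np.array([[0.0, 1.0], [1.0, 1.0], [2.0, 1.0]])
cross = np.array([[0.0, 0.0], [0.0, 1.0], [0.0, 2.0]])
print(is_valid_handle(left, right), is_valid_handle(left, cross))
```

The occlusion check and the cost function for ranking valid handles are not covered by this sketch, since the abstract does not specify them.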

A Novel Geometry-based Algorithm for Robust Grasping in Extreme Clutter Environment

Jul 27, 2018

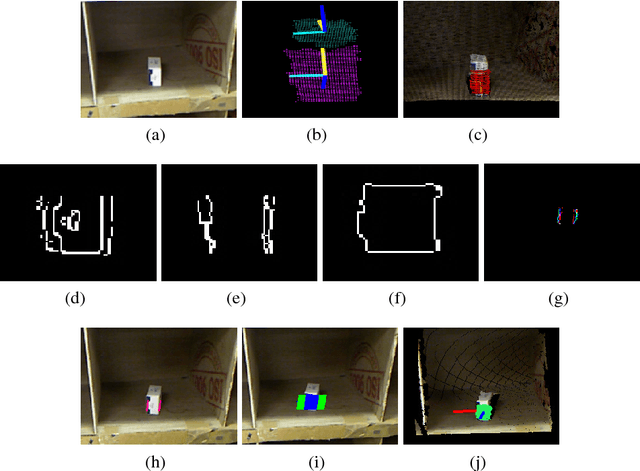

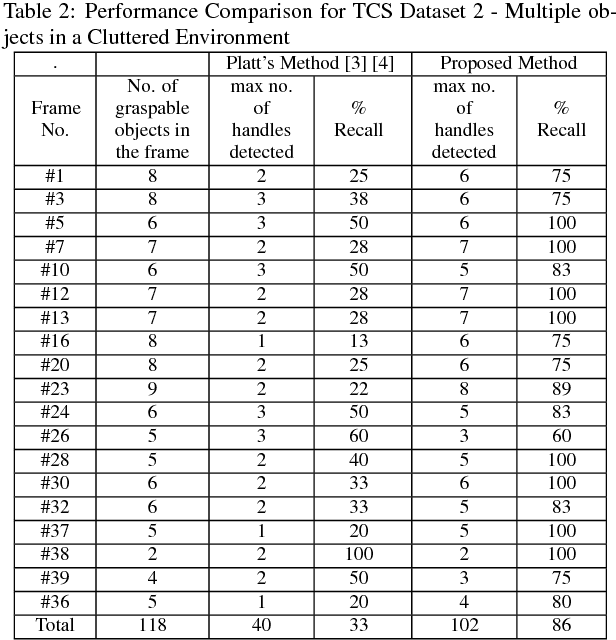

Abstract: This paper looks into the problem of grasping unknown objects in a cluttered environment using 3D point cloud data obtained from a range or an RGBD sensor. The objective is to identify graspable regions and detect suitable grasp poses from a single-view, possibly partial, 3D point cloud without any a priori knowledge of the object geometry. The problem is solved in two steps: (1) identifying and segmenting various object surfaces, and (2) searching for suitable grasping handles on these surfaces by applying geometric constraints of the physical gripper. The first step is solved by using a modified version of the region growing algorithm that uses a pair of thresholds for the smoothness constraint on local surface normals to find natural boundaries of object surfaces. In this process, a novel concept of edge point is introduced that allows us to segment between different surfaces of the same object. The second step is solved by converting a 6D pose detection problem into a 1D linear search problem by projecting 3D cloud points onto the principal axes of the object surface. The graspable handles are then localized by applying physical constraints of the gripper. The resulting method allows us to grasp all kinds of objects, including rectangular or box-type objects with flat surfaces, which have so far been difficult to deal with in the grasping literature. The proposed method is simple, can be implemented in real time, and does not require any off-line training phase for finding these affordances. The improvements achieved are demonstrated through comparison with another state-of-the-art grasping algorithm on various publicly available and self-created datasets.
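The idea of reducing grasp search to a 1D scan along a principal axis can be sketched as follows. This is a simplified illustration under stated assumptions, not the paper's algorithm: the function names, the slab width, and the rule "a slab is graspable if its extent along the second principal axis fits within the gripper opening" are all hypothetical.

```python
import numpy as np

def principal_axes(points):
    """Return the centroid and principal axes (rows, ordered by decreasing variance)."""
    centroid = points.mean(axis=0)
    _, _, vt = np.linalg.svd(points - centroid, full_matrices=False)
    return centroid, vt

def find_handles(points, max_opening, slab=0.01):
    """Scan along the major axis; keep slabs narrow enough for the gripper.

    Returns a list of (position_along_major_axis, width) tuples.
    `max_opening` and `slab` are in the same units as `points`.
    """
    centroid, axes = principal_axes(points)
    local = (points - centroid) @ axes.T  # coordinates in the principal frame
    major, minor = local[:, 0], local[:, 1]
    handles = []
    for start in np.arange(major.min(), major.max(), slab):
        mask = (major >= start) & (major < start + slab)
        if not mask.any():
            continue
        width = minor[mask].max() - minor[mask].min()
        if width <= max_opening:  # gripper can close around this slab
            handles.append((start + slab / 2, width))
    return handles

# Synthetic flat surface patch: 0.20 long, 0.04 wide (made-up data, in metres).
xs, ys = np.meshgrid(np.linspace(0, 0.2, 41), np.linspace(0, 0.04, 9))
patch = np.column_stack([xs.ravel(), ys.ravel(), np.zeros(xs.size)])
wide_gripper = find_handles(patch, max_opening=0.08)
narrow_gripper = find_handles(patch, max_opening=0.02)
print(len(wide_gripper), len(narrow_gripper))
```

A real pipeline would run this per segmented surface and would also need clearance checks around each candidate, which the sketch omits.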

Design and Development of an automated Robotic Pick & Stow System for an e-Commerce Warehouse

Mar 07, 2017

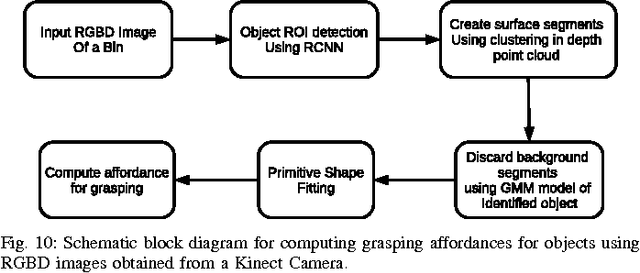

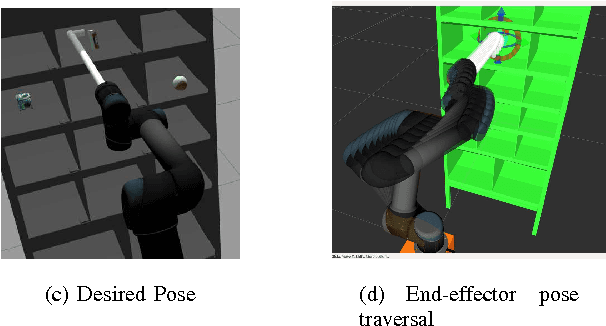

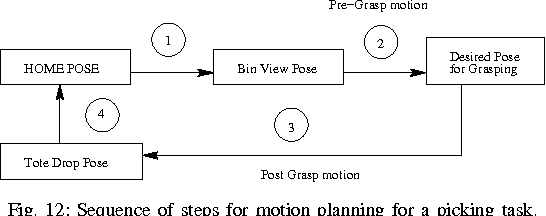

Abstract: In this paper, we provide details of a robotic system that can automate the task of picking and stowing objects from and to a rack in an e-commerce fulfillment warehouse. The system comprises four main modules: (1) a perception module responsible for recognizing query objects and localizing them in the 3-dimensional robot workspace; (2) a planning module that generates the paths the robot end-effector has to take to reach the objects in the rack or in the tote; (3) a calibration module that defines the physical workspace for the robot visible through the on-board vision system; and (4) a gripping and suction system for picking and stowing different kinds of objects. The perception module uses a faster region-based Convolutional Neural Network (R-CNN) to recognize objects. We designed a novel two-finger gripper that incorporates a pneumatic-valve-based suction effect to enhance its ability to pick different kinds of objects. The system was developed by the IITK-TCS team for participation in the Amazon Picking Challenge 2016 event. The team secured fifth place in the stowing task in the event. The purpose of this article is to share our experiences with students and practicing engineers and enable them to build similar systems. The overall efficacy of the system is demonstrated through several simulations as well as real-world experiments with actual robots.
