Olli Silven

Shavette: Low Power Neural Network Acceleration via Algorithm-level Error Detection and Undervolting

Oct 17, 2024

Abstract: Reduced voltage operation is an effective technique for substantially improving energy efficiency in digital circuits. This brief introduces a simple approach for enabling reduced voltage operation of Deep Neural Network (DNN) accelerators through software modifications alone. Conventional approaches for enabling reduced voltage operation, e.g., Timing Error Detection (TED) systems, incur significant development costs and overheads and are not applicable to off-the-shelf components. In contrast, the solution proposed in this paper relies on algorithm-based error detection; hence, it can be implemented with low development costs, does not require any circuit modifications, and is even applicable to commodity devices. By experimenting with popular DNNs, i.e., LeNet and VGG16, on a GPU platform, we demonstrate 18% to 25% energy savings with no accuracy loss of the models and negligible throughput compromise (< 3.9%), considering the overheads from integrating the error detection schemes into the DNN. Integrating the presented algorithmic solution into a design is simpler than applying conventional TED-based techniques, which require extensive circuit-level modifications, cell library characterizations, or special support from the design tools.

Few-shot Class-incremental Learning: A Survey

Aug 13, 2023

Abstract: Few-shot Class-Incremental Learning (FSCIL) presents a unique challenge in machine learning, as it requires continuously learning new classes from sparse labeled training samples without forgetting previous knowledge. While this field has seen recent progress, it remains an active area of exploration. This paper aims to provide a comprehensive and systematic review of FSCIL. We examine various facets of FSCIL, including the problem definition, the primary challenges of unreliable empirical risk minimization and the stability-plasticity dilemma, general schemes, and the related problems of incremental learning and few-shot learning. In addition, we offer an overview of benchmark datasets and evaluation metrics. Furthermore, we introduce the classification methods in FSCIL from data-based, structure-based, and optimization-based approaches and the object detection methods in FSCIL from anchor-free and anchor-based approaches. Finally, we highlight several promising research directions within FSCIL that merit further investigation.

Challenges of Artificial Intelligence -- From Machine Learning and Computer Vision to Emotional Intelligence

Jan 05, 2022

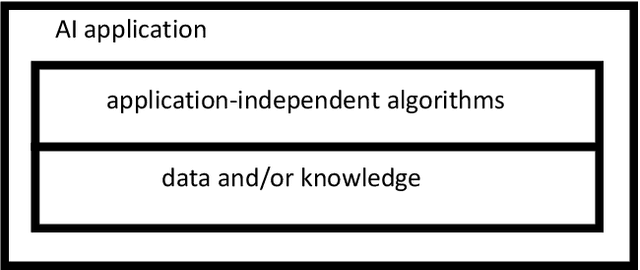

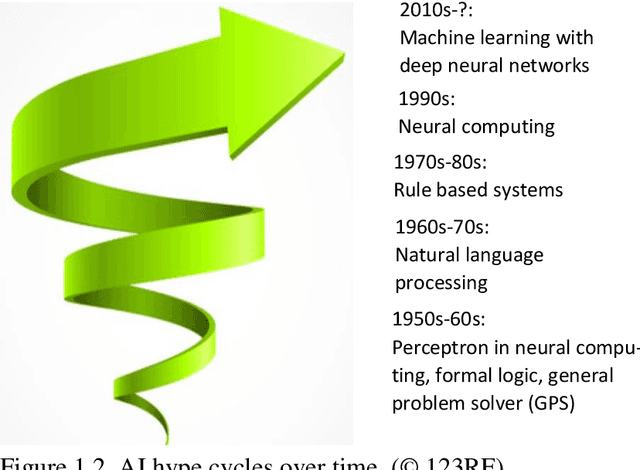

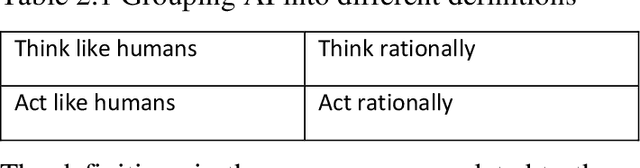

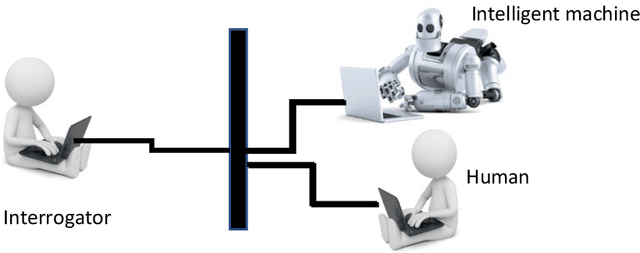

Abstract: Artificial intelligence (AI) has become a part of everyday conversation and our lives. It is considered the new electricity that is revolutionizing the world. AI receives heavy investment in both industry and academia. However, there is also a lot of hype in the current AI debate. AI based on so-called deep learning has achieved impressive results in many problems, but its limits are already visible. AI has been under research since the 1940s, and the industry has seen many ups and downs due to over-expectations and the disappointments that have followed. The purpose of this book is to give a realistic picture of AI, its history, its potential, and its limitations. We believe that AI is a helper, not a ruler of humans. We begin by describing what AI is and how it has evolved over the decades. After the fundamentals, we explain the importance of massive data for the current mainstream of artificial intelligence. The most common representations for AI, methods, and machine learning are covered. In addition, the main application areas are introduced. Computer vision has been central to the development of AI. The book provides a general introduction to computer vision and includes an exposure to the results and applications of our own research. Emotions are central to human intelligence, but little use has been made of them in AI. We present the basics of emotional intelligence and our own research on the topic. We discuss super-intelligence that transcends human understanding, explaining why such an achievement seems impossible on the basis of present knowledge, and how AI could be improved. Finally, we summarize the current state of AI and what to do in the future. In the appendix, we look at the development of AI education, especially from the perspective of the contents taught at our own university.

Transport Triggered Array Processor for Vision Applications

Jun 10, 2019

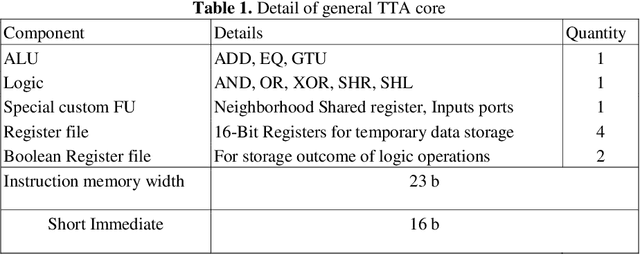

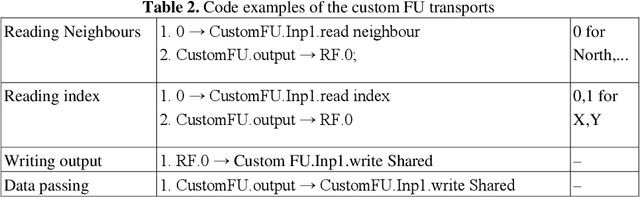

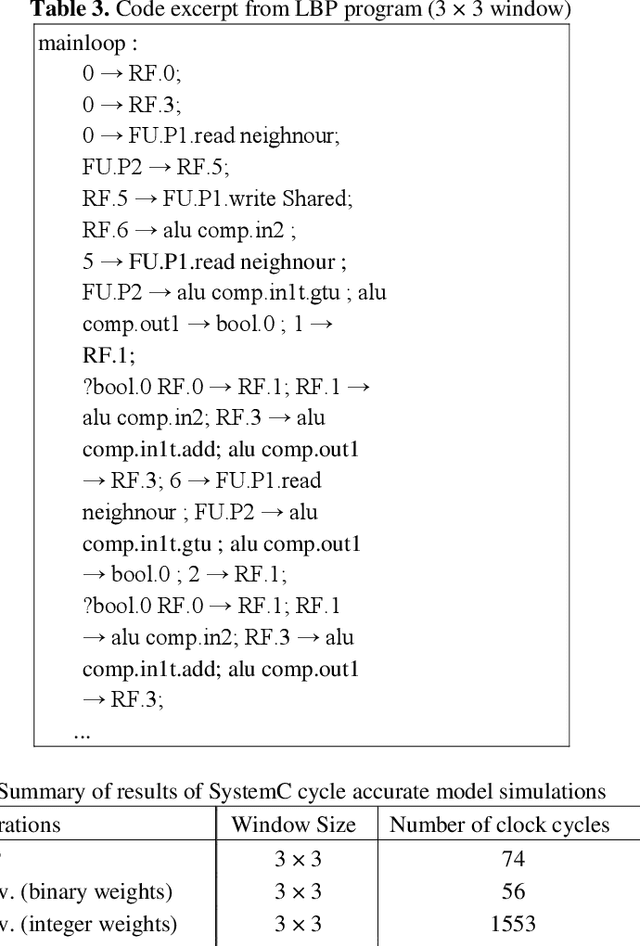

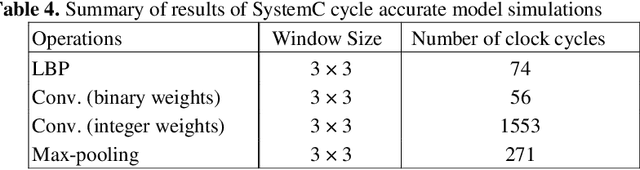

Abstract: Low-level sensory data processing in many Internet-of-Things (IoT) devices pursues energy efficiency by utilizing sleep modes or slowing the clock to the minimum. To curb the share of stand-by power dissipation in those designs, near-threshold/sub-threshold operating points or ultra-low-leakage fabrication processes are employed. These limit the clock rates significantly, reducing the computing throughput of individual processing cores. In this contribution, we explore compensating for the performance loss of operating in the near-threshold region (Vdd = 0.6 V) through massive parallelization. The benefits of near-threshold operation and massive parallelism are optimum energy consumption per instruction and minimized memory roundtrips, respectively. The Processing Elements (PEs) of the design are based on the Transport Triggered Architecture. The fine-grained programmable parallel solution allows for fast and efficient computation of learnable low-level features (e.g., local binary descriptors and convolutions). Other operations, including max-pooling, have also been implemented. The programmable design achieves excellent energy efficiency for Local Binary Patterns computations.
