Ola Hall

Leveraging ChatGPT's Multimodal Vision Capabilities to Rank Satellite Images by Poverty Level: Advancing Tools for Social Science Research

Jan 24, 2025

Abstract: This paper investigates the novel application of Large Language Models (LLMs) with vision capabilities to analyze satellite imagery for village-level poverty prediction. Although LLMs were originally designed for natural language understanding, their adaptability to multimodal tasks, including geospatial analysis, has opened new frontiers in data-driven research. By leveraging advancements in vision-enabled LLMs, we assess their ability to provide interpretable, scalable, and reliable insights into human poverty from satellite images. Using a pairwise comparison approach, we demonstrate that ChatGPT can rank satellite images based on poverty levels with accuracy comparable to domain experts. These findings highlight both the promise and the limitations of LLMs in socioeconomic research, providing a foundation for their integration into poverty assessment workflows. This study contributes to the ongoing exploration of unconventional data sources for welfare analysis and opens pathways for cost-effective, large-scale poverty monitoring.
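The pairwise comparison idea mentioned in the abstract can be illustrated with a minimal sketch. It assumes a simple win-count aggregation, which the abstract does not specify; the function `rank_by_pairwise_wins`, the `prefer` judge, and the toy wealth scores are all hypothetical stand-ins, not the paper's method or data.

```python
from itertools import combinations

def rank_by_pairwise_wins(items, prefer):
    """Rank items by how many pairwise comparisons each one wins.

    `prefer(a, b)` is a hypothetical judge returning whichever of a or b
    it considers poorer; it could wrap a model call or a human rating.
    """
    wins = {item: 0 for item in items}
    for a, b in combinations(items, 2):
        wins[prefer(a, b)] += 1
    # Items judged poorer most often come first; ties keep input order.
    return sorted(items, key=lambda item: wins[item], reverse=True)

# Toy stand-in judge: compares made-up wealth scores instead of images.
toy_wealth = {"village_a": 0.2, "village_b": 0.9, "village_c": 0.5}

def judge(a, b):
    return a if toy_wealth[a] < toy_wealth[b] else b

ranking = rank_by_pairwise_wins(list(toy_wealth), judge)
print(ranking)
```

With n images this needs n(n-1)/2 comparisons, so a real workflow would likely sample pairs or use a rating model rather than compare every pair.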

Enhancing Carbon Emission Reduction Strategies using OCO and ICOS data

Oct 05, 2024

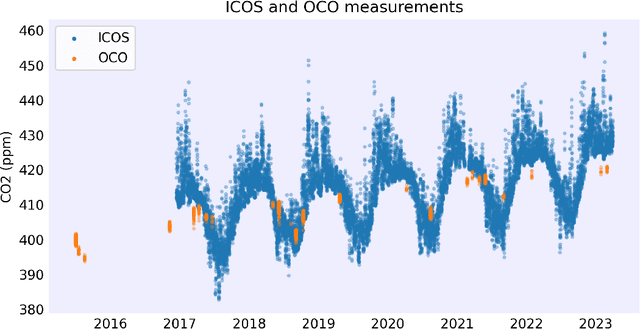

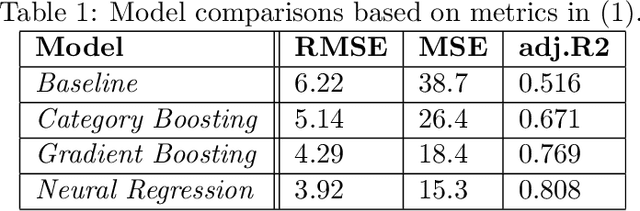

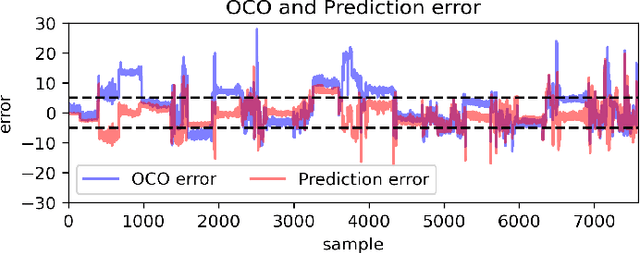

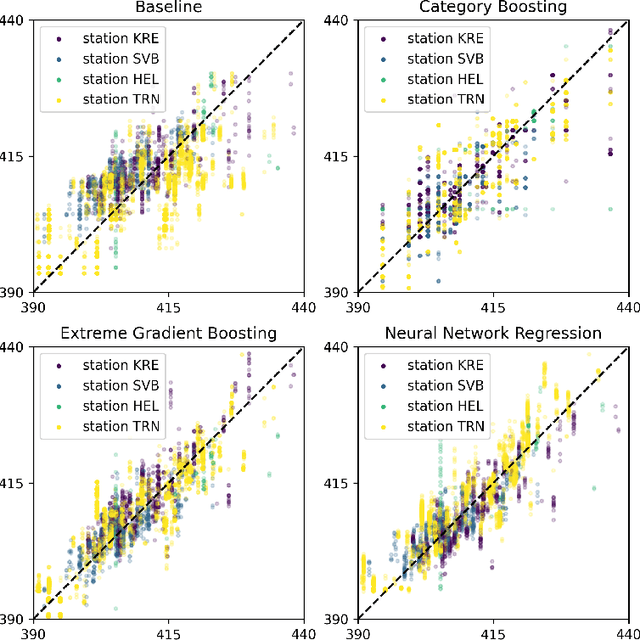

Abstract: We propose a methodology to enhance local CO2 monitoring by integrating satellite data from the Orbiting Carbon Observatories (OCO-2 and OCO-3) with ground-level observations from the Integrated Carbon Observation System (ICOS) and weather data from the ECMWF Reanalysis v5 (ERA5). Unlike traditional methods that downsample national data, our approach uses multimodal data fusion for high-resolution CO2 estimations. We employ weighted K-nearest neighbor (KNN) interpolation with machine learning models to predict ground-level CO2 from satellite measurements, achieving a Root Mean Squared Error of 3.92 ppm. Our results show the effectiveness of integrating diverse data sources in capturing local emission patterns, highlighting the value of high-resolution atmospheric transport models. The developed model improves the granularity of CO2 monitoring, providing precise insights for targeted carbon mitigation strategies, and represents a novel application of neural networks and KNN in environmental monitoring, adaptable to various regions and temporal scales.
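As a rough illustration of weighted KNN interpolation and the RMSE metric named in the abstract, here is a minimal sketch. It assumes inverse-distance weights over 2D coordinates, which the abstract does not confirm; the function names, the `eps` guard, and the sample values are hypothetical and do not reproduce the paper's pipeline or data.

```python
import math

def weighted_knn(query, points, values, k=3, eps=1e-12):
    """Estimate a value at `query` from its k nearest known points.

    Assumption: weights are inverse distances; `eps` avoids division
    by zero when the query coincides with a known point.
    """
    nearest = sorted((math.dist(query, p), v) for p, v in zip(points, values))[:k]
    weights = [1.0 / (d + eps) for d, _ in nearest]
    return sum(w * v for w, (_, v) in zip(weights, nearest)) / sum(weights)

def rmse(predicted, observed):
    """Root mean squared error between two equal-length sequences."""
    squared = [(p - o) ** 2 for p, o in zip(predicted, observed)]
    return math.sqrt(sum(squared) / len(squared))

# Made-up soundings: (x, y) positions with CO2 readings in ppm.
points = [(0.0, 0.0), (2.0, 0.0), (10.0, 10.0)]
values = [410.0, 420.0, 400.0]
estimate = weighted_knn((1.0, 0.0), points, values, k=2)
print(estimate)
```

The query sits midway between the two nearest points, so both get equal weight and the estimate is their mean; closer neighbors would dominate in less symmetric cases.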

Towards Explaining Satellite Based Poverty Predictions with Convolutional Neural Networks

Dec 01, 2023

Abstract: Deep convolutional neural networks (CNNs) have been shown to predict poverty and development indicators from satellite images with surprising accuracy. This paper presents a first attempt at analyzing the CNN's responses in detail and explaining the basis for the predictions. The CNN model, while trained on relatively low-resolution day- and night-time satellite images, is able to outperform human subjects who look at high-resolution images in ranking the Wealth Index categories. Multiple explainability experiments performed on the model indicate the importance of object sizes and pixel colors in the image, and provide a visualization of the importance of different structures in input images. A visualization is also provided of type images that maximize the network prediction of Wealth Index, which provides clues on what the CNN prediction is based on.

Satellite Image and Machine Learning based Knowledge Extraction in the Poverty and Welfare Domain

Mar 02, 2022

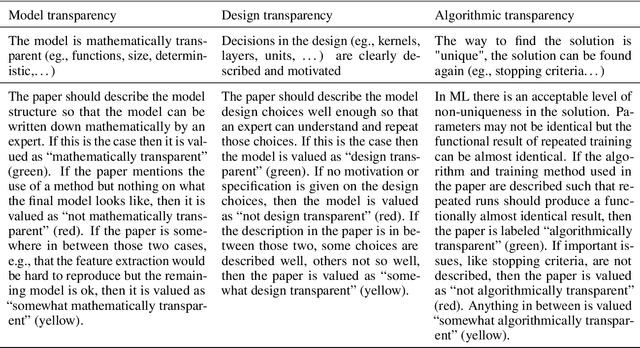

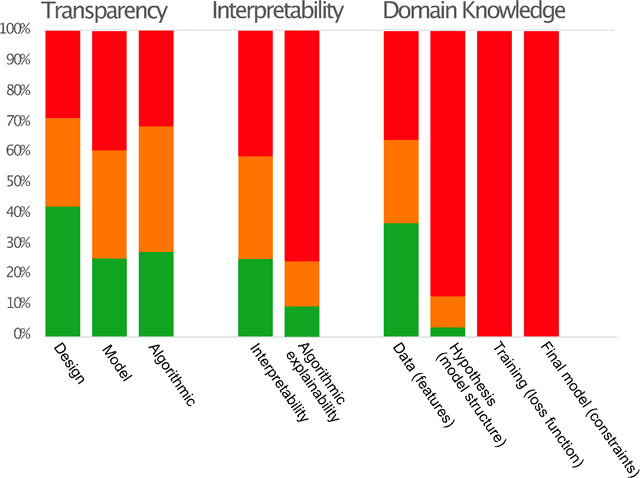

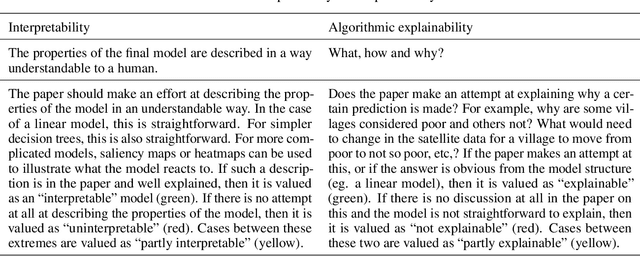

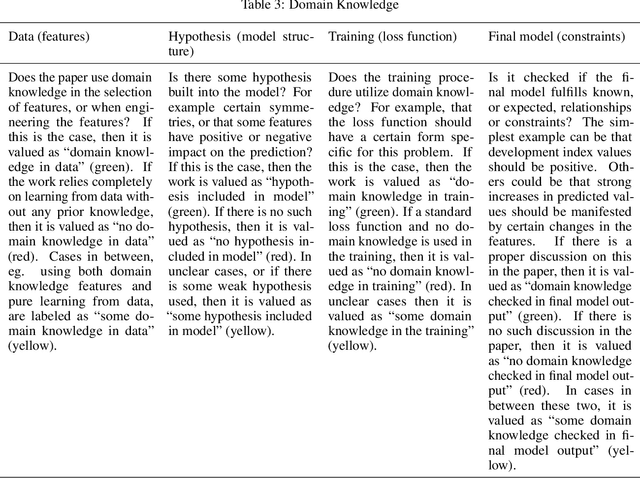

Abstract: Recent advances in artificial intelligence and machine learning have created a step change in how to measure human development indicators, in particular asset-based poverty. The combination of satellite imagery and machine learning has the capability to estimate poverty at a level similar to what is achieved with workhorse methods such as face-to-face interviews and household surveys. An increasingly important issue beyond static estimations is whether this technology can contribute to scientific discovery and consequently new knowledge in the poverty and welfare domain. A foundation for achieving scientific insights is domain knowledge, which in turn translates into explainability and scientific consistency. We review the literature focusing on three core elements relevant in this context (transparency, interpretability, and explainability) and investigate how they relate to the poverty, machine learning, and satellite imagery nexus. Our review of the field shows that the status of the three core elements of explainable machine learning (transparency, interpretability and domain knowledge) is varied and does not completely fulfill the requirements set up for scientific insights and discoveries. We argue that explainability is essential to support wider dissemination and acceptance of this research, and explainability means more than just interpretability.
