Satellite Image and Machine Learning based Knowledge Extraction in the Poverty and Welfare Domain


Mar 02, 2022

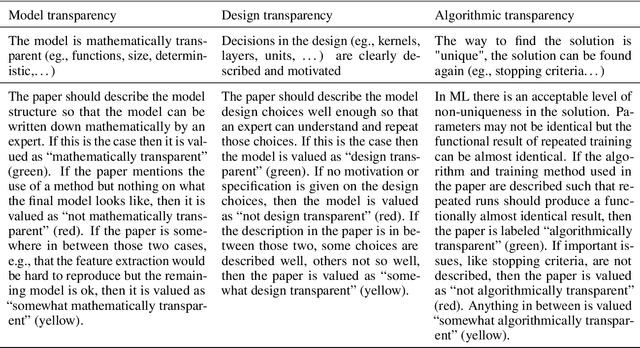

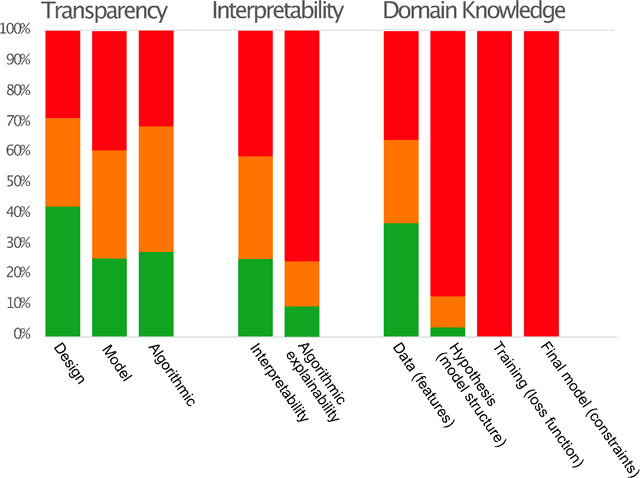

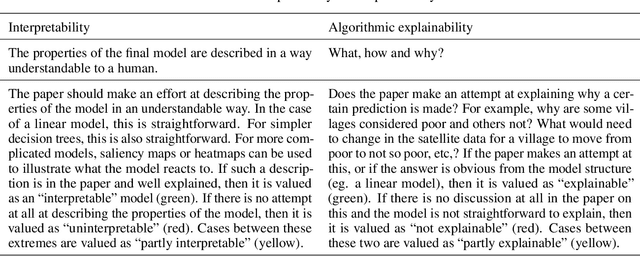

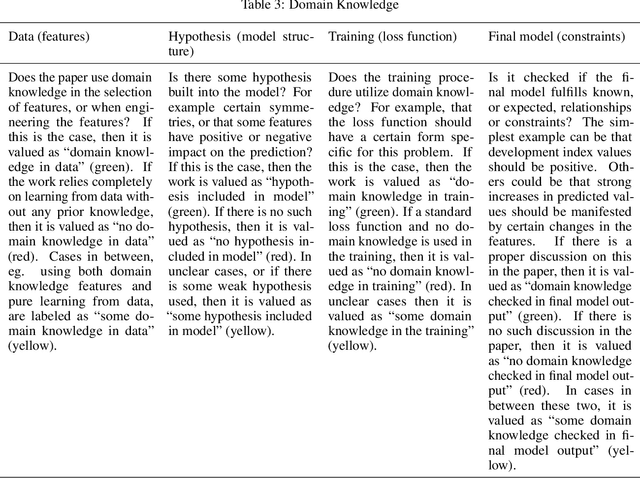

Recent advances in artificial intelligence and machine learning have created a step change in how human development indicators, in particular asset-based poverty, are measured. The combination of satellite imagery and machine learning can estimate poverty at a level similar to that achieved with workhorse methods such as face-to-face interviews and household surveys. An increasingly important issue beyond static estimation is whether this technology can contribute to scientific discovery and, consequently, new knowledge in the poverty and welfare domain. A foundation for achieving scientific insights is domain knowledge, which in turn translates into explainability and scientific consistency. We review the literature focusing on three core elements relevant in this context: transparency, interpretability, and explainability, and investigate how they relate to the poverty, machine learning, and satellite imagery nexus. Our review of the field shows that the status of the three core elements of explainable machine learning (transparency, interpretability, and domain knowledge) is varied and does not completely fulfill the requirements set up for scientific insights and discoveries. We argue that explainability is essential to support wider dissemination and acceptance of this research, and that explainability means more than just interpretability.
