Nyle Siddiqui

DLCR: A Generative Data Expansion Framework via Diffusion for Clothes-Changing Person Re-ID

Nov 11, 2024

Abstract: With the recently exhibited strength of generative diffusion models, an open research question is *whether images generated by these models can be used to learn better visual representations*. While this generative data expansion may suffice for easier visual tasks, we explore its efficacy on a more difficult discriminative task: clothes-changing person re-identification (CC-ReID). CC-ReID aims to match people appearing in non-overlapping cameras, even when they change their clothes across cameras. Not only are current CC-ReID models constrained by the limited diversity of clothing in current CC-ReID datasets, but generating additional data that retains important personal features for accurate identification is also an open challenge. To address this issue, we propose DLCR, a novel data expansion framework that leverages pre-trained diffusion and large language models (LLMs) to accurately generate diverse images of individuals in varied attire. We generate additional data for five benchmark CC-ReID datasets (PRCC, CCVID, LaST, VC-Clothes, and LTCC) and **increase their clothing diversity by 10x, totaling over 2.1M images generated**. DLCR employs diffusion-based text-guided inpainting, conditioned on clothing prompts constructed using LLMs, to generate synthetic data that only modifies a subject's clothes while preserving their personally identifiable features. With this massive increase in data, we introduce two novel strategies, progressive learning and test-time prediction refinement, that respectively reduce training time and further boost CC-ReID performance. On the PRCC dataset, we obtain a large top-1 accuracy improvement of 11.3% by training CAL, a previous state-of-the-art (SOTA) method, with DLCR-generated data. We publicly release our code and generated data for each dataset here: <https://github.com/CroitoruAlin/dlcr>.

DVANet: Disentangling View and Action Features for Multi-View Action Recognition

Dec 10, 2023

Abstract: In this work, we present a novel approach to multi-view action recognition where we guide learned action representations to be separated from view-relevant information in a video. Classifying action instances captured from multiple viewpoints is more difficult because the background, occlusion, and visibility of the captured action differ across camera angles. To tackle the various problems introduced in multi-view action recognition, we propose a novel configuration of learnable transformer decoder queries, in conjunction with two supervised contrastive losses, to enforce the learning of action features that are robust to shifts in viewpoint. Our disentangled feature learning occurs in two stages: the transformer decoder uses separate queries to learn action and view information separately, and these are then further disentangled using our two contrastive losses. We show that our model and method of training significantly outperform all other uni-modal models on four multi-view action recognition datasets: NTU RGB+D, NTU RGB+D 120, PKU-MMD, and N-UCLA. Compared to previous RGB works, we see maximal improvements of 1.5%, 4.8%, 2.2%, and 4.8% on each dataset, respectively.
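As a rough illustration of what a supervised contrastive loss computes, the sketch below implements the generic formulation (each anchor is pulled toward samples sharing its label and pushed away from all others). It is not the paper's implementation: the function names, the temperature value, and the toy embeddings are assumptions made for illustration, and the abstract does not specify how its two losses are defined.

```python
import math


def l2_normalize(vector):
    """Scale a vector to unit length (assumes it is not all zeros)."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def supervised_contrastive_loss(embeddings, labels, temperature=0.1):
    """Generic supervised contrastive loss over one batch.

    For each anchor i, positives are the other samples with the same label;
    the loss is the mean negative log-probability of picking a positive
    among all other samples. Anchors without positives are skipped.
    """
    z = [l2_normalize(v) for v in embeddings]
    n = len(z)
    total, anchors = 0.0, 0
    for i in range(n):
        positives = [j for j in range(n) if j != i and labels[j] == labels[i]]
        if not positives:
            continue
        # Temperature-scaled cosine similarity to every other sample.
        sims = {
            j: sum(a * b for a, b in zip(z[i], z[j])) / temperature
            for j in range(n)
            if j != i
        }
        # Log-sum-exp with max subtraction for numerical stability.
        max_sim = max(sims.values())
        log_denom = max_sim + math.log(
            sum(math.exp(s - max_sim) for s in sims.values())
        )
        total += -sum(sims[p] - log_denom for p in positives) / len(positives)
        anchors += 1
    return total / anchors if anchors else 0.0


# Toy batch (hypothetical): two tight clusters in 2-D.
features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
print(supervised_contrastive_loss(features, [0, 0, 1, 1]))  # labels match clusters
print(supervised_contrastive_loss(features, [0, 1, 0, 1]))  # labels cut across clusters
```

One plausible reading of the abstract is that a loss of this shape is applied once with action labels to the action features and once with view labels to the view features; that pairing is an assumption here, not something the abstract states.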

Mitigating Presentation Attack using DCGAN and Deep CNN

Jun 22, 2022

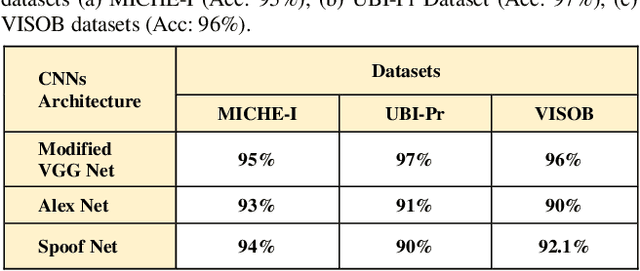

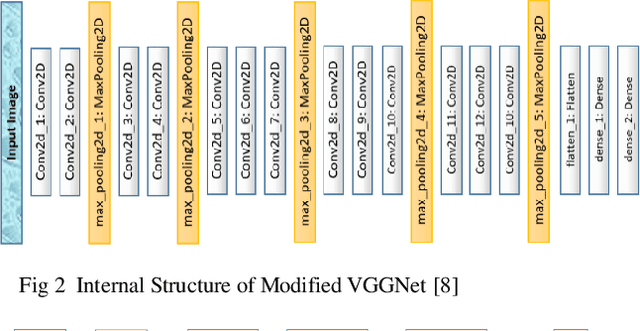

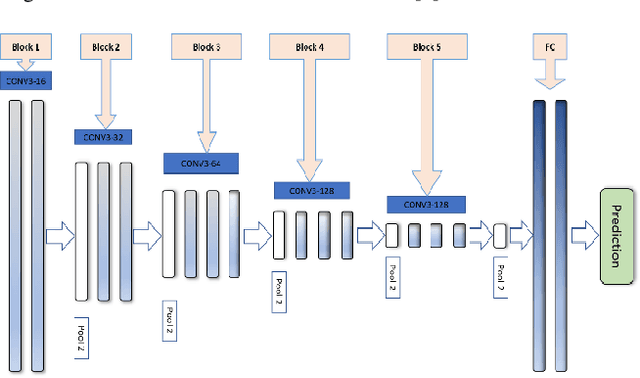

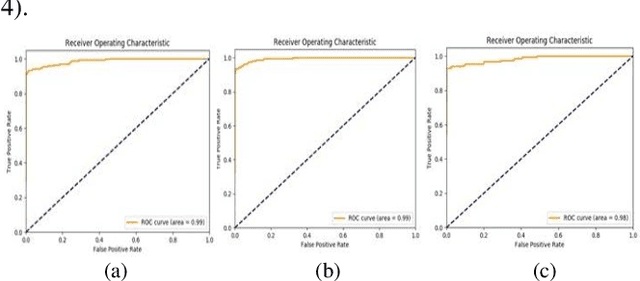

Abstract: Biometric-based authentication currently plays an essential role compared with conventional authentication systems; however, the risk of presentation attacks is rising accordingly. Our research aims at identifying the areas where presentation attacks can be prevented even when adequate biometric image samples of users are limited. Our work focuses on generating photorealistic synthetic images from the real image sets by implementing a Deep Convolutional Generative Adversarial Network (DCGAN). We implemented temporal and spatial augmentation during the fake image generation. Our work detects presentation attacks on facial and iris images using our deep CNN, inspired by VGGNet [1]. We applied these deep neural network techniques to three different biometric image datasets, namely MICHE-I [2], VISOB [3], and UBIPr [4]. The datasets used in this research contain images captured in both controlled and uncontrolled environments, with different resolutions and sizes. We obtained the best test accuracy of 97% on the UBIPr [4] iris dataset. For the MICHE-I [2] and VISOB [3] datasets, we achieved test accuracies of 95% and 96%, respectively.

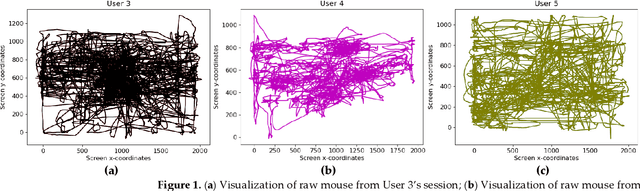

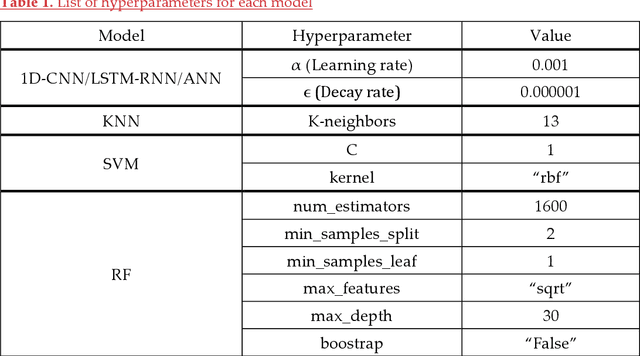

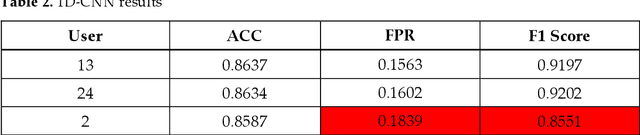

Machine and Deep Learning Applications to Mouse Dynamics for Continuous User Authentication

May 26, 2022

Abstract: Static authentication methods, like passwords, grow increasingly weak with advancements in technology and attack strategies. Continuous authentication has been proposed as a solution, in which users who have gained access to an account are still monitored in order to continuously verify that the user is not an imposter who had access to the user's credentials. Mouse dynamics is the behavior of a user's mouse movements and is a biometric that has shown great promise for continuous authentication schemes. This article builds upon our previously published work by evaluating our dataset of 40 users using three machine learning and deep learning algorithms. Two evaluation scenarios are considered. Binary classifiers are used for user authentication, with the top performer being a 1-dimensional convolutional neural network with a peak average test accuracy of 85.73% across the top 10 users. Multi-class classification is also examined using an artificial neural network, which reaches a peak accuracy of 92.48%, the highest accuracy we have seen for any classifier on this dataset.

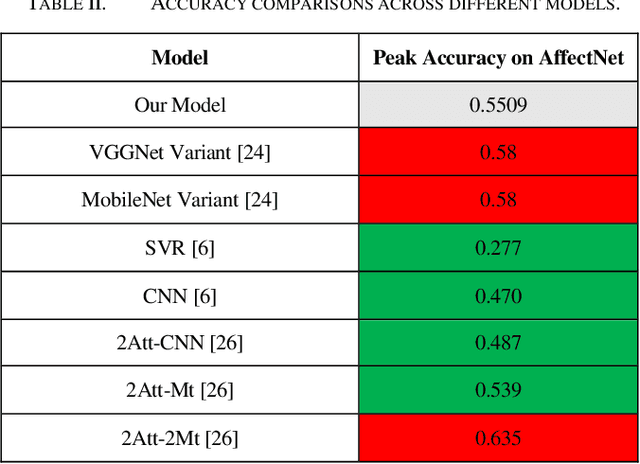

A Robust Framework for Deep Learning Approaches to Facial Emotion Recognition and Evaluation

Jan 30, 2022

Abstract: Facial emotion recognition (FER) is a vast and complex problem space within the domain of computer vision and thus requires a universally accepted baseline method with which to evaluate proposed models. While test datasets have served this purpose in the academic sphere, real-world application and testing of such models lacks any real comparison. Therefore, we propose a framework in which models developed for FER can be compared and contrasted against one another in a constant, standardized fashion. A lightweight convolutional neural network is trained on the AffectNet dataset, a large, variable dataset for facial emotion recognition, and a web application is developed and deployed with our proposed framework as a proof of concept. The CNN is embedded into our application and is capable of instant, real-time facial emotion recognition. When tested on the AffectNet test set, this model achieves high accuracy for emotion classification of eight different emotions. Using our framework, the validity of this model and others can be properly tested by evaluating a model's efficacy based not only on its accuracy on a sample test dataset, but also on in-the-wild experiments. Additionally, our application is built with the ability to save and store any image captured or uploaded to it for emotion recognition, allowing for the curation of higher-quality and more diverse facial emotion recognition datasets.

A Modern Analysis of Aging Machine Learning Based IoT Cybersecurity Methods

Oct 15, 2021

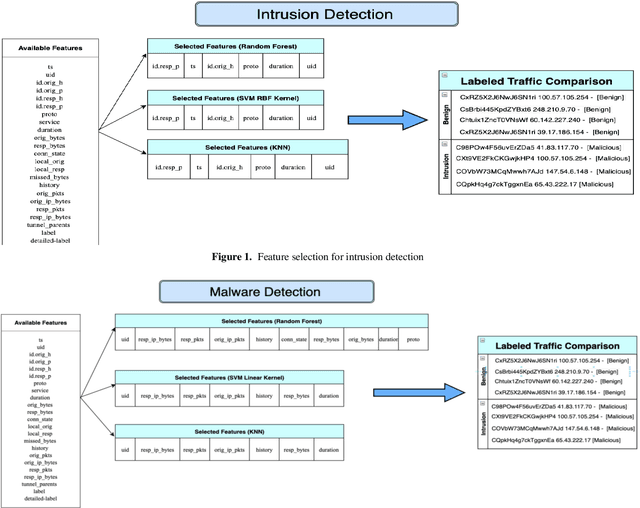

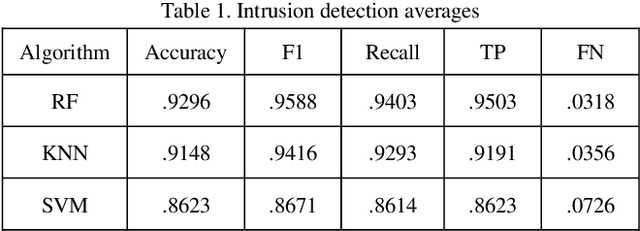

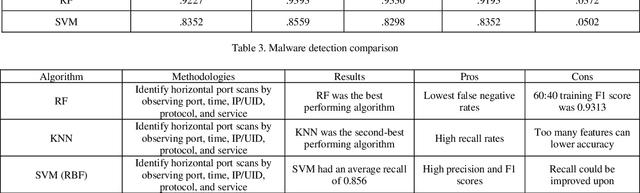

Abstract: Modern scientific advancements often contribute to the introduction and refinement of never-before-seen technologies. These technologies can be quite a task for humans to maintain and monitor and, as a result, our society has become reliant on machine learning to assist in this task. With new technology come new methods and thus new ways to circumvent existing cyber security measures. This study examines the effectiveness of three distinct Internet of Things cyber security algorithms currently used in industry for malware and intrusion detection: Random Forest (RF), Support-Vector Machine (SVM), and K-Nearest Neighbor (KNN). Each algorithm was trained and tested on the Aposemat IoT-23 dataset, which was published in January 2020 with the earliest captures from 2018 and the latest from 2019. The RF, SVM, and KNN reached peak accuracies of 92.96%, 86.23%, and 91.48%, respectively, in intrusion detection and 92.27%, 83.52%, and 89.80% in malware detection. It was found that all three algorithms are capable of being effectively utilized for the current landscape of IoT cyber security in 2021.

Continuous Authentication Using Mouse Movements, Machine Learning, and Minecraft

Oct 15, 2021

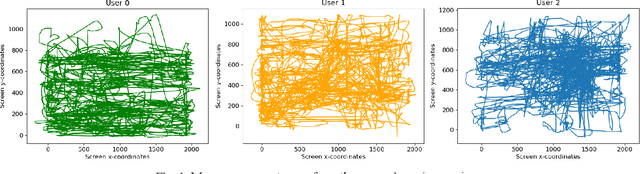

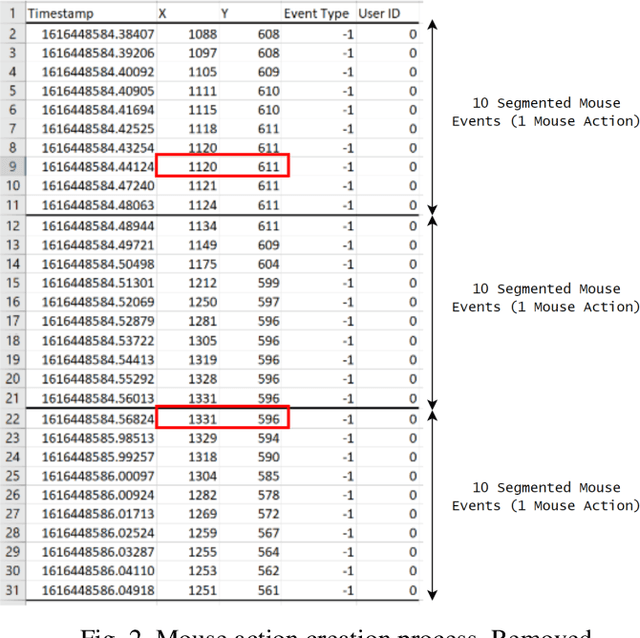

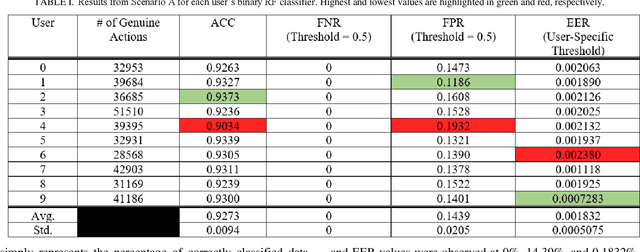

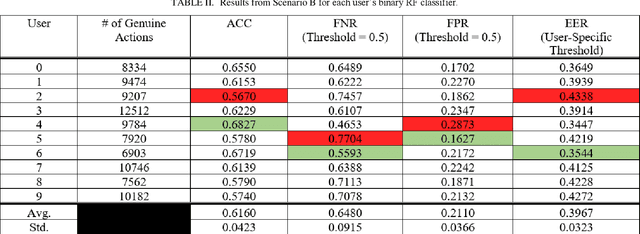

Abstract: Mouse dynamics has grown in popularity as a novel, irreproducible behavioral biometric. Datasets that contain general, unrestricted mouse movements from users are sparse in the current literature. The Balabit mouse dynamics dataset, produced in 2016, was made for a data science competition and, despite some of its shortcomings, is considered to be the first publicly available mouse dynamics dataset. Collecting mouse movements in a dull administrative manner, as Balabit does, may unintentionally homogenize data and is also not representative of real-world application scenarios. This paper presents a novel mouse dynamics dataset that has been collected while 10 users play the video game Minecraft on a desktop computer. Binary Random Forest (RF) classifiers are created for each user to detect differences between a specific user's movements and an imposter's movements. Two evaluation scenarios are proposed to evaluate the performance of these classifiers; one scenario outperformed previous works in all evaluation metrics, reaching average accuracy rates of 92%, while the other scenario successfully reported reduced instances of false authentications of imposters.
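To make the two reported quantities concrete, the sketch below shows how accuracy and the rate of false authentications of imposters could be computed for one user's binary classifier. It is an illustration only: the abstract does not list its exact metrics, so the function name, the label encoding (1 = genuine user, 0 = imposter), and the sample predictions are all assumptions.

```python
def authentication_metrics(y_true, y_pred):
    """Summarize one user's binary authentication classifier.

    Labels are assumed to be 1 for the genuine user and 0 for an imposter.
    Returns accuracy, false acceptance rate (imposters wrongly
    authenticated), and false rejection rate (genuine user wrongly rejected).
    """
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equal length")

    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # imposter accepted
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # genuine user rejected

    return {
        "accuracy": (tp + tn) / len(pairs),
        "false_acceptance_rate": fp / (fp + tn) if (fp + tn) else 0.0,
        "false_rejection_rate": fn / (fn + tp) if (fn + tp) else 0.0,
    }


# Hypothetical predictions for eight mouse-movement samples.
truth = [1, 1, 1, 0, 0, 0, 0, 1]
preds = [1, 1, 0, 0, 1, 0, 0, 1]
print(authentication_metrics(truth, preds))
```

Reporting the false acceptance rate alongside accuracy matters in this setting because a classifier can score well on accuracy while still admitting imposters, which is the failure the second evaluation scenario is described as reducing.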
