Norbert Franke

Efficient Beam Search for Initial Access Using Collaborative Filtering

Sep 14, 2022

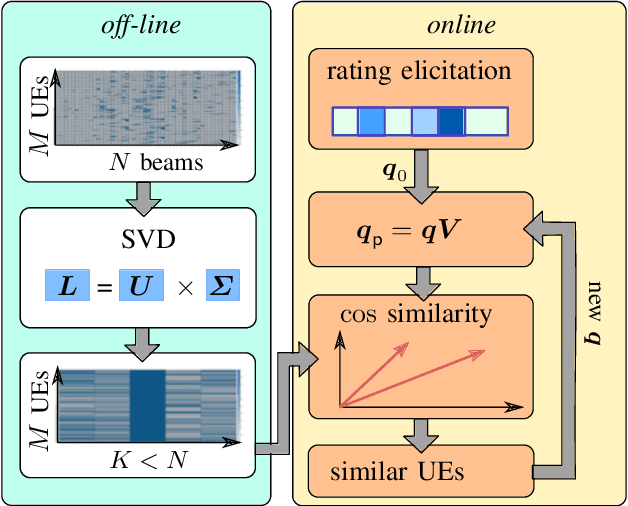

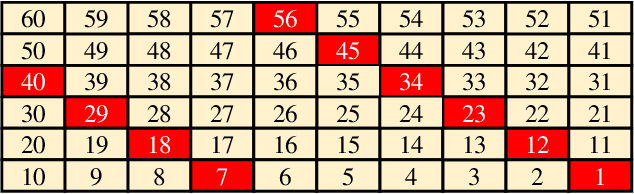

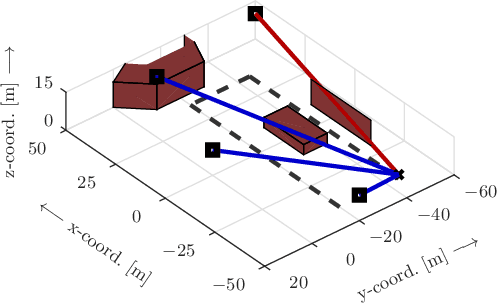

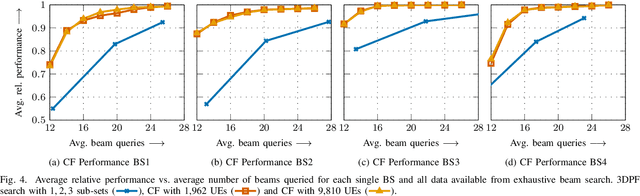

Abstract: Beamforming-capable antenna arrays overcome the high free-space path loss at higher carrier frequencies. However, the beams must be properly aligned to ensure that the highest power is radiated towards (and received by) the user equipment (UE). Some methods improve upon an exhaustive search for optimal beams by using some form of hierarchical search, but they are prone to returning only locally optimal solutions with small beam gains. Other approaches address this problem by exploiting contextual information, e.g., the position of the UE or information from neighboring base stations (BSs), but the burden of computing and communicating this additional information can be high. Methods based on machine learning have so far suffered from the accompanying training, performance-monitoring and deployment complexity, which hinders their application at scale. This paper proposes a novel method for solving the initial beam-discovery problem that is scalable and easy to tune and implement. Our algorithm is based on a recommender system that associates groups (i.e., UEs) and preferences (i.e., beams from a codebook) based on a training data set. Whenever a new UE needs to be served, our algorithm returns the best beams in its user cluster. Our simulation results demonstrate the efficiency and robustness of our approach, not only in single-BS setups but also in setups that require coordination among several BSs. Our method consistently outperforms standard baseline algorithms in this task.
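
To make the recommender-system idea concrete, here is a minimal sketch of generic user-based collaborative filtering applied to beam selection. It is not the paper's algorithm: it assumes that the training set is a matrix of per-UE linear beam gains, that a new UE has measured only a few "probe" beams, and that its "user cluster" is simply its k most similar training UEs (cosine similarity on the probed beams). The function name `recommend_beams`, its parameters and the toy gain values are all hypothetical.

```python
from math import sqrt
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two gain vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = sqrt(sum(x * x for x in a))
    nb = sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def recommend_beams(train_gains: List[List[float]], probe_beams: List[int],
                    probe_gains: List[float], k: int = 3,
                    n_beams: int = 2) -> List[int]:
    """Hypothetical user-based CF: rank codebook beams for a new UE.

    train_gains[u][b] is the (assumed) linear gain of beam b for training UE u.
    probe_beams / probe_gains are the few beams the new UE actually measured.
    """
    # Similarity between the new UE and each training UE, on probed beams only.
    scored = [(cosine([row[b] for b in probe_beams], probe_gains), u)
              for u, row in enumerate(train_gains)]
    # The k most similar training UEs form the new UE's "cluster".
    top = sorted(scored, reverse=True)[:k]
    total = sum(sim for sim, _ in top)
    n_codebook = len(train_gains[0])
    scores = []
    for b in range(n_codebook):
        if total > 0:
            # Similarity-weighted average gain of beam b within the cluster.
            s = sum(sim * train_gains[u][b] for sim, u in top) / total
        else:
            # No informative probe: fall back to the plain cluster average.
            s = sum(train_gains[u][b] for _, u in top) / len(top)
        scores.append(s)
    # Beams with the highest predicted gain are swept first.
    ranked = sorted(range(n_codebook), key=lambda b: scores[b], reverse=True)
    return ranked[:n_beams]


# Toy training set: 4 UEs x 4 beams (UEs 0-1 favour beams 0/1, UEs 2-3 favour beams 2/3).
train = [
    [0.9, 0.4, 0.0, 0.0],
    [0.8, 0.5, 0.1, 0.0],
    [0.0, 0.1, 0.6, 0.9],
    [0.0, 0.2, 0.5, 0.8],
]
print(recommend_beams(train, probe_beams=[0, 3], probe_gains=[0.1, 0.9], k=2))  # → [3, 2]
```

The design choice here is that the new UE only pays for a handful of probe measurements; the rest of the ranking is borrowed from similar UEs in the training data, so the beam sweep can start with the most promising codebook entries instead of scanning all of them.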

Towards Realistic Statistical Channel Models For Positioning: Evaluating the Impact of Early Clusters

Jul 16, 2022

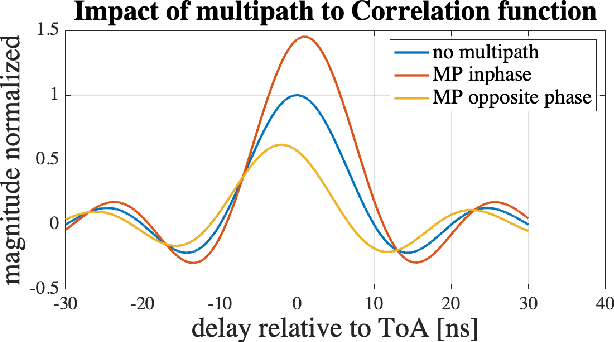

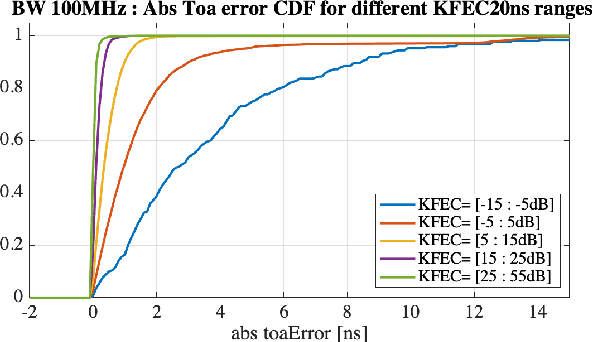

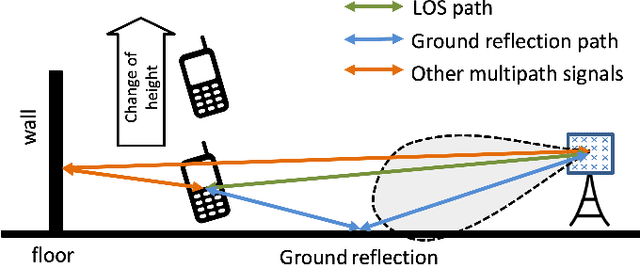

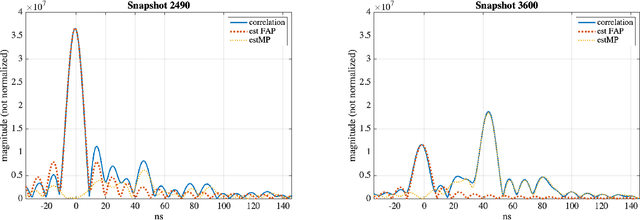

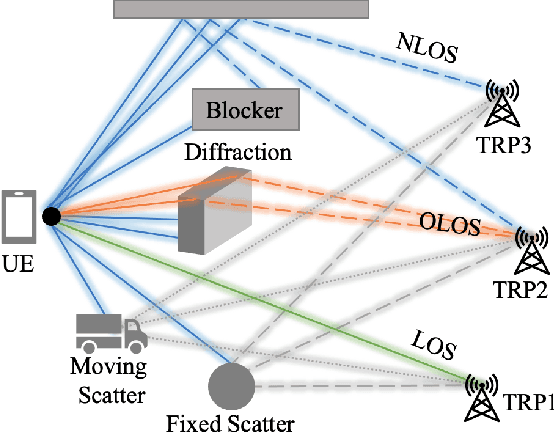

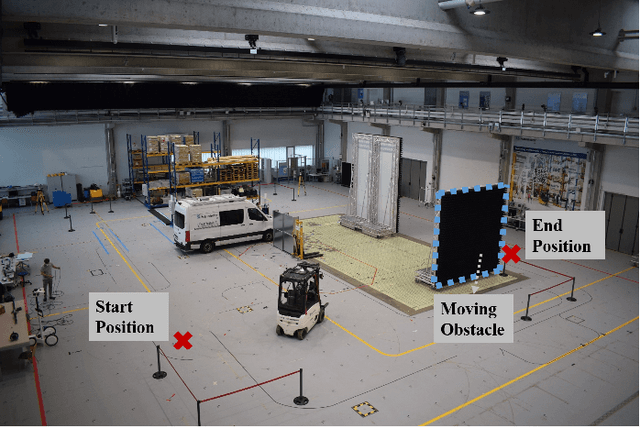

Abstract: Physical effects such as reflection, refraction, and diffraction cause a radio signal to travel from a transmitter to a receiver as multiple replicas with different amplitudes and phase rotations. Bandwidth-limited signals, such as positioning reference signals, have limited time resolution. In practice, the signal is often reflected in the close vicinity of the transmitter and receiver, which displaces the observed peak from the true peak expected from the line-of-sight (LOS) geometry between transmitter and receiver. In this paper, we show that the existing channel model specified for performance evaluation within 3GPP fails to model this phenomenon. As a result, the simulation results deviate significantly from the measured values. Based on our measurement and simulation results, we propose a model that incorporates signal reflections by obstacles in the vicinity of the transmitter or receiver, so that the model output corresponds to measurements made in such scenarios.
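
The peak-displacement effect can be reproduced with a toy two-path example. This is a sketch under simplifying assumptions, not the paper's model: each path's band-limited response is an ideal sinc pulse of width about 1/B (rectangular spectrum), both paths are real and in phase, there is no noise, and the 100 MHz bandwidth, 30 m link and 0.9 m excess reflection path are hypothetical values. Because the reflection arrives well within one resolution cell (0.9 m versus c/B ≈ 3 m), the two pulses merge and the detected peak lands between the LOS delay and the reflection delay.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def sinc(x: float) -> float:
    """Normalized sinc, sin(pi x) / (pi x), with sinc(0) = 1."""
    return 1.0 if x == 0 else math.sin(math.pi * x) / (math.pi * x)


def correlation_peak(paths, bandwidth_hz, t_start, t_stop, step):
    """Grid-search the delay (s) at which the band-limited response peaks.

    paths: list of (amplitude, delay_s); amplitudes are real, i.e. all paths are
    assumed in phase. Each path contributes an ideal sinc pulse of width ~1/B.
    """
    best_t, best_v = t_start, -math.inf
    n = int(round((t_stop - t_start) / step))
    for i in range(n + 1):
        t = t_start + i * step
        v = abs(sum(a * sinc(bandwidth_hz * (t - tau)) for a, tau in paths))
        if v > best_v:
            best_t, best_v = t, v
    return best_t


tau_los = 30.0 / C             # hypothetical 30 m LOS link
tau_refl = tau_los + 0.9 / C   # hypothetical reflection with 0.9 m excess path
paths = [(1.0, tau_los), (0.7, tau_refl)]
peak = correlation_peak(paths, 100e6, tau_los - 20e-9, tau_los + 20e-9, 0.01e-9)
print(f"ranging bias: {(peak - tau_los) * C:.2f} m")
```

With a single path the peak would sit exactly at the LOS delay; adding the close-in reflection biases the range estimate towards longer distances, which a model without such near-node reflections cannot reproduce.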

Complementary Semi-Deterministic Clusters for Realistic Statistical Channel Models for Positioning

Jul 16, 2022

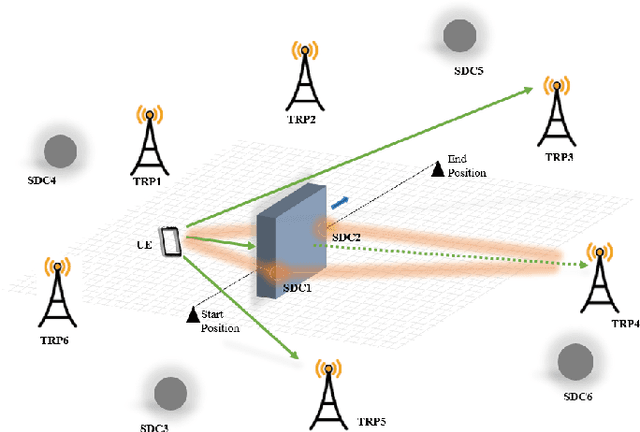

Abstract: Positioning benefits from channel models that capture geometric effects, in particular the signal properties of the first arriving path and the spatial consistency of the propagation conditions across multiple links. Models that capture the physical effects observed in realistic deployment scenarios are essential for assessing the potential benefits of enhancements to positioning methods. Channel models based on ray-tracing simulations and statistical channel models, the current state-of-the-art methods for evaluating positioning performance in 3GPP systems, do not fully capture important aspects relevant to positioning. Hence, we propose an extension of existing statistical channel models with semi-deterministic clusters (SDCs). SDCs allow channels to be simulated using three types of clusters: fixed, specular, and random clusters. Our results show that the proposed model aligns with measurements obtained in a real deployment scenario. Thus, our channel models can be used to develop advanced machine-learning-based positioning solutions that enable centimeter-level accuracy in non-line-of-sight (NLOS) and multipath scenarios.
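
The abstract does not define the three cluster types, so the following is only one plausible reading, sketched to illustrate why deterministic components help spatial consistency. It assumes that a "fixed" cluster is a scatterer at a fixed position shared by all links, a "specular" cluster is a mirror reflection off a plane (computed with the image method), and a "random" cluster has excess delays drawn i.i.d. from an exponential distribution, as in purely statistical models. All function names, positions and the 20 ns mean excess delay are hypothetical.

```python
import math
import random
from typing import List, Tuple

C = 299_792_458.0            # speed of light in m/s
Point = Tuple[float, float]  # 2-D position in metres


def dist(p: Point, q: Point) -> float:
    return math.hypot(p[0] - q[0], p[1] - q[1])


def los_delay(tx: Point, rx: Point) -> float:
    return dist(tx, rx) / C


def fixed_cluster_delay(tx: Point, rx: Point, scatterer: Point) -> float:
    """Assumed 'fixed' cluster: one scatterer at a fixed position for every link."""
    return (dist(tx, scatterer) + dist(scatterer, rx)) / C


def specular_cluster_delay(tx: Point, rx: Point, wall_y: float) -> float:
    """Assumed 'specular' cluster: reflection off the infinite wall y = wall_y.

    Image method: the reflected path length equals the distance from the mirror
    image of tx to rx (valid when tx and rx are on the same side of the wall).
    """
    image = (tx[0], 2.0 * wall_y - tx[1])
    return dist(image, rx) / C


def random_cluster_delays(tx: Point, rx: Point, n: int, mean_excess_s: float,
                          rng: random.Random) -> List[float]:
    """Assumed 'random' cluster: i.i.d. exponential excess delays over LOS."""
    base = los_delay(tx, rx)
    return [base + rng.expovariate(1.0 / mean_excess_s) for _ in range(n)]


tx = (0.0, 2.0)
rng = random.Random(0)
for rx in [(10.0, 2.0), (10.5, 2.0)]:  # two nearby receivers, delays in ns
    print(rx,
          round(fixed_cluster_delay(tx, rx, (5.0, 6.0)) * 1e9, 2),
          round(specular_cluster_delay(tx, rx, 0.0) * 1e9, 2),
          [round(d * 1e9, 2) for d in random_cluster_delays(tx, rx, 2, 20e-9, rng)])
```

For the two nearby receivers, the fixed and specular delays change only slightly and predictably with position, while the random-cluster delays are redrawn independently; this contrast is the kind of spatial consistency the abstract says positioning needs.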