Nitin Madnani

Is getting the right answer just about choosing the right words? The role of syntactically-informed features in short answer scoring

Mar 05, 2014

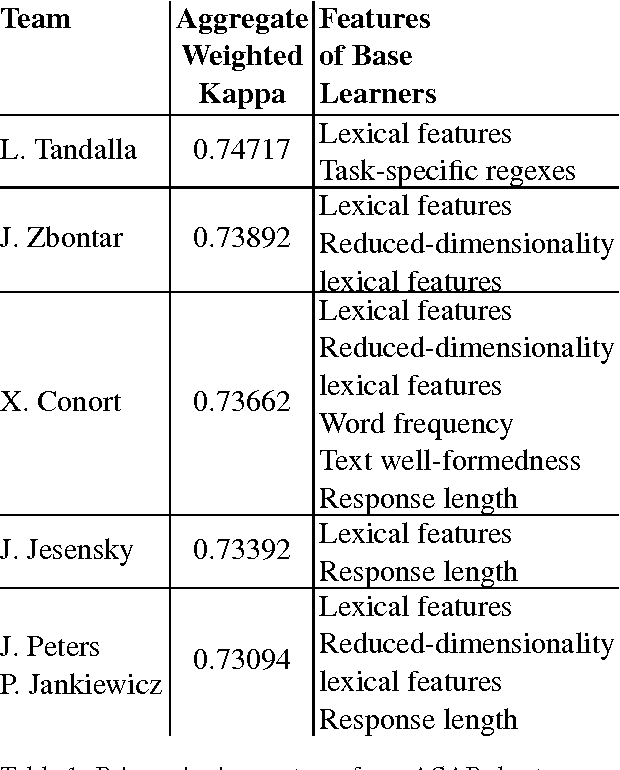

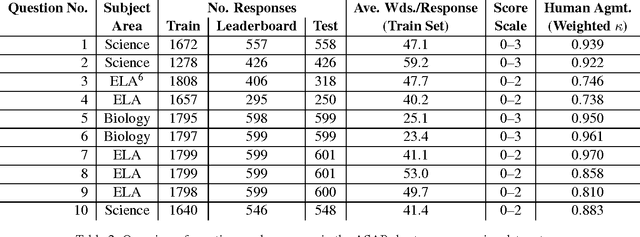

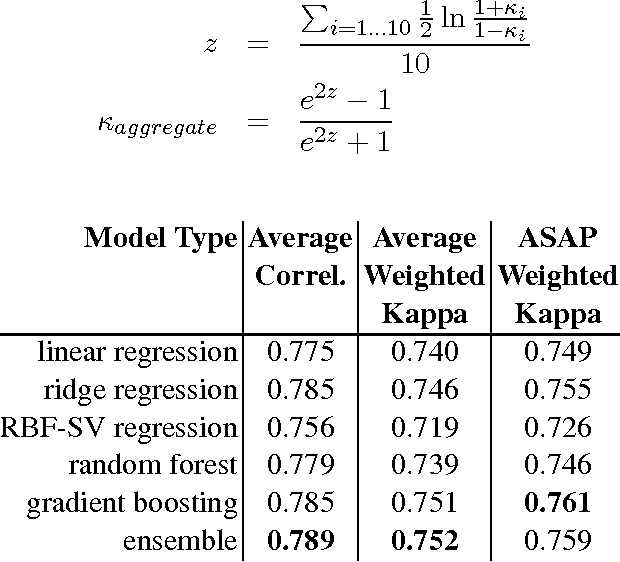

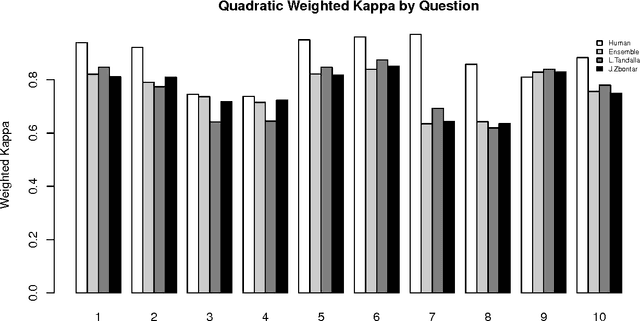

Abstract: Developments in the educational landscape have spurred greater interest in the problem of automatically scoring short answer questions. A recent shared task on this topic revealed a fundamental divide in the modeling approaches that have been applied to this problem, with the best-performing systems split between those that employ a knowledge engineering approach and those that almost solely leverage lexical information (as opposed to higher-level syntactic information) in assigning a score to a given response. This paper aims to introduce the NLP community to the largest corpus currently available for short-answer scoring, provide an overview of methods used in the shared task using this data, and explore the extent to which more syntactically-informed features can contribute to the short answer scoring task in a way that avoids the question-specific manual effort of the knowledge engineering approach.

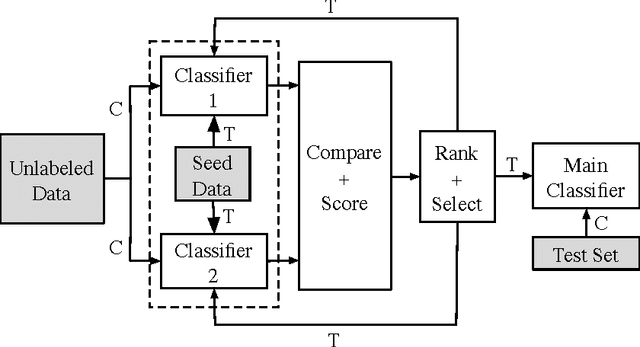

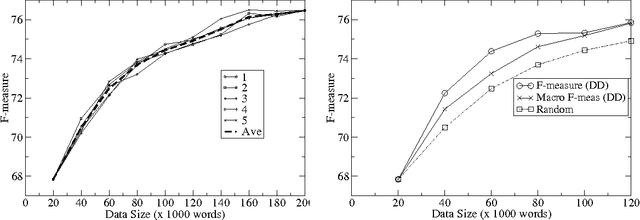

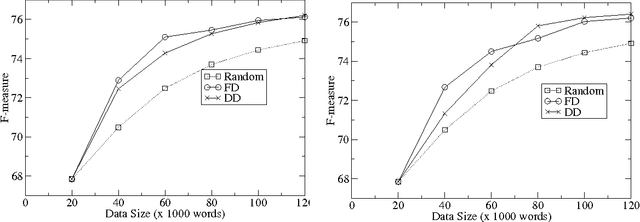

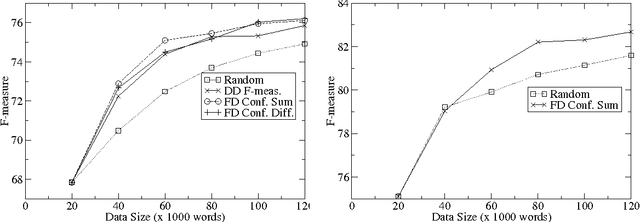

Active Learning for Mention Detection: A Comparison of Sentence Selection Strategies

Nov 10, 2009

Abstract: We propose and compare various sentence selection strategies for active learning for the task of detecting mentions of entities. The best strategy employs the sum of confidences of two statistical classifiers trained on different views of the data. Our experimental results show that, compared to the random selection strategy, this strategy reduces the amount of required labeled training data by over 50% while achieving the same performance. The effect is even more significant when only named mentions are considered: the system achieves the same performance by using only 42% of the training data required by the random selection strategy.
