Nishant Shankar

SuMe: A Dataset Towards Summarizing Biomedical Mechanisms

May 10, 2022

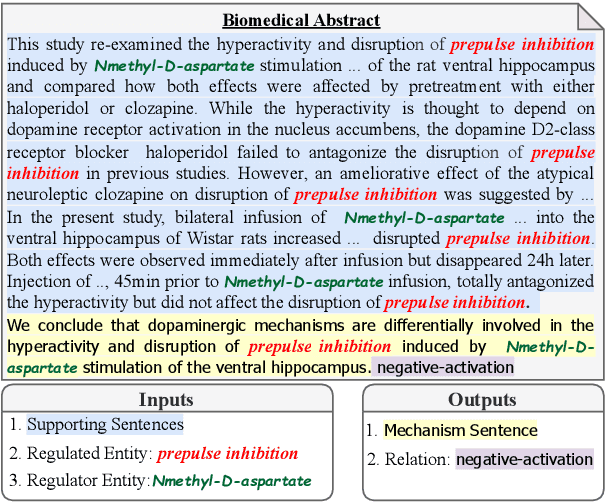

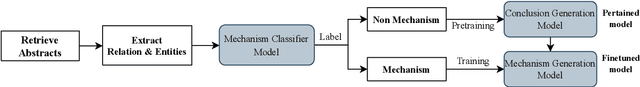

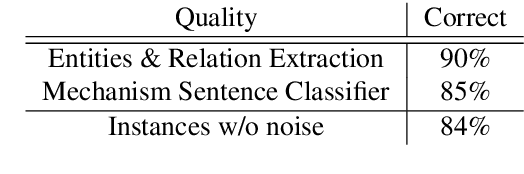

Abstract: Can language models read biomedical texts and explain the biomedical mechanisms discussed? In this work, we introduce a biomedical mechanism summarization task. Biomedical studies often investigate the mechanisms behind how one entity (e.g., a protein or a chemical) affects another in a biological context. The abstracts of these publications often include a focused set of sentences that present relevant supporting statements regarding such relationships, associated experimental evidence, and a concluding sentence that summarizes the mechanism underlying the relationship. We leverage this structure and create a summarization task, where the input is a collection of sentences and the main entities in an abstract, and the output includes the relationship and a sentence that summarizes the mechanism. Using a small amount of manually labeled mechanism sentences, we train a mechanism sentence classifier to filter a large biomedical abstract collection and create a summarization dataset with 22k instances. We also introduce conclusion sentence generation as a pretraining task with 611k instances. We benchmark the performance of large bio-domain language models. We find that while the pretraining task helps improve performance, the best model produces acceptable mechanism outputs in only 32% of the instances, which shows that the task presents significant challenges in biomedical language understanding and summarization.

Face Verification and Forgery Detection for Ophthalmic Surgery Images

Nov 15, 2018

Abstract: Although modern face verification systems are accessible and accurate, they are not always robust to pose variance and occlusions. Moreover, accurate models require a large amount of data to train. We structure our experiments to operate on small amounts of data obtained from an NGO that funds ophthalmic surgeries. We set up our face verification task as that of verifying pre-operation and post-operation images of a patient who undergoes ophthalmic surgery; as such, the post-operation images have occlusions like an eye patch. In this paper, we present a system that performs the face verification task using one-shot learning. To this end, our paper uses deep convolutional networks and compares different model architectures and loss functions. Our best model achieves 85% test accuracy. At inference time, we also attempt to detect image forgeries in addition to performing face verification. To achieve this, we use Error Level Analysis. Finally, we propose an inference pipeline that demonstrates how these techniques can be used to implement an automated face verification and forgery detection system.
