Ningze Zhong

ETSCL: An Evidence Theory-Based Supervised Contrastive Learning Framework for Multi-modal Glaucoma Grading

Jul 19, 2024

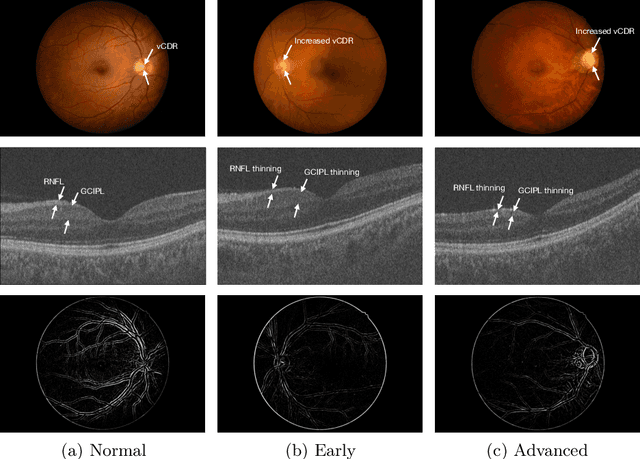

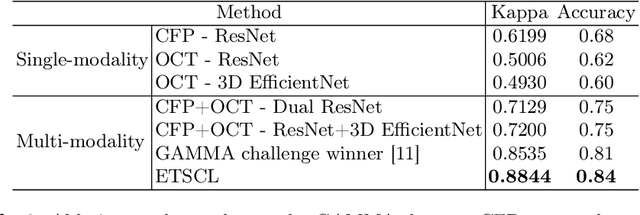

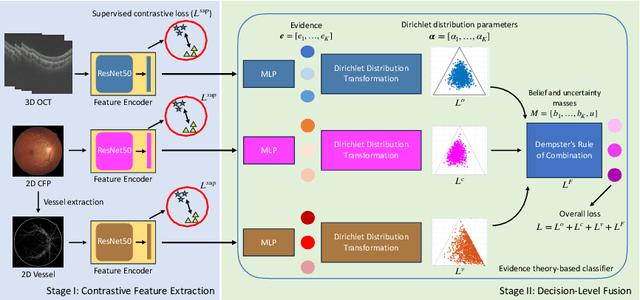

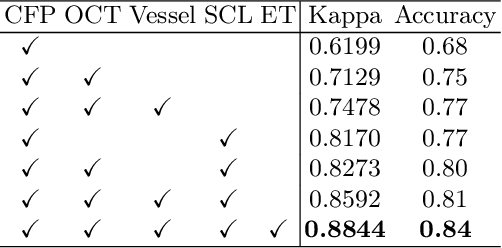

Abstract: Glaucoma is one of the leading causes of vision impairment. Digital imaging techniques, such as color fundus photography (CFP) and optical coherence tomography (OCT), provide quantitative and noninvasive means of diagnosing glaucoma. In computer-aided glaucoma diagnosis, multi-modality methods that integrate CFP and OCT have recently achieved higher diagnostic accuracy than single-modality methods. However, extracting reliable features remains challenging because medical images are highly similar to one another and the multi-modal data distribution is unbalanced. Moreover, existing methods do not estimate the uncertainty of each modality, which leads to unreliable predictions. To address these challenges, we propose a novel framework, ETSCL, which consists of a contrastive feature extraction stage and a decision-level fusion stage. In the feature extraction stage, a supervised contrastive loss is employed to make the extracted features more discriminative. In addition, we use the Frangi vesselness algorithm as a preprocessing step so that vessel information can assist the prediction. In the decision-level fusion stage, an evidence theory-based multi-modality classifier combines multi-source information with uncertainty estimation. Extensive experiments demonstrate that our method achieves state-of-the-art performance. The code is available at https://github.com/master-Shix/ETSCL.
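To make the decision-level fusion idea concrete, here is a minimal sketch of how two modality-level opinions could be combined with uncertainty under evidence theory. The abstract does not specify the combination rule, so this assumes the reduced Dempster rule commonly used with subjective-logic opinions; the function names, the three-grade setup, and the evidence values are hypothetical and are not taken from the ETSCL code.

```python
import numpy as np

def opinion_from_evidence(evidence):
    """Turn non-negative per-class evidence into belief masses and an uncertainty mass.

    Assumption: a Dirichlet parameterisation with alpha = evidence + 1, so that
    belief.sum() + uncertainty == 1.
    """
    evidence = np.asarray(evidence, dtype=float)
    num_classes = evidence.shape[-1]
    strength = evidence.sum() + num_classes  # Dirichlet strength S
    belief = evidence / strength
    uncertainty = num_classes / strength
    return belief, uncertainty

def combine_opinions(b1, u1, b2, u2):
    """Combine two opinions with the reduced Dempster rule (an assumed choice)."""
    # Conflict: mass the two sources assign to different classes.
    conflict = np.sum(np.outer(b1, b2)) - np.sum(b1 * b2)
    scale = 1.0 - conflict
    belief = (b1 * b2 + b1 * u2 + b2 * u1) / scale
    uncertainty = (u1 * u2) / scale
    return belief, uncertainty

# Hypothetical evidence for three grades from each modality.
cfp_evidence = [8.0, 1.0, 1.0]  # confident CFP branch
oct_evidence = [0.5, 0.4, 0.3]  # uncertain OCT branch

b_cfp, u_cfp = opinion_from_evidence(cfp_evidence)
b_oct, u_oct = opinion_from_evidence(oct_evidence)
b_fused, u_fused = combine_opinions(b_cfp, u_cfp, b_oct, u_oct)

print("fused belief:", b_fused)
print("fused uncertainty:", u_fused)
```

In this sketch the low-evidence modality carries a large uncertainty mass, so the fused belief is dominated by the more confident modality, and the fused uncertainty is lower than that of either input.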
