Nina R Benway

CRoP: Context-wise Robust Static Human-Sensing Personalization

Sep 26, 2024

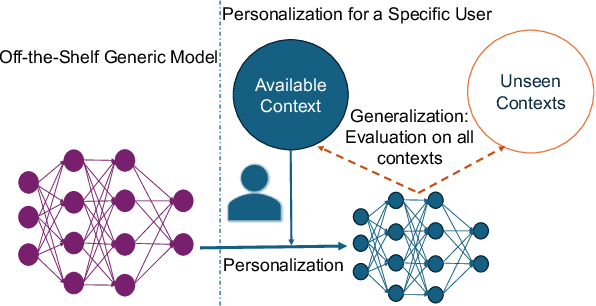

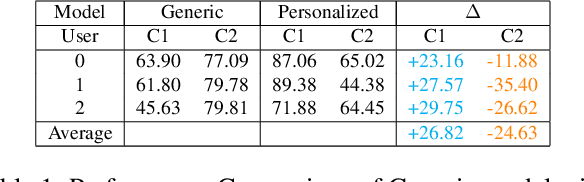

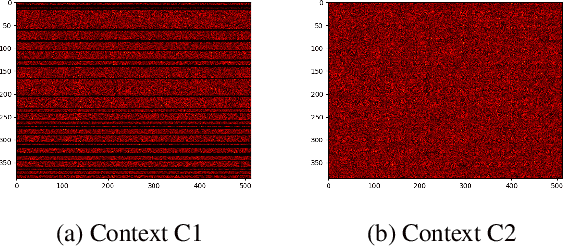

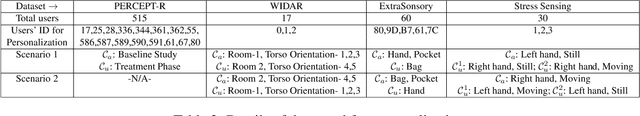

Abstract: Advances in deep learning and the Internet of Things have led to diverse human sensing applications. However, distinct patterns in human sensing, influenced by various factors or contexts, challenge the performance of generic neural network models due to natural distribution shifts. To address this, personalization tailors models to individual users. Yet most personalization studies overlook intra-user heterogeneity across contexts in sensory data, limiting intra-user generalizability. This limitation is especially critical in clinical applications, where limited data availability hampers both generalizability and personalization. Notably, intra-user sensing attributes are expected to change due to external factors such as treatment progression, further complicating the challenges. This work introduces CRoP, a novel static personalization approach using an off-the-shelf pre-trained model and pruning to optimize personalization and generalization. CRoP shows superior personalization effectiveness and intra-user robustness across four human-sensing datasets, including two from real-world health domains, highlighting its practical and social impact. Additionally, to support CRoP's generalization ability and design choices, we provide empirical justification through gradient inner product analysis, ablation studies, and comparisons against state-of-the-art baselines.

Prospective Validation of Motor-Based Intervention with Automated Mispronunciation Detection of Rhotics in Residual Speech Sound Disorders

May 30, 2023

Abstract: Because lab accuracy of clinical speech technology systems may be overoptimistic, clinical validation is vital to demonstrate system reproducibility: in this case, the ability of the PERCEPT-R Classifier to predict clinician judgment of American English /r/ during ChainingAI motor-based speech sound disorder intervention. All five participants experienced statistically significant improvement in untreated words following 10 sessions of combined human-ChainingAI treatment. These gains, despite a wide range of PERCEPT-human and human-human (F1-score) agreement, raise questions about how best to measure classification performance for clinical speech that may be perceptually ambiguous.

Classifying Rhoticity of /r/ in Speech Sound Disorder using Age-and-Sex Normalized Formants

May 25, 2023

Abstract: Mispronunciation detection tools could increase treatment access for speech sound disorders impacting, e.g., /r/. We show that age-and-sex normalized formant estimation outperforms cepstral representation for detection of fully rhotic vs. derhotic /r/ in the PERCEPT-R Corpus. Gated recurrent neural networks trained on this feature set achieve a mean test participant-specific F1-score = .81 (σx = .10, med = .83, n = 48), with post hoc modeling showing no significant effect of child age or sex.

Acoustic-to-Articulatory Speech Inversion Features for Mispronunciation Detection of /r/ in Child Speech Sound Disorders

May 25, 2023

Abstract: Acoustic-to-articulatory speech inversion could enhance automated clinical mispronunciation detection by providing detailed articulatory feedback unattainable by formant-based mispronunciation detection algorithms; however, it is unclear how well a speech inversion system trained on adult speech performs in the context of (1) child and (2) clinical speech. In the absence of an articulatory dataset in children with rhotic speech sound disorders, we show that classifiers trained on tract variables from acoustic-to-articulatory speech inversion meet or exceed the performance of state-of-the-art features when predicting clinician judgment of rhoticity. Index Terms: rhotic, speech sound disorder, mispronunciation detection
