Nima Keivan

Unsupervised Metric Relocalization Using Transform Consistency Loss

Nov 01, 2020

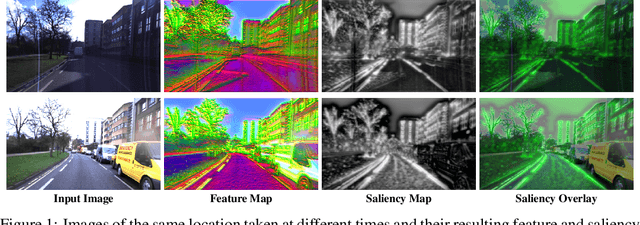

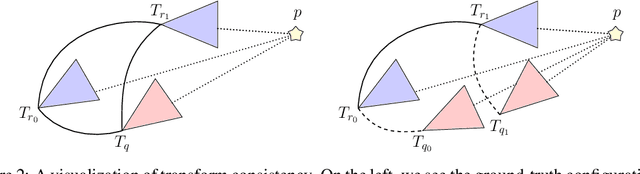

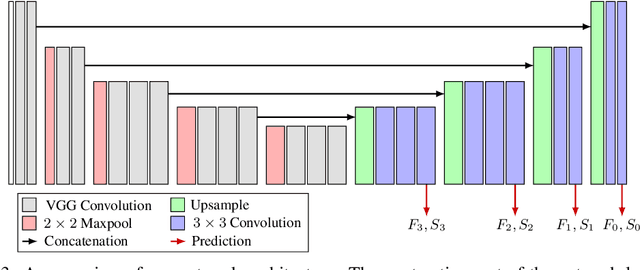

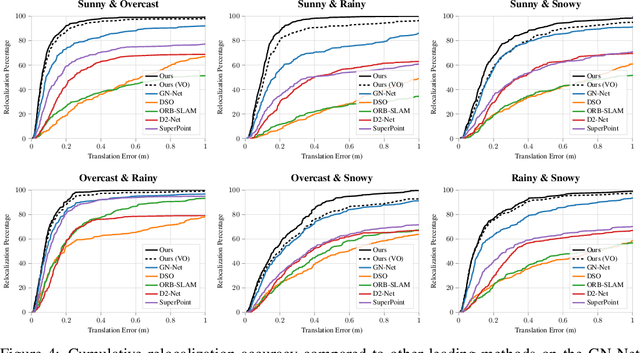

Abstract: Training networks to perform metric relocalization traditionally requires accurate image correspondences. In practice, these are obtained by restricting domain coverage, employing additional sensors, or capturing large multi-view datasets. We instead propose a self-supervised solution, which exploits a key insight: localizing a query image within a map should yield the same absolute pose, regardless of the reference image used for registration. Guided by this intuition, we derive a novel transform consistency loss. Using this loss function, we train a deep neural network to infer dense feature and saliency maps to perform robust metric relocalization in dynamic environments. We evaluate our framework on synthetic and real-world data, showing that our approach outperforms other supervised methods when only a limited amount of ground-truth information is available.
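The key insight above (registering a query against different reference images must give the same absolute pose) can be illustrated with a small consistency check. The sketch below is hypothetical and is not the paper's loss: it assumes planar poses `(x, y, theta)` instead of full 3D transforms, and the names `compose`, `inverse`, `transform_consistency_loss` and the weight `w_rot` are illustrative. It chains each reference's known world pose with the predicted query-relative pose and penalizes the residual between the two absolute estimates.

```python
import math
from typing import Tuple

# Hypothetical planar pose (x, y, theta), standing in for a full 3D rigid transform.
Pose2 = Tuple[float, float, float]


def compose(a: Pose2, b: Pose2) -> Pose2:
    """Return a * b: express pose b (given in a's frame) in a's parent frame."""
    ax, ay, at = a
    bx, by, bt = b
    c, s = math.cos(at), math.sin(at)
    return (ax + c * bx - s * by, ay + s * bx + c * by, at + bt)


def inverse(a: Pose2) -> Pose2:
    """Return a^-1, so that compose(inverse(a), a) is the identity."""
    ax, ay, at = a
    c, s = math.cos(at), math.sin(at)
    return (-(c * ax + s * ay), -(-s * ax + c * ay), -at)


def wrap(angle: float) -> float:
    """Map an angle to [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


def transform_consistency_loss(
    ref_i: Pose2, rel_iq: Pose2, ref_j: Pose2, rel_jq: Pose2, w_rot: float = 1.0
) -> float:
    """Penalize disagreement between two absolute estimates of the query pose.

    ref_i, ref_j:   known world poses of two reference images.
    rel_iq, rel_jq: predicted poses of the query relative to each reference.
    """
    via_i = compose(ref_i, rel_iq)  # query world pose when registered against i
    via_j = compose(ref_j, rel_jq)  # query world pose when registered against j
    ex, ey, et = compose(inverse(via_i), via_j)  # identity if the two agree
    return ex * ex + ey * ey + w_rot * wrap(et) ** 2
```

If both relative poses are exact, the loss is zero up to floating-point error; shifting one prediction by 0.5 units yields a loss of about 0.25. No ground-truth query pose appears in the loss, which is what makes this form of supervision self-supervised.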

Light Source Estimation with Analytical Path-tracing

Jan 15, 2017

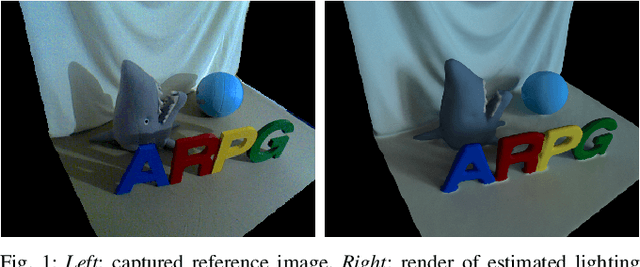

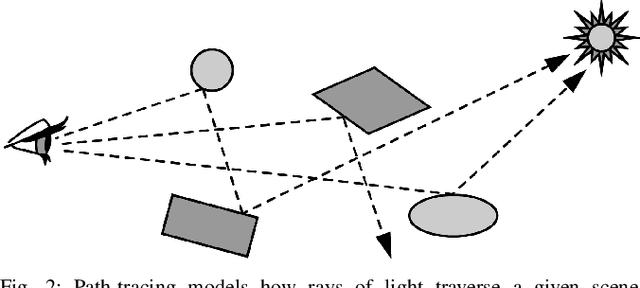

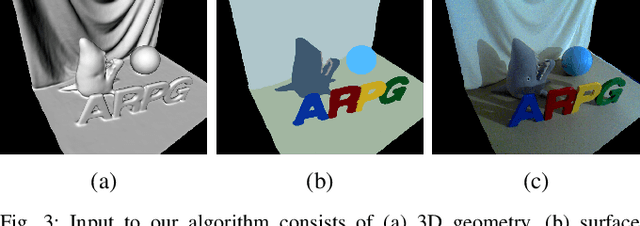

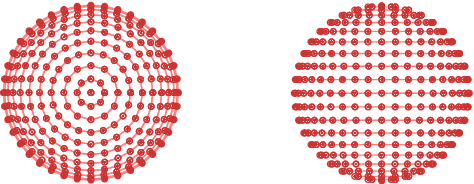

Abstract: We present a novel algorithm for light source estimation in scenes reconstructed with an RGB-D camera, based on an analytically derived formulation of path-tracing. Our algorithm traces the reconstructed scene with a custom path-tracer and computes the analytical derivatives of the light transport equation from optical principles. These derivatives are then used to perform gradient descent, minimizing the photometric error between one or more captured reference images and renders of our current lighting estimate, using an environment map parameterization for light sources. We show that our approach of modeling all light sources as points at infinity approximates lights located near the scene with surprising accuracy. Because the derivatives are formulated analytically, the optimization converges to the solution considerably faster. We verify our algorithm using both real and synthetic data.
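The optimization loop described above can be sketched in a much simplified setting. This is a hypothetical illustration, not the paper's path-tracer: it assumes direct lighting only, so each rendered pixel is a linear combination of environment-map light cells through a precomputed `transport` matrix. Under that assumption the analytical gradient of the squared photometric error is exact and cheap, and projected gradient descent recovers the light intensities. The function names, learning rate, and iteration count are illustrative choices.

```python
from typing import List

Matrix = List[List[float]]


def render(transport: Matrix, lights: List[float]) -> List[float]:
    """Assumed linear model: pixel p = sum_k transport[p][k] * lights[k]."""
    return [sum(t * l for t, l in zip(row, lights)) for row in transport]


def photometric_error(transport: Matrix, lights: List[float], reference: List[float]) -> float:
    """Sum of squared differences between the render and the reference image."""
    return sum((r - ref) ** 2 for r, ref in zip(render(transport, lights), reference))


def gradient(transport: Matrix, lights: List[float], reference: List[float]) -> List[float]:
    """Analytical dE/dL_k = 2 * sum_p (render_p - ref_p) * transport[p][k]."""
    residual = [r - ref for r, ref in zip(render(transport, lights), reference)]
    return [
        2.0 * sum(res * row[k] for res, row in zip(residual, transport))
        for k in range(len(lights))
    ]


def estimate_lights(
    transport: Matrix, reference: List[float], lr: float = 0.1, iters: int = 500
) -> List[float]:
    """Projected gradient descent on the light intensities, starting from darkness."""
    lights = [0.0] * len(transport[0])
    for _ in range(iters):
        g = gradient(transport, lights, reference)
        # Gradient step, then clamp: light intensities cannot be negative.
        lights = [max(0.0, l - lr * gk) for l, gk in zip(lights, g)]
    return lights
```

For example, with `transport = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]` and a reference rendered from lights `[0.8, 0.3]`, `estimate_lights` converges to approximately `[0.8, 0.3]`. With indirect bounces the render is no longer a fixed linear map, which is where derivatives through the path-tracer become necessary.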

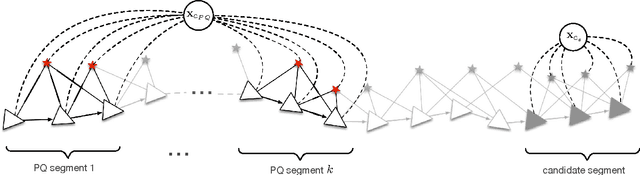

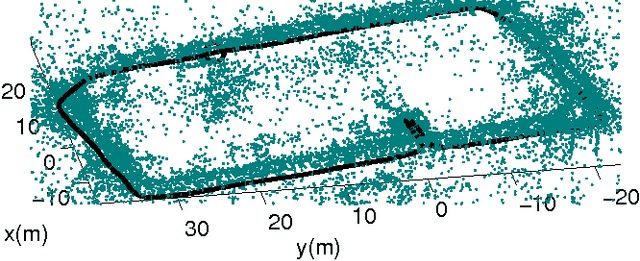

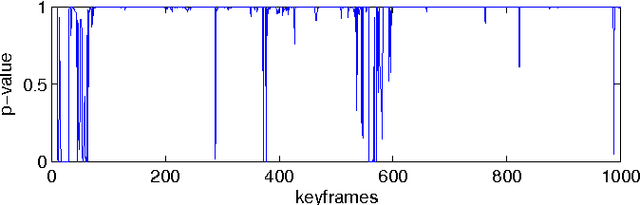

Online SLAM with Any-time Self-calibration and Automatic Change Detection

Nov 05, 2014

Abstract: A framework for online simultaneous localization, mapping and self-calibration is presented which can detect and handle significant changes in the calibration parameters. Estimates are computed in constant time by factoring the problem and focusing on the segments of the trajectory that are most informative for calibration. A novel technique is presented to estimate the probability that a significant change has occurred in the calibration parameters, after which the system is able to re-calibrate. Maximum likelihood trajectory and map estimates are computed using an asynchronous and adaptive optimization. The system requires no prior information and can initialize without any special motions or routines, including when observability of the calibration parameters is delayed. The system is experimentally validated by calibrating camera intrinsic parameters for a nonlinear camera model on a monocular dataset featuring a significant zoom event partway through, and it achieves high accuracy despite unknown initial calibration parameters. Self-calibration and re-calibration parameters are shown to closely match estimates computed using a calibration target. The accuracy of the system is demonstrated with SLAM results that achieve sub-1% distance-traveled error, even in the presence of significant re-calibration events.
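The abstract does not describe how the change probability is computed, so the following is only a generic stand-in: a Bayesian model comparison between "no change" and "change" for a single scalar parameter such as a focal length. It assumes Gaussian estimates, a hypothetical extra variance `change_var` describing how large a jump might be, and a hypothetical prior probability of change `prior_change`. It is not the paper's technique; it merely shows one principled way to turn a new segment's calibration estimate into a posterior probability that the calibration has changed.

```python
import math


def log_normal_pdf(x: float, mean: float, var: float) -> float:
    """Log density of a 1-D Gaussian N(mean, var) evaluated at x."""
    return -0.5 * (math.log(2.0 * math.pi * var) + (x - mean) ** 2 / var)


def change_probability(
    prior_mean: float,
    prior_var: float,
    segment_estimate: float,
    segment_var: float,
    change_var: float,
    prior_change: float = 0.01,
) -> float:
    """Posterior probability that a scalar calibration parameter has changed.

    H0 (no change): segment_estimate ~ N(prior_mean, prior_var + segment_var)
    H1 (change):    segment_estimate ~ N(prior_mean, prior_var + segment_var + change_var)
    """
    var0 = prior_var + segment_var
    var1 = var0 + change_var
    log_odds = (
        log_normal_pdf(segment_estimate, prior_mean, var1)
        - log_normal_pdf(segment_estimate, prior_mean, var0)
        + math.log(prior_change / (1.0 - prior_change))
    )
    # Numerically stable logistic function of the posterior log-odds.
    if log_odds >= 0:
        return 1.0 / (1.0 + math.exp(-log_odds))
    z = math.exp(log_odds)
    return z / (1.0 + z)
```

With a current focal-length estimate of 500 px (variance 1), a segment estimate of 500.5 px (variance 4) and `change_var = 2500`, the change probability stays well below 1%; a segment estimate of 560 px, as after a zoom event, drives it to essentially 1, which would be the cue to re-calibrate.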
