Online SLAM with Any-time Self-calibration and Automatic Change Detection


Nov 05, 2014

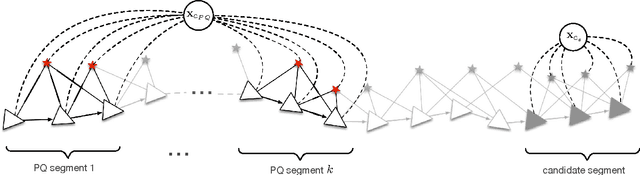

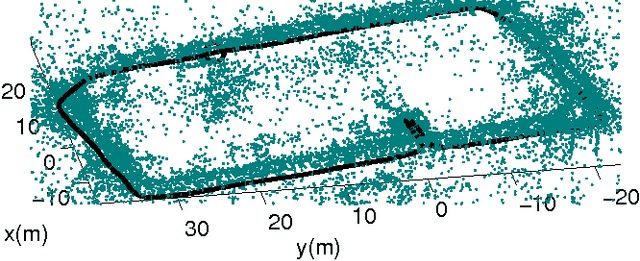

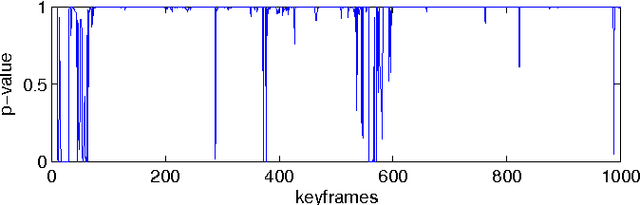

We present a framework for online simultaneous localization, mapping, and self-calibration that can detect and handle significant changes in the calibration parameters. Estimates are computed in constant time by factoring the problem and focusing on the segments of the trajectory that are most informative for calibration. A novel technique estimates the probability that a significant change has occurred in the calibration parameters, after which the system re-calibrates. Maximum likelihood trajectory and map estimates are computed using an asynchronous and adaptive optimization. The system requires no prior information and can initialize without special motions or routines, even when the calibration parameters become observable only after a delay. The system is experimentally validated by calibrating the camera intrinsic parameters of a nonlinear camera model on a monocular dataset that features a significant zoom event partway through, and it achieves high accuracy despite unknown initial calibration parameters. The self-calibration and re-calibration parameters closely match estimates computed using a calibration target. The accuracy of the system is demonstrated with SLAM results that achieve sub-1% distance-traveled error even in the presence of significant re-calibration events.
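The abstract does not describe how the change probability is computed. As a rough illustration of the general idea only, and not the paper's technique, the sketch below compares two Gaussian estimates of the calibration parameters (for example, one from an earlier trajectory segment and one from the most recent segment) using a chi-square test on their difference. The function names, the assumption of independent (diagonal) parameter uncertainties, and the example focal-length values are all hypothetical.

```python
import math


def chi2_cdf(x, dof):
    """Chi-square CDF via the series for the regularized lower incomplete gamma."""
    if x <= 0.0:
        return 0.0
    a, half_x = dof / 2.0, x / 2.0
    if half_x > a + 500.0:
        # Far in the upper tail: the CDF equals 1 to machine precision,
        # and returning early avoids overflow in the series below.
        return 1.0
    # gamma(a, z) = z^a * exp(-z) * sum_{n>=0} z^n / (a * (a+1) * ... * (a+n))
    term = 1.0 / a
    total = term
    n = 1
    while term > 1e-15 * total and n < 10000:
        term *= half_x / (a + n)
        total += term
        n += 1
    log_prefix = a * math.log(half_x) - half_x - math.lgamma(a)
    return min(1.0, total * math.exp(log_prefix))


def change_probability(mean_a, var_a, mean_b, var_b):
    """Confidence that two calibration estimates differ (hypothetical helper).

    Assumes independent Gaussian errors with diagonal covariance. Under the
    hypothesis of no change, the squared normalized difference follows a
    chi-square distribution with one degree of freedom per parameter, so the
    CDF value is one minus the p-value of that hypothesis.
    """
    d2 = sum(
        (a - b) ** 2 / (va + vb)
        for a, va, b, vb in zip(mean_a, var_a, mean_b, var_b)
    )
    return chi2_cdf(d2, len(mean_a))


# Hypothetical focal lengths (fx, fy) in pixels with variances in pixels^2.
before = ([500.0, 500.0], [4.0, 4.0])
same_lens = ([501.0, 499.0], [4.0, 4.0])
after_zoom = ([650.0, 650.0], [4.0, 4.0])

print(change_probability(*before, *same_lens))   # small: consistent estimates
print(change_probability(*before, *after_zoom))  # close to 1: likely a change
```

A value near 1 would suggest that the two segments disagree by more than their uncertainties allow, which is the kind of signal a system could use to trigger re-calibration. A full implementation would need the full parameter covariance rather than the diagonal approximation used here.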
