Fernando Nobre

Unsupervised Metric Relocalization Using Transform Consistency Loss

Nov 01, 2020

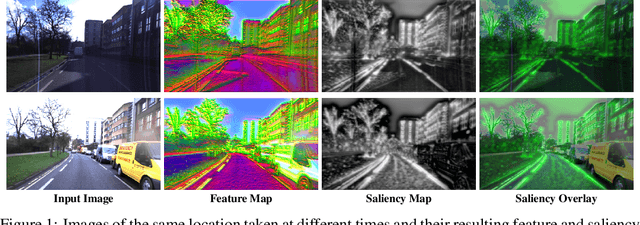

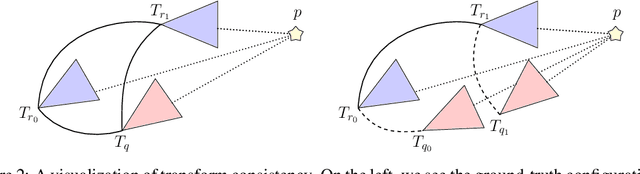

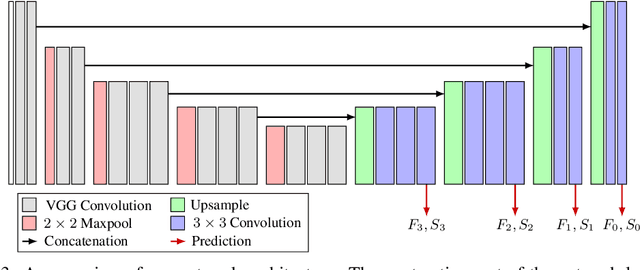

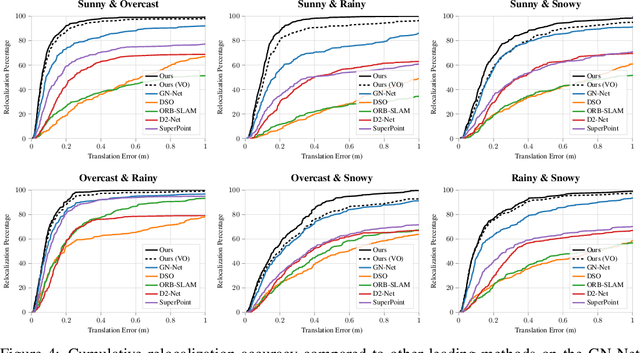

Abstract: Training networks to perform metric relocalization traditionally requires accurate image correspondences. In practice, these are obtained by restricting domain coverage, employing additional sensors, or capturing large multi-view datasets. We instead propose a self-supervised solution, which exploits a key insight: localizing a query image within a map should yield the same absolute pose, regardless of the reference image used for registration. Guided by this intuition, we derive a novel transform consistency loss. Using this loss function, we train a deep neural network to infer dense feature and saliency maps to perform robust metric relocalization in dynamic environments. We evaluate our framework on synthetic and real-world data, showing that our approach outperforms other supervised methods when a limited amount of ground-truth information is available.
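The key insight in the abstract can be illustrated with a small numerical sketch. The code below is not the paper's loss function. It only assumes that poses are 4x4 homogeneous transforms and that the map-frame poses of two reference images are known. The helper names (`se3`, `transform_consistency_error`) and all numeric values are hypothetical. The sketch composes the query pose through each reference and measures how far the two resulting absolute poses disagree.

```python
import numpy as np

def se3(yaw, translation):
    """Build a 4x4 homogeneous transform from a yaw angle (rad) and a 3-vector."""
    c, s = np.cos(yaw), np.sin(yaw)
    T = np.eye(4)
    T[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    T[:3, 3] = translation
    return T

def transform_consistency_error(T_map_ref_a, T_ref_a_query, T_map_ref_b, T_ref_b_query):
    """Translation and rotation gap between the two implied absolute query poses."""
    T_map_query_a = T_map_ref_a @ T_ref_a_query
    T_map_query_b = T_map_ref_b @ T_ref_b_query
    # Relative transform between the two estimates; identity when they agree.
    delta = np.linalg.inv(T_map_query_a) @ T_map_query_b
    trans_err = np.linalg.norm(delta[:3, 3])
    cos_angle = np.clip((np.trace(delta[:3, :3]) - 1.0) / 2.0, -1.0, 1.0)
    rot_err = np.arccos(cos_angle)
    return trans_err, rot_err

# Hypothetical map: two reference poses and one true query pose.
T_map_ref_a = se3(0.1, [0.0, 0.0, 0.0])
T_map_ref_b = se3(-0.2, [2.0, 1.0, 0.0])
T_map_query = se3(0.3, [1.0, 2.0, 0.0])

# Perfect relative registrations against each reference.
T_ref_a_query = np.linalg.inv(T_map_ref_a) @ T_map_query
T_ref_b_query = np.linalg.inv(T_map_ref_b) @ T_map_query
trans_err_ok, rot_err_ok = transform_consistency_error(
    T_map_ref_a, T_ref_a_query, T_map_ref_b, T_ref_b_query)

# Corrupt the registration against reference b by 0.5 m along the query x-axis.
T_ref_b_query_noisy = T_ref_b_query @ se3(0.0, [0.5, 0.0, 0.0])
trans_err_noisy, rot_err_noisy = transform_consistency_error(
    T_map_ref_a, T_ref_a_query, T_map_ref_b, T_ref_b_query_noisy)
```

With consistent registrations both errors are numerically zero. The corrupted registration produces a translation gap equal to the injected offset, which is the kind of signal a consistency-based training objective could penalize without ground-truth correspondences.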

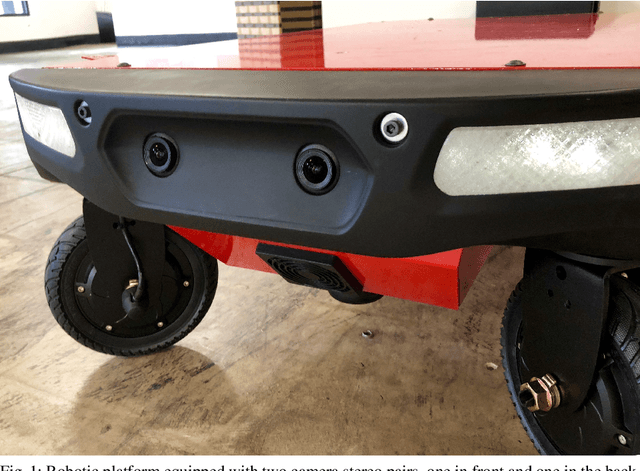

FastCal: Robust Online Self-Calibration for Robotic Systems

Feb 27, 2019

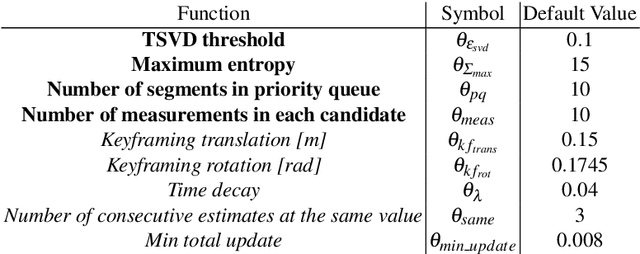

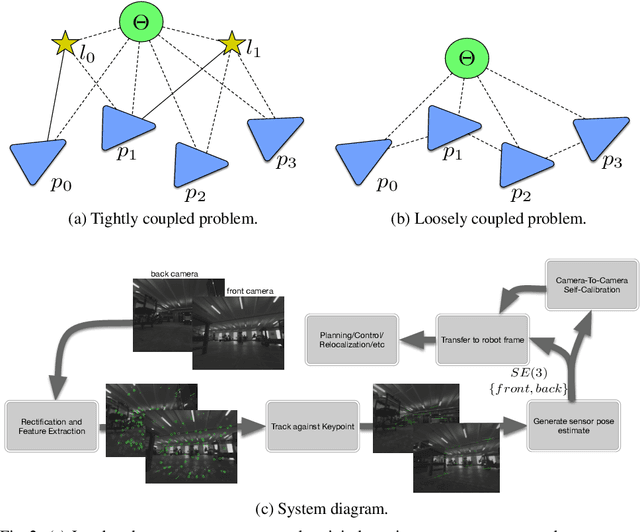

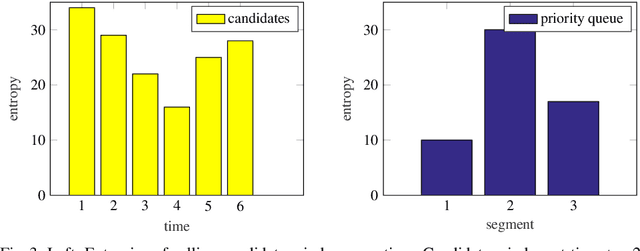

Abstract: We propose a solution for sensor extrinsic self-calibration with very low time complexity, competitive accuracy and graceful handling of often-avoided corner cases: drift in calibration parameters and unobservable directions in the parameter space. It consists of three main parts: 1) information-theoretic segment selection for constant-time estimation; 2) observability-aware parameter update through a rank-revealing decomposition of the Fisher information matrix; 3) drift-correcting self-calibration through the time-decay of segments. At the core of our FastCal algorithm is the loosely-coupled formulation for sensor extrinsics calibration and efficient selection of measurements. FastCal runs up to an order of magnitude faster than similar self-calibration algorithms (camera-to-camera extrinsics, excluding feature-matching and image pre-processing on all comparisons), making FastCal ideal for integration into existing, resource-constrained robotics systems.
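The second part of the abstract, the observability-aware update, can be sketched roughly as follows. This is not the FastCal implementation. It assumes a linearized least-squares problem with Jacobian `J` and residual `r` under unit-variance Gaussian noise, so the Fisher information matrix is `J.T @ J`. An eigendecomposition stands in for whichever rank-revealing decomposition the paper uses. The function name, the tolerance `rel_tol`, and the example values are hypothetical.

```python
import numpy as np

def observability_aware_step(J, r, rel_tol=1e-6):
    """Gauss-Newton step restricted to directions the data actually constrains."""
    fim = J.T @ J  # Fisher information, assuming unit-variance Gaussian noise
    eigvals, eigvecs = np.linalg.eigh(fim)
    # Keep only eigen-directions with non-negligible information.
    observable = eigvals > rel_tol * eigvals.max()
    V = eigvecs[:, observable]
    # Solve in the observable subspace; unobservable directions get no update.
    step = -V @ ((V.T @ (J.T @ r)) / eigvals[observable])
    return step, int(observable.sum())

# Hypothetical 3-parameter problem where the third parameter never
# affects the measurements (its Jacobian column is zero).
J = np.array([[1.0, 0.0, 0.0],
              [0.0, 2.0, 0.0],
              [1.0, 1.0, 0.0]])
x_err = np.array([0.1, -0.2, 5.0])  # current parameter error
r = J @ x_err                       # residual is blind to x_err[2]

step, rank = observability_aware_step(J, r)
```

In this example the step corrects the first two parameters and leaves the third untouched, rather than letting a near-singular solve push it to an arbitrary value.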
