Niklas Engsner

Exact Spectral Norm Regularization for Neural Networks

Jun 27, 2022

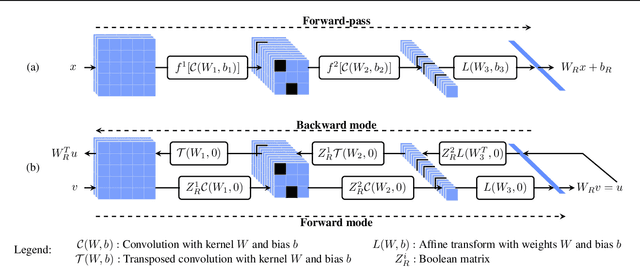

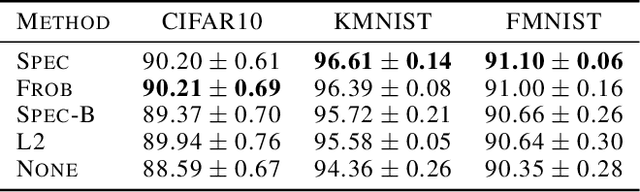

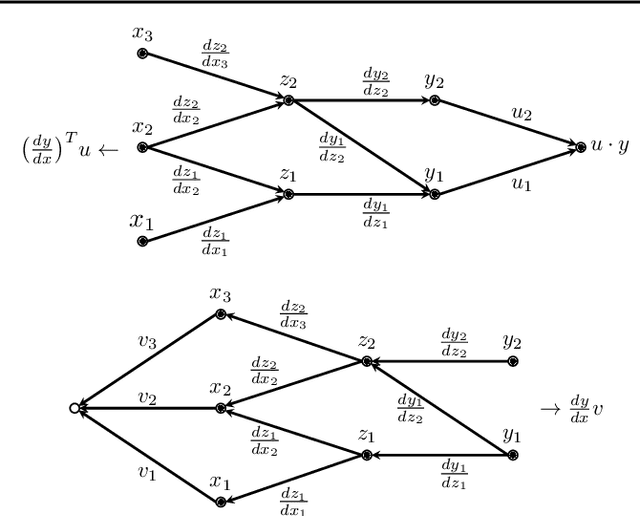

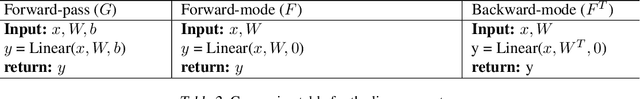

Abstract: We pursue a line of research that seeks to regularize the spectral norm of the Jacobian of the input-output mapping for deep neural networks. While previous work relies on upper-bounding techniques, we provide a scheme that targets the exact spectral norm. We show that our algorithm achieves improved generalization performance compared to previous spectral regularization techniques while simultaneously maintaining a strong safeguard against natural and adversarial noise. Moreover, we further explore some previous reasoning concerning the strong adversarial protection that Jacobian regularization provides and show that it can be misleading.
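
For readers unfamiliar with the quantity being regularized, the following is a minimal sketch of how the spectral norm (largest singular value) of an input-output Jacobian can be estimated with power iteration. It is not the paper's algorithm: the tiny bias-free ReLU network, the weights `w1` and `w2`, and all function names are assumptions chosen for illustration.

```python
import math

def matvec(m, v):
    """Matrix-vector product for a list-of-rows matrix."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def transpose(m):
    return [list(col) for col in zip(*m)]

def matmul(a, b):
    bt = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]

def relu_net_jacobian(w1, w2, x):
    """Jacobian at x of the hypothetical network f(x) = w2 @ relu(w1 @ x)."""
    pre = matvec(w1, x)
    # The ReLU derivative is 1 where the pre-activation is positive, else 0,
    # so inactive hidden units contribute zero rows.
    masked_w1 = [[w if p > 0 else 0.0 for w in row] for row, p in zip(w1, pre)]
    return matmul(w2, masked_w1)

def spectral_norm(j, iters=200):
    """Largest singular value of j via power iteration on j^T j.

    Assumption: the all-ones start vector is not orthogonal to the top
    right-singular vector; a random start is the usual safeguard.
    """
    jt = transpose(j)
    v = [1.0] * len(j[0])
    for _ in range(iters):
        v = matvec(jt, matvec(j, v))
        norm = math.sqrt(sum(c * c for c in v))
        if norm == 0.0:
            return 0.0
        v = [c / norm for c in v]
    u = matvec(j, v)
    return math.sqrt(sum(c * c for c in u))

# Illustrative weights and input (not taken from the paper).
w1 = [[1.0, 2.0], [-1.0, 1.0], [0.5, -3.0]]
w2 = [[2.0, 0.0, 1.0], [0.0, 1.0, 1.0]]
x = [1.0, 1.0]

jac = relu_net_jacobian(w1, w2, x)
sigma = spectral_norm(jac)
```

In a training loop, a quantity like `sigma` would be added to the loss as a penalty term. How the paper computes and differentiates it is not stated in the abstract.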

The Ecosystem Path to General AI

Aug 17, 2021

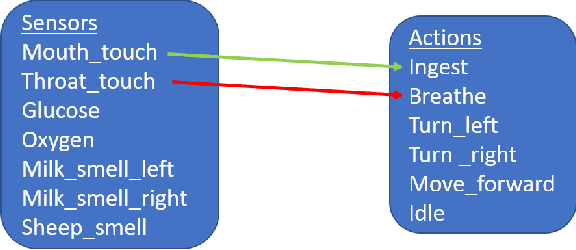

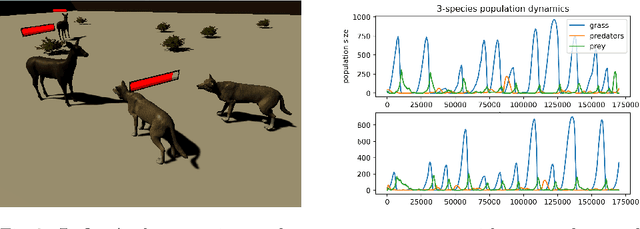

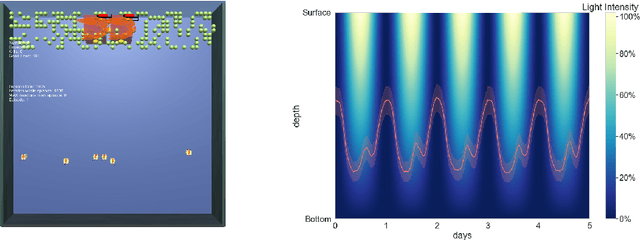

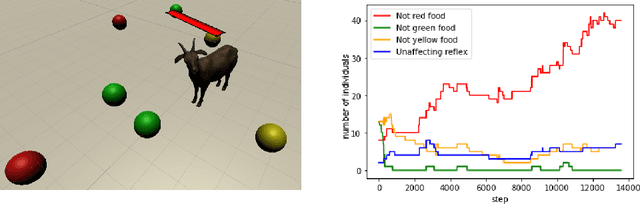

Abstract: We start by discussing the link between ecosystem simulators and general AI. Then we present the open-source ecosystem simulator Ecotwin, which is based on the game engine Unity and operates on ecosystems containing inanimate objects like mountains and lakes, as well as organisms such as animals and plants. Animal cognition is modeled by integrating three separate networks: (i) a *reflex network* for hard-wired reflexes; (ii) a *happiness network* that maps sensory data such as oxygen, water, energy, and smells to a scalar happiness value; and (iii) a *policy network* for selecting actions. The policy network is trained with reinforcement learning (RL), where the reward signal is defined as the happiness difference from one time step to the next. All organisms are capable of either sexual or asexual reproduction, and they die if they run out of critical resources. We report results from three studies with Ecotwin, in which natural phenomena emerge in the models without being hardwired. First, we study a terrestrial ecosystem with wolves, deer, and grass, in which Lotka-Volterra-style population dynamics emerge. Second, we study a marine ecosystem with phytoplankton, copepods, and krill, in which diel vertical migration behavior emerges. Third, we study an ecosystem involving lethal dangers, in which certain agents that combine RL with reflexes outperform pure RL agents.
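
The reward definition in this abstract (happiness difference between consecutive time steps) can be illustrated with a short sketch. The function name `happiness_rewards` and the sample happiness trace are hypothetical and are not taken from Ecotwin.

```python
def happiness_rewards(happiness):
    """Reward at each step = happiness at the next step minus happiness now."""
    return [nxt - cur for cur, nxt in zip(happiness, happiness[1:])]

# Hypothetical scalar outputs of a happiness network over four time steps.
trace = [0.5, 0.75, 0.25, 1.0]
rewards = happiness_rewards(trace)

# The undiscounted return telescopes to final minus initial happiness.
total = sum(rewards)
```

One consequence of this design is that, without discounting, the sum of rewards over an episode depends only on the first and last happiness values, so the agent is rewarded for improving its state and gains nothing from merely staying in a good one.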

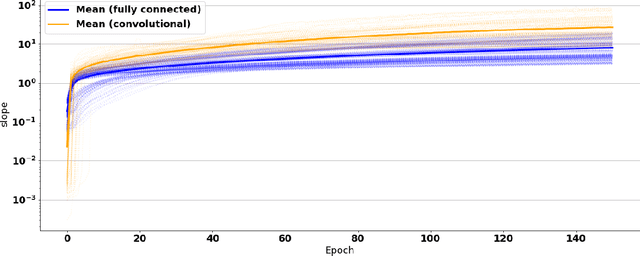

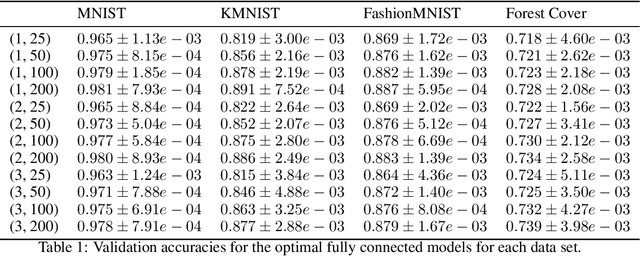

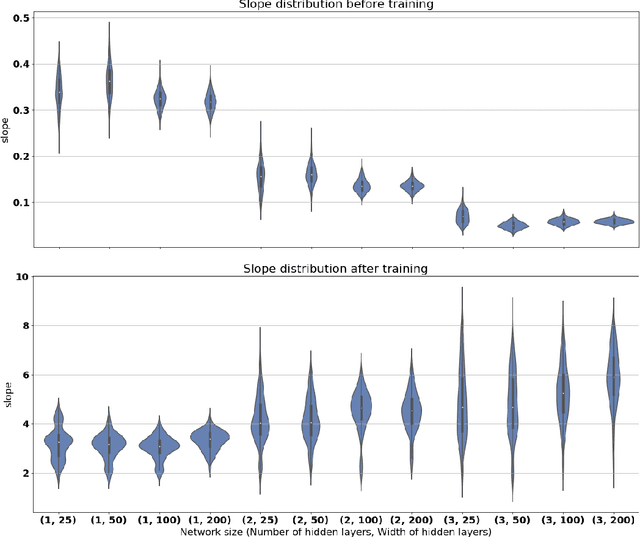

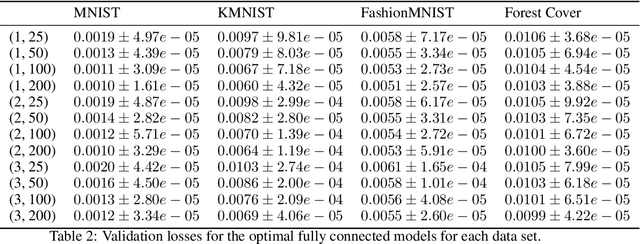

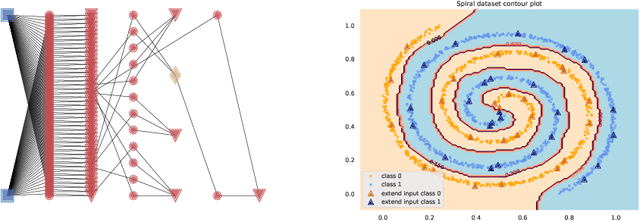

Slope and generalization properties of neural networks

Jul 03, 2021

Abstract: Neural networks are very successful tools in, for example, advanced classification. From a statistical point of view, fitting a neural network may be seen as a kind of regression, where we seek a function from the input space to a space of classification probabilities that follows the "general" shape of the data, but avoids overfitting by avoiding memorization of individual data points. In statistics, this can be done by controlling the geometric complexity of the regression function. We propose to do something similar when fitting neural networks by controlling the slope of the network. After defining the slope and discussing some of its theoretical properties, we go on to show empirically in examples, using ReLU networks, that the distribution of the slope of a well-trained neural network classifier is generally independent of the width of the layers in a fully connected network, and that the mean of the distribution only has a weak dependence on the model architecture in general. The slope is of similar size throughout the relevant volume, and varies smoothly. It also behaves as predicted in rescaling examples. We discuss possible applications of the slope concept, such as using it as a part of the loss function or stopping criterion during network training, or ranking data sets in terms of their complexity.

Lifelong Learning Starting From Zero

Jun 24, 2019

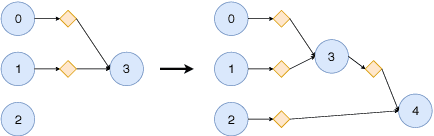

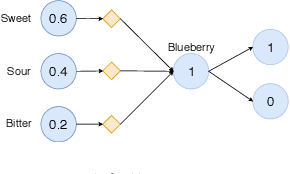

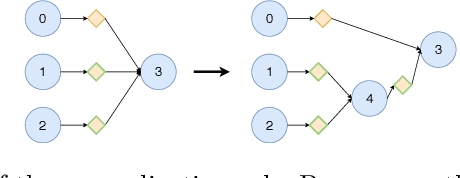

Abstract: We present a deep neural-network model for lifelong learning inspired by several forms of neuroplasticity. The neural network develops continuously in response to signals from the environment. In the beginning, the network is a blank slate with no nodes at all. It develops according to four rules: (i) expansion, which adds new nodes to memorize new input combinations; (ii) generalization, which adds new nodes that generalize from existing ones; (iii) forgetting, which removes nodes that are of relatively little use; and (iv) backpropagation, which fine-tunes the network parameters. We analyze the model from the perspective of accuracy, energy efficiency, and versatility, and compare it to other network models, finding better performance in several cases.
