Filip Slottner Seholm

Lifelong Learning Starting From Zero

Jun 24, 2019

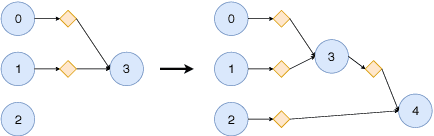

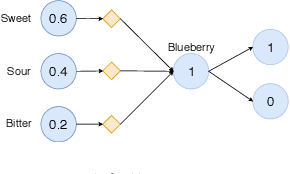

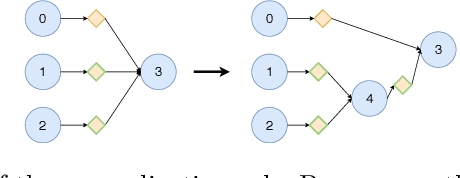

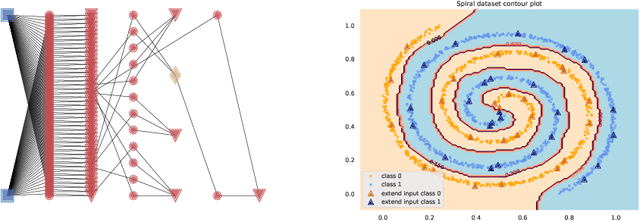

Abstract: We present a deep neural-network model for lifelong learning inspired by several forms of neuroplasticity. The neural network develops continuously in response to signals from the environment. In the beginning, the network is a blank slate with no nodes at all. It develops according to four rules: (i) expansion, which adds new nodes to memorize new input combinations; (ii) generalization, which adds new nodes that generalize from existing ones; (iii) forgetting, which removes nodes that are of relatively little use; and (iv) backpropagation, which fine-tunes the network parameters. We analyze the model from the perspective of accuracy, energy efficiency, and versatility and compare it to other network models, finding better performance in several cases.
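
To make the expansion and forgetting rules more concrete, here is a minimal Python toy that starts with zero nodes, adds a node whenever it sees an input combination it has not memorized, and drops the least-used older node once a capacity limit is exceeded. It is only a sketch and not the paper's model: the names `Node` and `GrowingMemory`, the `max_nodes` limit, and the usage-count criterion for "relatively little use" are all assumptions made for illustration, and the generalization and backpropagation rules are left out entirely.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    pattern: tuple  # input combination this node memorizes
    label: str      # label observed together with that pattern
    usage: int = 0  # how many times the node has matched an input


@dataclass
class GrowingMemory:
    # Hypothetical capacity limit; the abstract does not specify one.
    max_nodes: int = 3
    # Blank slate: the structure starts with no nodes at all.
    nodes: list = field(default_factory=list)

    def predict(self, pattern):
        """Return the memorized label for `pattern`, or None if unknown."""
        for node in self.nodes:
            if node.pattern == pattern:
                return node.label
        return None

    def observe(self, pattern, label):
        """Update the memory with one (pattern, label) observation."""
        for node in self.nodes:
            if node.pattern == pattern:
                node.usage += 1  # existing node was useful again
                return
        # Expansion (assumed form): memorize the new input combination.
        self.nodes.append(Node(pattern, label, usage=1))
        # Forgetting (assumed form): over capacity, so drop the least-used
        # node among the older ones, keeping the node that was just added.
        if len(self.nodes) > self.max_nodes:
            least_used = min(self.nodes[:-1], key=lambda n: n.usage)
            self.nodes.remove(least_used)


memory = GrowingMemory(max_nodes=2)
memory.observe((1, 0), "a")
memory.observe((1, 0), "a")  # seen twice, so usage is higher
memory.observe((0, 1), "b")
memory.observe((1, 1), "c")  # exceeds capacity and triggers forgetting
print([node.pattern for node in memory.nodes])
```

In this toy the rarely seen pattern `(0, 1)` is the one that gets forgotten, because its usage count is the lowest among the older nodes. A real model would need some notion of usefulness richer than a raw match count.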
