Nathan Korda

Fully autonomous tuning of a spin qubit

Feb 06, 2024

Abstract: Spanning over two decades, the study of qubits in semiconductors for quantum computing has yielded significant breakthroughs. However, the development of large-scale semiconductor quantum circuits is still limited by challenges in efficiently tuning and operating these circuits. Identifying optimal operating conditions for these qubits is complex, involving the exploration of vast parameter spaces. This presents a real 'needle in the haystack' problem, which, until now, has resisted complete automation due to device variability and fabrication imperfections. In this study, we present the first fully autonomous tuning of a semiconductor qubit, from a grounded device to Rabi oscillations, a clear indication of successful qubit operation. We demonstrate this automation, achieved without human intervention, in a Ge/Si core/shell nanowire device. Our approach integrates deep learning, Bayesian optimization, and computer vision techniques. We expect this automation algorithm to apply to a wide range of semiconductor qubit devices, allowing for statistical studies of qubit quality metrics. As a demonstration of the potential of full automation, we characterise how the Rabi frequency and g-factor depend on barrier gate voltages for one of the qubits found by the algorithm. Twenty years after the initial demonstrations of spin qubit operation, this significant advancement is poised to finally catalyze the operation of large, previously unexplored quantum circuits.

The Automated Bias Triangle Feature Extraction Framework

Dec 05, 2023

Abstract: Bias triangles represent features in stability diagrams of Quantum Dot (QD) devices, whose occurrence and property analysis are crucial indicators for spin physics. Nevertheless, challenges associated with quality and availability of data as well as the subtlety of physical phenomena of interest have hindered an automatic and bespoke analysis framework, often still relying (in part) on human labelling and verification. We introduce a feature extraction framework for bias triangles, built from unsupervised, segmentation-based computer vision methods, which facilitates the direct identification and quantification of physical properties of the former. Thereby, the need for human input or large training datasets to inform supervised learning approaches is circumvented, while additionally enabling the automation of pixelwise shape and feature labeling. In particular, we demonstrate that Pauli Spin Blockade (PSB) detection can be conducted effectively, efficiently and without any training data as a direct result of this approach.

Distributed Clustering of Linear Bandits in Peer to Peer Networks

Jun 07, 2016

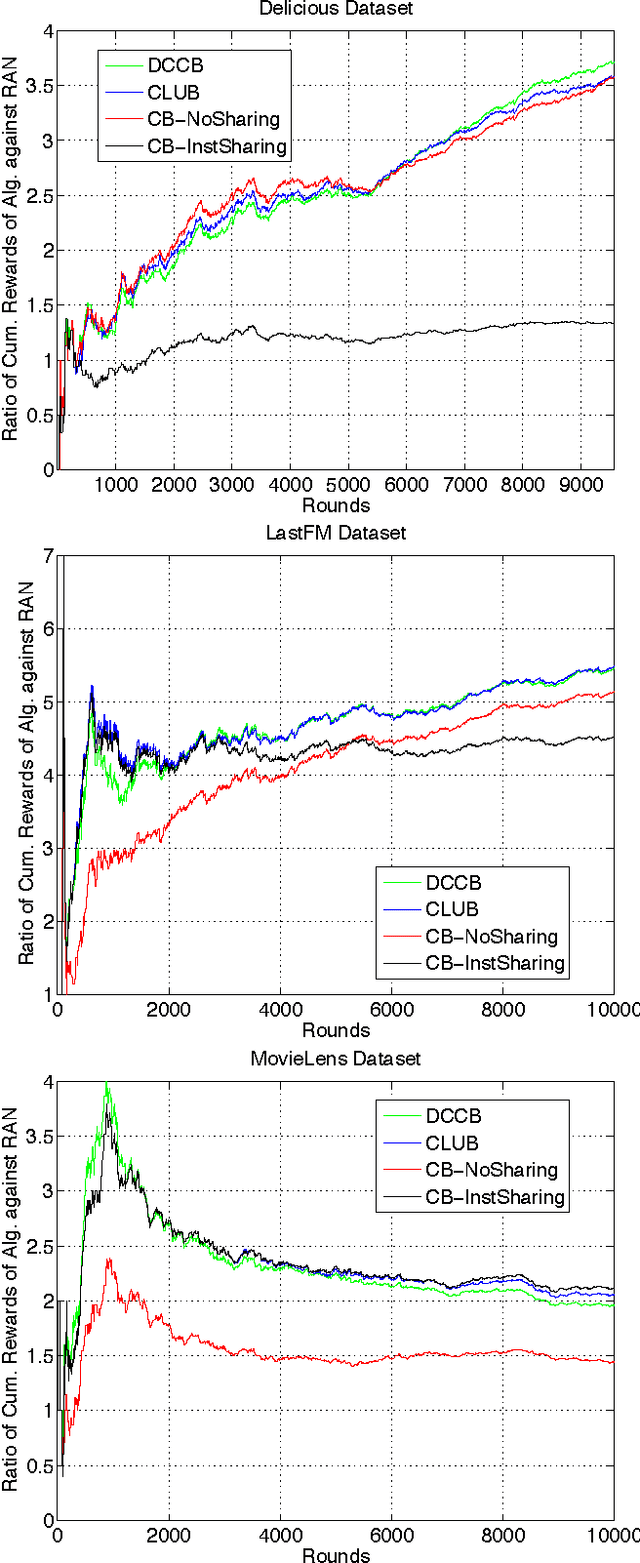

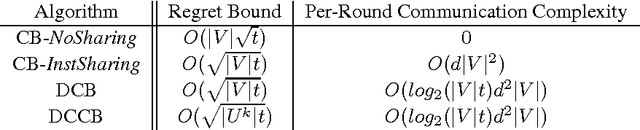

Abstract: We provide two distributed confidence ball algorithms for solving linear bandit problems in peer to peer networks with limited communication capabilities. For the first, we assume that all the peers are solving the same linear bandit problem, and prove that our algorithm achieves the optimal asymptotic regret rate of any centralised algorithm that can instantly communicate information between the peers. For the second, we assume that there are clusters of peers solving the same bandit problem within each cluster, and we prove that our algorithm discovers these clusters, while achieving the optimal asymptotic regret rate within each one. Through experiments on several real-world datasets, we demonstrate the performance of proposed algorithms compared to the state-of-the-art.
