Nassim Belmecheri

Learning Graph Representation of Agent Diffusers

May 15, 2025

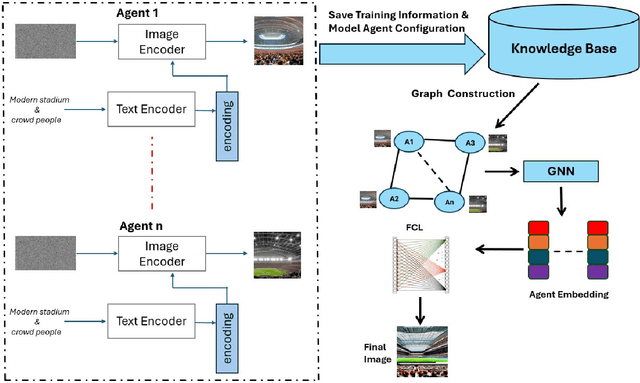

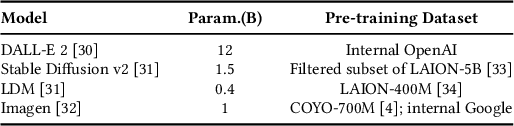

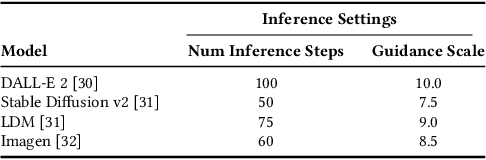

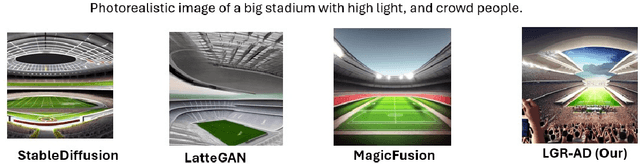

Abstract: Diffusion-based generative models have significantly advanced text-to-image synthesis, demonstrating impressive text comprehension and zero-shot generalization. These models refine images from random noise based on textual prompts, with initial reliance on text input shifting towards enhanced visual fidelity over time. This transition suggests that static model parameters might not optimally address the distinct phases of generation. We introduce LGR-AD (Learning Graph Representation of Agent Diffusers), a novel multi-agent system designed to improve adaptability in dynamic computer vision tasks. LGR-AD models the generation process as a distributed system of interacting agents, each representing an expert sub-model. These agents dynamically adapt to varying conditions and collaborate through a graph neural network that encodes their relationships and performance metrics. Our approach employs a coordination mechanism based on top-$k$ maximum spanning trees, optimizing the generation process. Each agent's decision-making is guided by a meta-model that minimizes a novel loss function, balancing accuracy and diversity. Theoretical analysis and extensive empirical evaluations show that LGR-AD outperforms traditional diffusion models across various benchmarks, highlighting its potential for scalable and flexible solutions in complex image generation tasks. Code is available at: https://github.com/YousIA/LGR_AD
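To make the phrase "top-$k$ maximum spanning trees" concrete, the following is a minimal brute-force sketch, not the LGR-AD implementation. It assumes a small, hypothetical agent graph whose edge weights stand in for learned pairwise affinities; the function names and the example weights are illustrative only. It enumerates every spanning tree and keeps the k heaviest, which is only practical for a handful of agents.

```python
from itertools import combinations

def is_spanning_tree(n_nodes, edges):
    """Return True if the n_nodes - 1 given edges contain no cycle (union-find check)."""
    parent = list(range(n_nodes))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for u, v, _ in edges:
        ru, rv = find(u), find(v)
        if ru == rv:
            return False  # adding this edge would close a cycle
        parent[ru] = rv
    return True

def top_k_max_spanning_trees(n_nodes, edges, k):
    """Brute force: enumerate all spanning trees and keep the k with the largest total weight."""
    trees = [c for c in combinations(edges, n_nodes - 1)
             if is_spanning_tree(n_nodes, c)]
    trees.sort(key=lambda t: sum(w for _, _, w in t), reverse=True)
    return trees[:k]

# Hypothetical affinities between four agents, given as (agent_a, agent_b, weight).
edges = [(0, 1, 0.9), (0, 2, 0.4), (1, 2, 0.8), (1, 3, 0.25), (2, 3, 0.7)]
top = top_k_max_spanning_trees(4, edges, k=3)
totals = [sum(w for _, _, w in t) for t in top]
```

Each returned tree is one candidate communication structure among the agents; how such trees are actually weighted and used in LGR-AD is described in the paper and its repository, not here.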

Explainable Scene Understanding with Qualitative Representations and Graph Neural Networks

Apr 17, 2025

Abstract: This paper investigates the integration of graph neural networks (GNNs) with Qualitative Explainable Graphs (QXGs) for scene understanding in automated driving. Scene understanding is the basis for any further reactive or proactive decision-making. Scene understanding and related reasoning is inherently an explanation task: why is another traffic participant doing something, what or who caused their actions? While previous work demonstrated QXGs' effectiveness using shallow machine learning models, these approaches were limited to analysing single relation chains between object pairs, disregarding the broader scene context. We propose a novel GNN architecture that processes entire graph structures to identify relevant objects in traffic scenes. We evaluate our method on the nuScenes dataset enriched with DriveLM's human-annotated relevance labels. Experimental results show that our GNN-based approach achieves superior performance compared to baseline methods. The model effectively handles the inherent class imbalance in relevant object identification tasks while considering the complete spatial-temporal relationships between all objects in the scene. Our work demonstrates the potential of combining qualitative representations with deep learning approaches for explainable scene understanding in autonomous driving systems.

Evaluating Human Trajectory Prediction with Metamorphic Testing

Jul 26, 2024

Abstract: The prediction of human trajectories is important for planning in autonomous systems that act in the real world, e.g. automated driving or mobile robots. Human trajectory prediction is a noisy process, and no prediction precisely matches any future trajectory. It is therefore approached as a stochastic problem, where the goal is to minimise the error between the true and the predicted trajectory. In this work, we explore the application of metamorphic testing for human trajectory prediction. Metamorphic testing is designed to handle unclear or missing test oracles. It is well suited to human trajectory prediction, where there is no clear criterion of correct or incorrect human behaviour. Metamorphic relations rely on transformations over source test cases and exploit invariants. This setting fits human trajectory prediction well, because expected human behaviour has many symmetries under variations of the input, e.g. mirroring and rescaling of the input data. We discuss how metamorphic testing can be applied to stochastic human trajectory prediction and introduce the Wasserstein Violation Criterion to statistically assess whether a follow-up test case violates a label-preserving metamorphic relation.
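The idea of checking a metamorphic relation on a stochastic predictor can be illustrated with a small sketch. It is not the paper's Wasserstein Violation Criterion: the one-dimensional setting, the two toy predictors, and the fixed `threshold` are all assumptions made for illustration, standing in for the statistical assessment the abstract mentions. The relation tested is mirroring: predicting on a mirrored input and mirroring the result back should give the same distribution as predicting on the source input.

```python
import random

def wasserstein_1d(a, b):
    """1-Wasserstein distance between two equal-size 1D empirical samples."""
    assert len(a) == len(b)
    return sum(abs(x - y) for x, y in zip(sorted(a), sorted(b))) / len(a)

def toy_predictor(history, rng, n_samples=500):
    """Hypothetical predictor: last position plus last step plus Gaussian noise."""
    step = history[-1] - history[-2]
    return [history[-1] + step + rng.gauss(0.0, 0.1) for _ in range(n_samples)]

def biased_predictor(history, rng, n_samples=500):
    """Hypothetical faulty predictor that always drifts towards +x."""
    return [x + 0.5 for x in toy_predictor(history, rng, n_samples)]

def mirror(xs):
    """Mirror 1D positions about the origin."""
    return [-x for x in xs]

def violates_mirror_relation(predictor, history, threshold=0.1, seed=0):
    """Flag a violation if source and mapped-back follow-up predictions differ too much.

    The fixed threshold is an assumption for this sketch, not the paper's criterion.
    """
    rng = random.Random(seed)
    source = predictor(history, rng)
    follow_up = mirror(predictor(mirror(history), rng))  # back in the source frame
    return wasserstein_1d(source, follow_up) > threshold

history = [0.0, 0.5, 1.0]
symmetric_ok = not violates_mirror_relation(toy_predictor, history)
bias_detected = violates_mirror_relation(biased_predictor, history)
```

The symmetric predictor passes because mirroring only flips the sign of its noise, while the biased predictor's constant drift does not flip with the input and shows up as a large distance between the two samples.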

Towards Trustworthy Automated Driving through Qualitative Scene Understanding and Explanations

Mar 25, 2024

Abstract: Understanding driving scenes and communicating automated vehicle decisions are key requirements for trustworthy automated driving. In this article, we introduce the Qualitative Explainable Graph (QXG), which is a unified symbolic and qualitative representation for scene understanding in urban mobility. The QXG enables interpreting an automated vehicle's environment using sensor data and machine learning models. It utilizes spatio-temporal graphs and qualitative constraints to extract scene semantics from raw sensor inputs, such as LiDAR and camera data, offering an interpretable scene model. A QXG can be incrementally constructed in real-time, making it a versatile tool for in-vehicle explanations across various sensor types. Our research showcases the potential of QXG, particularly in the context of automated driving, where it can rationalize decisions by linking the graph with observed actions. These explanations can serve diverse purposes, from informing passengers and alerting vulnerable road users to enabling post-hoc analysis of prior behaviors.

Acquiring Qualitative Explainable Graphs for Automated Driving Scene Interpretation

Aug 24, 2023

Abstract: The future of automated driving (AD) is rooted in the development of robust, fair and explainable artificial intelligence methods. Upon request, automated vehicles must be able to explain their decisions to the driver and the car passengers, to the pedestrians and other vulnerable road users and potentially to external auditors in case of accidents. However, nowadays, most explainable methods still rely on quantitative analysis of the AD scene representations captured by multiple sensors. This paper proposes a novel representation of AD scenes, called Qualitative eXplainable Graph (QXG), dedicated to qualitative spatiotemporal reasoning of long-term scenes. The construction of this graph exploits the recent Qualitative Constraint Acquisition paradigm. Our experimental results on nuScenes, an open real-world multi-modal dataset, show that the Qualitative eXplainable Graph of an AD scene composed of 40 frames can be computed in real time and is light in storage space, which makes it a potentially interesting tool for improved and more trustworthy perception and control processes in AD.

Boosting the Learning for Ranking Patterns

Mar 05, 2022

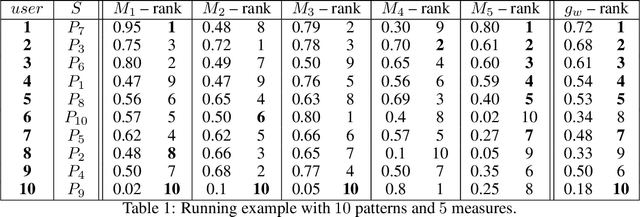

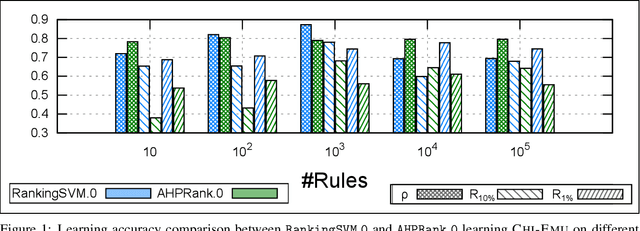

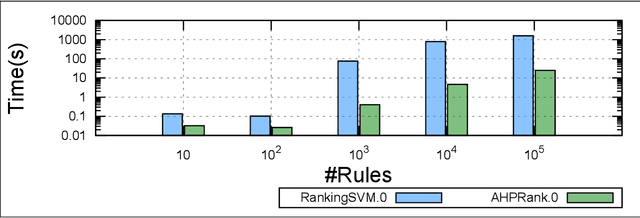

Abstract: Discovering relevant patterns for a particular user remains a challenging task in data mining. Several approaches have been proposed to learn user-specific pattern ranking functions. These approaches generalize well, but at the expense of the running time. On the other hand, several measures are often used to evaluate the interestingness of patterns, with the hope of revealing a ranking that is as close as possible to the user-specific ranking. In this paper, we formulate the problem of learning pattern ranking functions as a multicriteria decision-making problem. Our approach aggregates different interestingness measures into a single weighted linear ranking function, using an interactive learning procedure that operates in either passive or active modes. A fast learning step is used for eliciting the weights of all the measures by means of pairwise comparisons. This approach is based on the Analytic Hierarchy Process (AHP) and uses a set of user-ranked patterns to build a preference matrix, which compares the importance of measures according to the user-specific interestingness. A sensitivity-based heuristic is proposed for the active learning mode, in order to ensure high-quality results with few user ranking queries. Experiments conducted on well-known datasets show that our approach significantly reduces the running time and returns precise pattern ranking, while being robust to user error compared with state-of-the-art approaches.
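As a rough illustration of how pairwise comparisons can yield the weights of a linear ranking function, the sketch below derives AHP-style weights from a preference matrix and scores patterns with them. It is not the paper's procedure: the matrix is given by hand rather than built from user-ranked patterns, the row geometric-mean method is used as a common approximation of the AHP priority vector, and the measure names and pattern values are hypothetical.

```python
import math

def ahp_weights(pairwise):
    """Approximate AHP priority weights with the row geometric-mean method."""
    n = len(pairwise)
    geo_means = [math.prod(row) ** (1.0 / n) for row in pairwise]
    total = sum(geo_means)
    return [g / total for g in geo_means]

def rank_patterns(patterns, weights):
    """Sort pattern names by a weighted linear score over their measure values."""
    def score(measures):
        return sum(w * m for w, m in zip(weights, measures))
    return sorted(patterns, key=lambda name: score(patterns[name]), reverse=True)

# Hypothetical preference matrix over three measures (support, confidence, lift):
# entry [i][j] says how much more important measure i is than measure j.
pairwise = [
    [1.0, 3.0, 5.0],
    [1 / 3, 1.0, 2.0],
    [1 / 5, 1 / 2, 1.0],
]
weights = ahp_weights(pairwise)

# Hypothetical patterns with normalised values for the same three measures.
patterns = {
    "p1": [0.9, 0.2, 0.1],
    "p2": [0.3, 0.8, 0.7],
    "p3": [0.1, 0.1, 0.9],
}
ranking = rank_patterns(patterns, weights)
```

Because the first measure dominates the comparisons, it receives the largest weight and the pattern that scores best on it is ranked first.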
