Naoya Muramatsu

AcinoSet: A 3D Pose Estimation Dataset and Baseline Models for Cheetahs in the Wild

Mar 24, 2021

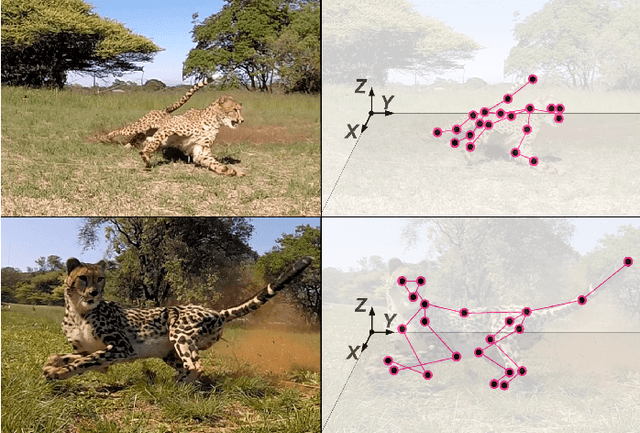

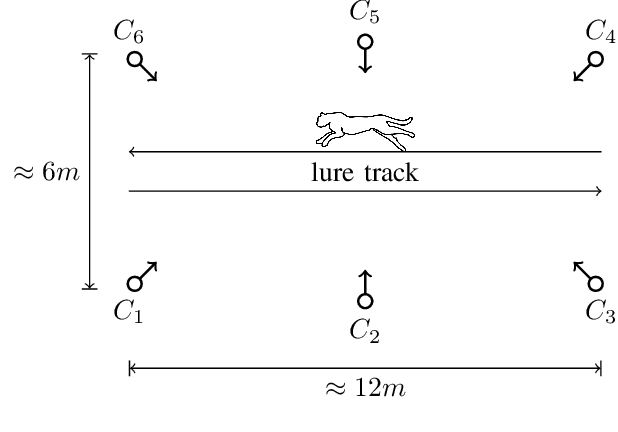

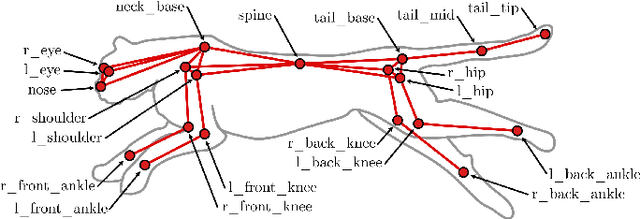

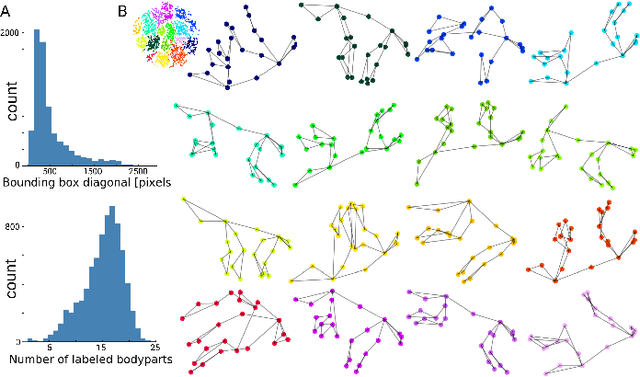

Abstract: Animals are capable of extreme agility, yet understanding their complex dynamics, which have ecological, biomechanical and evolutionary implications, remains challenging. Being able to study this incredible agility will be critical for the development of next-generation autonomous legged robots. In particular, the cheetah (Acinonyx jubatus) is supremely fast and maneuverable, yet quantifying its whole-body 3D kinematic data during locomotion in the wild remains a challenge, even with new deep learning-based methods. In this work we present an extensive dataset of free-running cheetahs in the wild, called AcinoSet, that contains 119,490 frames of multi-view synchronized high-speed video footage, camera calibration files and 7,588 human-annotated frames. We utilize markerless animal pose estimation to provide 2D keypoints. Then, we use three methods that serve as strong baselines for 3D pose estimation tool development: traditional sparse bundle adjustment, an Extended Kalman Filter, and a trajectory optimization-based method we call Full Trajectory Estimation. The resulting 3D trajectories, human-checked 3D ground truth, and an interactive tool to inspect the data are also provided. We believe this dataset will be useful for a diverse range of fields such as ecology, neuroscience, robotics, biomechanics as well as computer vision.

Combining Spiking Neural Network and Artificial Neural Network for Enhanced Image Classification

Feb 28, 2021

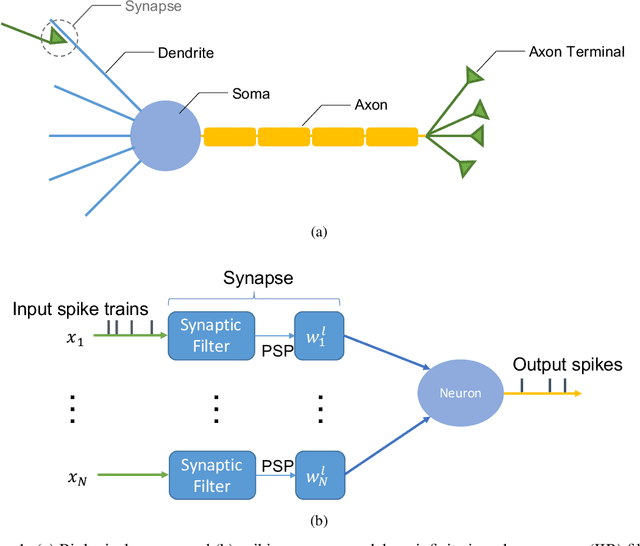

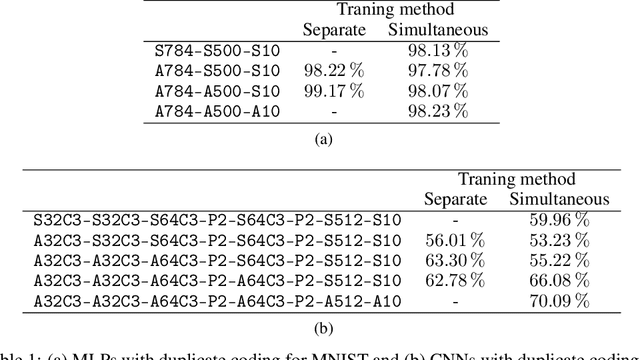

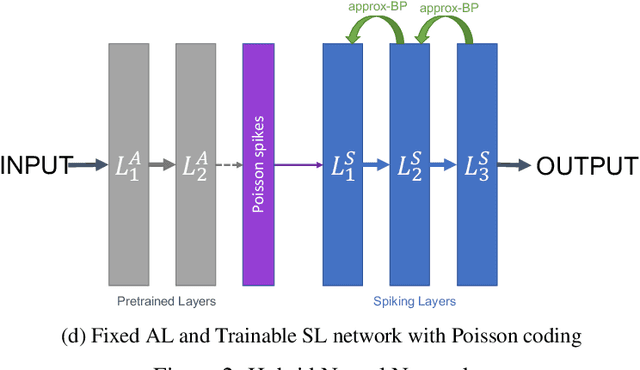

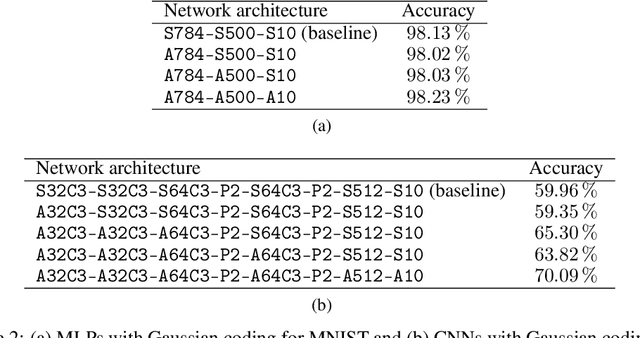

Abstract: With the continued innovations of deep neural networks, spiking neural networks (SNNs) that more closely resemble biological brain synapses have attracted attention owing to their low power consumption. However, for continuous data values, they must employ a coding process to convert the values to spike trains. Thus, they have not yet exceeded the performance of artificial neural networks (ANNs), which handle such values directly. To this end, we combine an ANN and an SNN to build versatile hybrid neural networks (HNNs) that improve the concerned performance. To qualify this performance, MNIST and CIFAR-10 image datasets are used for various classification tasks in which the training and coding methods change. In addition, we present simultaneous and separate methods to train the artificial and spiking layers, considering the coding methods of each. We find that increasing the number of artificial layers at the expense of spiking layers improves the HNN performance. For straightforward datasets such as MNIST, it is easy to achieve the same performance as ANNs by using duplicate coding and separate learning. However, for more complex tasks, the use of Gaussian coding and simultaneous learning is found to improve the accuracy of HNNs while utilizing a smaller number of artificial layers.
