Combining Spiking Neural Network and Artificial Neural Network for Enhanced Image Classification


Feb 28, 2021

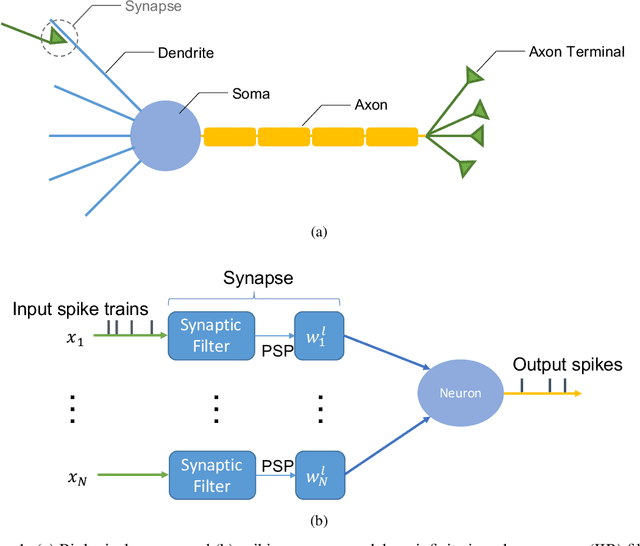

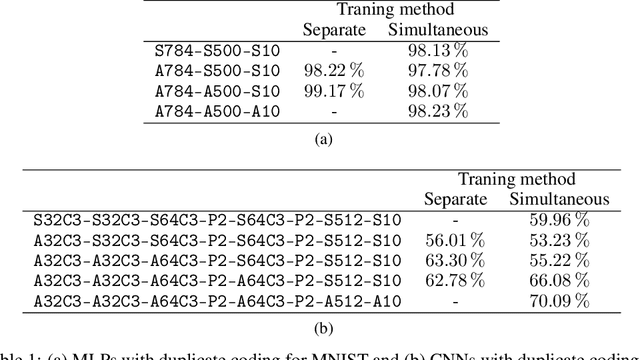

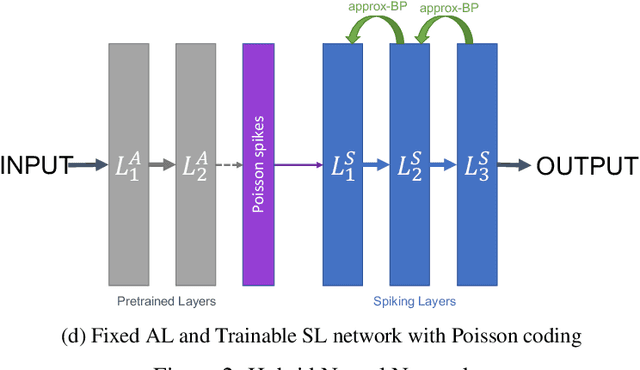

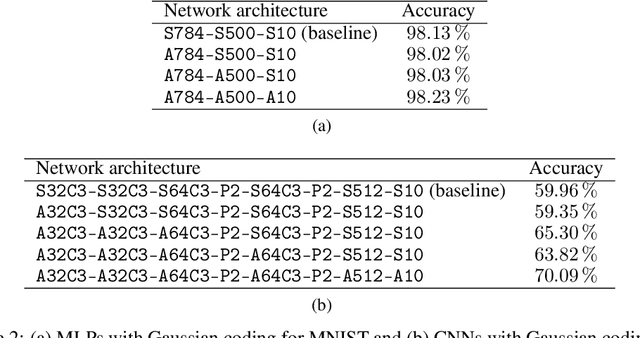

With the continued innovation in deep neural networks, spiking neural networks (SNNs), which more closely resemble biological brain synapses, have attracted attention owing to their low power consumption. However, for continuous data values, they must employ a coding process to convert the values to spike trains. Thus, they have not yet exceeded the performance of artificial neural networks (ANNs), which handle such values directly. To this end, we combine an ANN and an SNN to build versatile hybrid neural networks (HNNs) that improve performance. To quantify this performance, the MNIST and CIFAR-10 image datasets are used for various classification tasks in which the training and coding methods are varied. In addition, we present simultaneous and separate methods to train the artificial and spiking layers, considering the coding methods of each. We find that increasing the number of artificial layers at the expense of spiking layers improves HNN performance. For straightforward datasets such as MNIST, it is easy to achieve the same performance as ANNs by using duplicate coding and separate learning. However, for more complex tasks, the use of Gaussian coding and simultaneous learning is found to improve the accuracy of HNNs while using fewer artificial layers.
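To make the coding step concrete, the following is a minimal sketch of how a continuous value such as a normalized pixel intensity might be turned into spike trains. It is not the paper's encoder: the abstract does not define its duplicate or Gaussian coding, so the sketch substitutes two generic schemes, Bernoulli rate coding and a population of Gaussian receptive fields. The function names, the number of time steps, the number of neurons, and the default `sigma` are all assumptions chosen for illustration.

```python
import math
import random


def rate_code(value, steps, rng):
    """Bernoulli rate coding (assumed scheme): at each time step,
    emit a spike with probability equal to `value` in [0, 1]."""
    if not 0.0 <= value <= 1.0:
        raise ValueError("value must be in [0, 1]")
    return [1 if rng.random() < value else 0 for _ in range(steps)]


def gaussian_population_code(value, n_neurons, sigma=None):
    """Gaussian receptive fields (assumed scheme): return the activation
    of `n_neurons` tuning curves whose centers are evenly spaced on [0, 1]."""
    if n_neurons < 2:
        raise ValueError("n_neurons must be at least 2")
    centers = [i / (n_neurons - 1) for i in range(n_neurons)]
    if sigma is None:
        # Hypothetical default: width equal to the spacing between centers.
        sigma = 1.0 / (n_neurons - 1)
    return [math.exp(-((value - c) ** 2) / (2.0 * sigma ** 2)) for c in centers]


rng = random.Random(0)  # fixed seed so the sketch is reproducible
pixel = 0.8             # a normalized pixel intensity

# One spike train whose firing rate tracks the pixel intensity.
train = rate_code(pixel, steps=1000, rng=rng)

# One activation per receptive field, each then rate coded into its own train.
activations = gaussian_population_code(pixel, n_neurons=5)
population_trains = [rate_code(a, steps=1000, rng=rng) for a in activations]
```

In this sketch a single value expands into several spike trains, and the neuron whose center lies closest to the value fires most often. That expansion is the kind of extra processing an ANN layer avoids by consuming the continuous value directly.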
