Nadine Kroher

Towards Training Music Taggers on Synthetic Data

Jul 02, 2024

Abstract: Most contemporary music tagging systems rely on large volumes of annotated data. As an alternative, we investigate the extent to which synthetically generated music excerpts can improve tagging systems when only small annotated collections are available. To this end, we release GTZAN-synth, a synthetic dataset that follows the taxonomy of the well-known GTZAN dataset while being ten times larger in data volume. We first observe that simply adding this synthetic dataset to the training split of GTZAN does not result in performance improvements. We then investigate domain adaptation, transfer learning, and fine-tuning strategies for the task at hand and conclude that the last two yield an increase in accuracy. Overall, the proposed approach can be considered a first guide in a promising field for future research.

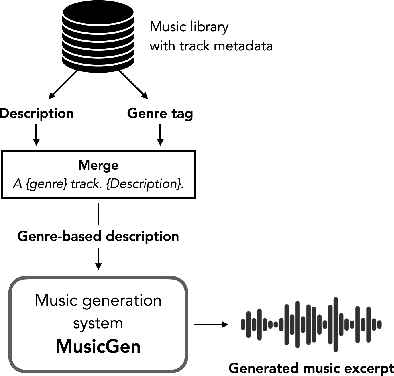

Can MusicGen Create Training Data for MIR Tasks?

Nov 15, 2023

Abstract: We are investigating the broader concept of using AI-based generative music systems to generate training data for Music Information Retrieval (MIR) tasks. To kick off this line of work, we ran an initial experiment in which we trained a genre classifier on a fully artificial music dataset created with MusicGen. We constructed over 50 000 genre-conditioned textual descriptions and generated a collection of music excerpts that covers five musical genres. Our preliminary results show that the proposed model can learn genre-specific characteristics from artificial music tracks that generalise well to real-world music recordings.

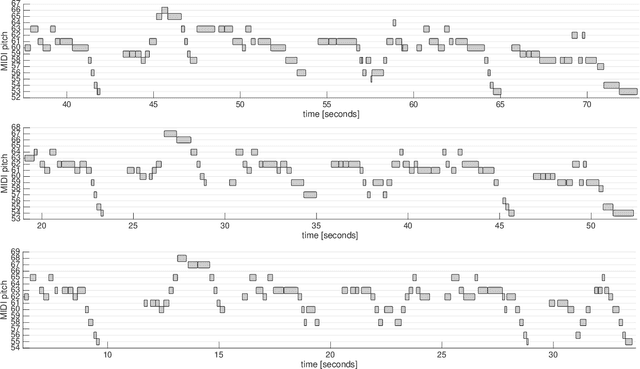

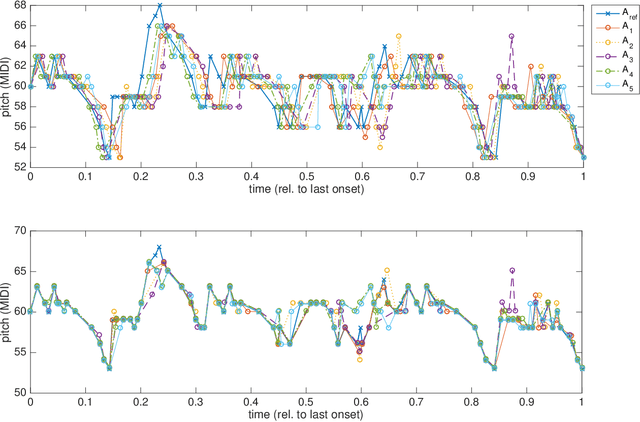

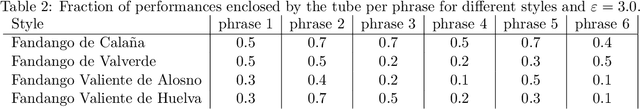

Computing Melodic Templates in Oral Music Traditions

Sep 27, 2022

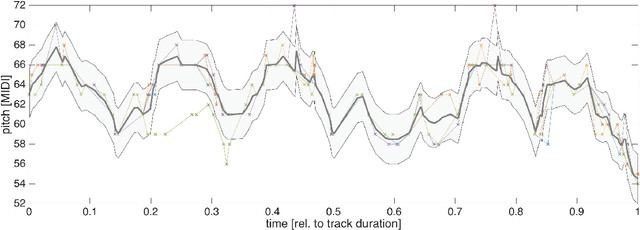

Abstract: The term melodic template or skeleton refers to a basic melody which is subject to variation during a music performance. In many oral music traditions, these templates are implicitly passed down through generations without ever being formalized in a score. In this work, we introduce a new geometric optimization problem, the spanning tube problem, to approximate a melodic template for a set of labeled performance transcriptions corresponding to a specific style in oral music traditions. Given a set of $n$ piecewise linear functions, we solve the problem of finding a continuous function, $f^*$, and a minimum value, $\varepsilon^*$, such that the vertical segment of length $2\varepsilon^*$ centered at $(x,f^*(x))$ intersects at least $p$ functions ($p\leq n$). The method explored here also provides a novel tool for quantitatively assessing the amount of melodic variation which occurs across performances.
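To make the problem statement concrete, here is a minimal Python sketch of a simplified, discretized relaxation of the spanning tube problem. It is not the algorithm from the paper. It assumes each transcription is a sorted list of `(x, y)` breakpoints, samples a grid of `x` values, and at each sample finds the narrowest vertical window covering at least $p$ of the $n$ function values. The largest such half-width over the grid is a lower bound on $\varepsilon^*$, because any valid tube must cover $p$ functions at every `x`. The continuity requirement on $f^*$ is ignored here, so the true optimum may be larger. All function names are hypothetical.

```python
from bisect import bisect_right


def interpolate(points, x):
    """Evaluate a piecewise linear function given as sorted (x, y) breakpoints."""
    xs = [px for px, _ in points]
    if x <= xs[0]:
        return points[0][1]
    if x >= xs[-1]:
        return points[-1][1]
    i = bisect_right(xs, x) - 1
    (x0, y0), (x1, y1) = points[i], points[i + 1]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def pointwise_half_width(values, p):
    """Return (eps, center) of the narrowest interval covering at least p values."""
    s = sorted(values)
    # Slide a window over the sorted values; each window holds exactly p of them.
    width, i = min((s[i + p - 1] - s[i], i) for i in range(len(s) - p + 1))
    return width / 2, (s[i] + s[i + p - 1]) / 2


def tube_lower_bound(functions, p, grid):
    """Lower bound on eps*: the largest pointwise half-width over the sampled grid.

    Assumption: continuity of the template f* is NOT enforced, so this is a
    relaxation of the problem rather than a solver for it.
    """
    eps = 0.0
    for x in grid:
        values = [interpolate(f, x) for f in functions]
        e, _ = pointwise_half_width(values, p)
        eps = max(eps, e)
    return eps


# Three toy "transcriptions" defined on [0, 1].
functions = [
    [(0.0, 0.0), (1.0, 0.0)],
    [(0.0, 1.0), (1.0, 1.0)],
    [(0.0, 4.0), (1.0, 2.0)],
]
grid = [0.0, 0.5, 1.0]
bound_p2 = tube_lower_bound(functions, 2, grid)
bound_p3 = tube_lower_bound(functions, 3, grid)
```

Requiring the tube to cover all three toy functions (`p = 3`) forces a wider tube than covering only two of them (`p = 2`). In this sense $\varepsilon^*$ can serve as a measure of melodic variation across performances.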

Maths, Computation and Flamenco: overview and challenges

Sep 22, 2022

Abstract: Flamenco is a rich performance-oriented art music genre from Southern Spain which attracts a growing community of aficionados around the globe. Due to its improvisational and expressive nature, its unique musical characteristics, and the fact that the genre is largely undocumented, flamenco poses a number of interesting mathematical and computational challenges. Most existing approaches in Music Information Retrieval (MIR) were developed in the context of popular or classical music and often do not generalize well to non-Western music traditions, in particular when the underlying music-theoretical assumptions do not hold for these genres. Over the past decade, a number of computational problems related to the automatic analysis of flamenco music have been defined, and several methods addressing a variety of musical aspects have been proposed. This paper provides an overview of the challenges which arise in the computational analysis of flamenco music and outlines existing approaches.
