Myke C. Cohen

Birds of a Different Feather Flock Together: Exploring Opportunities and Challenges in Animal-Human-Machine Teaming

Apr 17, 2025

Abstract: Animal-Human-Machine (AHM) teams are a type of hybrid intelligence system wherein interactions between a human, AI-enabled machine, and animal members can result in unique capabilities greater than the sum of their parts. This paper calls for a systematic approach to studying the design of AHM team structures to optimize performance and overcome limitations in various applied settings. We consider the challenges and opportunities in investigating the synergistic potential of AHM team members by introducing a set of dimensions of AHM team functioning to effectively utilize each member's strengths while compensating for individual weaknesses. Using three representative examples of such teams -- security screening, search-and-rescue, and guide dogs -- the paper illustrates how AHM teams can tackle complex tasks. We conclude with open research directions that this multidimensional approach presents for studying hybrid human-AI systems beyond AHM teams.

Exploratory Models of Human-AI Teams: Leveraging Human Digital Twins to Investigate Trust Development

Nov 01, 2024

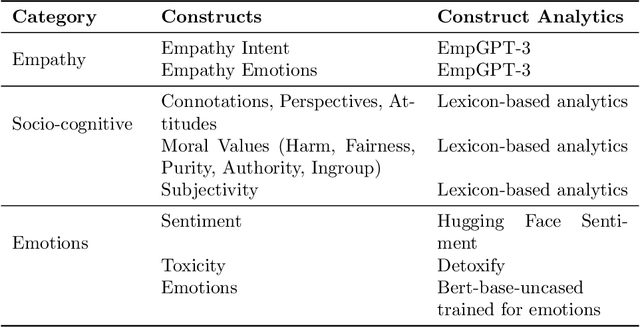

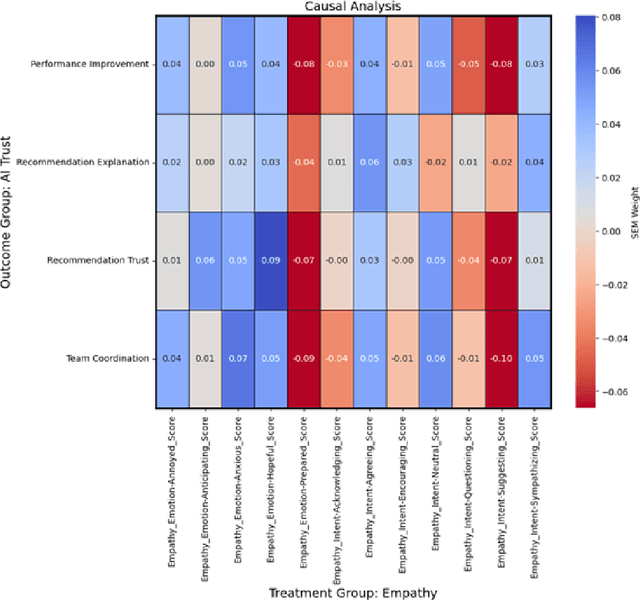

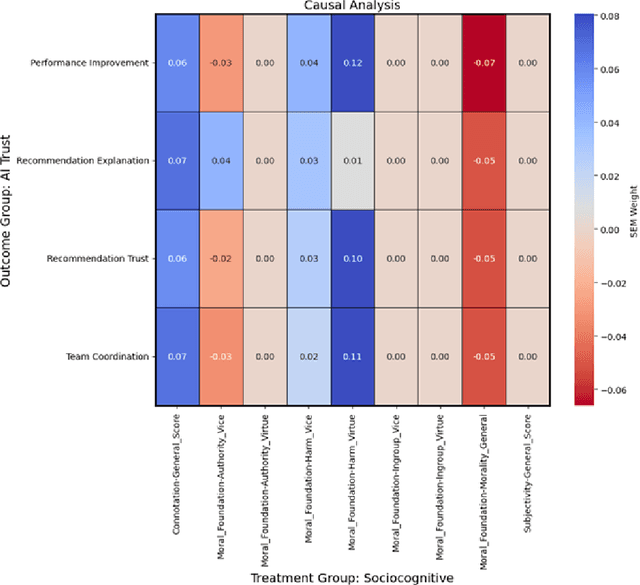

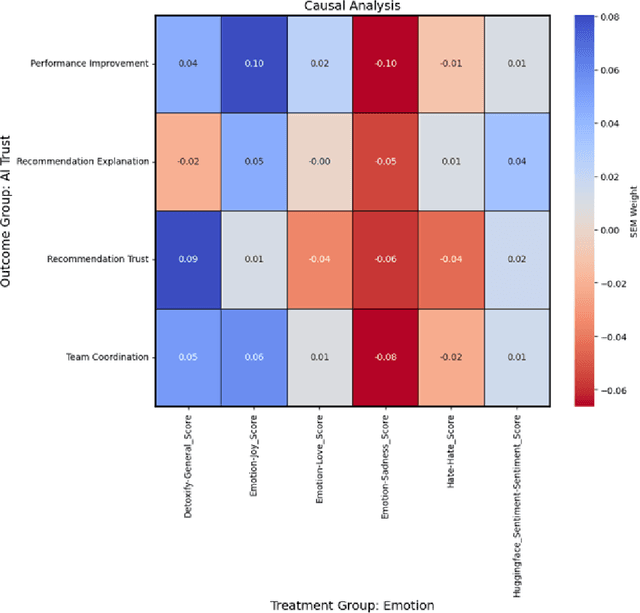

Abstract: As human-agent teaming (HAT) research continues to grow, computational methods for modeling HAT behaviors and measuring HAT effectiveness also continue to develop. One rising method involves the use of human digital twins (HDTs) to approximate human behaviors and socio-emotional-cognitive reactions to AI-driven agent team members. In this paper, we address three research questions relating to the use of digital twins for modeling trust in HATs. First, to address the question of how we can appropriately model and operationalize HAT trust through HDT HAT experiments, we conducted causal analytics of team communication data to understand the impact of empathy, socio-cognitive, and emotional constructs on trust formation. Additionally, we reflect on the current state of HAT trust science to discuss characteristics of HAT trust that an HDT must be able to replicate, such as individual differences in trust tendencies, emergent trust patterns, and appropriate measurement of these characteristics over time. Second, to address the question of how valid measures of HDT trust are for approximating human trust in HATs, we discuss the properties of HDT trust: self-report measures, interaction-based measures, and compliance-type behavioral measures. Additionally, we share results of preliminary simulations comparing different LLMs for generating HDT communications and analyze their ability to replicate human-like trust dynamics. Third, to address how HAT experimental manipulations will extend to human digital twin studies, we share an experimental design focusing on propensity to trust for HDTs vs. transparency and competency-based trust for AI agents.
