Murat Torlak

Low Complexity Radio Frequency Interference Mitigation for Radio Astronomy Using Large Antenna Array

Mar 07, 2024

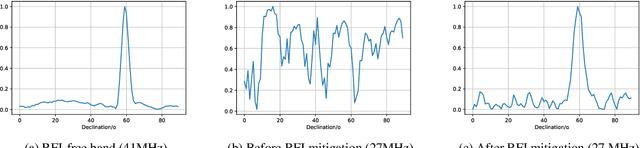

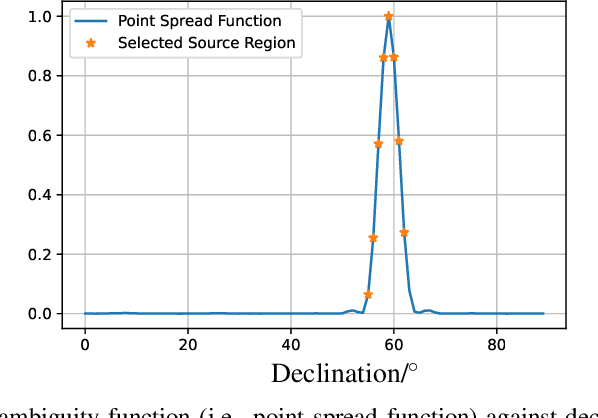

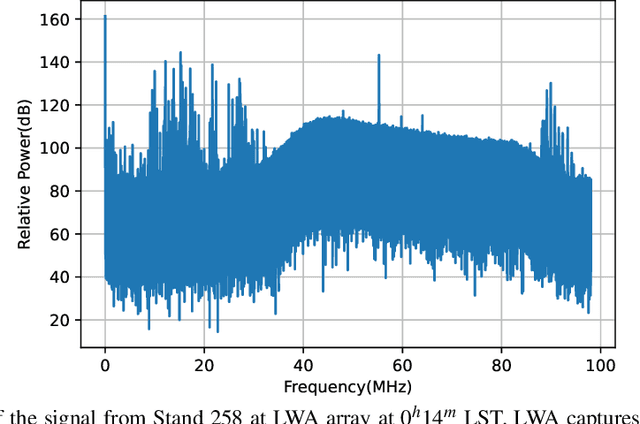

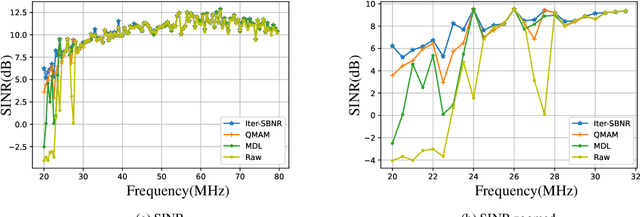

Abstract: With the ongoing growth in radio communications, radio astronomical source data are increasingly contaminated, which hinders the study of celestial radio sources. In many cases, fast mitigation of strong radio frequency interference (RFI) is valuable for studying short-lived radio transients, so that astronomers can perform detailed observations of celestial radio sources. The standard method, manually excising contaminated blocks in time and frequency, makes the removed data useless for radio astronomy analyses. This motivates the need for better RFI mitigation techniques for arrays of $M$ antennas. Although many solutions for mitigating strong RFI improve the quality of the final celestial source signal, many standard approaches require all the eigenvalues of the spatial covariance matrix ($\textbf{R} \in \mathbb{C}^{M \times M}$) of the received signal, which has $O(M^3)$ computational complexity for removing RFI of size $d$, where $d \ll M$. In this work, we investigate two approaches for RFI mitigation: 1) the computationally efficient Lanczos method based on the Quadratic Mean to Arithmetic Mean (QMAM) approach, using information from previously collected data under similar radio-sky conditions, and 2) an approach using a celestial source as a reference for RFI mitigation. QMAM uses the Lanczos method to find the Rayleigh-Ritz values of the covariance matrix $\textbf{R}$, thus reducing the computational complexity of the overall approach to $O(dM^2)$. Our numerical results, using data from the Long Wavelength Array (LWA-1) radio observatory, demonstrate the effectiveness of both proposed approaches in removing strong RFI, with the QMAM-based approach still being computationally efficient.
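To make the complexity argument concrete, the following is a minimal NumPy sketch of the Lanczos/Rayleigh-Ritz step only; it is not the QMAM procedure, whose details the abstract does not give. The uniform linear array, the interferer angles and powers, the step count `k`, and the final subspace projection are all illustrative assumptions. Each Lanczos step costs one matrix-vector product, $O(M^2)$, so a number of steps on the order of $d$ gives roughly $O(dM^2)$ work instead of the $O(M^3)$ of a full eigendecomposition.

```python
import numpy as np

def lanczos_ritz(R, k, seed=0):
    """Run k Lanczos steps on Hermitian R; return Ritz values and vectors, largest first."""
    M = R.shape[0]
    rng = np.random.default_rng(seed)
    q = rng.standard_normal(M) + 1j * rng.standard_normal(M)
    q /= np.linalg.norm(q)
    Q = np.zeros((M, k), dtype=complex)   # Lanczos basis vectors
    alpha = np.zeros(k)                   # diagonal of the tridiagonal matrix T
    beta = np.zeros(k - 1)                # off-diagonal of T
    for j in range(k):
        Q[:, j] = q
        w = R @ q                         # the only O(M^2) operation per step
        alpha[j] = np.vdot(q, w).real
        Qj = Q[:, : j + 1]
        w = w - Qj @ (Qj.conj().T @ w)    # full reorthogonalization for stability
        if j < k - 1:
            beta[j] = np.linalg.norm(w)
            q = w / beta[j]
    T = np.diag(alpha) + np.diag(beta, 1) + np.diag(beta, -1)
    theta, S = np.linalg.eigh(T)          # small k-by-k eigenproblem
    return theta[::-1], (Q @ S)[:, ::-1]

# Hypothetical scenario: M-element half-wavelength linear array, d strong interferers.
rng = np.random.default_rng(1)
M, N, d = 64, 512, 2
angles = np.deg2rad([20.0, -35.0])
A = np.exp(1j * np.pi * np.outer(np.arange(M), np.sin(angles)))   # M x d steering matrix
powers = np.array([1000.0, 100.0])
s_rfi = np.sqrt(powers / 2)[:, None] * (
    rng.standard_normal((d, N)) + 1j * rng.standard_normal((d, N))
)
noise = (rng.standard_normal((M, N)) + 1j * rng.standard_normal((M, N))) / np.sqrt(2)
X = A @ s_rfi + noise
R = X @ X.conj().T / N                    # sample spatial covariance matrix

theta, U = lanczos_ritz(R, k=12)
U_rfi = U[:, :d]                          # Ritz vectors of the d dominant Ritz values
P = np.eye(M) - U_rfi @ U_rfi.conj().T    # projector onto the complement (assumed mitigation step)
leak = np.linalg.norm(P @ A, axis=0) / np.linalg.norm(A, axis=0)
```

In this toy setup the leading Ritz values track the dominant eigenvalues of `R`, and `leak` measures how much of each interferer's steering vector survives the projection. Real observatory data would not follow this idealized array model.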

Frequency Estimation Using Complex-Valued Shifted Window Transformer

Sep 17, 2023

Abstract: Estimating closely spaced frequency components of a signal is a fundamental problem in statistical signal processing. In this letter, we introduce 1-D real-valued and complex-valued shifted window (Swin) transformers, referred to as SwinFreq and CVSwinFreq, respectively, for line-spectra frequency estimation on 1-D complex-valued signals. Whereas 2-D Swin transformer-based models have gained traction for optical image super-resolution, we introduce for the first time a complex-valued Swin module designed to leverage the complex-valued nature of signals for a wide array of applications. The proposed approach overcomes the limitations of classical algorithms such as the periodogram, MUSIC, and OMP, as well as the state-of-the-art deep learning approach cResFreq. SwinFreq and CVSwinFreq deliver superior performance at low signal-to-noise ratio (SNR) and improved resolution capability while requiring fewer model parameters than cResFreq, making them more suitable for edge and mobile applications. We find that the real-valued SwinFreq outperforms its complex-valued counterpart CVSwinFreq on several tasks while having a smaller model size. Finally, we apply the proposed techniques to radar range profile super-resolution using real data. The results from both synthetic and real experiments validate the numerical and empirical superiority of SwinFreq and CVSwinFreq over state-of-the-art deep learning-based techniques and traditional frequency estimation algorithms. The code and models are publicly available at https://github.com/josiahwsmith10/spectral-super-resolution-swin.
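For context on the classical baselines, here is a minimal sketch of a zero-padded periodogram frequency estimator; it illustrates the baseline only and is unrelated to the SwinFreq or CVSwinFreq code in the linked repository. The function name `periodogram_peaks`, the signal length, and the tone frequencies are hypothetical choices. Frequencies are in cycles per sample.

```python
import numpy as np

def periodogram_peaks(x, n_peaks, n_fft=4096):
    """Estimate n_peaks frequencies as the largest local maxima of the periodogram."""
    P = np.abs(np.fft.fft(x, n_fft)) ** 2 / len(x)   # zero-padded periodogram
    # Local maxima, treating the frequency axis as circular.
    is_peak = (P > np.roll(P, 1)) & (P >= np.roll(P, -1))
    idx = np.flatnonzero(is_peak)
    top = idx[np.argsort(P[idx])[::-1][:n_peaks]]    # keep the strongest peaks
    return np.sort(top / n_fft)

# Two equal-amplitude complex sinusoids spaced 3/N apart (hypothetical test signal).
N = 64
f1, f2 = 0.20, 0.20 + 3 / N
n = np.arange(N)
x = np.exp(2j * np.pi * f1 * n) + np.exp(2j * np.pi * f2 * n)

est = periodogram_peaks(x, n_peaks=2)
```

With a spacing of several multiples of $1/N$, the two mainlobes remain separate and the estimates land close to the true frequencies. As the spacing shrinks toward $1/N$ and below, the mainlobes merge, which is the resolution limit that super-resolution methods aim to overcome.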

Emerging Approaches for THz Array Imaging: A Tutorial Review and Software Tool

Sep 16, 2023

Abstract: Accelerated by the increasing attention drawn by 5G, 6G, and Internet of Things applications, communication and sensing technologies have rapidly evolved from millimeter-wave (mmWave) to terahertz (THz) in recent years. Enabled by significant advancements in electromagnetic (EM) hardware, the mmWave and THz frequency regimes, spanning 30 GHz to 300 GHz and 300 GHz to 3000 GHz, respectively, can be employed for a host of applications. The main feature of THz systems is high-bandwidth transmission, enabling ultra-high-resolution imaging and high-throughput communications; however, challenges in both the hardware and algorithmic arenas remain before THz technology can be ubiquitously adopted. Spectra comprising mmWave and THz frequencies are well-suited for synthetic aperture radar (SAR) imaging at sub-millimeter resolutions for a wide spectrum of tasks such as material characterization and nondestructive testing (NDT). This article provides a tutorial review of systems and algorithms for THz SAR in the near-field, with an emphasis on emerging algorithms that combine signal processing and machine learning techniques. As part of this study, an overview of classical and data-driven THz SAR algorithms is provided, focusing on object detection for security applications and SAR image super-resolution. We also discuss relevant issues, challenges, and future research directions for emerging algorithms and THz SAR, including standardization of system and algorithm benchmarking, adoption of state-of-the-art deep learning techniques, signal processing-optimized machine learning, and hybrid data-driven signal processing algorithms...

Deep Learning-Based Multiband Signal Fusion for 3-D SAR Super-Resolution

May 03, 2023

Abstract: Three-dimensional (3-D) synthetic aperture radar (SAR) is widely used in many security and industrial applications requiring high-resolution imaging of concealed or occluded objects. The ability to resolve intricate 3-D targets is essential to the performance of such applications and depends directly on system bandwidth. However, because high-bandwidth systems face several prohibitive hurdles, an alternative solution is to operate multiple radars at distinct frequency bands and fuse the multiband signals. Current multiband signal fusion methods assume a simple target model and a small number of point reflectors, which is invalid for realistic security screening and industrial imaging scenarios wherein the target model effectively consists of a large number of reflectors. To the best of our knowledge, this study presents the first use of deep learning for multiband signal fusion. The proposed network, called kR-Net, employs a hybrid, dual-domain complex-valued convolutional neural network (CV-CNN) to fuse multiband signals and impute the missing samples in the frequency gaps between subbands. By exploiting the relationships in both the wavenumber domain and wavenumber spectral domain, the proposed framework overcomes the drawbacks of existing multiband imaging techniques for realistic scenarios at a fraction of the computation time of existing multiband fusion algorithms. Our method achieves high-resolution imaging of intricate targets previously impossible using conventional techniques and enables finer resolution capacity for concealed weapon detection and occluded object classification using multiband signaling without requiring more advanced hardware. Furthermore, a fully integrated multiband imaging system is developed using commercially available millimeter-wave (mmWave) radars for efficient multiband imaging.

Near-Field MIMO-ISAR Millimeter-Wave Imaging

May 03, 2023

Abstract: Multiple-input-multiple-output (MIMO) millimeter-wave (mmWave) sensors for synthetic aperture radar (SAR) and inverse SAR (ISAR) address the fundamental challenges of cost-effectiveness and scalability inherent to near-field imaging. In this paper, near-field MIMO-ISAR mmWave imaging systems are discussed and developed. The rotational ISAR (R-ISAR) regime investigated in this paper requires rotating the target at a constant radial distance from the transceiver and scanning the transceiver along a vertical track. Using a 77 GHz mmWave radar, a high-resolution three-dimensional (3-D) image can be reconstructed from this two-dimensional scan, taking into account the spherical near-field wavefront. While prior work in the literature consists of single-input-single-output circular synthetic aperture radar (SISO-CSAR) algorithms or computationally sluggish MIMO-CSAR image reconstruction algorithms, this paper proposes a novel algorithm for efficient MIMO 3-D holographic imaging and details the design of a MIMO R-ISAR imaging system. The proposed algorithm applies a multistatic-to-monostatic phase compensation to the R-ISAR regime, allowing the use of highly efficient monostatic algorithms. We demonstrate the algorithm's performance in real-world imaging scenarios on a prototyped MIMO R-ISAR platform. Our fully integrated system, consisting of a mechanical scanner and an efficient imaging algorithm, is capable of pairing the scanning efficiency of the MIMO regime with the computational efficiency of single-pixel image reconstruction algorithms.
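The idea behind a multistatic-to-monostatic phase compensation can be illustrated with a small numerical check. The sketch below uses a second-order approximation that is common in near-field MIMO-SAR work: for a target at range $z_0$ in front of the midpoint of a transmit/receive pair separated by $d$, the round-trip path exceeds the monostatic one by roughly $d^2/(4 z_0)$. Whether the paper uses exactly this form for the R-ISAR geometry is not stated in the abstract, and the range, separation, and planar geometry here are assumptions.

```python
import numpy as np

c = 299_792_458.0            # speed of light (m/s)
f = 77e9                     # carrier frequency from the abstract (Hz)
k = 2 * np.pi * f / c        # wavenumber (rad/m)

z0 = 0.30                    # hypothetical target range (m)
d = 0.02                     # hypothetical Tx-Rx separation (m)

tx = np.array([-d / 2, 0.0, 0.0])
rx = np.array([+d / 2, 0.0, 0.0])
mid = (tx + rx) / 2          # virtual monostatic element at the midpoint
target = np.array([0.0, 0.0, z0])

# Round-trip path lengths for the multistatic pair and the virtual monostatic element.
path_multi = np.linalg.norm(target - tx) + np.linalg.norm(target - rx)
path_mono = 2 * np.linalg.norm(target - mid)

phase_err_raw = k * (path_multi - path_mono)     # phase mismatch before compensation
correction = k * d**2 / (4 * z0)                 # second-order compensation term
phase_err_comp = phase_err_raw - correction      # residual after compensation

# Applying the compensation to a unit-amplitude multistatic sample.
s_multi = np.exp(1j * k * path_multi)
s_comp = s_multi * np.exp(-1j * correction)
```

For these values the uncompensated mismatch is a sizeable fraction of a radian, while the residual after compensation is orders of magnitude smaller. The approximation is expected to degrade for targets far off the midpoint axis or at ranges comparable to the element separation.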

Efficient 3-D Near-Field MIMO-SAR Imaging for Irregular Scanning Geometries

May 03, 2023

Abstract: In this article, we introduce a novel algorithm for efficient near-field synthetic aperture radar (SAR) imaging for irregular scanning geometries. With the emergence of fifth-generation (5G) millimeter-wave (mmWave) devices, near-field SAR imaging is no longer confined to laboratory environments. Recent advances in positioning technology have attracted significant interest for a diverse set of new applications in mmWave imaging. However, many use cases, such as automotive-mounted SAR imaging, unmanned aerial vehicle (UAV) imaging, and freehand imaging with smartphones, are constrained to irregular scanning geometries. Whereas traditional near-field SAR imaging systems and quick personnel security (QPS) scanners employ highly precise motion controllers to create ideal synthetic arrays, the emerging applications mentioned above inherently cannot achieve such ideal positioning. In addition, many Internet of Things (IoT) and 5G applications impose strict size and computational complexity limitations that must be considered for edge mmWave imaging technology. In this study, we propose a novel algorithm to leverage the advantages of non-cooperative SAR scanning patterns, small form-factor multiple-input multiple-output (MIMO) radars, and efficient monostatic planar image reconstruction algorithms. We propose a framework to mathematically decompose arbitrary and irregular sampling geometries and a joint solution to mitigate multistatic array imaging artifacts. The proposed algorithm is validated through simulations and an empirical study of arbitrary scanning scenarios. Our algorithm achieves high-resolution and high-efficiency near-field MIMO-SAR imaging, and is an elegant solution to computationally constrained, irregularly sampled imaging problems.

* Accepted to IEEE Access

An FCNN-Based Super-Resolution mmWave Radar Framework for Contactless Musical Instrument Interface

May 03, 2023

Abstract: In this article, we propose a framework for contactless human-computer interaction (HCI) using novel techniques built on deep learning-based super-resolution and tracking algorithms. Our system offers unprecedented high-resolution tracking of hand position and motion characteristics by leveraging spatial and temporal features embedded in the reflected radar waveform. Rather than classifying samples from a predefined set of hand gestures, as is common in existing work on deep learning with mmWave radar, our proposed imager employs a regressive fully convolutional neural network (FCNN) approach to improve localization accuracy by spatial super-resolution. While the proposed techniques are suitable for a host of tracking applications, this article focuses on their application as a musical interface to demonstrate the robustness of the gesture sensing pipeline and deep learning signal processing chain. The user can control the instrument by varying the position and velocity of their hand above the vertically-facing sensor. By employing a commercially available multiple-input-multiple-output (MIMO) radar rather than a traditional optical sensor, our framework demonstrates the efficacy of the mmWave sensing modality for fine motion tracking and offers an elegant solution to a host of HCI tasks. Additionally, we provide a freely available software package and user interface for controlling the device, streaming the data to MATLAB in real time, and increasing accessibility to the signal processing and device interface functionality utilized in this article.

* Accepted to IEEE Transactions on Multimedia

Efficient CNN-based Super Resolution Algorithms for mmWave Mobile Radar Imaging

May 03, 2023

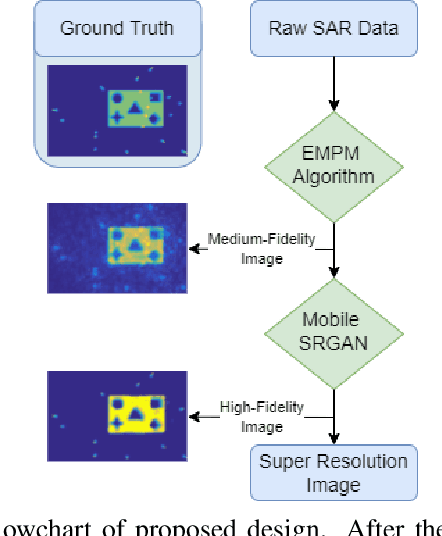

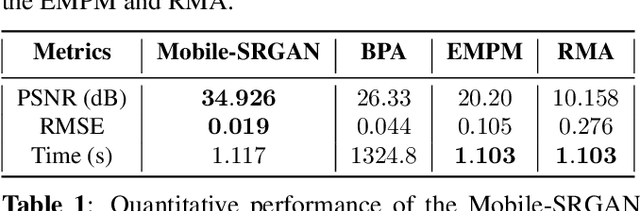

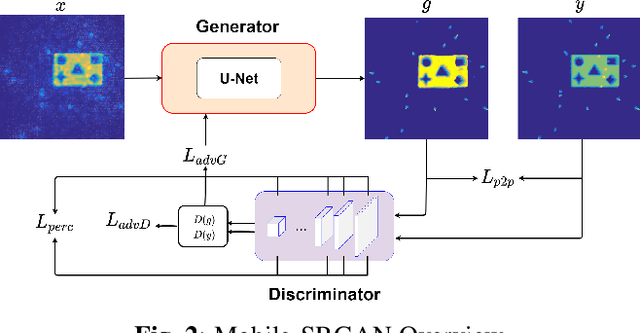

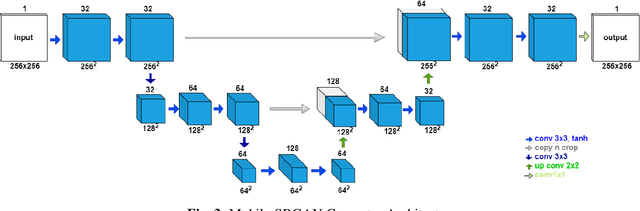

Abstract: In this paper, we introduce an innovative super-resolution approach to emerging modes of near-field synthetic aperture radar (SAR) imaging. Recent research extends convolutional neural network (CNN) architectures from the optical to the electromagnetic domain to achieve super-resolution on images generated from radar signaling. Specifically, near-field SAR imaging, a method for generating high-resolution images by scanning a radar across space to create a synthetic aperture, is of interest due to its high-fidelity spatial sensing capability, low-cost devices, and large application space. Since SAR imaging requires large aperture sizes to achieve high resolution, super-resolution algorithms are valuable for many applications. Freehand smartphone SAR, an emerging sensing modality, requires irregular SAR apertures in the near-field and computation on mobile devices. Efficiently producing high-resolution SAR images from irregularly sampled data collected by the freehand motion of a smartphone is a challenging task. In this paper, we propose a novel CNN architecture to achieve SAR image super-resolution for mobile applications by employing state-of-the-art SAR processing and deep learning techniques. The proposed algorithm is verified via simulation and an empirical study. Our algorithm demonstrates high-efficiency and high-resolution radar imaging for near-field scenarios with irregular scanning geometries.

Improved Static Hand Gesture Classification on Deep Convolutional Neural Networks using Novel Sterile Training Technique

May 03, 2023

Abstract: In this paper, we investigate novel data collection and training techniques for improving the classification accuracy of non-moving (static) hand gestures using a convolutional neural network (CNN) and frequency-modulated-continuous-wave (FMCW) millimeter-wave (mmWave) radars. Recently, non-contact hand pose and static gesture recognition have received considerable attention in many applications, ranging from human-computer interaction (HCI) and augmented/virtual reality (AR/VR) to therapeutic range of motion for medical applications. While most current solutions rely on optical or depth cameras, these methods require ideal lighting and temperature conditions. mmWave radar devices have recently emerged as a promising alternative, offering low-cost system-on-chip sensors whose output signals contain precise spatial information even in non-ideal imaging conditions. Additionally, deep convolutional neural networks have been employed extensively in image recognition by learning both feature extraction and classification simultaneously. However, little work has been done on static gesture recognition using mmWave radars and CNNs due to the difficulty involved in extracting meaningful features from the radar return signal, and the results are inferior to those of dynamic gesture classification. This article presents an efficient data collection approach and a novel technique for deep CNN training by introducing "sterile" images, which aid in distinguishing distinct features among the static gestures and subsequently improve the classification accuracy. Applying the proposed data collection and training methods increases the classification rate of static hand gestures from $85\%$ to $93\%$ for range profiles and from $90\%$ to $95\%$ for range-angle profiles.

* Accepted to IEEE Access

Two Dimensional Array Imaging with Beam Steered Data

Apr 17, 2014

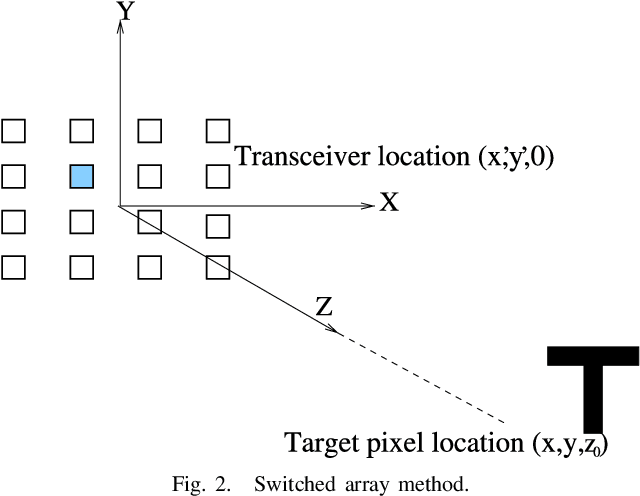

Abstract: This paper discusses different approaches used for millimeter-wave imaging of two-dimensional objects. Imaging a two-dimensional object requires reflected wave data to be collected across two distinct dimensions. In this paper, we propose a reconstruction method that uses narrowband waveforms along with two-dimensional beam steering. The beam is steered in the azimuth and elevation directions, which form the two distinct dimensions required for the reconstruction. The reconstruction technique uses an inverse Fourier transform along with amplitude and phase correction factors. In addition, this reconstruction technique does not require interpolation of the data in either the wavenumber or the spatial domain. The use of two-dimensional beam steering offers better performance in the presence of noise compared with existing methods, such as switched-array imaging systems. The effects of RF impairments, such as quantization of the phase of the beam steering weights and timing jitter, which add to phase noise, are analyzed.
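As a rough illustration of two-dimensional beam steering with phase-quantized weights, the sketch below builds conjugate-matched weights for a planar array and rounds their phases to a fixed number of bits. The array size, half-wavelength spacing, direction-cosine convention, and bit depth are assumptions for illustration; the paper's actual array model and impairment analysis are not given in the abstract.

```python
import numpy as np

def steering_weights(nx, ny, az, el, spacing=0.5):
    """Conjugate-matched weights for an nx-by-ny planar array (spacing in wavelengths)."""
    u = np.cos(el) * np.sin(az)           # assumed direction cosine along x
    v = np.sin(el)                        # assumed direction cosine along y
    m, n = np.meshgrid(np.arange(nx), np.arange(ny), indexing="ij")
    phase = 2 * np.pi * spacing * (m * u + n * v)
    return np.exp(-1j * phase)

def quantize_phase(w, bits):
    """Round each weight's phase to the nearest of 2**bits uniformly spaced levels."""
    step = 2 * np.pi / 2**bits
    return np.exp(1j * step * np.round(np.angle(w) / step))

def response(w, nx, ny, az, el, spacing=0.5):
    """Magnitude of the array response of weights w toward direction (az, el)."""
    a = np.conj(steering_weights(nx, ny, az, el, spacing))   # array manifold vector
    return np.abs(np.sum(w * a))

nx = ny = 8
az, el = np.deg2rad(25.0), np.deg2rad(-10.0)
bits = 3

w_ideal = steering_weights(nx, ny, az, el)
w_quant = quantize_phase(w_ideal, bits)

gain_ideal = response(w_ideal, nx, ny, az, el)   # equals nx * ny for matched weights
gain_quant = response(w_quant, nx, ny, az, el)   # reduced by the phase rounding errors
loss_db = 20 * np.log10(gain_quant / gain_ideal)
```

Because each rounded phase is off by at most half a quantization step, the on-target gain with `bits`-bit weights cannot fall below `nx * ny * cos(pi / 2**bits)`, which gives a quick bound on the steering loss caused by quantization alone.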
