Mundher Al-Shabi

3D Axial-Attention for Lung Nodule Classification

Jan 02, 2021

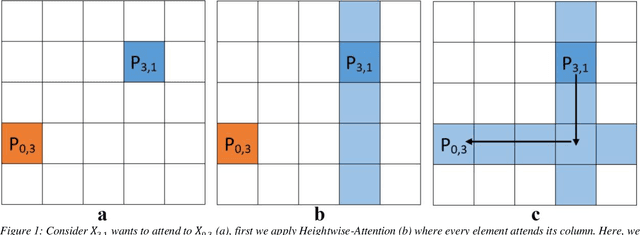

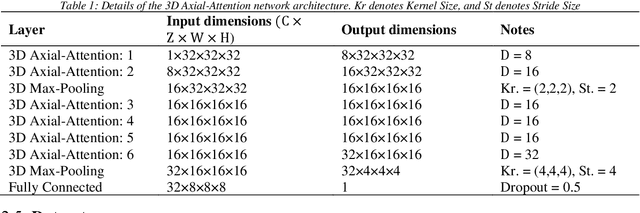

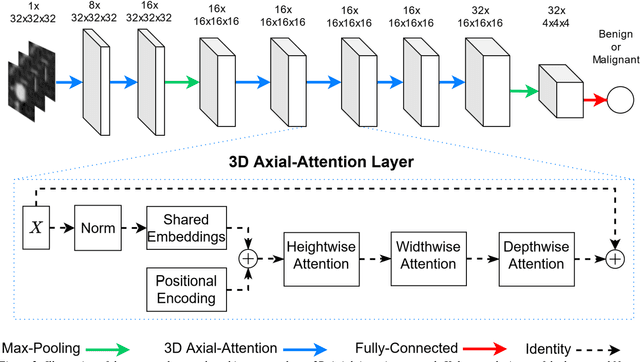

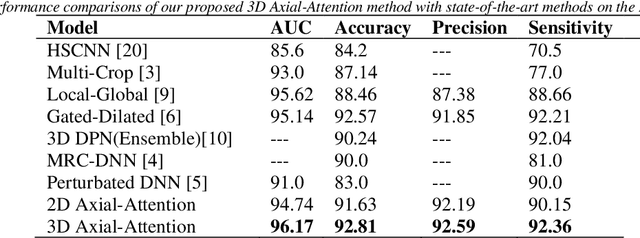

Abstract: Purpose: In recent years, Non-Local based methods have been successfully applied to lung nodule classification. However, these methods offer 2D attention or limited 3D attention on low-resolution feature maps. Moreover, they still depend on a convenient local filter such as convolution, because full 3D attention is expensive to compute and requires a large dataset, which might not be available. Methods: We propose to use 3D Axial-Attention, which requires a fraction of the computing power of a regular Non-Local network. Additionally, we address the position invariance of the Non-Local network by adding 3D positional encoding to shared embeddings. Results: We validated the proposed method on the LIDC-IDRI dataset by following a rigorous experimental setup using only nodules annotated by at least three radiologists. Our results show that the 3D Axial-Attention model achieves state-of-the-art performance on all evaluation metrics, including AUC and accuracy. Conclusions: The proposed model provides full 3D attention effectively, which can be used in all layers without the need for local filters. The experimental results show the importance of full 3D attention for classifying lung nodules.
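To give a rough sense of the "fraction of the computing power" claim, the sketch below counts query-key pairs for full 3D attention and for axial attention. It assumes the usual axial-attention formulation, in which each voxel attends only along its own depth, height, and width lines; the function names and the 32×32×32 feature-map size are illustrative and are not taken from the paper.

```python
def full_attention_pairs(d, h, w):
    """Query-key pairs when every voxel attends to every voxel."""
    n = d * h * w
    return n * n


def axial_attention_pairs(d, h, w):
    """Query-key pairs when each voxel attends only along its own
    depth, height, and width lines (one attention pass per axis)."""
    n = d * h * w
    return n * (d + h + w)


# Hypothetical feature-map size, chosen only for illustration.
d, h, w = 32, 32, 32
full = full_attention_pairs(d, h, w)
axial = axial_attention_pairs(d, h, w)
print(full, axial, full / axial)
```

Under these assumptions, a 32×32×32 map needs 1,073,741,824 pairs for full attention and 3,145,728 for axial attention, roughly 341 times fewer.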

A new semi-supervised self-training method for lung cancer prediction

Dec 17, 2020

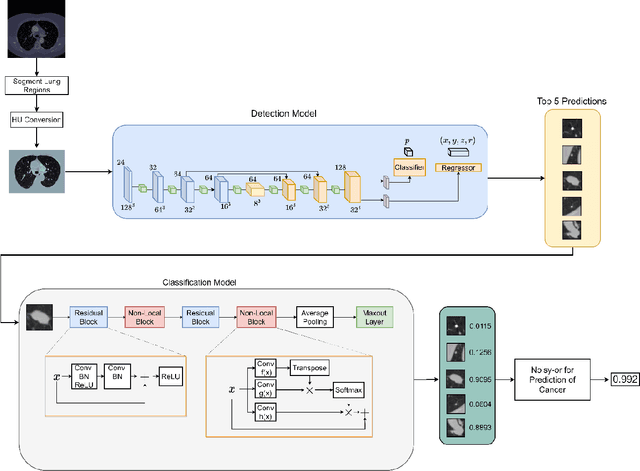

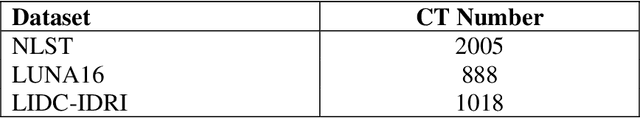

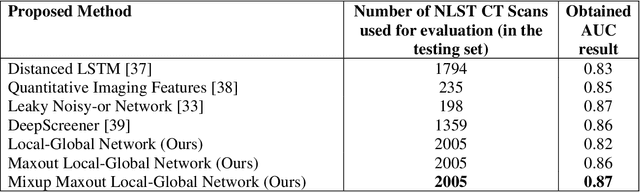

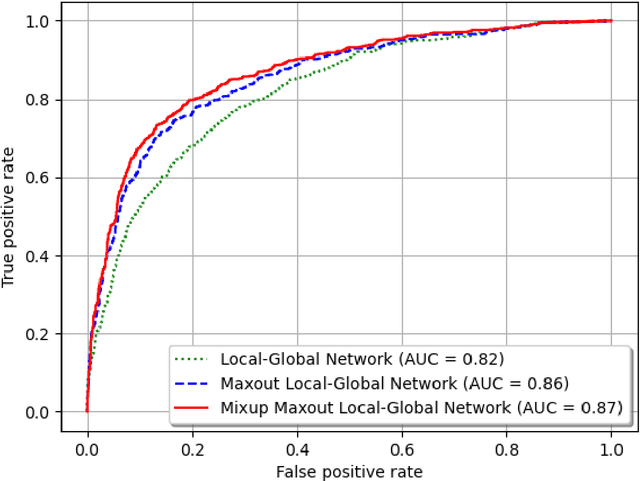

Abstract: Background and Objective: Early detection of lung cancer is crucial because the disease has a high mortality rate and patients commonly present at stage 3 or above. Relatively few methods simultaneously detect and classify nodules from computed tomography (CT) scans. Furthermore, very few studies have used semi-supervised learning for lung cancer prediction. This study presents a complete end-to-end scheme to detect and classify lung nodules using the state-of-the-art Self-training with Noisy Student method on a comprehensive CT lung screening dataset of around 4,000 CT scans. Methods: We used three datasets, namely LUNA16, LIDC and NLST, for this study. We first utilise a three-dimensional deep convolutional neural network model to detect lung nodules in the detection stage. The classification model, known as the Maxout Local-Global Network, uses non-local networks to detect global features including shape features, residual blocks to detect local features including nodule texture, and a Maxout layer to detect nodule variations. We trained the first Self-training with Noisy Student model to predict lung cancer on the unlabelled NLST datasets. Then, we performed Mixup regularization to enhance our scheme and provide robustness to erroneous labels. Results and Conclusions: Our new Mixup Maxout Local-Global network achieves an AUC of 0.87 on 2,005 completely independent testing scans from the NLST dataset. Our new scheme significantly outperformed the next highest performing method at the 5% significance level using DeLong's test (p = 0.0001). This study presents a new complete end-to-end scheme to predict lung cancer using Self-training with Noisy Student combined with Mixup regularization. On a completely independent dataset of 2,005 scans, we achieved state-of-the-art performance even with more images than other methods used.
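For readers unfamiliar with Mixup, the following is a minimal sketch of the general technique: two training examples and their labels are blended with a single weight drawn from a Beta distribution. The function name `mixup_pair`, the flat-list input format, and the value `alpha=0.2` are assumptions made for illustration; the abstract does not state how the authors configured Mixup.

```python
import random


def mixup_pair(x_a, y_a, x_b, y_b, alpha=0.2, rng=random):
    """Blend two examples and their one-hot labels with one shared weight.

    x_a, x_b: flat lists of input values (for example, voxel intensities).
    y_a, y_b: one-hot (or soft) label vectors of equal length.
    """
    lam = rng.betavariate(alpha, alpha)  # mixing weight in [0, 1]
    x_mix = [lam * a + (1.0 - lam) * b for a, b in zip(x_a, x_b)]
    y_mix = [lam * a + (1.0 - lam) * b for a, b in zip(y_a, y_b)]
    return x_mix, y_mix, lam


# Usage: mix a "benign" example with a "malignant" one (toy values).
x_mix, y_mix, lam = mixup_pair([0.0, 0.0], [1.0, 0.0], [1.0, 1.0], [0.0, 1.0])
```

Because the label is blended with the same weight as the input, a single mislabelled example contributes only a soft target, which is one common explanation for Mixup's robustness to label noise.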

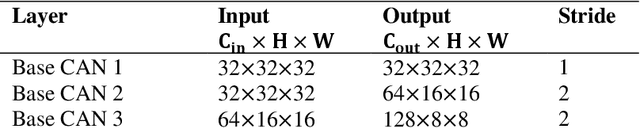

ProCAN: Progressive Growing Channel Attentive Non-Local Network for Lung Nodule Classification

Nov 14, 2020

Abstract: Lung cancer classification in screening computed tomography (CT) scans is one of the most crucial tasks for early detection of this disease. Many lives can be saved if we are able to accurately classify malignant (cancerous) lung nodules. Consequently, several deep learning based models have been proposed recently to classify lung nodules as malignant or benign. Nevertheless, the large variation in the size and the heterogeneous appearance of the nodules make this task extremely challenging. We propose a new Progressive Growing Channel Attentive Non-Local (ProCAN) network for lung nodule classification. The proposed method addresses this challenge from three different aspects. First, we enrich the Non-Local network by adding channel-wise attention capability to it. Second, we apply Curriculum Learning principles, whereby we first train our model on easy examples before hard ones. Third, as the classification task gets harder during the Curriculum Learning, our model is progressively grown to increase its capability of handling the task at hand. We examined our proposed method on two different public datasets and compared its performance with state-of-the-art methods in the literature. The results show that the ProCAN model outperforms state-of-the-art methods and achieves an AUC of 98.05% and accuracy of 95.28% on the LIDC-IDRI dataset. Moreover, we conducted extensive ablation studies to analyze the contribution and effects of each new component of our proposed method.

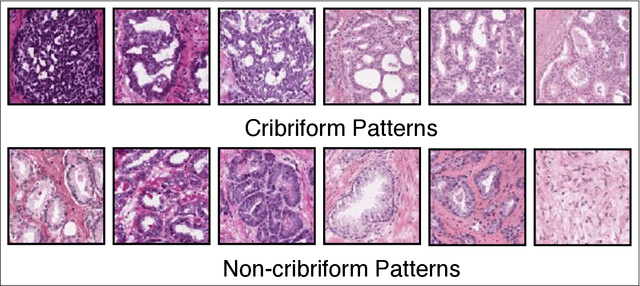

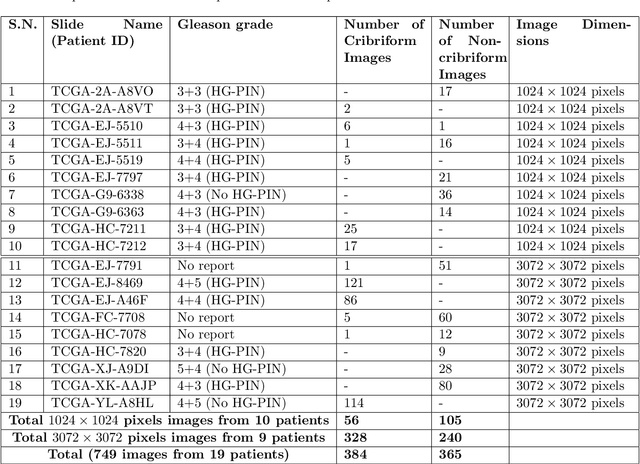

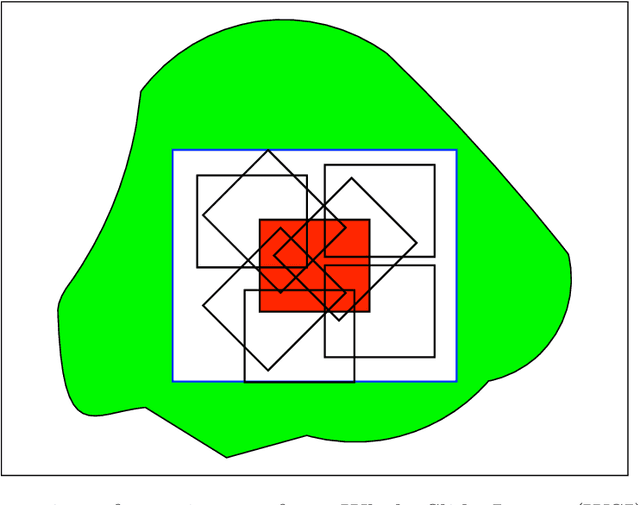

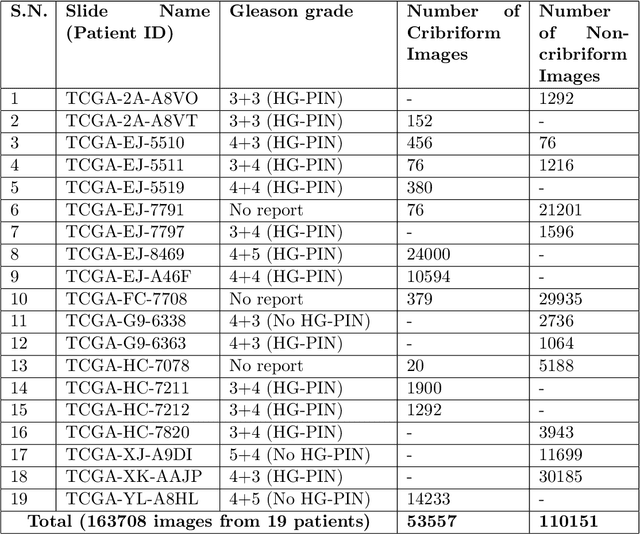

Cribriform pattern detection in prostate histopathological images using deep learning models

Oct 09, 2019

Abstract: Architecture, size, and shape of glands are the most important patterns used by pathologists for assessment of cancer malignancy in prostate histopathological tissue slides. Varying structures of glands along with cumbersome manual observations may result in subjective and inconsistent assessment. Cribriform gland with irregular border is an important feature in Gleason pattern 4. We propose using deep neural networks for cribriform pattern classification in prostate histopathological images. 163,708 Hematoxylin and Eosin (H&E) stained images were extracted from histopathologic tissue slides of 19 patients with prostate cancer and annotated for cribriform patterns. Our automated image classification system analyses the H&E images to classify them as either 'Cribriform' or 'Non-cribriform'. Our system uses various deep learning approaches and hand-crafted image pixel intensity-based features. We present our results for cribriform pattern detection across the various parameters and configurations allowed by our system. The combination of fine-tuned deep learning models outperformed the state-of-the-art nuclei feature based methods. Our image classification system achieved a testing accuracy of 85.93 ± 7.54 (cross-validated) and 88.04 ± 5.63 (additional unseen test set) across three folds. In this paper, we present an annotated cribriform dataset along with analysis of deep learning models and hand-crafted features for cribriform pattern detection in prostate histopathological images.

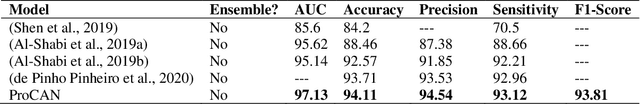

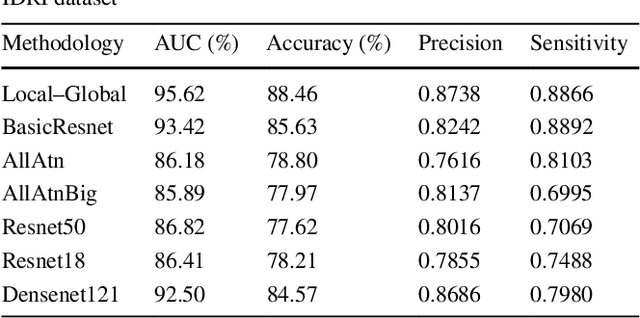

Lung Nodule Classification using Deep Local-Global Networks

Apr 23, 2019

Abstract: Purpose: Lung nodules have very diverse shapes and sizes, which makes classifying them as benign or malignant a challenging problem. In this paper, we propose a novel method to predict the malignancy of nodules that can analyze the shape and size of a nodule using a global feature extractor, as well as the density and structure of the nodule using a local feature extractor. Methods: We propose to use Residual Blocks with a 3x3 kernel size for local feature extraction, and Non-Local Blocks to extract the global features. The Non-Local Block can extract global features without using a huge number of parameters. The key idea behind the Non-Local Block is to apply matrix multiplications between features on the same feature maps. Results: We trained and validated the proposed method on the LIDC-IDRI dataset, which contains 1,018 computed tomography (CT) scans. We followed a rigorous experimental procedure, namely 10-fold cross-validation, and ignored the nodules that had been annotated by fewer than three radiologists. The proposed method achieved state-of-the-art results with AUC=95.62%, while significantly outperforming other baseline methods. Conclusions: Our proposed Deep Local-Global network can accurately extract both local and global features. Our new method outperforms state-of-the-art architectures including DenseNet and ResNet with transfer learning.
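The "matrix multiplications between features on the same feature maps" can be sketched in a simplified form as follows. This sketch assumes a softmax over pairwise dot products and deliberately omits the learned projections and the residual connection that a Non-Local Block normally includes, so it should not be read as the authors' exact layer; the function names are illustrative.

```python
import math


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def non_local(features):
    """features: list of N position vectors, each with C channels.

    Returns N vectors; each one is a similarity-weighted average of
    the feature vectors at ALL positions, which is what makes the
    operation global rather than local.
    """
    # Pairwise dot-product similarity between positions (N x N).
    scores = [[sum(a * b for a, b in zip(p, q)) for q in features]
              for p in features]
    weights = [softmax(row) for row in scores]
    channels = len(features[0])
    # Second matrix product: attention weights times the features.
    return [[sum(w * f[c] for w, f in zip(row, features))
             for c in range(channels)]
            for row in weights]


# Usage: three positions with two channels each (toy values).
out = non_local([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
```

Note that this simplified form has no trainable parameters at all; in a full block the only parameters come from the small projections, which is consistent with the abstract's point about parameter count.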

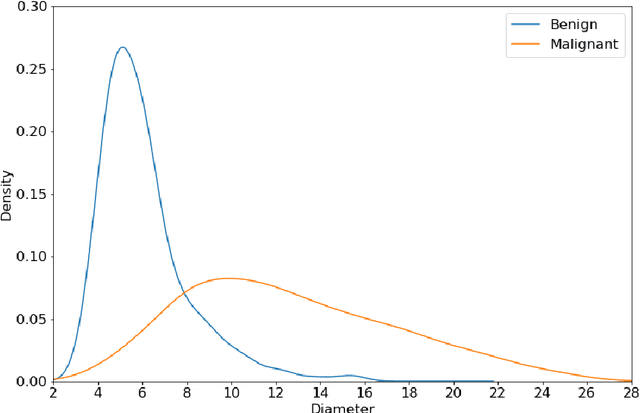

Gated-Dilated Networks for Lung Nodule Classification in CT scans

Jan 01, 2019

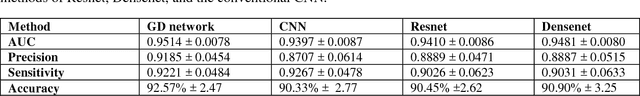

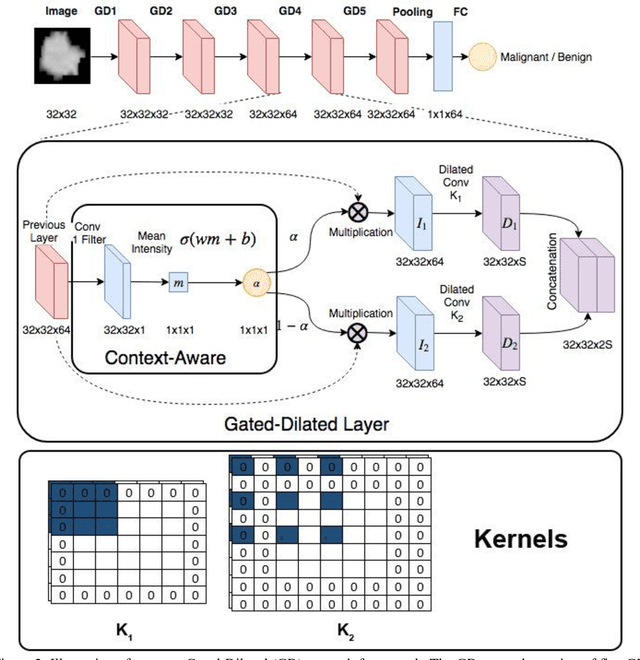

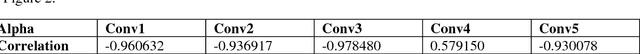

Abstract: Different types of Convolutional Neural Networks (CNNs) have been applied to detect cancerous lung nodules from computed tomography (CT) scans. However, the size of a nodule is very diverse and can range anywhere between 3 and 30 millimeters. The high variation of nodule sizes makes classifying them a challenging task. In this study, we propose a novel CNN architecture called Gated-Dilated (GD) Networks to classify nodules as malignant or benign. Unlike previous studies, the GD network uses multiple dilated convolutions instead of max-poolings to capture the scale variations. Moreover, the GD network has a Context-Aware sub-network that analyzes the input features and guides the features to a suitable dilated convolution. We evaluated the proposed network on more than 1,000 CT scans from the LIDC-IDRI dataset. Our proposed network outperforms baseline models including conventional CNNs, ResNet, and DenseNet, with an AUC of >0.95. Compared to the baseline models, the GD network improves the classification accuracies of mid-range sized nodules. Furthermore, we observe a relationship between the size of the nodule and the attention signal generated by the Context-Aware sub-network, which validates our new network architecture.

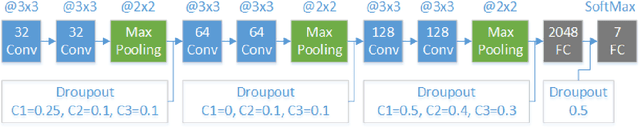

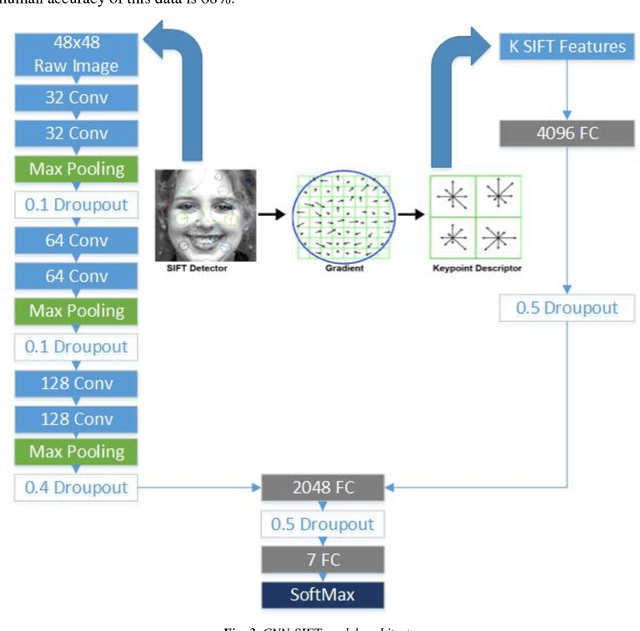

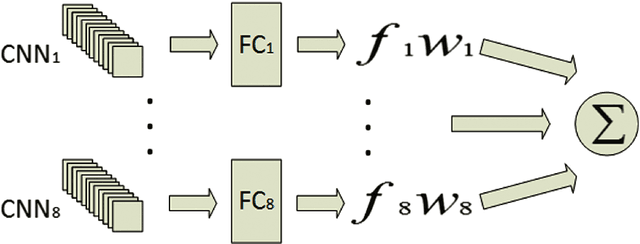

Facial Expression Recognition Using a Hybrid CNN-SIFT Aggregator

Aug 12, 2017

Abstract: Deriving an effective facial expression recognition component is important for a successful human-computer interaction system. Nonetheless, recognizing facial expressions remains a challenging task. This paper describes a novel approach to the facial expression recognition task. The proposed method is motivated by the success of Convolutional Neural Networks (CNN) on the face recognition problem. Unlike other works, we focus on achieving good accuracy while requiring only a small amount of training data. Scale Invariant Feature Transform (SIFT) features are used to increase the performance on small data, as SIFT does not require extensive training data to generate useful features. In this paper, both Dense SIFT and regular SIFT are studied and compared when merged with CNN features. Moreover, an aggregator of the models is developed. The proposed approach is tested on the FER-2013 and CK+ datasets. Results demonstrate the superiority of CNN with Dense SIFT over conventional CNN and CNN with SIFT. Accuracy increases further when all the models are aggregated, yielding state-of-the-art results of 73.4% on FER-2013 and 99.1% on CK+.

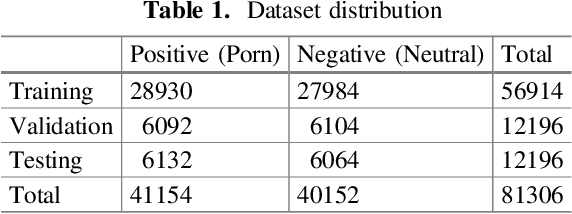

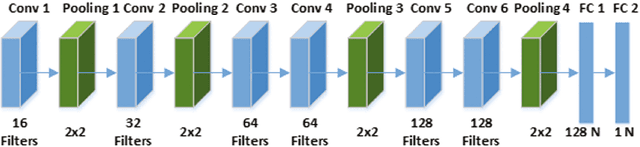

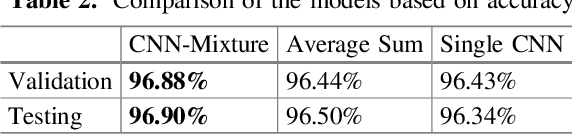

Smart Content Recognition from Images Using a Mixture of Convolutional Neural Networks

Jul 14, 2017

Abstract: With the rapid development of the Internet, the volume of web content has become huge. Most websites are publicly available, and anyone can access their content from anywhere, such as the workplace, home, and even schools. Nevertheless, not all web content is appropriate for all users, especially children. An example is pornographic images, which should be restricted to certain age groups. Besides, these images are not safe for work (NSFW), meaning that employees should not be seen accessing such content during work. Recently, convolutional neural networks have been successfully applied to many computer vision problems. Inspired by these successes, we propose a mixture of convolutional neural networks for adult content recognition. Unlike other works, our method is formulated as a weighted sum of multiple deep neural network models. The weights of the CNN models are expressed as a linear regression problem learned using Ordinary Least Squares (OLS). Experimental results demonstrate that the proposed model outperforms both a single CNN model and the average of the CNN models in adult content recognition.
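The weighted-sum idea can be illustrated with a small sketch that fits one weight per model by solving the OLS normal equations. It assumes each model outputs a single score per image and that no intercept term is used; the abstract does not specify either detail, and the helper names `solve_linear`, `ols_weights`, and `ensemble_score` are hypothetical.

```python
def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting.
    Assumes a is square and non-singular."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def ols_weights(preds, targets):
    """preds: n rows of k model scores; targets: n labels.
    Returns the k weights minimising squared error (no intercept)."""
    k = len(preds[0])
    gram = [[sum(row[i] * row[j] for row in preds) for j in range(k)]
            for i in range(k)]
    moment = [sum(row[i] * t for row, t in zip(preds, targets))
              for i in range(k)]
    return solve_linear(gram, moment)


def ensemble_score(scores, weights):
    """Weighted sum of the individual model scores for one image."""
    return sum(w * s for w, s in zip(weights, scores))


# Usage with toy validation scores from two hypothetical models.
preds = [[0.9, 0.2], [0.1, 0.8], [0.6, 0.4], [0.3, 0.7]]
labels = [1.0, 0.0, 1.0, 0.0]
weights = ols_weights(preds, labels)
```

A plain average corresponds to fixing every weight at 1/k; fitting the weights instead lets more reliable models contribute more, which is the comparison the abstract reports.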
